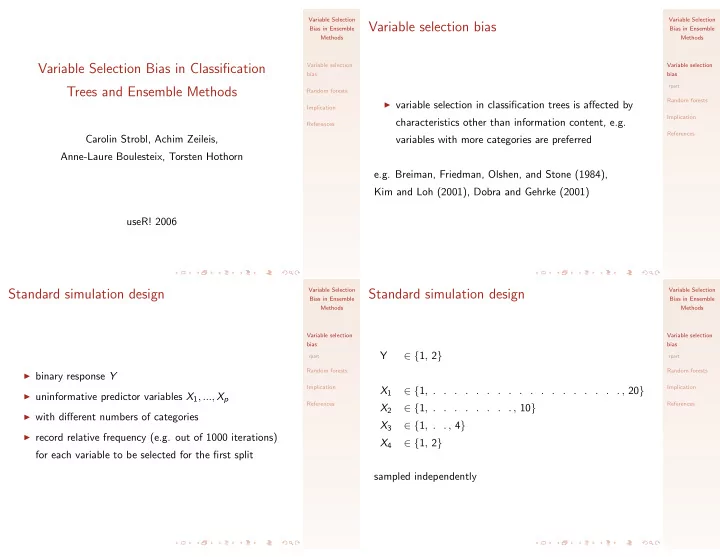

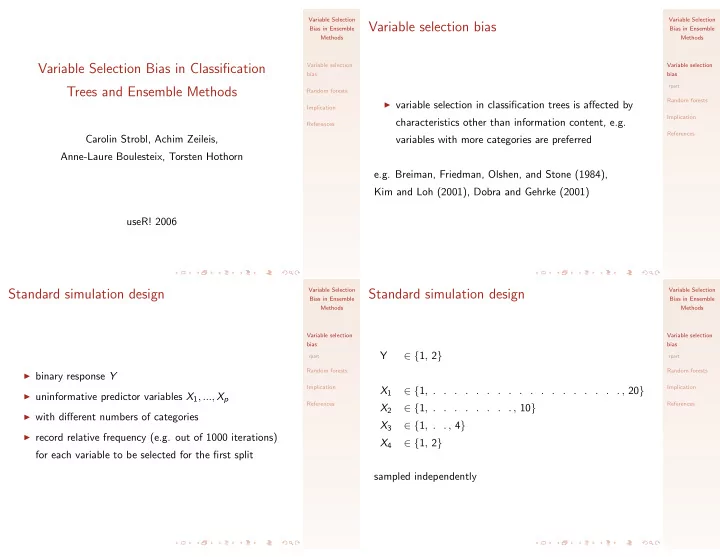

Variable Selection Bias in Classification Trees and Ensemble Methods

Carolin Strobl, Achim Zeileis, Anne-Laure Boulesteix, Torsten Hothorn

useR! 2006

Variable selection bias

◮ variable selection in classification trees is affected by characteristics other than information content, e.g. variables with more categories are preferred (e.g. Breiman, Friedman, Olshen, and Stone, 1984; Kim and Loh, 2001; Dobra and Gehrke, 2001)

Standard simulation design

◮ binary response Y ∈ {1, 2}
◮ uninformative predictor variables X1, ..., Xp with different numbers of categories, sampled independently:
  X1 ∈ {1, ..., 20}, X2 ∈ {1, ..., 10}, X3 ∈ {1, ..., 4}, X4 ∈ {1, 2}
◮ record the relative frequency (e.g. out of 1000 iterations) with which each variable is selected for the first split
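The standard design above can be sketched in Python. This is a minimal sketch, not rpart itself: each uninformative predictor is scored by its best binary-split Gini gain (the impurity rpart uses by default for classification), and the predictor with the largest gain wins the first split. For a binary response it uses the standard shortcut of ordering the categories by their class proportion, so only k - 1 cut points are scanned instead of all 2^(k-1) - 1 subsets. The sample size n = 100, the 500 iterations, and all function names are assumptions, since the slide fixes neither.

```python
import random
from collections import Counter

def gini(counts):
    """Gini impurity of a node with the given class counts."""
    n = sum(counts)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in counts)

def best_gini_gain(x, y):
    """Largest Gini gain over all binary splits of categorical x for a
    binary response y in {1, 2}. Categories are ordered by their share of
    class 2 and only the cut points along that order are scanned."""
    n = len(y)
    total = [y.count(1), y.count(2)]
    parent = gini(total)
    per_cat = {}
    for xi, yi in zip(x, y):
        per_cat.setdefault(xi, [0, 0])[yi - 1] += 1
    order = sorted(per_cat, key=lambda c: per_cat[c][1] / sum(per_cat[c]))
    left, best = [0, 0], 0.0
    for c in order[:-1]:
        left = [left[0] + per_cat[c][0], left[1] + per_cat[c][1]]
        right = [total[0] - left[0], total[1] - left[1]]
        child = (sum(left) * gini(left) + sum(right) * gini(right)) / n
        best = max(best, parent - child)
    return best

def first_split_frequencies(n_iter=500, n=100, levels=(20, 10, 4, 2), seed=1):
    """Relative frequency with which each uninformative predictor
    (hypothetical settings: n = 100, 500 iterations) wins the first split."""
    rng = random.Random(seed)
    wins = Counter()
    for _ in range(n_iter):
        y = [rng.randint(1, 2) for _ in range(n)]
        # every predictor is drawn independently of y, so none is informative
        gains = [best_gini_gain([rng.randint(1, k) for _ in range(n)], y)
                 for k in levels]
        wins[gains.index(max(gains))] += 1
    return [wins[j] / n_iter for j in range(len(levels))]

print(first_split_frequencies())  # X1 (20 categories) is expected to win most often
```

X1 offers 2^19 - 1 = 524 287 candidate binary splits against a single one for X4, so it has far more chances to reach a large gain by chance; this is the multiple testing effect behind the bias.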

Variable selection bias in classification trees

package: rpart, function: rpart

[Figure: relative variable selection frequency (0 to 1) of X1, X2, X3, X4]

Sources of variable selection bias

◮ estimation bias and variance of empirical entropy measures (Strobl, Boulesteix, and Augustin, 2005)
◮ in binary splitting: multiple testing (combined variable and cutpoint selection)

Permutation accuracy importance

package: randomForest, functions: randomForest, importance

Variable importance measure:
◮ informative variables produce a systematic decrease in accuracy when permuted
◮ uninformative variables produce a random decrease or increase in accuracy when permuted

"In every tree grown in the forest, put down the oob cases and count the number of votes cast for the correct class. Now randomly permute the values of variable X_j in the oob cases and put these cases down the tree. Subtract the number of votes for the correct class in the variable-j-permuted oob data from the number of votes for the correct class in the untouched oob data. The average of this number over all trees in the forest is the raw importance score for variable X_j."
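The quoted procedure translates almost line by line into code. In the minimal sketch below, a "tree" is any pair of a prediction function and its list of out-of-bag row indices. The one-split stumps, the toy data and all names are hypothetical stand-ins for a real randomForest fit, used only to put an informative column (systematic decrease) next to a noise column (importance near zero, of either sign).

```python
import random

def raw_importance(trees, X, y, j, rng):
    """Raw permutation accuracy importance of column j: for each tree, correct
    oob votes minus correct votes after permuting column j among that tree's
    oob cases, averaged over all trees. `trees` holds (predict, oob) pairs."""
    total = 0
    for predict, oob in trees:
        correct = sum(predict(X[i]) == y[i] for i in oob)
        shuffled = [X[i][j] for i in oob]
        rng.shuffle(shuffled)
        correct_perm = 0
        for i, v in zip(oob, shuffled):
            row = list(X[i])
            row[j] = v  # only column j is permuted; the other columns stay intact
            correct_perm += predict(row) == y[i]
        total += correct - correct_perm
    return total / len(trees)

def fit_stump(X, y, idx, j):
    """Hypothetical toy 'tree': majority class for each value of column j,
    learned on the bootstrap rows idx."""
    votes = {}
    for i in idx:
        votes.setdefault(X[i][j], []).append(y[i])
    rule = {v: max(set(ys), key=ys.count) for v, ys in votes.items()}
    in_bag_y = [y[i] for i in idx]
    default = max(set(in_bag_y), key=in_bag_y.count)
    return lambda row: rule.get(row[j], default)

rng = random.Random(42)
n = 200
X = [[rng.randint(0, 1), rng.randint(0, 1)] for _ in range(n)]
y = [row[0] for row in X]  # column 0 determines y; column 1 is pure noise

trees = []
for _ in range(50):
    idx = [rng.randrange(n) for _ in range(n)]      # bootstrap sample
    in_bag = set(idx)
    oob = [i for i in range(n) if i not in in_bag]  # out-of-bag cases
    # each toy tree splits on one randomly chosen column
    trees.append((fit_stump(X, y, idx, rng.randrange(2)), oob))

importances = [raw_importance(trees, X, y, j, rng) for j in range(2)]
print(importances)
```

Permuting column 0 breaks its link to y, so every tree that uses it loses roughly half of its correct oob votes; permuting column 1 only reshuffles noise, so its score stays close to zero.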

Permutation accuracy importance

function: importance, option: scale=FALSE

[Figure: variable importance (-0.05 to 0.05) of X1, X2, X3, X4]

The permutation accuracy importance has been employed as a criterion for variable selection in many recent publications in biochemistry, neurology, forestry, etc., e.g. by Bureau et al. (2005), Chen and Lin (2005), Cummings and Segal (2004), Diaz-Uriarte and de Andrés (2006), Furlanello et al. (2003), Guha and Jurs (2003), Jong et al. (2005), Lunetta et al. (2003), Lunetta et al. (2004), and Ward et al. (2006).

Permutation accuracy importance

function: importance, option: scale=TRUE

[Figure: scaled variable importance (-2 to 2) of X1, X2, X3, X4]

◮ due to variable selection bias in individual trees
  ⇒ variables with more categories end up closer to the root node of individual trees
◮ the potential change in accuracy is more pronounced for variables closer to the root node
  ⇒ the variable importance of variables with more categories shows higher deviation
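The scale=TRUE panel reports a standardized score instead of the raw average. A minimal sketch, assuming the score is the mean per-tree decrease in accuracy divided by its standard error (standard deviation over the square root of the number of trees); the randomForest documentation describes this as normalizing by the standard deviation of the differences, so treat the exact denominator as an assumption. The per-tree values below are made up for illustration.

```python
import math
import statistics

def scaled_importance(per_tree_diffs):
    """Mean per-tree decrease in accuracy divided by its standard error.
    As described in the randomForest documentation, no division is done
    when the differences have zero spread."""
    mean = statistics.mean(per_tree_diffs)
    sd = statistics.stdev(per_tree_diffs)
    if sd == 0:
        return mean
    return mean / (sd / math.sqrt(len(per_tree_diffs)))

# Hypothetical per-tree accuracy decreases for two variables with the same mean
steady = [0.010, 0.012, 0.008, 0.011, 0.009]
erratic = [0.050, -0.030, 0.040, -0.020, 0.010]
print(scaled_importance(steady), scaled_importance(erratic))
```

With equal means, the variable whose per-tree decreases fluctuate more receives the smaller scaled score, so the scaled measure depends on the spread of the per-tree values as well as on their average.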

Unbiased variable selection criteria for classification trees

◮ Strobl, Boulesteix, and Augustin (2005): exact p-value of the maximally selected Gini gain
  package: exactmaxsel, function: maxsel.test
◮ Hothorn, Hornik, and Zeileis (2006): p-value of an independence test in the conditional inference framework
  package: party, functions: ctree, cforest, internal: party:::varimp

Expectation: random forests built from unbiased trees do not produce biased variable selection measures.

Number of times each variable is selected in individual trees

[Figure: number of times X1, X2, X3, X4 are selected in individual trees (0 to 200)]

Permutation accuracy importance

internal: party:::varimp

[Figure: variable importance (-0.05 to 0.05) of X1, X2, X3, X4]
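The criteria above replace the raw gain by a p-value, which puts predictors with different numbers of categories on a common scale. As a stand-in (neither the exact maxsel.test p-value nor the conditional inference test of party), the minimal sketch below selects the variable with the smallest asymptotic p-value of Pearson's χ² test of independence and reruns the standard simulation design with hypothetical settings (n = 200, 400 iterations). Under this criterion the four uninformative predictors should be selected with roughly equal frequency.

```python
import math
import random
from collections import Counter

def chi2_sf(x, df):
    """P(chi-square with df degrees of freedom > x) for integer df, via closed
    forms of the regularized upper incomplete gamma function Q(df/2, x/2).
    df = 0 (a constant variable) is treated as no evidence: p = 1."""
    if df == 0 or x <= 0:
        return 1.0
    y = x / 2.0
    if df % 2 == 0:
        # Q(m, y) = exp(-y) * sum_{k=0}^{m-1} y^k / k!
        term = total = 1.0
        for k in range(1, df // 2):
            term *= y / k
            total += term
        return math.exp(-y) * total
    # Q(m + 1/2, y) = erfc(sqrt(y)) + sum_{k=1}^{m} exp(-y) y^(k - 1/2) / Gamma(k + 1/2)
    total = math.erfc(math.sqrt(y))
    for k in range(1, (df - 1) // 2 + 1):
        total += math.exp((k - 0.5) * math.log(y) - y - math.lgamma(k + 0.5))
    return total

def pearson_pvalue(x, y):
    """Asymptotic p-value of Pearson's chi-square test of independence
    between two categorical samples (only observed categories are used)."""
    n = len(x)
    cells = Counter(zip(x, y))
    rows, cols = Counter(x), Counter(y)
    stat = 0.0
    for r in rows:
        for c in cols:
            expected = rows[r] * cols[c] / n
            stat += (cells[(r, c)] - expected) ** 2 / expected
    return chi2_sf(stat, (len(rows) - 1) * (len(cols) - 1))

def selection_frequencies(n_iter=400, n=200, levels=(20, 10, 4, 2), seed=1):
    rng = random.Random(seed)
    wins = Counter()
    for _ in range(n_iter):
        y = [rng.randint(1, 2) for _ in range(n)]
        # uninformative predictors: drawn independently of y
        pvals = [pearson_pvalue([rng.randint(1, k) for _ in range(n)], y)
                 for k in levels]
        wins[pvals.index(min(pvals))] += 1  # select the smallest p-value
    return [wins[j] / n_iter for j in range(len(levels))]

print(selection_frequencies())
```

Like ctree, this separates variable selection from the choice of split point; only the variable is selected here.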

Bootstrap bias

Distribution of the p-values of a χ²-test before and after bootstrapping (1000 iterations, each n = 10 000)

[Figure: p-values (0 to 1) before and after bootstrapping, one panel each for X1, X2, X3, X4]

◮ bootstrap sampling with replacement artificially induces an association
◮ the effect is more pronounced for contingency tables with more cells and more degrees of freedom

Number of times each variable is selected in individual trees

Expectation: when samples (e.g. of size 0.632 · n) are drawn without replacement, the bias is eliminated.

[Figure: number of times X1, X2, X3, X4 are selected in individual trees (0 to 200)]
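The bootstrap effect can be reproduced on a small scale. A minimal sketch, assuming Pearson's χ² test with its asymptotic p-value and hypothetical settings (one uninformative predictor with 10 categories, n = 1000, 200 iterations, instead of the slide's 1000 iterations with n = 10 000): under independence, the mean p-value should stay near 0.5 for the original sample and for a subsample of size 0.632 · n drawn without replacement, but drop clearly for a bootstrap sample drawn with replacement.

```python
import math
import random
from collections import Counter

def chi2_sf(x, df):
    """P(chi-square with df degrees of freedom > x) for integer df."""
    if df == 0 or x <= 0:
        return 1.0
    y = x / 2.0
    if df % 2 == 0:
        term = total = 1.0
        for k in range(1, df // 2):
            term *= y / k
            total += term
        return math.exp(-y) * total
    total = math.erfc(math.sqrt(y))
    for k in range(1, (df - 1) // 2 + 1):
        total += math.exp((k - 0.5) * math.log(y) - y - math.lgamma(k + 0.5))
    return total

def pearson_pvalue(x, y):
    """Asymptotic p-value of Pearson's chi-square test of independence."""
    n = len(x)
    cells = Counter(zip(x, y))
    rows, cols = Counter(x), Counter(y)
    stat = 0.0
    for r in rows:
        for c in cols:
            expected = rows[r] * cols[c] / n
            stat += (cells[(r, c)] - expected) ** 2 / expected
    return chi2_sf(stat, (len(rows) - 1) * (len(cols) - 1))

def mean_pvalues(n_iter=200, n=1000, k=10, seed=7):
    """Mean p-value on the original sample, on a bootstrap sample (with
    replacement) and on a 0.632 * n subsample (without replacement)."""
    rng = random.Random(seed)
    m = int(0.632 * n)
    orig, boot, sub = [], [], []
    for _ in range(n_iter):
        x = [rng.randint(1, k) for _ in range(n)]
        y = [rng.randint(1, 2) for _ in range(n)]  # independent of x
        orig.append(pearson_pvalue(x, y))
        b = [rng.randrange(n) for _ in range(n)]   # with replacement
        boot.append(pearson_pvalue([x[i] for i in b], [y[i] for i in b]))
        s = rng.sample(range(n), m)                # without replacement
        sub.append(pearson_pvalue([x[i] for i in s], [y[i] for i in s]))
    return [sum(v) / n_iter for v in (orig, boot, sub)]

print(mean_pvalues())
```

Duplicated observations in a bootstrap sample inflate the χ² statistic, so its p-values shift toward zero even though X and Y are independent; a subsample drawn without replacement is itself an independent sample and keeps the p-values uniform.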
