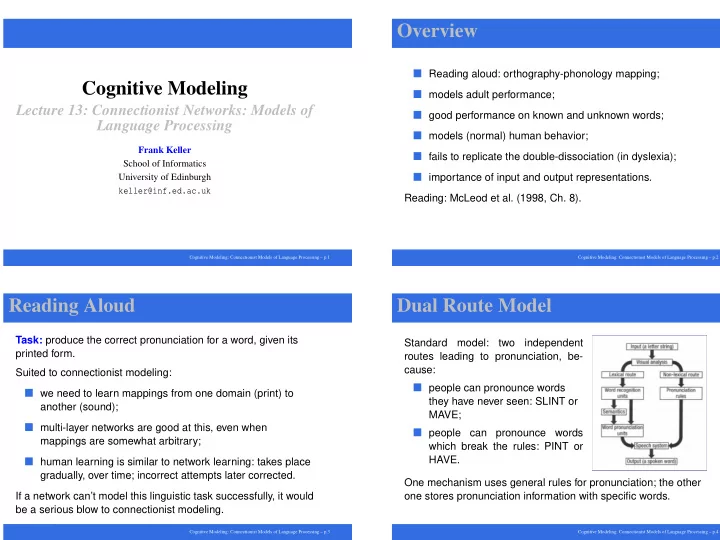

Cognitive Modeling
Lecture 13: Connectionist Networks: Models of Language Processing
Frank Keller
School of Informatics, University of Edinburgh
keller@inf.ed.ac.uk

Cognitive Modeling: Connectionist Models of Language Processing – p.1

Overview
• Reading aloud: orthography-phonology mapping;
• models adult performance;
• good performance on known and unknown words;
• models (normal) human behavior;
• fails to replicate the double dissociation (in dyslexia);
• importance of input and output representations.
Reading: McLeod et al. (1998, Ch. 8).

Reading Aloud
Task: produce the correct pronunciation for a word, given its printed form.
Suited to connectionist modeling:
• we need to learn mappings from one domain (print) to another (sound);
• multi-layer networks are good at this, even when mappings are somewhat arbitrary;
• human learning is similar to network learning: takes place gradually, over time; incorrect attempts later corrected.
If a network can’t model this linguistic task successfully, it would be a serious blow to connectionist modeling.

Dual Route Model
Standard model: two independent routes leading to pronunciation, because:
• people can pronounce words they have never seen: SLINT or MAVE;
• people can pronounce words which break the rules: PINT or HAVE.
One mechanism uses general rules for pronunciation; the other one stores pronunciation information with specific words.
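The two routes described under Dual Route Model can be illustrated with a toy sketch. Everything in it is an assumption made for illustration: the tiny lexicon, the onset and rime rules, and the ad hoc ASCII pronunciation strings are hypothetical and are not taken from any published dual-route model.

```python
# Toy dual-route reader (illustrative only).
# LEXICON, ONSET_RULES, RIME_RULES and the ASCII "phonemes" are hypothetical.
LEXICON = {"PINT": "/paInt/", "HAVE": "/hav/", "MINT": "/mInt/"}

ONSET_RULES = {"P": "p", "M": "m", "H": "h", "SL": "sl"}  # onset spelling -> sound
RIME_RULES = {"INT": "Int", "AVE": "eIv"}                 # rime spelling -> sound


def lexical_route(word):
    """Look up a stored, word-specific pronunciation (None if unknown)."""
    return LEXICON.get(word)


def rule_route(word):
    """Assemble a pronunciation from general spelling-to-sound rules."""
    for rime, rime_sound in RIME_RULES.items():
        if word.endswith(rime):
            onset = word[: -len(rime)]
            if onset in ONSET_RULES:
                return "/" + ONSET_RULES[onset] + rime_sound + "/"
    return None  # no rule applies


def read_aloud(word):
    """Prefer the lexical route; fall back to rules for unknown words."""
    stored = lexical_route(word)
    return stored if stored is not None else rule_route(word)


for w in ["SLINT", "MAVE", "PINT", "HAVE"]:
    print(w, read_aloud(w), "(rules alone:", rule_route(w), ")")
```

In this sketch the non-words SLINT and MAVE are only pronounceable via the rules, while PINT and HAVE come out regularized if the rule route is used alone.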

Behavior of Dual-Route Models
Consider: KINT, MINT, and PINT
KINT is not a word:
• no entry in the lexicon;
• can only be pronounced using the rule-based mechanism.
MINT is a word:
• can be pronounced using the rule-based mechanism;
• but exists in the lexicon, so can also be pronounced by the lexical route.
PINT is a word, but irregular:
• can only be correctly pronounced by the lexical route;
• otherwise, it would rhyme with MINT.

Evidence for the Dual-Route Model
Double dissociation: neuropsychological evidence shows differential behavior for two types of brain damage:
• phonological dyslexia: symptom: words read without difficulty, but pronunciations for non-words can’t be produced; explanation: damage to rule-based route; lexical route intact;
• surface dyslexia: symptom: words and non-words produced correctly, but errors on irregulars (tendency to regularize); explanation: damage to lexical route; rule-based route intact.
All dual-route models share:
• a lexicon for known words, with specific pronunciation information;
• a rule mechanism for the pronunciation of unknown words.

Towards a Connectionist Model
It is unclear how a connectionist model could naturally implement a dual-route model:
• no obvious way to implement a lexicon to store information about particular words; storage is typically distributed;
• no clear way to distinguish specific information from general rules; only one uniform way to store information: connection weights.
Examine the behavior of a standard 2-layer feedforward model (Seidenberg and McClelland, 1989):
• trained to pronounce all the monosyllabic words of English;
• learning implemented using standard backpropagation.

Seidenberg and McClelland (1989)
2-layer feed-forward model:
• distributed representations at input and output;
• distributed knowledge within the net;
• gradient descent learning.
Input and output:
• inputs are activated by the letters of the words: 20% activated, on average;
• outputs represent the phonological features: 12% activated, on average;
• encoding of features does not affect the success.
Processing: $\mathrm{net}_i = \sum_j w_{ij} a_j + \mathrm{bias}_i$; logistic activation function.
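The processing rule (net input followed by a logistic activation) can be sketched for a single layer as follows. The weights, biases, and input pattern are made-up numbers chosen for illustration and have nothing to do with the trained Seidenberg and McClelland network.

```python
import math


def logistic(x):
    """Logistic (sigmoid) activation function."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_output(activations, weights, biases):
    """Compute a_i = logistic(net_i), with net_i = sum_j w_ij * a_j + bias_i.

    weights[i][j] is the connection from sending unit j to receiving unit i.
    """
    outputs = []
    for w_row, bias in zip(weights, biases):
        net = sum(w * a for w, a in zip(w_row, activations)) + bias
        outputs.append(logistic(net))
    return outputs


# Hypothetical example values: 3 sending units, 2 receiving units.
inputs = [1.0, 0.0, 1.0]
weights = [[0.5, -0.3, 0.5],
           [-1.0, 0.2, 1.0]]
biases = [-1.0, 0.0]

outputs = layer_output(inputs, weights, biases)
print(outputs)  # both net inputs are 0 here, so both activations are 0.5
```

A full model would apply this step once per layer of connections (input to hidden, hidden to output).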

Training the Model
Learning:
• weights and biases are initially random;
• words are presented, and outputs are computed;
• connection weights are adjusted using backpropagation of error.
Training:
• all monosyllabic words of 3 or more letters (about 3000);
• in each epoch, a subset was presented: frequent words appeared more often;
• over 250 epochs, THE was presented 230 times, the least common word 7 times.
Performance:
• an output was considered correct if the pattern was closer to the correct pronunciation than to that of any other word;
• after 250 epochs, accuracy was 97%.

Results
The model successfully learns to map most regular and irregular word forms to their correct pronunciation.
• it does this without separate routes for lexical and rule-based processing;
• there is no word-specific memory.
It does not perform as well as humans in pronouncing non-words.
Naming latency: experiments have shown that adult reaction times for naming a word are a function of variables such as word frequency and spelling regularity.

Results
The current model cannot directly mimic latencies, since the time needed to compute the outputs is constant.
The model can be seen as simulating this observation if we relate the output error score to latency.
• phonological error score is the difference between the actual pattern and the correct pattern;
• hypothesis: high error should correlate with longer latencies.

Word Frequency Effects
Experimental finding: common words are pronounced more quickly than uncommon words.
Conventional (localist) explanation:
• frequent words require a lower threshold of activity for the “word recognition device” to “fire”;
• infrequent words require a higher threshold of activity.
In the S&M model, naming latency is modeled by the error:
• word frequency is reflected in the training procedure;
• phonological error is reduced by training, and is therefore lower for high frequency words.
The explanation of latencies in terms of error follows directly from the network's architecture and the training regime.
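The scoring criterion and the phonological error score can be sketched as below. Two assumptions are made here: the "difference" between patterns is measured as a sum of squared differences, and the target patterns are short hypothetical vectors rather than real phonological feature encodings.

```python
def error_score(actual, target):
    """Sum of squared differences between output and target pattern
    (an assumed way of measuring the 'difference' between patterns)."""
    return sum((a - t) ** 2 for a, t in zip(actual, target))


def is_correct(actual, word, targets):
    """An output counts as correct if it is closer to the word's own
    target pattern than to the target pattern of any other word."""
    closest = min(targets, key=lambda w: error_score(actual, targets[w]))
    return closest == word


# Hypothetical 4-unit target patterns (not real phonological features).
targets = {"MINT": [1, 0, 1, 0], "PINT": [1, 1, 0, 0]}

# Hypothetical outputs for MINT after much vs. little training.
well_trained = [0.9, 0.1, 0.9, 0.1]
less_trained = [0.7, 0.4, 0.6, 0.2]

for label, out in [("well trained", well_trained), ("less trained", less_trained)]:
    print(label, is_correct(out, "MINT", targets),
          round(error_score(out, targets["MINT"]), 2))
```

Both outputs count as correct, but the less trained one has the higher error score, which under the hypothesis above would correspond to a longer naming latency.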

Frequency × Regularity
In addition to faster naming of frequent words, subjects exhibit:
• faster pronunciation of regular words (e.g., GAVE or MUST) than irregular words (e.g., HAVE or PINT);
• but this effect interacts with frequency: it is only observed with low frequency words.
Regulars: small frequency effect; it takes slightly longer to pronounce low frequency regulars.
Irregulars: large frequency effect.
The model mimics this pattern of behavior in the error.
Dual route model: both lexical and rule outcome possible; lexical route wins for high frequency words (as it’s faster).

Frequency × Neighborhood Size
The neighborhood size of a word is defined as the number of words that differ from it by one letter.
Neighborhood size has an effect on naming latency:
• not much influence for high frequency words;
• low frequency words with small neighborhoods are read more slowly than words with large neighborhoods.
Shows cooperation of the information learned in response to different (but similar) inputs.
Again, the connectionist model directly predicts this.
Dual route model: ad hoc explanation, grouping across localist representations of the lexicon.

Experimental Data vs. Model
[Figure: frequency × regularity. Regular words: filled circles; irregular words: open squares.]
[Figure: frequency × neighborhood size. Small neighborhood: filled circles; large neighborhood: open squares.]

Spelling-to-Sound Consistency
Consistent spelling patterns: all words have the same pronunciation, e.g., _UST.
Inconsistent patterns: more than one pronunciation, e.g., _AVE.
Observation: adult readers produce pronunciations more quickly for non-words derived from consistent patterns (NUST) than from inconsistent patterns (MAVE).
Dual route: difficult: both are processed by the non-lexical route; would need to distinguish consistent and inconsistent rules.
The error in the connectionist model predicts this latency effect.
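The definition of neighborhood size given under Frequency × Neighborhood Size can be sketched as a small function. This assumes that "differ by one letter" means a same-length word with exactly one letter substituted, and the vocabulary below is a hypothetical handful of words, not the training corpus.

```python
def differs_by_one_letter(a, b):
    """True if a and b have equal length and differ in exactly one position
    (assumed reading of 'differ by one letter')."""
    return len(a) == len(b) and sum(x != y for x, y in zip(a, b)) == 1


def neighborhood_size(word, vocabulary):
    """Number of vocabulary words that differ from `word` by one letter."""
    return sum(differs_by_one_letter(word, other) for other in vocabulary)


# Hypothetical mini-vocabulary for illustration.
vocabulary = ["MINT", "PINT", "HINT", "LINT", "MIST", "MUST", "HAVE", "GAVE"]

for w in ["MINT", "HAVE", "KINT"]:
    print(w, neighborhood_size(w, vocabulary))
```

With this vocabulary MINT has four neighbors (PINT, HINT, LINT, MIST) and HAVE has one (GAVE); the non-word KINT also has four, since the function does not require the word itself to be in the vocabulary.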
