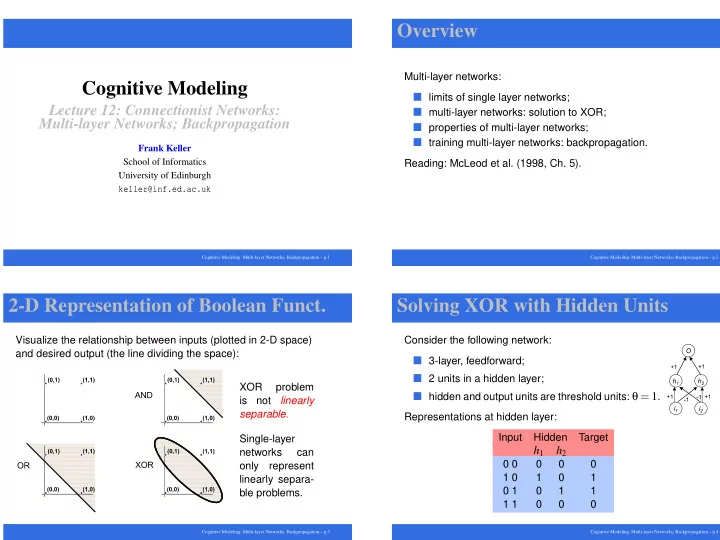

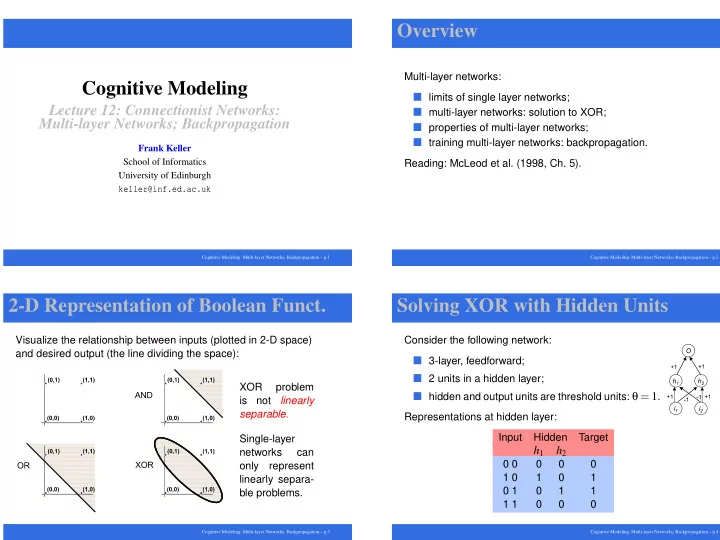

Overview Multi-layer networks: Cognitive Modeling � limits of single layer networks; Lecture 12: Connectionist Networks: � multi-layer networks: solution to XOR; Multi-layer Networks; Backpropagation � properties of multi-layer networks; � training multi-layer networks: backpropagation. Frank Keller School of Informatics Reading: McLeod et al. (1998, Ch. 5). University of Edinburgh keller@inf.ed.ac.uk Cognitive Modeling: Multi-layer Networks; Backpropagation – p.1 Cognitive Modeling: Multi-layer Networks; Backpropagation – p.2 2-D Representation of Boolean Funct. Solving XOR with Hidden Units Visualize the relationship between inputs (plotted in 2-D space) Consider the following network: and desired output (the line dividing the space): � 3-layer, feedforward; � 2 units in a hidden layer; XOR problem � hidden and output units are threshold units: θ = 1 . is not linearly separable. Representations at hidden layer: Input Hidden Target Single-layer h 1 h 2 networks can 0 0 0 0 0 only represent 1 0 1 0 1 linearly separa- 0 1 0 1 1 ble problems. 1 1 0 0 0 Cognitive Modeling: Multi-layer Networks; Backpropagation – p.3 Cognitive Modeling: Multi-layer Networks; Backpropagation – p.4

Solving XOR with Hidden Units Backpropagation of Error (a) Forward propagation of activity: Problem: current learning rules cannot be used for hidden units: net out = ∑ wa hidden � we don’t know what the error is at these nodes; a out = f ( net out ) � delta rule requires that we know the desired activation: ∆ w = 2 εδ f ∗ a in (b) Backward propagation of error: Solution: algorithm based on: net hidden = ∑ w δ out � forward propagation of activity and δ hidden = f ( net hidden ) � backpropagation of error through the network. Cognitive Modeling: Multi-layer Networks; Backpropagation – p.5 Cognitive Modeling: Multi-layer Networks; Backpropagation – p.6 Learning in Multi-layer Networks Learning in Multi-layer Networks Reason why backpropagation sometimes fails to find the The generalized Delta rule: correction mapping function: ∆ w i j = ηδ i a j δ i = f ′ ( net i )( t i − a i ) It gets stuck in a local minimum instead of finding the global for output nodes δ i = f ′ ( net i ) ∑ k δ k w ki minimum: for hidden nodes where f ′ ( net i ) = a i ( 1 − a i ) Multi-layer networks can, in principle, learn any mapping function: not constrained to problems which are linearly backprop trapped here separable. global minimum is here While there exists a solution for any mapping problem, backpropagation is not guaranteed to find it (unlike the perceptron convergence rule). Cognitive Modeling: Multi-layer Networks; Backpropagation – p.7 Cognitive Modeling: Multi-layer Networks; Backpropagation – p.8

Example of Backpropagation Example of Backpropagation Consider the following network, containing hidden nodes. Calculate the weight changes for both layers of the network, assuming targets of: 1 1 The generalized Delta rule: ∆ w i j = ηδ i a j δ i = f ′ ( net i )( t i − a i ) for output nodes δ i = f ′ ( net i ) ∑ k δ k w ki for hidden nodes where f ′ ( net i ) = a i ( 1 − a i ) Cognitive Modeling: Multi-layer Networks; Backpropagation – p.9 Cognitive Modeling: Multi-layer Networks; Backpropagation – p.10 Some Comments Biological Plausibility Single layer networks (perceptrons): Backpropagation requires bi-directional signals: � can only solve problems which are linearly separable; � forward propagation of activation and backward propagation of � but a solution is guaranteed by the perceptron convergence rule. error; Multi-layer networks (with hidden units): � nodes must ‘know’ the strengths of all synaptic connections to compute error: non-local. � can in principle solve any input-output mapping function; � backpropagation performs a gradient descent of the error surface; But: axons are uni-directional transmitters. Possible justification: backpropagation explains what is learned, not how. � can get caught in a local minimum; � cannot guarantee to find the solution. Network architecture: Finding solutions: � successful learning crucially depends on number of hidden units; � manipulate learning rule parameters: learning rate, momentum; � there is no way to know, a priori, what that number is. � brute force search (sampling) of the error surface to find a set of Alternative solution: use a network with a local learning rule, starting position in weight space; e.g., Hebbian learning. � computationally impractical for complex networks. Cognitive Modeling: Multi-layer Networks; Backpropagation – p.11 Cognitive Modeling: Multi-layer Networks; Backpropagation – p.12

Summary References McLeod, Peter, Kim Plunkett, and Edmund T. Rolls. 1998. Introduction to Connectionist � There are simple Boolean functions (e.g., XOR) that a Modelling of Cognitive Processes . Oxford: Oxford University Press. single layer perceptron can’t represent; Plaut, David C., James L. McClelland, Mark S. Seidenberg, and Karalyn Patterson. 1996. Understanding normal and impaired word reading: Computational principles in � hidden layers need to be introduced to fix this problem; quasi-regular domains. Psychological Review 103: 56–115. Seidenberg, Mark S., and James L. McClelland. 1989. A distributed developmental model of � the perceptron learning rule needs to be extended to the word recognition and naming. Psychological Review 96: 523–568. generalized delta rule; � it performs forward propagation of activity and backpropagation of error through the network; � it is not guaranteed to find a global minimum of the error function; might get stuck in local minima; � limited biological plausibility of backpropagation. Cognitive Modeling: Multi-layer Networks; Backpropagation – p.13 Cognitive Modeling: Multi-layer Networks; Backpropagation – p.14

Recommend

More recommend