Overview Computational Tricks Objective Computationally efficient - PDF document

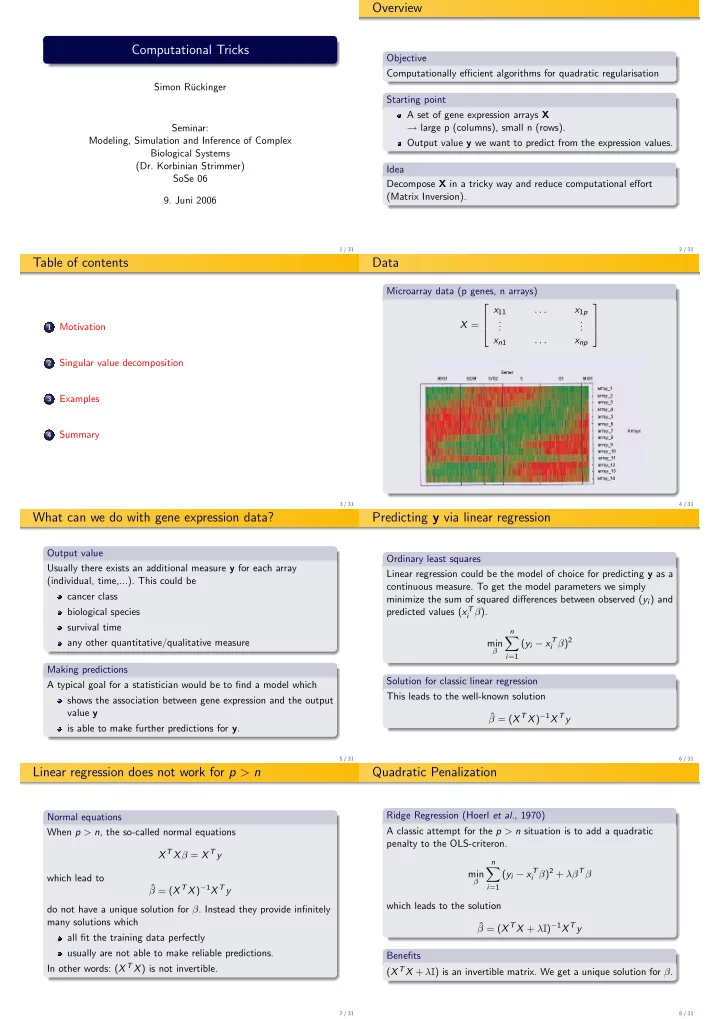

Overview Computational Tricks Objective Computationally efficient algorithms for quadratic regularisation Simon R uckinger Starting point A set of gene expression arrays X large p (columns), small n (rows). Seminar: Modeling,

Overview Computational Tricks Objective Computationally efficient algorithms for quadratic regularisation Simon R¨ uckinger Starting point A set of gene expression arrays X → large p (columns), small n (rows). Seminar: Modeling, Simulation and Inference of Complex Output value y we want to predict from the expression values. Biological Systems (Dr. Korbinian Strimmer) Idea SoSe 06 Decompose X in a tricky way and reduce computational effort (Matrix Inversion). 9. Juni 2006 1 / 31 2 / 31 Table of contents Data Microarray data (p genes, n arrays) x 11 . . . x 1 p . . . . X = 1 Motivation . . x n 1 . . . x np 2 Singular value decomposition 3 Examples 4 Summary 3 / 31 4 / 31 What can we do with gene expression data? Predicting y via linear regression Output value Ordinary least squares Usually there exists an additional measure y for each array Linear regression could be the model of choice for predicting y as a (individual, time,...). This could be continuous measure. To get the model parameters we simply cancer class minimize the sum of squared differences between observed ( y i ) and predicted values ( x T biological species i β ). survival time n � ( y i − x T i β ) 2 any other quantitative/qualitative measure min β i =1 Making predictions Solution for classic linear regression A typical goal for a statistician would be to find a model which This leads to the well-known solution shows the association between gene expression and the output value y ˆ β = ( X T X ) − 1 X T y is able to make further predictions for y . 5 / 31 6 / 31 Linear regression does not work for p > n Quadratic Penalization Ridge Regression (Hoerl et al. , 1970) Normal equations A classic attempt for the p > n situation is to add a quadratic When p > n , the so-called normal equations penalty to the OLS-criteron. X T X β = X T y n � i β ) 2 + λβ T β ( y i − x T min which lead to β ˆ β = ( X T X ) − 1 X T y i =1 which leads to the solution do not have a unique solution for β . Instead they provide infinitely many solutions which ˆ β = ( X T X + λ I ) − 1 X T y all fit the training data perfectly usually are not able to make reliable predictions. Benefits In other words: ( X T X ) is not invertible. ( X T X + λ I ) is an invertible matrix. We get a unique solution for β . 7 / 31 8 / 31

Expensive Computation Table of contents Matrix Inversion 1 Motivation ( X T X + λ I ) is a p × p matrix. Thus the computational cost of inverting this matrix is O ( p 3 ) operations. 2 Singular value decomposition p is large Typical expression arrays include between 1,000 and 20,000 genes. For 10,000 genes it means we have to invert a 3 Examples 10 , 000 × 10 , 000-matrix. This involves O (10 , 000 3 ) operations. 4 Summary How long does it take? Even on a modern PC this is a matter of hours or even days. 9 / 31 10 / 31 Getting ’tricky’ Getting ’tricky’ Singular value decomposition (SVD) Singular value decomposition (SVD) Every Matrix X ∈ R p × n can be decomposited in the following way Every Matrix X ∈ R p × n can be decomposited in the following way X = UDV T = RV T X = UDV T = RV T T T R � �� � d 1 ... d 1 ... V V X = U X = U d n d n � �� � � �� � � �� � � �� � � �� � � �� � nxp nxn nxp nxn nxn nxn � �� � � �� � pxn pxn 11 / 31 11 / 31 Applying SVD to ridge regression About Singular Value Decomposition Another solution for ridge regression Computational costs Plugging X = RV T into Getting the parameters for ridges regression via the SVD trick involves − 1 X T y ˆ β = ( X T X + λ I ) reducing p variables to n variables by SVD → O ( pn 2 ) � �� � pxp solving n dimensional ridge regression → O ( n 3 ) transforming back to p dimensions → O ( np ) one gets − 1 R T y ˆ β = V ( R T R + λ I ) Thus it saves inverting a p × p matrix with O ( p 3 ) operations. � �� � nxn Software Conclusion SVD is a standard linear algebra tool. It is implemented in most We can get the parameters for ridge regression by mathematical languages. In R applying SVD to X svd() fast.svd() → faster for small n, large p using R as a ’data-matrix’ ( library(corpcor) ) multiplying β by V 12 / 31 13 / 31 Applying SVD to other models I Applying SVD to other models II Linear predictors Quadratic regularization Many models ’connect’ the variables with the outcome through a Like linear regression, all models using a linear predictor and some linear predictor . E.g. kind of loss function don’t work properly when p ≫ n . logistic regression This can be fixed by adding a quadratic penalty linear discriminant analysis n � L ( y i , β 0 + x T i β ) + λβ T β. support-vector machines min β 0 ,β i =1 Loss functions The good news is The parameters are estimated by minimizing a loss function � n i =1 L ( y i , β 0 + x T The SVD trick can be used with all models in the above i β ) which can be the mentioned form. squared error All aspects of model evaluation can be performed in this negative log-likelihood reduced space. etc... 14 / 31 15 / 31

Reduced space computations Cross-validation I Theorem 1 Let X = RV T be the SVD of X, and denote by r i the i th row of R, Where to get λ from? a vector of n predictor values for the ith observation. Consider the The regularization parameter λ is often selected by k-fold pair of optimization problems: cross-validation. n � ( ˆ β 0 , ˆ How does cross-validation work? L ( y i , β 0 + x T i β ) + λβ T β β ) = argmin β 0 ,β ∈ R p divide the training data into k groups of size n i =1 k fit the model to k − 1 and test it on 1 k of the data n k � ( ˆ θ 0 , ˆ L ( y i , θ 0 + r T i θ ) + λθ T θ θ ) = argmin repeat this k seperate times θ 0 ,θ ∈ R n i =1 average the results Then ˆ β 0 = ˆ θ 0 , and ˆ β = V ˆ θ . This is done for a series of values for λ , and a preferred value is chosen. Proof see Hastie et al. , 2004 16 / 31 17 / 31 Cross-validation II Illustration / Eigengenes X → R Corollary 1 The entire model-selection process via cross-validation can be performed using a single reduced data set R . Hence, when we perform cross-validation, we simply sample from the rows of R . Proof Cross-validation relies on predictions x T β , which are equivariant under orthogonal rotations. ✷ Eigengenes If the columns of X are centered, the columns or R are the principal components of X also called eigengenes . 18 / 31 19 / 31 Derivatives I Derivatives II Corollary 2 1st/2nd derivate Define If the loss function is based on a log-likelihood we can: n n � � L ( y i , β 0 + x T L ( y i , β 0 + r T L ( β ) = i β ) , L ( θ ) = i θ ) . perform score tests with its first derivative i =1 i =1 gain variances of the parameters from its second derivative (information matrix / hessian matrix) Then with θ = V T β L ( β ) = L ( θ ). ⇒ If L is differentiable, then Expensive computation ∂ L ( β ) = V ∂ L ( θ ) Differentation in the p-dimensional space is a waste of time. ∂θ ; ∂β SVD once again ∂ 2 L ( β ) ∂β∂β T = V ∂ 2 L ( θ ) ∂θ∂θ T V T , SVD helps us to obtain the p-dimensional derivates from the n-dimensional versions. with the partial derivatives in the right-hand side evaluated at θ = V T β 20 / 31 21 / 31 Derivatives III Parenthesis: Woodbury matrix identity Matrix inversion lemma ( A + UCV ) − 1 = A − 1 − A − 1 U ( C − 1 + VA − 1 U ) − 1 VA − 1 Proof of Corollary 2 where The euqivalence A is p × p L ( β ) = L ( θ ) U is p × n follows immediately from the identity C is n × n X = RV T , V is n × p and the fact that x T and r T are the i th rows of X and R . i i Link to SVD The derivatives are simple applications of the chain rule to For L ( β ) = L ( θ ). ✷ A = λ I UCV = X T X = ( VDU T )( UDV T ) = VD 2 V T we see the coherency with SVD. 22 / 31 23 / 31

Recommend

More recommend

Explore More Topics

Stay informed with curated content and fresh updates.