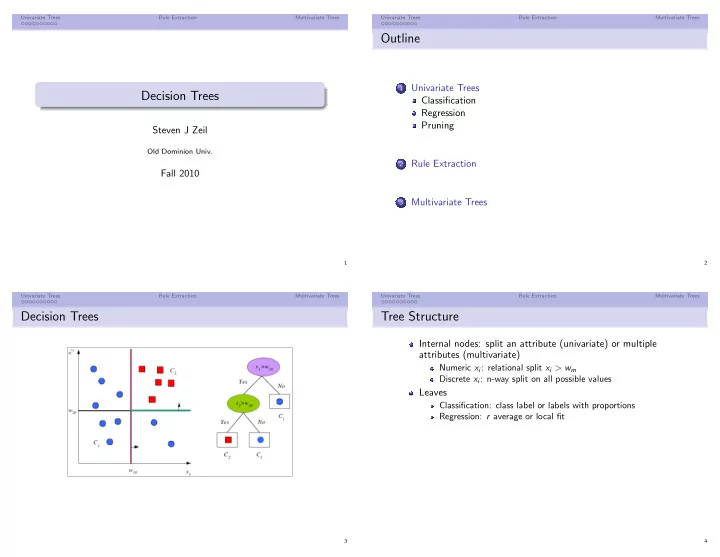

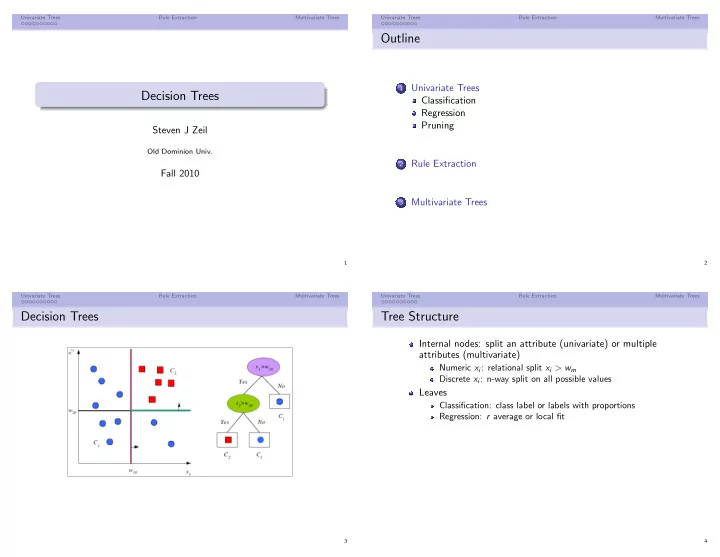

Univariate Trees Rule Extraction Multivariate Trees Univariate Trees Rule Extraction Multivariate Trees Outline Univariate Trees 1 Decision Trees Classification Regression Pruning Steven J Zeil Old Dominion Univ. Rule Extraction 2 Fall 2010 Multivariate Trees 3 1 2 Univariate Trees Rule Extraction Multivariate Trees Univariate Trees Rule Extraction Multivariate Trees Decision Trees Tree Structure Internal nodes: split an attribute (univariate) or multiple attributes (multivariate) Numeric x i : relational split x i > w m Discrete x i : n-way split on all possible values Leaves Classification: class label or labels with proportions Regression: r average or local fit 3 4

Univariate Trees Rule Extraction Multivariate Trees Univariate Trees Rule Extraction Multivariate Trees Classification Trees Entropy For a node m , let N m be # of training instances that reach Shannon’s entropy is a measure whose m , of which N i m are in class C i . expected value is the # of bits required to encode a message m = N i ˆ x , m ) ≡ p i m P ( C i | � Alteratively, the amount of N m information we are missing if we don’t know the message Node m is pure if p i m is 0 or 1 Represents a limit on what can be Measure of purity is “entropy” achived with lossless compression Variants: ID3, CART, C4.5 5 6 Univariate Trees Rule Extraction Multivariate Trees Univariate Trees Rule Extraction Multivariate Trees Why Entropy? Entropy and Tree Nodes Information content of x ∈ { v 1 , ..., v n } Entropy is a measure of the impurity of I ( x ) = − log p ( x ) a node If all instance that reach a node are in Log (base 2) reflects idea of encoding as a bit string. the same class, then we lose no Entropy infromation by not asking which � instances actually brought us here. I ( § ) ≡ E [ I ( § )] = − √ ( § � ) log √ ( § � ) By contrast, if the instances reaching � this node are evenly distributed among Why “entropy” rather than “expected information content”? the classes, we learn nothing by coming This formula resembles one from physics: here that we did not know in the step before. � H ( x ) = − k B p i ln p i i and, like the original entropy, information loss is minimized as order increases. 7 8

Univariate Trees Rule Extraction Multivariate Trees Univariate Trees Rule Extraction Multivariate Trees Greedy Splitting GenerateTree(X) If node m is pure, generate a leaf and stop. if I ( X ) < θ I then Create leaf labelled by majority class in X Otherwise split and continue recursively else Impurity after the split: Suppose N mj of the N m take branch j i ← SplitAttribute(X) and that N i mj of these belong to C i for all branches of x i do Find X i falling in branch N i ˆ mj x , m , j ) ≡ p i P ( C i | � mj = Generatetree( X i ) N mj end for end if n N i K I ′ � mj � p i mj log 2 p i m = − mj N mj j =1 i =1 Select the variable and split that minimizes impurity For numeric variables, include choices of split positions 9 10 Univariate Trees Rule Extraction Multivariate Trees Univariate Trees Rule Extraction Multivariate Trees SplitAttribute(X) Regression Trees � 1 if x ∈ X m MinEnt ← ∞ Let b m ( � x ) = for all attributes i = 1 , . . . , d do 0 otherwise if x i is discrete with n values then Split X into X 1 , . . . , X n by x i e ← SplitEntropy( X 1 , . . . , X n ) Error at node m: if e < MinEnt then MinEnt ← e 1 bestf ← i ( r t − g m ) 2 b m ( � � x t ) E m = end if N m else for all possible splits of numeric attribute do t Split X into X 1 , X 2 by x i x t ) r t � t b m ( � e ← SplitEntropy( X 1 , X 2 ) g m = if e < MinEnt then � t b m ( � x t ) MinEnt ← e bestf ← i end if After splitting end for end if end for return bestf 1 ( r t − g mj ) 2 b mj ( � E ′ � � x t ) = m N m j t x t ) r t � t b mj ( � g mj = � t b mj ( � x t ) 11 12

Univariate Trees Rule Extraction Multivariate Trees Univariate Trees Rule Extraction Multivariate Trees Examples Pruning Pre-pruning: Stop generating nodes when number of training instances reaching a node is small (e.g., 5% of trianing set) Post-pruning: Grow full tree then prune subtrees that overfit on trianing set use a set-aside pruning set Replace subtrees by a leaf and eval on pruning set. If leaf performs as well as subtree, keep the leaf. Example: Replace lowest node ( x < 6 . 31) in third tree by leaf with 0.9 13 14 Univariate Trees Rule Extraction Multivariate Trees Univariate Trees Rule Extraction Multivariate Trees Rule Extraction Multivariate Trees Trees can be interpreted as programming instructions: R1: IF (age > 38.5) AND years-in-job>2.5) then y=0.8 R2: IF (age > 38.5) AND years-in-job <= 2.5) then y=0.6 R3: IF (age <= 38.5) AND job-type=’A’) then y=0.4 R4: IF (age <= 38.5) AND job-type=’B’) then y=0.3 R5: IF (age <= 38.5) AND job-type=’C’) then y=0.2 Called a rule base . 15 16

Recommend

More recommend