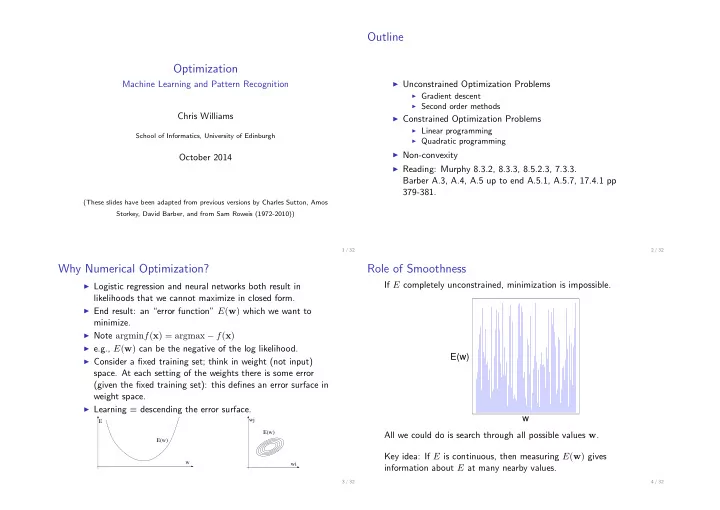

Optimization
Machine Learning and Pattern Recognition

Chris Williams
School of Informatics, University of Edinburgh
October 2014

(These slides have been adapted from previous versions by Charles Sutton, Amos Storkey, David Barber, and from Sam Roweis (1972-2010))

Outline

◮ Unconstrained Optimization Problems
  ◮ Gradient descent
  ◮ Second order methods
◮ Constrained Optimization Problems
  ◮ Linear programming
  ◮ Quadratic programming
◮ Non-convexity
◮ Reading: Murphy 8.3.2, 8.3.3, 8.5.2.3, 7.3.3. Barber A.3, A.4, A.5 up to end A.5.1, A.5.7, 17.4.1 pp 379-381.

Why Numerical Optimization?

◮ Logistic regression and neural networks both result in likelihoods that we cannot maximize in closed form.
◮ End result: an "error function" $E(\mathbf{w})$ which we want to minimize.
◮ Note $\operatorname{argmin} f(x) = \operatorname{argmax} -f(x)$
◮ e.g., $E(\mathbf{w})$ can be the negative of the log likelihood.
◮ Consider a fixed training set; think in weight (not input) space. At each setting of the weights there is some error (given the fixed training set): this defines an error surface in weight space.
◮ Learning ≡ descending the error surface.

[Figure: error surface $E(\mathbf{w})$ plotted against a single weight $w$, and against two weights $w_i$, $w_j$]

Role of Smoothness

If $E$ is completely unconstrained, minimization is impossible.

[Figure: an arbitrary $E(w)$ plotted against $w$]

All we could do is search through all possible values $\mathbf{w}$.

Key idea: If $E$ is continuous, then measuring $E(\mathbf{w})$ gives information about $E$ at many nearby values.

[Figure: a continuous $E(w)$ plotted against $w$]

Role of Derivatives

◮ Another powerful tool that we have is the gradient
$$\nabla E = \left( \frac{\partial E}{\partial w_1}, \frac{\partial E}{\partial w_2}, \ldots, \frac{\partial E}{\partial w_D} \right)^T.$$
◮ Two ways to think of this:
  ◮ Each $\frac{\partial E}{\partial w_k}$ says: If we wiggle $w_k$ and keep everything else the same, does the error get better or worse?
  ◮ The function
  $$f(\mathbf{w}) = E(\mathbf{w}_0) + (\mathbf{w} - \mathbf{w}_0)^\top \nabla E|_{\mathbf{w}_0}$$
  is a linear function of $\mathbf{w}$ that approximates $E$ well in a neighbourhood around $\mathbf{w}_0$. (Taylor's theorem)
◮ Gradient points in the direction of steepest error ascent in weight space.

Numerical Optimization Algorithms

◮ Numerical optimization algorithms try to solve the general problem
$$\min_{\mathbf{w}} E(\mathbf{w})$$
◮ Different types of optimization algorithms expect different inputs
  ◮ Zero-th order: Requires only a procedure that computes $E(\mathbf{w})$. These are basically search algorithms.
  ◮ First order: Also requires the gradient $\nabla E$
  ◮ Second order: Also requires the Hessian matrix $\nabla\nabla E$
  ◮ High order: Uses higher order derivatives. Rarely useful.
  ◮ Constrained optimization: Only a subset of $\mathbf{w}$ values are legal.
◮ Today we'll discuss first order, second order, and constrained optimization

Optimization Algorithm Cartoon

◮ Basically, numerical optimization algorithms are iterative. They generate a sequence of points
$$\mathbf{w}_0, \mathbf{w}_1, \mathbf{w}_2, \ldots$$
$$E(\mathbf{w}_0), E(\mathbf{w}_1), E(\mathbf{w}_2), \ldots$$
$$\nabla E(\mathbf{w}_0), \nabla E(\mathbf{w}_1), \nabla E(\mathbf{w}_2), \ldots$$
◮ Basic optimization algorithm is

    initialize w
    while E(w) is unacceptably high
        calculate g = ∇E
        compute direction d from w, E(w), g
            (can use previous gradients as well...)
        w ← w − η d
    end while
    return w

Gradient Descent

◮ Locally the direction of steepest descent is the negative gradient, $-\nabla E$.
◮ Simple gradient descent algorithm:

    initialize w
    while E(w) is unacceptably high
        calculate g ← ∂E/∂w
        w ← w − η g
    end while
    return w

◮ $\eta$ is known as the step size (sometimes called learning rate)
  ◮ We must choose $\eta > 0$.
  ◮ $\eta$ too small → too slow
  ◮ $\eta$ too large → instability
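The simple gradient descent loop above can be sketched in a few lines of Python. This is a minimal illustration, not the lecture's own code: the test function E(w) = w², the tolerance `tol`, and the cap `max_steps` are assumptions standing in for the informal test "while E(w) is unacceptably high".

```python
def gradient_descent(error, grad, w0, eta, tol=1e-12, max_steps=1000):
    """Simple gradient descent: repeat w <- w - eta * g.

    `tol` and `max_steps` are illustrative stand-ins for the informal
    stopping test "while E(w) is unacceptably high".
    """
    w = w0
    steps = 0
    while error(w) > tol and steps < max_steps:
        g = grad(w)        # calculate g <- dE/dw
        w = w - eta * g    # step against the gradient
        steps += 1
    return w, steps


# Illustrative one-dimensional error function (an assumption).
def error(w):
    return w * w


def grad(w):
    return 2.0 * w


# A moderate step size, one that is too large, and one that is too small.
w_good, steps_good = gradient_descent(error, grad, w0=1.0, eta=0.1)
w_large, steps_large = gradient_descent(error, grad, w0=1.0, eta=1.1, max_steps=25)
w_tiny, steps_tiny = gradient_descent(error, grad, w0=1.0, eta=1e-6, max_steps=25)

print("eta = 0.1 :", w_good, "after", steps_good, "steps")
print("eta = 1.1 :", w_large, "after", steps_large, "steps")
print("eta = 1e-6:", w_tiny, "after", steps_tiny, "steps")
```

With the moderate step size the loop stops because the error drops below the tolerance. With the large step size the iterates grow in magnitude, and with the tiny step size they barely move from the starting point.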

Effect of Step Size

Goal: Minimize $E(w) = w^2$

◮ Take $\eta = 0.1$. Works well.
$$w_0 = 1.0$$
$$w_1 = w_0 - 0.1 \cdot 2 w_0 = 0.8$$
$$w_2 = w_1 - 0.1 \cdot 2 w_1 = 0.64$$
$$w_3 = w_2 - 0.1 \cdot 2 w_2 = 0.512$$
$$\cdots$$
$$w_{24} = 0.0047$$

[Figure: $E(w) = w^2$ plotted for $w$ from −3 to 3]

Effect of Step Size

Goal: Minimize $E(w) = w^2$

◮ Take $\eta = 1.1$. Not so good. If you step too far, you can leap over the region that contains the minimum.
$$w_0 = 1.0$$
$$w_1 = w_0 - 1.1 \cdot 2 w_0 = -1.2$$
$$w_2 = w_1 - 1.1 \cdot 2 w_1 = 1.44$$
$$w_3 = w_2 - 1.1 \cdot 2 w_2 = -1.728$$
$$\cdots$$
$$w_{24} = 79.50$$

[Figure: $E(w) = w^2$ plotted for $w$ from −3 to 3]

◮ Finally, take $\eta = 0.000001$. What happens here?

Batch vs online

◮ So far all the objective functions we have seen look like:
$$E(\mathbf{w}; D) = \sum_{n=1}^{N} E_n(\mathbf{w}; y_n, \mathbf{x}_n).$$
$D = \{(\mathbf{x}_1, y_1), (\mathbf{x}_2, y_2), \ldots, (\mathbf{x}_N, y_N)\}$ is the training set.
◮ Each term in the sum depends on only one training instance
◮ The gradient in this case is always
$$\frac{\partial E}{\partial \mathbf{w}} = \sum_{n=1}^{N} \frac{\partial E_n}{\partial \mathbf{w}}$$
◮ The simple gradient descent algorithm seen earlier scans all the training instances before changing the parameters.
◮ Seems dumb if we have millions of training instances. Surely we can get a gradient that is "good enough" from fewer instances, e.g., a couple thousand? Or maybe even from just one?

Batch vs online

◮ Batch learning: use all patterns in training set, and update weights after calculating
$$\frac{\partial E}{\partial \mathbf{w}} = \sum_{n=1}^{N} \frac{\partial E_n}{\partial \mathbf{w}}$$
◮ On-line learning: adapt weights after each pattern presentation, using $\frac{\partial E_n}{\partial \mathbf{w}}$
◮ Batch: more powerful optimization methods are available
◮ Batch: easier to analyze
◮ On-line: more feasible for huge or continually growing datasets
◮ On-line: may have ability to jump over local optima
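The batch and on-line updates described above can be compared on a tiny problem. The sketch below is only an illustration under stated assumptions: the four-point noise-free data set, the per-instance error E_n(w) = (y_n − w x_n)², the step size, and the iteration counts are all hypothetical choices, not taken from the slides.

```python
import random

# Hypothetical noise-free training set generated from y = 2x (an assumption).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x for x in xs]
N = len(xs)


def grad_n(w, n):
    """Gradient of the single-instance error E_n(w) = (y_n - w * x_n)**2."""
    return -2.0 * xs[n] * (ys[n] - w * xs[n])


def batch_grad(w):
    """Batch gradient: the sum of the per-instance gradients."""
    return sum(grad_n(w, n) for n in range(N))


eta = 0.01  # illustrative step size

# Batch learning: every update uses all N training instances.
w_batch = 0.0
for _ in range(50):
    w_batch -= eta * batch_grad(w_batch)

# On-line learning: every update uses one randomly chosen instance.
rng = random.Random(0)  # fixed seed so the run is repeatable
w_online = 0.0
for _ in range(500):
    j = rng.randrange(N)
    w_online -= eta * grad_n(w_online, j)

print("batch  estimate of w:", w_batch)
print("online estimate of w:", w_online)
```

Both runs approach w = 2 on this data. Because the data here are noise-free, the single-instance gradients all agree on where the minimum is; on noisy data the on-line iterates would typically keep fluctuating around it unless the step size is decreased.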

Algorithms for Batch Gradient Descent

◮ Here is batch gradient descent.

    initialize w
    while E(w) is unacceptably high
        calculate g ← Σ_{n=1}^{N} ∂E_n/∂w
        w ← w − η g
    end while
    return w

◮ This is just the algorithm we have seen before. We have just "substituted in" the fact that $E = \sum_{n=1}^{N} E_n$.

Algorithms for Online Gradient Descent

◮ Here is (a particular type of) online gradient descent algorithm

    initialize w
    while E(w) is unacceptably high
        pick j as uniform random integer in 1 ... N
        calculate g ← ∂E_j/∂w
        w ← w − η g
    end while
    return w

◮ This version is also called "stochastic gradient descent" because we have picked the training instance randomly.
◮ There are other variants of online gradient descent.

Problems With Gradient Descent

Typical gradient descent can be fooled in several ways, which is why more sophisticated methods are used when possible.

◮ Setting the step size $\eta$
◮ Shallow valleys
◮ Highly curved error surfaces
◮ Local minima

Shallow Valleys

One problem: gradient descent moves quickly down the valley walls but very slowly along the valley bottom.

[Figure: gradient $dE/dw$ on an error surface with a shallow valley]

◮ Gradient descent goes very slowly once it hits the shallow valley.
◮ One hack to deal with this is momentum
$$\mathbf{d}_t = \beta\, \mathbf{d}_{t-1} + (1 - \beta)\, \eta\, \nabla E(\mathbf{w}_t)$$
◮ Now you have to set both $\eta$ and $\beta$. Can be difficult and irritating.
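The momentum rule above can be tried on a toy shallow valley. This is a rough sketch under several assumptions: the slides give only the formula for d_t, so the update w ← w − d_t (with the step size already folded into d_t) is assumed here, and the error function E(w) = ½(w₀² + 100 w₁²), the starting point, and the values of η and β are illustrative choices.

```python
def error(w):
    """Toy valley: low curvature along w[0], steep walls along w[1]."""
    return 0.5 * (w[0] ** 2 + 100.0 * w[1] ** 2)


def grad(w):
    """Gradient of the toy valley error."""
    return [w[0], 100.0 * w[1]]


def plain_gd(w, eta, num_steps):
    """Plain gradient descent: w <- w - eta * g."""
    for _ in range(num_steps):
        g = grad(w)
        w = [wi - eta * gi for wi, gi in zip(w, g)]
    return w


def momentum_gd(w, eta, beta, num_steps):
    """Momentum: d_t = beta * d_{t-1} + (1 - beta) * eta * g, then w <- w - d_t.

    The update w <- w - d_t is an assumption; the slides only define d_t.
    """
    d = [0.0 for _ in w]
    for _ in range(num_steps):
        g = grad(w)
        d = [beta * di + (1.0 - beta) * eta * gi for di, gi in zip(d, g)]
        w = [wi - di for wi, di in zip(w, d)]
    return w


start = [1.0, 1.0]
# Plain descent is unstable on this surface once eta exceeds 2/100,
# so it is run just below that limit.
w_plain = plain_gd(start, eta=0.019, num_steps=200)
# With beta = 0.9 the momentum iteration remains stable at a larger eta.
w_mom = momentum_gd(start, eta=0.3, beta=0.9, num_steps=200)

print("plain    E =", error(w_plain))
print("momentum E =", error(w_mom))
```

In this toy setting the momentum run ends with a lower error after the same number of steps, mainly because it tolerates a larger step size along the steep direction. The price is the one noted above: both η and β now have to be chosen.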

Curved Error Surfaces

◮ A second problem with gradient descent is that the gradient might not point directly at the nearest local minimum. This is because of curvature.

[Figure: gradient $dE/d\mathbf{w}$ drawn on elliptical error contours]

◮ Note: gradient is the locally steepest direction. Need not directly point toward local optimum.
◮ Local curvature is measured by the Hessian matrix: $H_{ij} = \partial^2 E / \partial w_i \partial w_j$.
◮ By the way, do these ellipses remind you of anything?

Second Order Information

◮ Taylor expansion
$$E(\mathbf{w} + \boldsymbol{\delta}) \simeq E(\mathbf{w}) + \boldsymbol{\delta}^T \nabla_{\mathbf{w}} E + \frac{1}{2} \boldsymbol{\delta}^T H \boldsymbol{\delta}$$
where
$$H_{ij} = \frac{\partial^2 E}{\partial w_i \partial w_j}$$
◮ $H$ is called the Hessian.
◮ If $H$ is positive definite, this models the error surface as a quadratic bowl.

Quadratic Bowl

[Figure: surface plot of a quadratic bowl]

Direct Optimization

◮ A quadratic function
$$E(\mathbf{w}) = \frac{1}{2} \mathbf{w}^T H \mathbf{w} + \mathbf{b}^T \mathbf{w}$$
can be minimised directly using
$$\mathbf{w} = -H^{-1} \mathbf{b}$$
but this requires
  ◮ Knowing/computing $H$, which has size $O(D^2)$ for a $D$-dimensional parameter space
  ◮ Inverting $H$, $O(D^3)$
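The direct solution follows from setting the gradient of the quadratic to zero: for symmetric H, ∇E = Hw + b, so ∇E = 0 gives w = −H⁻¹b, which is a minimum when H is positive definite. The sketch below checks this on a two-dimensional example. The particular H and b are made-up values, and the explicit 2×2 inverse is used only to keep the example free of dependencies.

```python
def minimise_quadratic_2d(H, b):
    """Return w = -H^{-1} b for a 2x2 matrix H via the explicit inverse."""
    (h00, h01), (h10, h11) = H
    det = h00 * h11 - h01 * h10
    if det == 0.0:
        raise ValueError("H is singular, so there is no unique minimiser")
    # H^{-1} = (1 / det) * [[h11, -h01], [-h10, h00]]
    x0 = (h11 * b[0] - h01 * b[1]) / det
    x1 = (-h10 * b[0] + h00 * b[1]) / det
    return [-x0, -x1]


def error(H, b, w):
    """E(w) = 0.5 * w^T H w + b^T w."""
    Hw = [H[0][0] * w[0] + H[0][1] * w[1], H[1][0] * w[0] + H[1][1] * w[1]]
    return 0.5 * (w[0] * Hw[0] + w[1] * Hw[1]) + b[0] * w[0] + b[1] * w[1]


def gradient(H, b, w):
    """Gradient H w + b (valid for symmetric H)."""
    return [
        H[0][0] * w[0] + H[0][1] * w[1] + b[0],
        H[1][0] * w[0] + H[1][1] * w[1] + b[1],
    ]


# Hypothetical symmetric positive definite Hessian and linear term.
H = [[2.0, 0.5], [0.5, 1.0]]
b = [-1.0, 1.0]

w_star = minimise_quadratic_2d(H, b)
g_star = gradient(H, b, w_star)

print("minimiser w* =", w_star)
print("gradient at w* =", g_star)
```

The gradient at the returned point is zero up to rounding error, and nearby points have a higher error. For large D one would normally solve the linear system Hw = −b rather than form the inverse explicitly, but the storage and cubic-time costs listed above still apply.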
