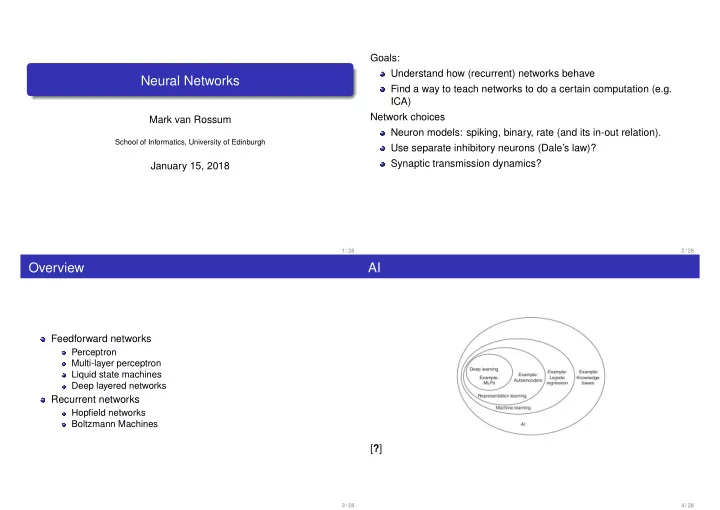

Neural Networks
Mark van Rossum
School of Informatics, University of Edinburgh
January 15, 2018

Goals: Understand how (recurrent) networks behave. Find a way to teach networks to do a certain computation (e.g. ICA).

Network choices: Neuron models: spiking, binary, rate (and its input-output relation). Use separate inhibitory neurons (Dale’s law)? Synaptic transmission dynamics?

Overview: AI. Feedforward networks: perceptron, multi-layer perceptron, liquid state machines, deep layered networks. Recurrent networks: Hopfield networks, Boltzmann machines.

[ ? ]

AI history: McCulloch & Pitts (1943): binary neurons can implement any finite state machine. Rosenblatt: the perceptron learning rule allows learning of (some) classification problems. Backprop: universal function approximator. Generalizes, but has local minima. [ ? ]

Perceptrons: Supervised binary classification of N-dimensional pattern vectors $\mathbf{x}$:
$$y = H(\mathbf{w}\cdot\mathbf{x} + b),$$
where $H$ is the step function. General trick: replace the bias $b = w_b \cdot 1$ with an 'always on' input. Perceptron learning algorithm: the patterns are learnable if they are linearly separable. If learnable, the rule converges. Cerebellum?

Multi-layer perceptron (MLP): Overcomes the limited set of functions of the single perceptron. With continuous units, an MLP can approximate any function! Traditionally one hidden layer. More layers do not enhance the repertoire (but could help learning, see below). Learning: backpropagation of errors. Error:
$$E = \sum_{\mu=1}^{P} E^\mu = \sum_{\mu=1}^{P} \left(y^\mu_{\text{goal}} - y^\mu_{\text{actual}}(\mathbf{x}^\mu; \mathbf{w})\right)^2$$
Other cost functions are possible. Gradient descent (batch):
$$\Delta w_{ij} = -\eta \frac{\partial E}{\partial w_{ij}},$$
where $\mathbf{w}$ are all the weights (input → hidden, hidden → output, bias). Stochastic descent: use $\Delta w_{ij} = -\eta \, \partial E^\mu / \partial w_{ij}$. Learning MLPs is slow and can get stuck in local minima.
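
The perceptron slide states the convergence result but not the update itself. Below is a minimal Python sketch that assumes the classic error-correction update $\Delta \mathbf{w} = \eta (y_{\text{goal}} - y)\,\mathbf{x}$ (our assumption; the slide does not spell the rule out), with the bias folded in as an 'always on' input. The helper names (`step`, `with_bias`, `train_perceptron`) are illustrative. On AND, which is linearly separable, it reaches an error-free epoch; on XOR it never does.

```python
def step(a):
    """Heaviside step function H(a): 1 if a > 0, else 0."""
    return 1 if a > 0 else 0


def with_bias(x):
    """Bias trick: append an 'always on' input, so the last weight plays the role of b."""
    return list(x) + [1]


def predict(w, x):
    """Perceptron output y = H(w . x + b), with b folded into w."""
    return step(sum(wi * xi for wi, xi in zip(w, with_bias(x))))


def train_perceptron(patterns, targets, eta=1, max_epochs=100):
    """Assumed error-correction rule: w <- w + eta * (y_goal - y) * x after each mistake.

    Returns (w, epoch) for the first error-free epoch, or (w, None) if none occurs.
    """
    w = [0] * (len(patterns[0]) + 1)  # one extra weight for the always-on input
    for epoch in range(max_epochs):
        errors = 0
        for x, y_goal in zip(patterns, targets):
            y = predict(w, x)
            if y != y_goal:
                errors += 1
                w = [wi + eta * (y_goal - y) * xi for wi, xi in zip(w, with_bias(x))]
        if errors == 0:
            return w, epoch
    return w, None


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
w_and, epoch_and = train_perceptron(inputs, [0, 0, 0, 1])  # AND: linearly separable
w_xor, epoch_xor = train_perceptron(inputs, [0, 1, 1, 0])  # XOR: not linearly separable
print(w_and, epoch_and)  # [2, 1, -2] 5
print(epoch_xor)         # None
```

With integer inputs and `eta=1` the weights stay integers, so the run is exactly reproducible.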

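To make the gradient-descent rule for the MLP concrete, here is a small sketch of backpropagation for a hypothetical 2-2-1 network with logistic units and the squared error $E$ from the MLP slide. It checks the backpropagated $\partial E/\partial w_{ij}$ against central finite differences and then takes one batch step $\Delta w_{ij} = -\eta\,\partial E/\partial w_{ij}$. The network size, the logistic transfer function, the random initialization and `eta` are assumptions chosen for illustration.

```python
import math
import random


def g(a):
    """Logistic transfer function."""
    return 1.0 / (1.0 + math.exp(-a))


def forward(V, W, x):
    """V: one weight row per hidden unit; W: output weights. Last entry of each is a bias."""
    xb = list(x) + [1.0]                                       # always-on input
    h = [g(sum(v * xi for v, xi in zip(row, xb))) for row in V]
    hb = h + [1.0]                                             # always-on hidden unit
    y = g(sum(w * hi for w, hi in zip(W, hb)))
    return xb, hb, y


def error(V, W, data):
    """E = sum over patterns of (y_goal - y_actual)^2."""
    return sum((t - forward(V, W, x)[2]) ** 2 for x, t in data)


def backprop(V, W, data):
    """Batch gradients dE/dV and dE/dW by backpropagating the output error."""
    dV = [[0.0] * len(row) for row in V]
    dW = [0.0] * len(W)
    for x, t in data:
        xb, hb, y = forward(V, W, x)
        delta_out = -2.0 * (t - y) * y * (1.0 - y)              # dE/d(net input of output)
        for k in range(len(W)):
            dW[k] += delta_out * hb[k]
        for k, row in enumerate(V):
            delta_k = delta_out * W[k] * hb[k] * (1.0 - hb[k])  # error at hidden unit k
            for i in range(len(row)):
                dV[k][i] += delta_k * xb[i]
    return dV, dW


def max_gradient_mismatch(V, W, data, eps=1e-6):
    """Largest |backprop gradient - central finite difference| over all weights."""
    dV, dW = backprop(V, W, data)
    worst = 0.0
    for k in range(len(W)):
        Wp, Wm = W[:], W[:]
        Wp[k] += eps
        Wm[k] -= eps
        num = (error(V, Wp, data) - error(V, Wm, data)) / (2 * eps)
        worst = max(worst, abs(num - dW[k]))
    for k in range(len(V)):
        for i in range(len(V[k])):
            Vp = [r[:] for r in V]
            Vm = [r[:] for r in V]
            Vp[k][i] += eps
            Vm[k][i] -= eps
            num = (error(Vp, W, data) - error(Vm, W, data)) / (2 * eps)
            worst = max(worst, abs(num - dV[k][i]))
    return worst


random.seed(0)
xor = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
V = [[random.gauss(0.0, 1.0) for _ in range(3)] for _ in range(2)]  # 2 hidden units
W = [random.gauss(0.0, 1.0) for _ in range(3)]                     # output unit
mismatch = max_gradient_mismatch(V, W, xor)
print(mismatch)  # tiny: backprop agrees with the numerical derivative

# One batch gradient-descent step: Delta w = -eta * dE/dw
eta = 0.05
E_before = error(V, W, xor)
dV, dW = backprop(V, W, xor)
V = [[v - eta * d for v, d in zip(row, drow)] for row, drow in zip(V, dV)]
W = [w - eta * d for w, d in zip(W, dW)]
E_after = error(V, W, xor)
print(E_before, E_after)
```

Replacing the batch sum in `backprop` by a single pattern gives the stochastic variant with $\partial E^\mu/\partial w_{ij}$.
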
Deep MLPs: Traditional MLPs are also called shallow. While deeper nets do not have more computational power, they can lead to better representations. Better representations lead to better generalization and better learning. Learning slows down in deep networks, as the transfer functions $g()$ saturate at 0 or 1. Solutions: pre-training; convolutional networks; better representations by adding noisy/partial stimuli.

Liquid state machines [ ? ]: Motivation: arbitrary spatio-temporal computation without precise design. Create a pool of spiking neurons with random connections. This results in very complex dynamics if the weights are strong enough. Similar to echo state networks (but those are rate based); both are known as reservoir computing. Similar theme to the HMAX model: only learn at the output layer.

Optimal reservoir? The best reservoir has rich yet predictable dynamics.

Edge of chaos [ ? ]: network of 250 binary nodes, $w_{ij} \sim \mathcal{N}(0, \sigma^2)$ (the x-axis of the figure is recurrent strength).
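
One way to probe whether a reservoir is ordered or chaotic is to run two copies from states that differ in a single unit, drive both with the same input, and see whether the difference dies out or spreads. The sketch below assumes synchronous sign units, a common random binary input $\text{in}(t)$ added to every unit, and Gaussian weights $w_{ij} \sim \mathcal{N}(0,\sigma^2)$. The perturbation measure, the input coupling and the default size (100 units rather than 250, to keep pure Python fast) are our choices, not the setup behind the figure.

```python
import random


def damage_spread(sigma, n=100, steps=30, seed=0):
    """Normalized Hamming distance between two copies of a random binary network
    after `steps` synchronous updates, starting from states that differ in one unit.

    Both copies share the weights w_ij ~ N(0, sigma^2) and the same input in(t).
    0 means the perturbation died out (ordered); larger values mean it spread (chaotic).
    """
    rng = random.Random(seed)
    w = [[rng.gauss(0.0, sigma) for _ in range(n)] for _ in range(n)]
    s1 = [rng.choice((-1, 1)) for _ in range(n)]
    s2 = s1[:]
    s2[0] = -s2[0]                      # flip a single unit
    for _ in range(steps):
        u = rng.choice((-1, 1))         # common binary input in(t), assumed unit strength
        s1 = [1 if sum(wij * sj for wij, sj in zip(row, s1)) + u >= 0 else -1 for row in w]
        s2 = [1 if sum(wij * sj for wij, sj in zip(row, s2)) + u >= 0 else -1 for row in w]
    return sum(a != b for a, b in zip(s1, s2)) / n


for sigma in (0.0, 0.02, 0.1, 0.5):
    print(sigma, damage_spread(sigma))
```

For small $\sigma$ the shared input dominates and the copies synchronize; as the recurrent strength grows, the recurrent field can carry the perturbation forward instead.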

Optimal reservoir? Task: Parity$(\text{in}(t), \text{in}(t-1), \text{in}(t-2))$. Performance is best (darkest in the plot) at the edge of chaos. Does chaos exist in the brain? In spiking network models: yes [ ? ]. In real brains: ?

Relation to Support Vector Machines: Map the problem into a high-dimensional space $F$; there it often becomes linearly separable. This can be done without much computational overhead (kernel trick).

Hopfield networks: All-to-all connected network (can be relaxed). Binary units $s_i = \pm 1$, or rate units with a sigmoidal transfer function. Dynamics:
$$s_i(t+1) = \operatorname{sign}\Big(\sum_j w_{ij} s_j(t)\Big).$$
Using symmetric weights $w_{ij} = w_{ji}$, we can define an energy
$$E = -\frac{1}{2} \sum_{ij} s_i w_{ij} s_j.$$
Auto-associative: pattern completion. Simple (suboptimal) learning rule:
$$w_{ij} = \sum_{\mu=1}^{M} x^\mu_i x^\mu_j,$$
where $\mu$ indexes the patterns $\mathbf{x}^\mu$. Under these conditions the network moves from its initial condition (the stimulus, $\mathbf{s}(t=0) = \mathbf{x}$) into the closest attractor state ('memory').
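
A minimal Hopfield sketch: store two orthogonal ±1 patterns with the Hebbian rule above (setting $w_{ii} = 0$, a common convention we assume here), flip one bit of a stored pattern, and let the synchronous sign dynamics settle. The energy is only guaranteed not to increase under asynchronous (one unit at a time) updates; synchronous updates can end in 2-cycles, so `recall` caps the number of steps. The tie-break sign(0) = +1 is also an assumption.

```python
def hebbian_weights(patterns):
    """w_ij = sum_mu x_i^mu x_j^mu, with the diagonal set to zero (assumed convention)."""
    n = len(patterns[0])
    return [[0 if i == j else sum(p[i] * p[j] for p in patterns) for j in range(n)]
            for i in range(n)]


def sign(a):
    """sign with sign(0) = +1, so units stay binary (+1/-1)."""
    return 1 if a >= 0 else -1


def update(w, s):
    """Synchronous dynamics: s_i(t+1) = sign(sum_j w_ij s_j(t))."""
    return [sign(sum(wij * sj for wij, sj in zip(row, s))) for row in w]


def energy(w, s):
    """E = -1/2 sum_ij s_i w_ij s_j (meaningful for symmetric w)."""
    n = len(s)
    return -0.5 * sum(s[i] * w[i][j] * s[j] for i in range(n) for j in range(n))


def recall(w, s, max_steps=20):
    """Iterate the dynamics until a fixed point (attractor) or max_steps is reached."""
    for _ in range(max_steps):
        s_next = update(w, s)
        if s_next == s:
            return s
        s = s_next
    return s


p1 = [1, 1, 1, 1, -1, -1, -1, -1]
p2 = [1, -1, 1, -1, 1, -1, 1, -1]
w = hebbian_weights([p1, p2])
cue = [-1] + p1[1:]                   # p1 with its first bit flipped
print(recall(w, cue) == p1)           # True
print(energy(w, cue), energy(w, p1))  # -12.0 -24.0
```

The corrupted cue is pulled back into the stored pattern, which sits at lower energy.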

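The kernel trick from the SVM slide can be checked on XOR. For the degree-2 polynomial kernel $k(\mathbf{x},\mathbf{y}) = (\mathbf{x}\cdot\mathbf{y}+1)^2$ (our choice of kernel for illustration) there is an explicit 6-dimensional feature map $\phi$ with $k(\mathbf{x},\mathbf{y}) = \phi(\mathbf{x})\cdot\phi(\mathbf{y})$, so inner products in $F$ never require building $\phi$. In that space XOR with ±1 coding is separated by a hyperplane that reads out only the $x_1 x_2$ coordinate.

```python
import math


def phi(x):
    """Explicit feature map for the degree-2 polynomial kernel in 2-D."""
    x1, x2 = x
    r2 = math.sqrt(2.0)
    return [1.0, r2 * x1, r2 * x2, x1 * x1, x2 * x2, r2 * x1 * x2]


def kernel(x, y):
    """k(x, y) = (x . y + 1)^2, evaluated in input space without forming phi."""
    return (x[0] * y[0] + x[1] * y[1] + 1.0) ** 2


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


# XOR with +/-1 coding: label +1 when the two inputs differ.
xor = [((-1, -1), -1), ((-1, 1), 1), ((1, -1), 1), ((1, 1), -1)]

# Kernel trick: the inner product in feature space equals the kernel value.
for x, _ in xor:
    for y, _ in xor:
        assert abs(kernel(x, y) - dot(phi(x), phi(y))) < 1e-9

# A separating hyperplane in feature space: weight only on the x1*x2 coordinate.
w_feat = [0.0, 0.0, 0.0, 0.0, 0.0, -1.0]
for x, label in xor:
    print(x, label, dot(w_feat, phi(x)))  # same sign as the label for all four points
```
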
Winnerless competition: How to escape from attractor states? Noise, asymmetric connections, adaptation. From [ ? ]. Indirect experimental evidence using maze deformation [ ? ].

Boltzmann machines: A Hopfield network is not 'smart'. In a Hopfield network it is impossible to learn only $(1,1,-1), (-1,-1,-1), (1,-1,1), (-1,1,1)$ but not $(-1,-1,1), (1,1,1), (-1,1,-1), (1,-1,-1)$ (XOR again), because $\langle x_i \rangle = \langle x_i x_j \rangle = 0$ for both sets. Two, somewhat unrelated, modifications: Introduce hidden units; these can extract features. Stochastic updating:
$$p(s_i = 1) = \frac{1}{1 + e^{-\beta E_i}}, \qquad E_i = \sum_j w_{ij} s_j - \theta_i, \qquad E = \sum_i E_i.$$
$T = 1/\beta$ is the temperature (set to some arbitrary value).

Learning in Boltzmann machines: The generated probability for state $s_\alpha$, after equilibrium is reached, is given by the Boltzmann distribution
$$P_\alpha = \frac{1}{Z} \sum_\gamma e^{-\beta H_{\alpha\gamma}}, \qquad H_{\alpha\gamma} = -\frac{1}{2} \sum_{ij} w_{ij} s_i s_j, \qquad Z = \sum_{\alpha\gamma} e^{-\beta H_{\alpha\gamma}},$$
where $\alpha$ labels the states of the visible units and $\gamma$ the hidden states.
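
The claim that a Hopfield network cannot tell the two pattern sets apart can be checked directly: in both sets every first moment and every pairwise moment is zero, so the Hebbian weights $w_{ij} = \sum_\mu x_i^\mu x_j^\mu$ vanish. The sets differ only in the third-order moment $\langle x_1 x_2 x_3 \rangle$ (−1 vs. +1), which pairwise weights cannot represent; this is where hidden units help. The set names `learn` and `avoid` are ours.

```python
from itertools import combinations

learn = [(1, 1, -1), (-1, -1, -1), (1, -1, 1), (-1, 1, 1)]
avoid = [(-1, -1, 1), (1, 1, 1), (-1, 1, -1), (1, -1, -1)]


def mean(values):
    values = list(values)
    return sum(values) / len(values)


def moments(patterns):
    """<x_i>, <x_i x_j> (i < j) and <x_1 x_2 x_3> over a set of +/-1 patterns."""
    n = len(patterns[0])
    first = [mean(p[i] for p in patterns) for i in range(n)]
    second = {(i, j): mean(p[i] * p[j] for p in patterns) for i, j in combinations(range(n), 2)}
    third = mean(p[0] * p[1] * p[2] for p in patterns)
    return first, second, third


def hebbian(patterns):
    """Off-diagonal Hopfield weights w_ij = sum_mu x_i^mu x_j^mu."""
    n = len(patterns[0])
    return {(i, j): sum(p[i] * p[j] for p in patterns) for i, j in combinations(range(n), 2)}


for name, pats in (("learn", learn), ("avoid", avoid)):
    print(name, moments(pats), hebbian(pats))
# Both sets: all first and second moments are 0, and all Hebbian weights are 0.
# Only the third-order moment differs: -1 for `learn`, +1 for `avoid`.
```
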

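For a tiny network, the Boltzmann distribution over visible states can be computed by brute-force enumeration: sum $e^{-\beta H_{\alpha\gamma}}$ over the hidden states $\gamma$ and normalize by $Z$. The sketch assumes ±1 units ordered visible-first, a symmetric weight matrix with zero diagonal, and no thresholds. Enumeration is exponential in the number of units, which is why practical Boltzmann machines rely on sampling instead.

```python
import itertools
import math


def hamiltonian(w, s):
    """H = -1/2 sum_ij w_ij s_i s_j for a symmetric w with zero diagonal."""
    n = len(s)
    return -0.5 * sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(n))


def visible_distribution(w, n_visible, n_hidden, beta=1.0):
    """P_alpha = (1/Z) sum_gamma exp(-beta H_{alpha gamma}) by exhaustive enumeration.

    Units 0..n_visible-1 are visible (state alpha), the rest are hidden (state gamma).
    """
    visible = list(itertools.product((-1, 1), repeat=n_visible))
    hidden = list(itertools.product((-1, 1), repeat=n_hidden))
    unnorm = {a: sum(math.exp(-beta * hamiltonian(w, a + g)) for g in hidden) for a in visible}
    Z = sum(unnorm.values())  # Z = sum over all (alpha, gamma)
    return {a: u / Z for a, u in unnorm.items()}


c = 1.0
w = [[0.0, 0.0, c],   # visible unit 0 couples only to hidden unit 2
     [0.0, 0.0, c],   # visible unit 1 couples only to hidden unit 2
     [c, c, 0.0]]
P = visible_distribution(w, n_visible=2, n_hidden=1)
for alpha, p in sorted(P.items()):
    print(alpha, round(p, 3))
```

In this example both visible units couple positively to one hidden unit, so the aligned visible states come out more probable even though there is no direct visible-visible weight: the hidden unit induces the correlation.
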
As in other generative models, we match the true distribution to the generated one: minimize the KL divergence between the input distribution $G_\alpha$ and the generated distribution $P_\alpha$,
$$C = \sum_\alpha G_\alpha \log \frac{G_\alpha}{P_\alpha}.$$
Minimizing gives [ ? ]
$$\Delta w_{ij} = \eta\beta \left[\langle s_i s_j \rangle_{\text{clamped}} - \langle s_i s_j \rangle_{\text{free}}\right]$$
(note, $w_{ij} = w_{ji}$). Wake ('clamped') phase vs. sleep ('dreaming') phase. Clamped phase: Hebbian-type learning, averaged over input patterns and hidden states. Sleep phase: unlearn erroneous correlations. The hidden units will 'discover' statistical regularities.

Boltzmann machines: applications: Shifter circuit. Learning symmetry [ ? ]: create a network that categorizes horizontal, vertical and diagonal symmetry (a 2nd-order predicate).

Restricted Boltzmann machines: The need for multiple relaxation runs for every weight update (triple loop) makes training Boltzmann networks very slow. Speed up learning in restricted Boltzmann machines: no hidden-hidden connections; don't wait for the sleep state to fully settle; stack multiple layers (deep learning). Application: high-quality autoencoder (i.e. compression) [ ? ] [also good web talks by Hinton on this].

Le et al., ICML 2012: a deep auto-encoder network with $10^9$ weights learns high-level features from images, unsupervised.
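
The wake/sleep rule can be run exactly on a toy problem. The sketch below assumes a fully visible Boltzmann machine with three ±1 units and no hidden units or thresholds, so the clamped average is just the data average. Both phases are computed by exact enumeration rather than by letting the network relax, which sidesteps the slow relaxation runs. The target $G$ (uniform over states with $s_0 = s_1$), `eta` and the number of steps are illustrative assumptions.

```python
import itertools
import math

N = 3
STATES = list(itertools.product((-1, 1), repeat=N))
PAIRS = list(itertools.combinations(range(N), 2))


def model_distribution(w, beta=1.0):
    """Boltzmann distribution over STATES; H = -sum_{i<j} w_ij s_i s_j (= -1/2 sum_ij, w symmetric)."""
    unnorm = [math.exp(beta * sum(w[(i, j)] * s[i] * s[j] for i, j in PAIRS)) for s in STATES]
    Z = sum(unnorm)
    return [u / Z for u in unnorm]


def correlations(dist):
    """<s_i s_j> for every pair, under a distribution given as a list over STATES."""
    return {(i, j): sum(q * s[i] * s[j] for q, s in zip(dist, STATES)) for i, j in PAIRS}


def kl(G, P):
    """C = sum_alpha G_alpha log(G_alpha / P_alpha); terms with G_alpha = 0 contribute 0."""
    return sum(g * math.log(g / p) for g, p in zip(G, P) if g > 0)


def train(G, eta=0.1, beta=1.0, n_steps=200):
    """Delta w_ij = eta * beta * (<s_i s_j>_clamped - <s_i s_j>_free)."""
    w = {pair: 0.0 for pair in PAIRS}
    clamped = correlations(G)  # no hidden units: the clamped phase is the data average
    for _ in range(n_steps):
        free = correlations(model_distribution(w, beta))  # exact free ('sleep') phase
        for pair in PAIRS:
            w[pair] += eta * beta * (clamped[pair] - free[pair])
    return w


# Hypothetical target G: uniform over the four states with s_0 == s_1.
G = [0.25 if s[0] == s[1] else 0.0 for s in STATES]
w = train(G)
kl_start = kl(G, model_distribution({pair: 0.0 for pair in PAIRS}))
kl_end = kl(G, model_distribution(w))
print(w)
print(kl_start, kl_end)
```

The learned $w_{01}$ grows while $w_{02}$ and $w_{12}$ stay at zero, and the KL divergence $C$ drops from $\log 2$ at $w = 0$.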

Relation to schema learning? (Maria Shippi & MvR) Cortex learns semantic/schema (i.e. statistical) information. The presence of a schema can speed up subsequent fact learning.

Discussion: Networks are still very challenging. Can we predict activity? What is the network trying to do? What are the learning rules?

References
