Multi-Layer Networks and Backpropagation Algorithm, M. Soleymani

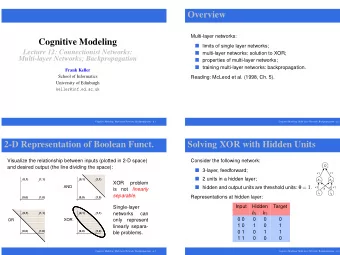

M. Soleymani, Sharif University of Technology, Fall 2017
Most slides have been adapted from Fei-Fei Li's lectures (cs231n, Stanford, 2017) and some from Hinton's lectures (NN for Machine Learning).

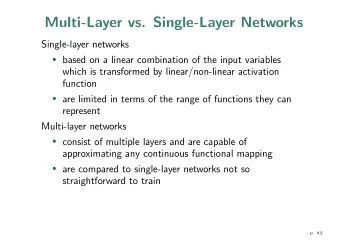

MLP with Different Number of Layers
MLP with unit step activation function. Decision region found by an output unit:

| Structure | Type of decision regions | Interpretation |
|---|---|---|
| Single layer (no hidden layer) | Half space | Region found by a hyperplane |
| Two layer (one hidden layer) | Polyhedral (open or closed) region | Intersection of half spaces |
| Three layer (two hidden layers) | Arbitrary regions | Union of polyhedra |

Beyond linear models

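MLP with single hidden layer
• Two-layer MLP (the number of layers of adaptive weights is counted)

$$o_k(\mathbf{x}) = \psi\Big(\sum_{j=0}^{M} w_{jk}^{[2]} z_j\Big) \;\Rightarrow\; o_k(\mathbf{x}) = \psi\Big(\sum_{j=0}^{M} w_{jk}^{[2]}\, \phi\Big(\sum_{i=0}^{d} w_{ij}^{[1]} x_i\Big)\Big)$$

• Bias units: $x_0 = 1$, $z_0 = 1$
• Indices: inputs $i = 0, \dots, d$; hidden units $j = 1, \dots, M$; outputs $k = 1, \dots, K$
• Hidden units apply $\phi$; output units apply $\psi$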

Learns to extract features
• MLP with one hidden layer is a generalized linear model:
– $o_k(\mathbf{x}) = \psi\big(\sum_{j=1}^{M} w_{jk}^{[2]} f_j(\mathbf{x})\big)$
– $f_j(\mathbf{x}) = \phi\big(\sum_{i=0}^{d} w_{ij}^{[1]} x_i\big)$
– The form of the nonlinearity (basis functions $f_j$) is adapted from the training data (not fixed in advance)
• $f_j$ is defined by parameters that can also be adapted during training
• Thus, we don't need expert knowledge or time-consuming tuning of hand-crafted features

Deep networks
• That deeper networks (with multiple hidden layers) can work better than single-hidden-layer networks is an empirical observation
– despite the fact that their representational power is equal.
• In practice, 3-layer neural networks will usually outperform 2-layer nets, but going even deeper may not help much more.
– This is in stark contrast to convolutional networks, where depth has been found to matter a great deal.

How to adjust weights for multi-layer networks?
• We need multiple layers of adaptive, non-linear hidden units. But how can we train such nets?
– We need an efficient way of adapting all the weights, not just the last layer.
– Learning the weights going into hidden units is equivalent to learning features.
– This is difficult because nobody is telling us directly what the hidden units should do.

Gradient descent
• We want $\nabla_{\mathbf{W}} L(\mathbf{W})$
• Numerical gradient:
– slow :(
– approximate :(
– easy to write :)
• Analytic gradient:
– fast :)
– exact :)
– error-prone :(
• In practice: derive the analytic gradient, then check your implementation against the numerical gradient
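
The "derive analytically, check numerically" advice can be sketched as follows. This is a minimal illustration and not code from the slides: the toy loss, the helper names `numerical_gradient` and `analytic_gradient`, and the step size `h` are all assumptions chosen for the example.

```python
import numpy as np

def numerical_gradient(f, w, h=1e-5):
    """Centered finite-difference estimate of df/dw, one coordinate at a time."""
    grad = np.zeros_like(w)
    for idx in np.ndindex(w.shape):
        old = w[idx]
        w[idx] = old + h
        f_plus = f(w)
        w[idx] = old - h
        f_minus = f(w)
        w[idx] = old  # restore the original value
        grad[idx] = (f_plus - f_minus) / (2 * h)
    return grad

def loss(w):
    # Hypothetical toy loss, used only to have something to differentiate.
    return np.sum(w ** 2) + np.sum(np.sin(w))

def analytic_gradient(w):
    # Hand-derived gradient of the toy loss above.
    return 2 * w + np.cos(w)

rng = np.random.default_rng(0)
w = rng.standard_normal((3, 2))

num = numerical_gradient(loss, w)
ana = analytic_gradient(w)

# Relative error is a common way to compare the two estimates.
rel_error = np.max(np.abs(num - ana) / np.maximum(1e-8, np.abs(num) + np.abs(ana)))
print("max relative error:", rel_error)
```

The numerical version needs two loss evaluations per parameter, which is why it is only practical as a check on small inputs.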

Training multi-layer networks
• Backpropagation
– Training algorithm that is used to adjust weights in multi-layer networks (based on the training data)
– The backpropagation algorithm is based on gradient descent
– Uses the chain rule and dynamic programming to efficiently compute gradients
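
As a rough sketch of what "chain rule plus saved intermediates" means in code, consider a tiny expression evaluated forward and then differentiated backward. The function f = (x + y) * z and the input values are assumptions picked for illustration; they are not taken from the slide text.

```python
# Forward pass: compute and keep the intermediate value q.
x, y, z = -2.0, 5.0, -4.0   # illustrative inputs (assumed)
q = x + y                   # q = 3
f = q * z                   # f = -12

# Backward pass: start from df/df = 1 and apply the chain rule node by node.
df_df = 1.0
df_dq = z * df_df           # multiply node: local gradient w.r.t. q is z
df_dz = q * df_df           # multiply node: local gradient w.r.t. z is q
df_dx = 1.0 * df_dq         # add node: local gradient is 1
df_dy = 1.0 * df_dq         # add node: local gradient is 1

print(df_dx, df_dy, df_dz)
```

The intermediate `df_dq` is computed once and reused for both `df_dx` and `df_dy`, which is the dynamic-programming aspect in miniature.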

Computational graphs

Backpropagation: a simple example

How to propagate the gradients backward

Another example

• Gradient of each input = [local gradient] × [upstream gradient]
– x0: [2] × [0.2] = 0.4
– w0: [-1] × [0.2] = -0.2
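
The numbers above can be reproduced with a small sigmoid neuron. Only w0 = 2 and x0 = -1 are implied by the local gradients on the slide; the remaining values (w1, x1, w2) are assumptions chosen so that the upstream gradient comes out near 0.2.

```python
import math

w0, x0 = 2.0, -1.0    # implied by the local gradients [2] and [-1]
w1, x1 = -3.0, -2.0   # assumed values, not in the slide text
w2 = -3.0             # assumed bias term

# Forward pass through the sigmoid neuron.
s = w0 * x0 + w1 * x1 + w2          # pre-activation
out = 1.0 / (1.0 + math.exp(-s))    # sigmoid output

# Backward pass: the gradient flowing into s is the sigmoid derivative.
ds = out * (1.0 - out)              # upstream gradient, roughly 0.2

# [local gradient] x [upstream gradient]
dx0 = w0 * ds
dw0 = x0 * ds
dx1 = w1 * ds
dw1 = x1 * ds
dw2 = 1.0 * ds

print(round(dx0, 2), round(dw0, 2))
```

The slide rounds the upstream gradient to 0.2, so its results (0.4 and -0.2) match these values only to within rounding.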

Derivative of sigmoid function
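
For reference, the standard derivation of the sigmoid derivative is sketched below; the slide's own steps are in an image that is not reproduced here, so the layout is an assumption.

$$\sigma(x) = \frac{1}{1 + e^{-x}}, \qquad \frac{d\sigma(x)}{dx} = \frac{e^{-x}}{(1 + e^{-x})^2} = \left(\frac{1 + e^{-x} - 1}{1 + e^{-x}}\right)\left(\frac{1}{1 + e^{-x}}\right) = \big(1 - \sigma(x)\big)\,\sigma(x)$$

Because the derivative depends only on the output value, a sigmoid node can compute its backward pass from what it saved in the forward pass.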

Patterns in backward flow
• add gate: gradient distributor
• max gate: gradient router
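
A minimal sketch of these two patterns, using hypothetical helper names (`add_backward`, `max_backward`) that do not come from the slides:

```python
def add_backward(upstream):
    """Add gate: both inputs receive the upstream gradient unchanged."""
    return upstream, upstream

def max_backward(x, y, upstream):
    """Max gate: the larger input receives the whole upstream gradient,
    the other input receives zero (ties go to x in this sketch)."""
    if x >= y:
        return upstream, 0.0
    return 0.0, upstream

print(add_backward(2.0))            # distributed to both inputs
print(max_backward(3.0, 1.0, 2.0))  # routed to x only
print(max_backward(1.0, 3.0, 2.0))  # routed to y only
```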

Gradients add at branches

Simple chain rule
• $z = f(g(x))$
• $y = g(x)$
• $\frac{dz}{dx} = \frac{dz}{dy}\,\frac{dy}{dx}$

Multiple paths chain rule
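
When a variable reaches the output along several paths, the contributions of the paths are summed: $\frac{dz}{dx} = \sum_i \frac{\partial z}{\partial y_i}\frac{dy_i}{dx}$. The sketch below checks this on a small made-up function; the choice of $y_1 = x^2$, $y_2 = 3x$ and $z = y_1 y_2$ is an assumption for illustration only.

```python
x = 2.0

# Forward pass: x feeds two branches that are later combined.
y1 = x ** 2        # path 1
y2 = 3.0 * x       # path 2
z = y1 * y2        # equals 3 * x**3

# Backward pass: one chain-rule product per path, then add them.
dz_dy1 = y2
dz_dy2 = y1
dy1_dx = 2.0 * x
dy2_dx = 3.0
dz_dx = dz_dy1 * dy1_dx + dz_dy2 * dy2_dx

# Closed-form derivative of 3 * x**3 for comparison.
expected = 9.0 * x ** 2
print(dz_dx, expected)
```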

Modularized implementation: forward / backward API
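
A minimal sketch of what a node with a forward / backward API might look like, assuming a scalar multiply gate. The class name `MultiplyGate` and its method signatures are illustrative and are not taken from any particular framework.

```python
class MultiplyGate:
    """Computes z = x * y and can propagate a gradient back to x and y."""

    def forward(self, x, y):
        # Save the inputs: they are the local gradients needed in backward().
        self.x = x
        self.y = y
        return x * y

    def backward(self, dz):
        # dz is the upstream gradient dL/dz.
        dx = self.y * dz   # dL/dx = dz/dx * dL/dz
        dy = self.x * dz   # dL/dy = dz/dy * dL/dz
        return dx, dy

gate = MultiplyGate()
z = gate.forward(3.0, -4.0)
dx, dy = gate.backward(1.0)
print(z, dx, dy)
```

Keeping the cached inputs on the object is one simple design; it means `forward` must be called before `backward`.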

Caffe Layers

Caffe sigmoid layer

Output as a composite function

$$\text{Output} = a^{[L]} = f\big(z^{[L]}\big) = f\big(W^{[L]} a^{[L-1]}\big) = f\big(W^{[L]} f(W^{[L-1]} a^{[L-2]})\big) = f\Big(W^{[L]} f\big(W^{[L-1]} \cdots f\big(W^{[2]} f(W^{[1]} x)\big)\big)\Big)$$

• For convenience, we use the same activation function for all layers.
• However, output-layer neurons most commonly do not need an activation function (they show class scores or real-valued targets).
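
The nested composition can be written as a loop over layers. This is a sketch under stated assumptions: the function name `forward`, the use of `tanh` as $f$, and the `linear_output` flag are illustrative choices, and biases are left out to mirror the formula.

```python
import numpy as np

def forward(weights, x, f=np.tanh, linear_output=True):
    """Evaluate a^[L] for weights = [W1, ..., WL] applied to input x.

    Each layer computes z = W @ a followed by a = f(z). If linear_output
    is True, the last layer skips the activation and returns raw scores.
    """
    a = x
    last = len(weights)
    for layer, W in enumerate(weights, start=1):
        z = W @ a
        a = z if (linear_output and layer == last) else f(z)
    return a

rng = np.random.default_rng(0)
weights = [rng.standard_normal((4, 3)), rng.standard_normal((2, 4))]
x = rng.standard_normal(3)
scores = forward(weights, x)
print(scores.shape)
```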

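Backpropagation: Notation
• $\mathbf{a}^{[0]} \leftarrow$ Input
• output $\leftarrow \mathbf{a}^{[L]}$
• Layer $l$ takes $\mathbf{a}^{[l-1]}$, computes $\mathbf{z}^{[l]}$, and applies $f(\cdot)$ to obtain $\mathbf{a}^{[l]}$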

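Backpropagation: Last layer gradient
• Forward relations: $a_j^{[l]} = f\big(z_j^{[l]}\big)$ and $z_j^{[l]} = \sum_{i=0}^{M} w_{ij}^{[l]} a_i^{[l-1]}$
• Chain rule for a last-layer weight:

$$\frac{\partial E_n}{\partial w_{ij}^{[L]}} = \frac{\partial E_n}{\partial a_j^{[L]}} \times \frac{\partial a_j^{[L]}}{\partial w_{ij}^{[L]}}$$

• For squared error loss, $E_n = \sum_j \big(a_j^{[L]} - y_j^{(n)}\big)^2$, so

$$\frac{\partial E_n}{\partial a_j^{[L]}} = 2\big(a_j^{[L]} - y_j^{(n)}\big)$$

• The second factor is

$$\frac{\partial a_j^{[L]}}{\partial w_{ij}^{[L]}} = f'\big(z_j^{[L]}\big)\,\frac{\partial z_j^{[L]}}{\partial w_{ij}^{[L]}} = f'\big(z_j^{[L]}\big)\, a_i^{[L-1]}$$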

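Backpropagation: gradient with respect to $w_{ij}^{[l]}$
• With $a_j^{[l]} = f\big(z_j^{[l]}\big)$ and $z_j^{[l]} = \sum_{i=0}^{M} w_{ij}^{[l]} a_i^{[l-1]}$:

$$\frac{\partial E_n}{\partial w_{ij}^{[l]}} = \frac{\partial E_n}{\partial a_j^{[l]}} \times \frac{\partial a_j^{[l]}}{\partial w_{ij}^{[l]}} = \delta_j^{[l]} \times f'\big(z_j^{[l]}\big) \times a_i^{[l-1]}$$

• $\delta_j^{[l]} = \frac{\partial E_n}{\partial a_j^{[l]}}$ is the sensitivity of the error to $a_j^{[l]}$
• Sensitivity vectors can be obtained by running a backward process in the network architecture (hence the name backpropagation).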

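$\boldsymbol{\delta}^{[l-1]}$ from $\boldsymbol{\delta}^{[l]}$
We will compute $\boldsymbol{\delta}^{[l-1]}$ from $\boldsymbol{\delta}^{[l]}$, using $a_i^{[l-1]} = f\big(z_i^{[l-1]}\big)$ and $z_j^{[l]} = \sum_{i=0}^{M} w_{ij}^{[l]} a_i^{[l-1]}$, where $d^{[l]}$ is the number of units in layer $l$:

$$\begin{aligned}
\delta_i^{[l-1]} &= \frac{\partial E_n}{\partial a_i^{[l-1]}} \\
&= \sum_{j=1}^{d^{[l]}} \frac{\partial E_n}{\partial a_j^{[l]}} \times \frac{\partial a_j^{[l]}}{\partial z_j^{[l]}} \times \frac{\partial z_j^{[l]}}{\partial a_i^{[l-1]}} \\
&= \sum_{j=1}^{d^{[l]}} \frac{\partial E_n}{\partial a_j^{[l]}} \times f'\big(z_j^{[l]}\big) \times w_{ij}^{[l]} \\
&= \sum_{j=1}^{d^{[l]}} \delta_j^{[l]} \times f'\big(z_j^{[l]}\big) \times w_{ij}^{[l]}
\end{aligned}$$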

Find and save $\boldsymbol{\delta}^{[L]}$
• Called the error, computed recursively in a backward manner
• For the final layer $l = L$:

$$\delta_j^{[L]} = \frac{\partial E_n}{\partial a_j^{[L]}}$$

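Backpropagation of Errors
• Error for a two-layer network ($L = 2$) with $K$ outputs: $E_n = \sum_j \big(a_j^{[L]} - y_j^{(n)}\big)^2$
• Output layer, with sensitivities $\delta_1^{[2]}, \dots, \delta_K^{[2]}$:

$$\delta_j^{[2]} = \frac{\partial E_n}{\partial a_j^{[2]}} = 2\big(a_j^{[2]} - y_j^{(n)}\big)$$

• Hidden layer, obtained by propagating backward through the weights $w_{ij}^{[2]}$:

$$\delta_i^{[1]} = \sum_{j=1}^{d^{[2]}} \delta_j^{[2]} \times f'\big(z_j^{[2]}\big) \times w_{ij}^{[2]}$$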
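
A minimal NumPy sketch of this backward recursion for a two-layer network follows. It assumes $\delta$ denotes the derivative of the error with respect to an activation $a$, a sigmoid activation in both layers, a squared-error loss, and no bias units; the function names and array shapes are illustrative and not taken from the slides.

```python
import numpy as np

def f(z):
    # Activation function; sigmoid is an assumption for this sketch.
    return 1.0 / (1.0 + np.exp(-z))

def f_prime(z):
    s = f(z)
    return s * (1.0 - s)

def forward(W1, W2, x):
    # W[i, j] is the weight from unit i of the previous layer to unit j.
    z1 = W1.T @ x
    a1 = f(z1)
    z2 = W2.T @ a1
    a2 = f(z2)
    return z1, a1, z2, a2

def error(W1, W2, x, y):
    a2 = forward(W1, W2, x)[3]
    return np.sum((a2 - y) ** 2)

def backprop(W1, W2, x, y):
    z1, a1, z2, a2 = forward(W1, W2, x)
    delta2 = 2.0 * (a2 - y)                        # dE/da at the output layer
    grad_W2 = np.outer(a1, delta2 * f_prime(z2))   # delta_j * f'(z_j) * a_i
    delta1 = W2 @ (delta2 * f_prime(z2))           # sum_j delta_j f'(z_j) w_ij
    grad_W1 = np.outer(x, delta1 * f_prime(z1))
    return grad_W1, grad_W2

rng = np.random.default_rng(0)
x = rng.standard_normal(3)
y = np.array([0.0, 1.0])
W1 = rng.standard_normal((3, 4))
W2 = rng.standard_normal((4, 2))
grad_W1, grad_W2 = backprop(W1, W2, x, y)

# Spot-check one first-layer weight with a centered finite difference.
h = 1e-6
W1_plus, W1_minus = W1.copy(), W1.copy()
W1_plus[0, 0] += h
W1_minus[0, 0] -= h
numeric = (error(W1_plus, W2, x, y) - error(W1_minus, W2, x, y)) / (2 * h)
print(numeric, grad_W1[0, 0])
```

The product `delta2 * f_prime(z2)` is computed for both the weight gradient and the next sensitivity, so a real implementation would likely store it once.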

Gradients for vectorized code

Vectorized operations

Always check: the gradient with respect to a variable should have the same shape as the variable.
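
As a small illustration of the shape rule, here is a sketch for a matrix multiply. The shapes and the random upstream gradient `dD` are assumptions made up for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
W = rng.standard_normal((5, 10))
X = rng.standard_normal((10, 3))

# Forward pass.
D = W @ X                            # shape (5, 3)

# Stand-in for the gradient of the loss with respect to D.
dD = rng.standard_normal(D.shape)

# Backward pass: the shapes dictate where the transposes go.
dW = dD @ X.T                        # (5, 3) @ (3, 10) -> (5, 10)
dX = W.T @ dD                        # (10, 5) @ (5, 3) -> (10, 3)

assert dW.shape == W.shape
assert dX.shape == X.shape
```

Matching shapes is a necessary check rather than a proof of correctness, so it complements a numerical gradient check instead of replacing it.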

SVM example

Summary
• Neural nets may be very large: it is impractical to write down the gradient formula by hand for all parameters
• Backpropagation = recursive application of the chain rule along a computational graph to compute the gradients of all inputs/parameters/intermediates
• Implementations maintain a graph structure, where the nodes implement the forward() / backward() API
– forward: compute the result of an operation and save any intermediates needed for gradient computation in memory
– backward: apply the chain rule to compute the gradient of the loss function with respect to the inputs
