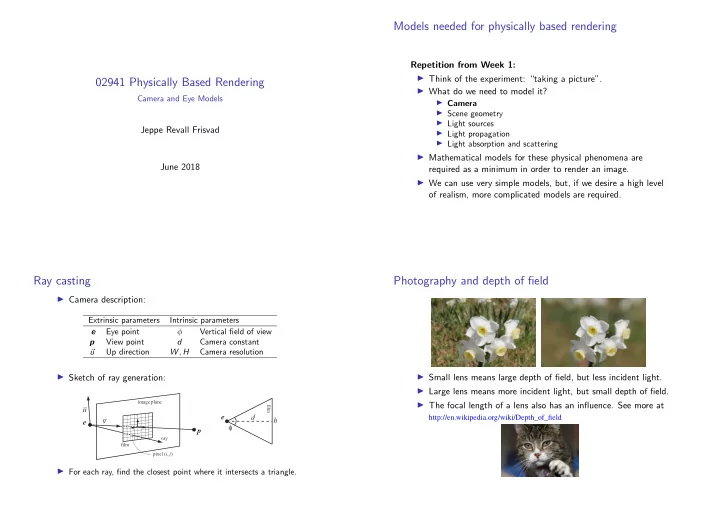

02941 Physically Based Rendering
Camera and Eye Models
Jeppe Revall Frisvad
June 2018

Models needed for physically based rendering
Repetition from Week 1:
◮ Think of the experiment: "taking a picture".
◮ What do we need to model it?
  ◮ Camera
  ◮ Scene geometry
  ◮ Light sources
  ◮ Light propagation
  ◮ Light absorption and scattering
◮ Mathematical models for these physical phenomena are required as a minimum in order to render an image.
◮ We can use very simple models, but, if we desire a high level of realism, more complicated models are required.

Ray casting
◮ Camera description:
  ◮ Extrinsic parameters: e (eye point), p (view point), u (up direction)
  ◮ Intrinsic parameters: φ (vertical field of view), d (camera constant), W, H (camera resolution)
◮ Sketch of ray generation:
  [Figure labels: image plane, film, e, d, u, v, h, φ, p, ray, film pixel (i, j)]
◮ For each ray, find the closest point where it intersects a triangle.

Photography and depth of field
◮ Small lens means large depth of field, but less incident light.
◮ Large lens means more incident light, but small depth of field.
◮ The focal length of a lens also has an influence. See more at http://en.wikipedia.org/wiki/Depth_of_field
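The ray generation step under "Ray casting" can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the course framework's code: the function names (`camera_basis`, `pixel_ray`), the right-handed basis construction, and the choice that pixel index j increases in the up direction are all hypothetical. The symbols e, p, u, φ, d, W, H are the camera parameters listed in the slide.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def normalize(v):
    length = math.sqrt(sum(x * x for x in v))
    return tuple(x / length for x in v)

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def camera_basis(eye, view_point, up):
    """Orthonormal camera frame from the extrinsic parameters e, p, u (assumed convention)."""
    view_dir = normalize(sub(view_point, eye))   # from e towards p
    right = normalize(cross(view_dir, up))       # horizontal film axis
    true_up = cross(right, view_dir)             # vertical film axis, orthogonal to the other two
    return view_dir, right, true_up

def pixel_ray(i, j, eye, view_point, up, fov_deg, d, W, H):
    """Ray from the eye point through the centre of film pixel (i, j)."""
    view_dir, right, true_up = camera_basis(eye, view_point, up)
    # Film height follows from the vertical field of view and the camera constant d.
    film_height = 2.0 * d * math.tan(math.radians(fov_deg) / 2.0)
    film_width = film_height * W / H
    # Pixel centre in film coordinates, with (0, 0) at the middle of the film.
    x = ((i + 0.5) / W - 0.5) * film_width
    y = ((j + 0.5) / H - 0.5) * film_height
    target = tuple(d * w + x * r + y * t
                   for w, r, t in zip(view_dir, right, true_up))
    return eye, normalize(target)
```

Each returned ray would then be intersected with the scene triangles, keeping the closest hit, as the last bullet of the slide describes.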

A model for thin lenses
[Figure labels: image plane, focal plane, aperture ℓ, d, f, z, δ]
◮ d is the camera constant: the distance between the lens and the image plane.
◮ f is the focal length: the distance to the focal plane where collimated light is focused in a point.
◮ z is the distance to the photographed object.
◮ ℓ is the aperture: the diameter of the lens.
◮ δ is the diameter of the circle of confusion for objects at the distance z.

The thin lens equation
◮ An image will be perfectly sharp for objects at the distance $z_d$, where
  $$\frac{1}{z_d} + \frac{1}{d} = \frac{1}{f}.$$
◮ Using the concept of similar triangles, we may derive
  $$\delta = \left|\frac{\Delta d}{d + \Delta d}\right| \ell = \left|\frac{z_d}{z} - 1\right| \frac{f\,\ell}{z_d - f}.$$

Modelling depth of field
◮ Centering the circle of confusion around the eye point, we can simulate depth of field by sampling different eye positions within the circle of confusion.
◮ Then we need a circle of confusion that is independent of z.
◮ Suppose we let z go to infinity, then
  $$\delta_\infty = \lim_{z \to \infty} \delta = \lim_{z \to \infty} \left|\frac{z_d}{z} - 1\right| \frac{f\,\ell}{z_d - f} = \frac{f\,\ell}{z_d - f}.$$
◮ Now, we can sample an offset inside the circle of confusion with diameter $\delta_\infty$.
◮ Blending images seen from slightly displaced viewers that look at the same focal point will result in a depth of field effect.
◮ Error (considering similar triangles):
  $$\frac{\delta_\infty}{z_d} = \frac{\delta_{\mathrm{model}}}{|z_d - z|} \quad\Leftrightarrow\quad \delta_{\mathrm{model}} = \frac{|z_d - z|}{z_d}\,\delta_\infty = \frac{z}{z_d}\,\delta.$$
◮ Thus, since f is constant and zoom changes d, the camera has largest depth of field when zoomed out as much as possible.

6. Advanced Lighting and Shading

Figure 6.27. Depth of field. The viewer's location is moved a small amount, keeping the view direction pointing at the focal point, and the images are accumulated.

6.10 Reflections

Reflection, refraction, and shadowing are all examples of global illumination effects in which one object in a scene affects the rendering of another. Effects such as reflections and shadows contribute greatly to increasing the realism in a rendered image, but they perform another important task as well. They are used by the viewer as cues to determine spatial relationships, as shown in Figure 6.28.

Figure 6.28. The left image was rendered without shadow and reflections, and so it is hard to see where the object is truly located. The right image was rendered with both shadow and reflections, and the spatial relationships are easier to estimate. (Car model is reused courtesy of Nya Perspektiv Design AB.)

Environment mapping techniques for providing reflections of objects at a distance have been covered in Section 5.7.4 and 6.4.2, with reflected rays computed using Equation 4.5 on page 76. The limitation of such techniques is that they work on the assumption that the reflected objects are located far from the reflector, so that the same texture can be used by all reflection rays. Generating planar reflections of nearby objects will be presented in this section, along with methods for rendering frosted glass and handling curved reflectors.

6.10.1 Planar Reflections

Planar reflection, by which we mean reflection off a flat surface such as a mirror, is a special case of reflection off arbitrary surfaces. As often occurs with special cases, planar reflections are easier to implement and execute more rapidly than general reflections.

An ideal reflector follows the law of reflection, which states that the angle of incidence is equal to the angle of reflection. That is, the angle between the incident ray and the normal is equal to the angle between the reflected ray and the normal. This is depicted in Figure 6.29, which illustrates a simple object that is reflected in a plane. The figure also shows an "image" of the reflected object. Due to the law of reflection, the reflected image of the object is simply the object itself physically reflected.

Figure 6.29. Reflection in a plane, showing angle of incidence and reflection, the reflected geometry, and the reflector.
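Returning to the thin lens slides: the relations for $z_d$, $\delta$, $\delta_\infty$ and $\delta_{\mathrm{model}}$ can be checked numerically, and the "sample an offset inside the circle of confusion" step can be sketched as below. This is a hedged illustration rather than the course's or the OptiX SDK's implementation. All function names are hypothetical, the uniform disk sampling is one common choice that the slides do not prescribe, and the offset is assumed to be applied along the camera's two film axes (`right`, `true_up`).

```python
import math
import random

def focus_distance(f, d):
    """Distance z_d in perfect focus, from 1/z_d + 1/d = 1/f."""
    return 1.0 / (1.0 / f - 1.0 / d)

def circle_of_confusion(z, z_d, f, aperture):
    """delta = |z_d/z - 1| * f*l / (z_d - f)."""
    return abs(z_d / z - 1.0) * f * aperture / (z_d - f)

def coc_infinity(z_d, f, aperture):
    """Limit of delta as z goes to infinity: f*l / (z_d - f)."""
    return f * aperture / (z_d - f)

def coc_model(z, z_d, f, aperture):
    """Blur produced when eye positions are sampled in a disk of diameter delta_infinity."""
    return abs(z_d - z) / z_d * coc_infinity(z_d, f, aperture)

def sample_eye_offset(diameter, rng):
    """Uniformly distributed 2D offset inside a disk of the given diameter."""
    radius = 0.5 * diameter * math.sqrt(rng.random())  # sqrt gives uniform area density
    angle = 2.0 * math.pi * rng.random()
    return radius * math.cos(angle), radius * math.sin(angle)

def depth_of_field_ray(eye, focal_point, right, true_up, diameter, rng):
    """Ray from a displaced eye position that still looks at the same focal point."""
    dx, dy = sample_eye_offset(diameter, rng)
    origin = tuple(e + dx * r + dy * t for e, r, t in zip(eye, right, true_up))
    to_focus = tuple(p - o for p, o in zip(focal_point, origin))
    length = math.sqrt(sum(c * c for c in to_focus))
    return origin, tuple(c / length for c in to_focus)

# Example values (assumed, in metres): 50 mm focal length, 25 mm aperture.
f, d, aperture = 0.05, 0.0525, 0.025
z_d = focus_distance(f, d)                  # about 1.05
delta_inf = coc_infinity(z_d, f, aperture)  # about 0.00125
```

Averaging the images rendered from many such rays per pixel gives the blended result described in the "Modelling depth of field" bullets. Points at distance $z_d$ stay sharp because every sampled ray passes through the same focal point.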

Example
◮ A demo program is available in the OptiX SDK.

02941 Physically Based Rendering
Glare and Fourier Optics
Jeppe Revall Frisvad
June 2018

Examples of artistic and simulated glare
◮ Painting by Carl Saltzmann, 1884
◮ Renderings by Kakimoto et al. [2005]
◮ Columbia Pictures Intro Video
◮ Why is it not in photos?

Categories of glare
◮ Glare
  ◮ All people experience glare to some degree.
  ◮ An interference with visual perception caused by a bright light source or reflection.
  ◮ A form of visual noise.
◮ Discomfort glare
  ◮ Glare which is distracting or uncomfortable.
  ◮ Does not significantly reduce the ability to see information needed for activities.
  ◮ The sensation one experiences when the overall illumination is too bright, e.g. on a snow field under bright sun.
◮ Disability glare
  ◮ Glare which reduces the ability to perceive the visual information needed for a particular activity.
  ◮ A haze of veiling luminance that decreases contrast and reduces visibility.
  ◮ Typically caused by pathological defects in the eye.

Anatomy of the human eye
[Figure: anatomy of the human eye; labels not recoverable]
◮ Glare is due to particle scattering.

Ocular haloes and coronas
◮ The glare phenomenon as described by Descartes in 1637:
  [Figure]
◮ This cannot be captured by a camera as it happens inside the eye, but could we simulate this?
◮ Fourier developed his transform to solve heat transfer problems. It is well-known that there are many other uses.
◮ In Fourier optics, it is used to compute the scattering of particles that we can model as obstacles in a plane.
◮ This is particularly useful for modelling lens systems such as the human eye.

Related Work
◮ Kakimoto et al. [2004] "Glare Generation Based on Wave Optics"
◮ van den Berg et al. [2005] "Physical Model and Simulation of the Fine Needles Radiating from Point Light Sources"
◮ Spencer et al. [1995] "Physically-Based Glare Effects for Digital Images"
◮ Simpson [1953] "Ocular Haloes and Coronas"
◮ Nakamae et al. [1992] "A Lighting Model Aiming at Drive Simulators"
◮ Yoshida et al. [2008] "Brightness of the Glare Illusion"
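The Fourier optics idea in the bullets above, scattering by obstacles in a plane, can be illustrated with a small Python sketch. The slides do not give a formula, so this rests on a stated assumption: in the far-field (Fraunhofer) approximation for a single wavelength, the diffraction pattern intensity is proportional to the squared magnitude of the Fourier transform of the aperture's transmission mask. The function names, the tiny grid, and the naive DFT (used instead of an FFT library to stay dependency-free) are all hypothetical choices for illustration.

```python
import cmath
import math

def dft_1d(values):
    """Naive discrete Fourier transform of a sequence (O(n^2), fine for tiny grids)."""
    n = len(values)
    return [sum(v * cmath.exp(-2j * math.pi * k * m / n) for m, v in enumerate(values))
            for k in range(n)]

def dft_2d(grid):
    """Separable 2D DFT: transform every row, then every column."""
    rows = [dft_1d(row) for row in grid]
    cols = [dft_1d(col) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]  # transpose back to [row][column]

def circular_aperture(n, radius, obstacles=()):
    """Pupil mask: 1 where light passes, 0 where it is blocked.

    `obstacles` is a list of (row, column) cells made opaque, standing in
    for scattering particles in the aperture plane.
    """
    centre = (n - 1) / 2.0
    mask = [[1.0 if (r - centre) ** 2 + (c - centre) ** 2 <= radius ** 2 else 0.0
             for c in range(n)] for r in range(n)]
    for r, c in obstacles:
        mask[r][c] = 0.0
    return mask

def glare_pattern(aperture):
    """Normalised intensity |F|^2 of the aperture's Fourier transform."""
    spectrum = dft_2d(aperture)
    intensity = [[abs(v) ** 2 for v in row] for row in spectrum]
    total = sum(sum(row) for row in intensity)
    return [[v / total for v in row] for row in intensity]

# A 16 x 16 pupil with two opaque particles (assumed positions).
pupil = circular_aperture(16, 6.0, obstacles=[(7, 7), (5, 9)])
pattern = glare_pattern(pupil)
```

In this sketch `pattern[0][0]` holds the undiffracted (zero-frequency) energy, and the remaining entries show how the obstacles spread light away from the source direction. A practical implementation would use a larger grid, an FFT, and a sum over wavelengths.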
