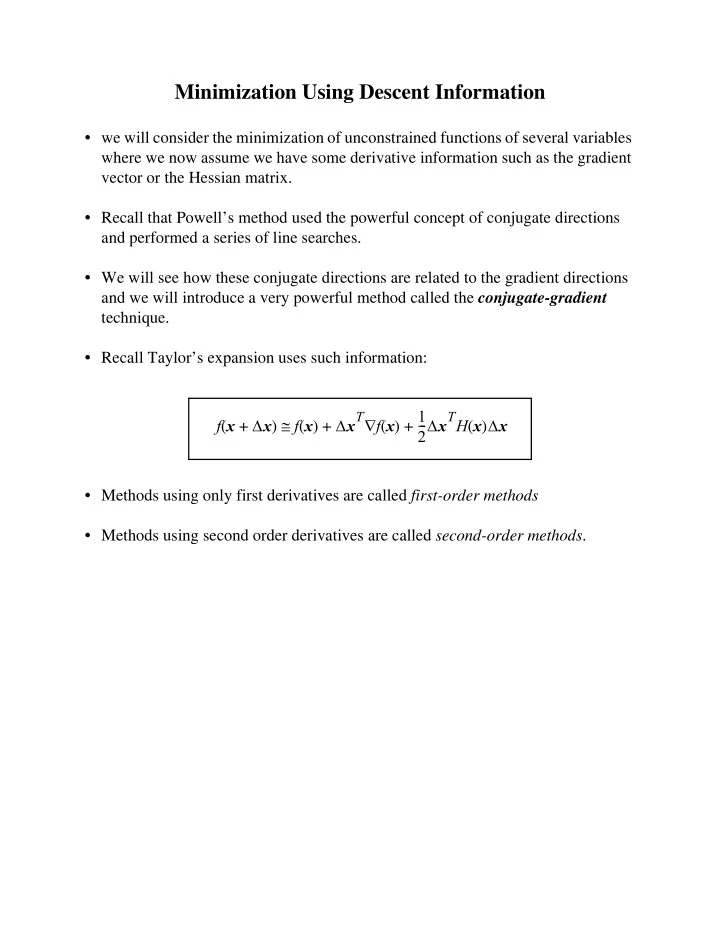

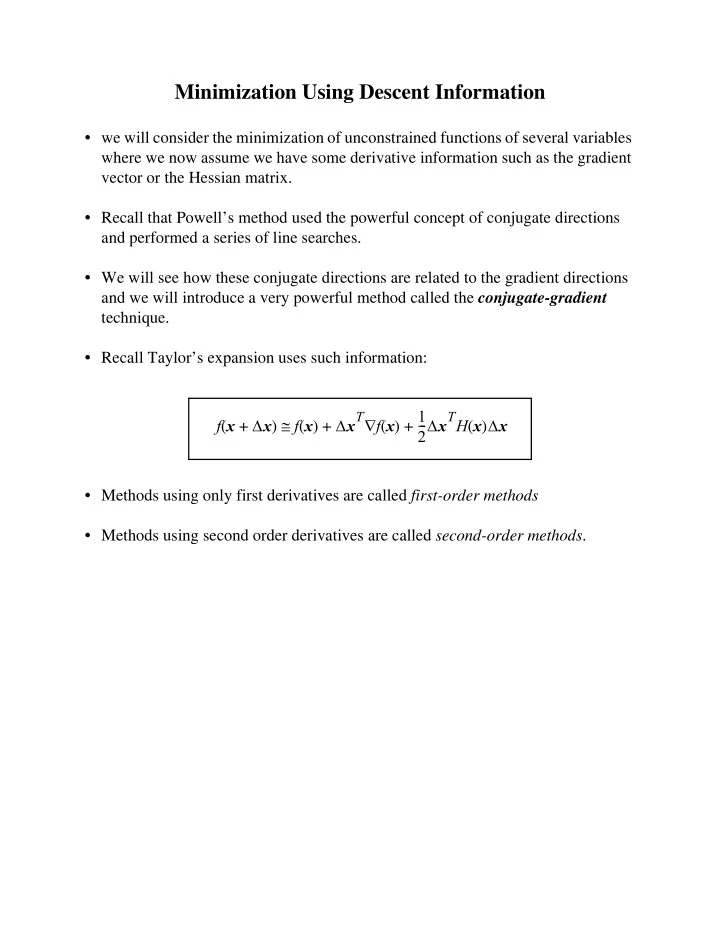

Minimization Using Descent Information
• We will consider the minimization of unconstrained functions of several variables, where we now assume we have some derivative information such as the gradient vector or the Hessian matrix.
• Recall that Powell’s method used the powerful concept of conjugate directions and performed a series of line searches.
• We will see how these conjugate directions are related to the gradient directions, and we will introduce a very powerful method called the conjugate-gradient technique.
• Recall that Taylor’s expansion uses such information:

$$f(x + \Delta x) \cong f(x) + \nabla f(x)^T \Delta x + \frac{1}{2} \Delta x^T H(x)\, \Delta x$$

• Methods using only first derivatives are called first-order methods.
• Methods using second derivatives are called second-order methods.
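As a small numerical illustration of the Taylor expansion, the sketch below compares the first-order and second-order approximations against the exact function value. The test function `f`, its hand-derived `grad` and `hess`, and the chosen point and step are all assumptions made for this example only.

```python
import math

# Hypothetical test function: f(x) = exp(x1) + x1 * x2^2
def f(x):
    return math.exp(x[0]) + x[0] * x[1] ** 2

# Gradient of f, derived by hand
def grad(x):
    return [math.exp(x[0]) + x[1] ** 2, 2.0 * x[0] * x[1]]

# Hessian of f, derived by hand (symmetric 2x2 matrix)
def hess(x):
    return [[math.exp(x[0]), 2.0 * x[1]],
            [2.0 * x[1], 2.0 * x[0]]]

def taylor_first_order(x, dx):
    g = grad(x)
    return f(x) + sum(gi * di for gi, di in zip(g, dx))

def taylor_second_order(x, dx):
    H = hess(x)
    quad = sum(dx[i] * H[i][j] * dx[j] for i in range(2) for j in range(2))
    return taylor_first_order(x, dx) + 0.5 * quad

x = [0.5, -1.0]      # expansion point (arbitrary choice)
dx = [0.01, 0.02]    # small step (arbitrary choice)
exact = f([x[0] + dx[0], x[1] + dx[1]])

err1 = abs(exact - taylor_first_order(x, dx))   # error using the gradient only
err2 = abs(exact - taylor_second_order(x, dx))  # error using gradient and Hessian
print(err1, err2)
```

For a small step, the second-order error should be noticeably smaller than the first-order error, since the Hessian term captures the leading curvature.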

The Gradient Vector Re-examined
• Recall that the gradient vector of a function $f(x)$, $x \in R^n$, is

$$g(x) = \nabla f(x) = \begin{bmatrix} \partial f / \partial x_1 \\ \partial f / \partial x_2 \\ \vdots \\ \partial f / \partial x_n \end{bmatrix}$$

• Consider a differential length

$$dx = \begin{bmatrix} dx_1 \\ dx_2 \\ \vdots \\ dx_n \end{bmatrix} = u\, ds$$

where $\|u\| = 1$ and $u$ holds the directional information of the differential.
• Now a change in the function $f(x)$ along $dx$ is given by

$$df = \sum_{i=1}^{n} \frac{\partial f}{\partial x_i}\, dx_i = (\nabla f(x), dx) = (\nabla f(x), u\, ds) = ds\, (\nabla f(x), u)$$

so the rate of change of $f(x)$ along the arbitrary direction $u$ is given by

$$\frac{df}{ds} = (\nabla f(x), u)$$

• On the other hand, along a direction $u$, the function $f(x)$ is described as $f(x + \alpha u)$, and thus the rate of change along this direction is also written as

$$\frac{df}{ds} = (\nabla f(x), u) = \frac{d}{d\alpha} f(x + \alpha u) \tag{1}$$

• We can examine (1) to see which direction gives the maximum rate of increase.
• A well-known inequality in functional analysis is the Cauchy-Schwarz inequality, which says

$$(a, b) \le \|a\|\, \|b\| \tag{2}$$

Applying this to (1) we find

$$(\nabla f(x), u) \le \|\nabla f(x)\|\, \|u\| = \|\nabla f(x)\| \tag{3}$$

and letting $u = \dfrac{\nabla f(x)}{\|\nabla f(x)\|}$ we have

$$\left( \nabla f(x), \frac{\nabla f(x)}{\|\nabla f(x)\|} \right) = \frac{\|\nabla f(x)\|^2}{\|\nabla f(x)\|} = \|\nabla f(x)\| \tag{4}$$

which shows that for this choice of direction, the directional derivative $(\nabla f(x), u)$ reaches its maximum value.
• Therefore we say that $\nabla f(x)$ is the direction of maximum increase and $-\nabla f(x)$ is the direction of maximum decrease (also: steepest ascent and steepest descent).
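The bound in (3) and the maximizing direction in (4) can be checked numerically. The following is a minimal sketch: the function `f`, the evaluation point, and the sampling of unit directions are assumptions chosen for illustration.

```python
import math

# Hypothetical test function: f(x) = x1^2 + 3 * x2^2
def f(x):
    return x[0] ** 2 + 3.0 * x[1] ** 2

def grad_f(x):
    return [2.0 * x[0], 6.0 * x[1]]

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def norm(a):
    return math.sqrt(dot(a, a))

def directional_derivative(x, u):
    # Rate of change of f along the unit direction u: (grad f(x), u)
    return dot(grad_f(x), u)

x = [1.0, 1.0]
g = grad_f(x)
u_star = [gi / norm(g) for gi in g]  # normalized gradient direction

# Sample many unit directions and record the largest directional derivative
angles = [2.0 * math.pi * i / 3600 for i in range(3600)]
best_sampled = max(
    directional_derivative(x, [math.cos(t), math.sin(t)]) for t in angles
)

# Central finite difference of f(x + alpha * u_star) at alpha = 0, as in (1)
h = 1e-6
x_plus = [xi + h * ui for xi, ui in zip(x, u_star)]
x_minus = [xi - h * ui for xi, ui in zip(x, u_star)]
finite_diff = (f(x_plus) - f(x_minus)) / (2.0 * h)

print(norm(g), directional_derivative(x, u_star), best_sampled, finite_diff)
```

No sampled direction should exceed $\|\nabla f(x)\|$, and the normalized gradient direction should attain it.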

Cauchy’s Method (Steepest Descent)
• A logical minimization strategy is to use the direction of steepest descent and perform a line search in that direction.
• Assume we are at a point $x_k$ and that we have calculated the gradient at this point, $g_k = g(x_k) = \nabla f(x_k)$.
• Then we can minimize along $g_k$ starting from $x_k$:

$$\alpha_k = \arg\min_{\alpha} f(x_k + \alpha g_k) \tag{5}$$

and arrive at the new point

$$x_{k+1} = x_k + \alpha_k g_k. \tag{6}$$

Algorithm: Cauchy’s Method of Steepest Descent
1. input: $f(x)$, $g(x)$, $x_0$, $g_{tol}$, $k_{max}$
2. set: $x = x_0$, $g = g(x)$, $k = 0$
3. while $k < k_{max}$ and $\|g\| > g_{tol}$
4. set: $\alpha = \arg\min_{\alpha} f(x + \alpha g)$
5. set: $x = x + \alpha g$
6. set: $g = g(x)$, $k = k + 1$
7. end

• Note that we don’t bother to take the negative of $g$ in the line search, since this is automatically accomplished by allowing negative values of $\alpha$.
• The iterations end when either the maximum number of iterations has been reached or the norm of the gradient at the current point is less than a user-defined value $g_{tol}$, which is a value close to zero.
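The algorithm above can be sketched in a few lines if we restrict attention to a quadratic objective $Q(x) = a + b^T x + \frac{1}{2} x^T C x$ with symmetric positive definite $C$. This restriction is an assumption made here so that the line search has the closed form $\alpha = -(g, g)/(g, Cg)$; a general $f$ would need a numerical one-dimensional minimizer instead. The matrix `C`, vector `b`, and function names are illustrative.

```python
import math

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def norm(a):
    return math.sqrt(dot(a, a))

def matvec(C, v):
    return [dot(row, v) for row in C]

def steepest_descent_quadratic(C, b, x0, g_tol=1e-8, k_max=1000):
    """Cauchy's method for Q(x) = a + b^T x + 0.5 x^T C x (assumed SPD C)."""
    x = list(x0)
    g = [ci + bi for ci, bi in zip(matvec(C, x), b)]  # g(x) = C x + b
    k = 0
    while k < k_max and norm(g) > g_tol:
        # Exact line search along g; alpha comes out negative (descent)
        alpha = -dot(g, g) / dot(g, matvec(C, g))
        x = [xi + alpha * gi for xi, gi in zip(x, g)]
        g = [ci + bi for ci, bi in zip(matvec(C, x), b)]
        k += 1
    return x, k

# Illustrative problem whose minimizer is x* = [1, 1]
C = [[2.0, 0.0], [0.0, 20.0]]
b = [-2.0, -20.0]
x_min, iterations = steepest_descent_quadratic(C, b, [0.0, 0.0])
print(x_min, iterations)
```

The returned point should be close to `[1, 1]`. Note that the sign of `alpha` is negative, which matches the remark that the negative gradient direction is obtained automatically.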

• The successive directions of minimization in the method of steepest descent are orthogonal to each other.
• This can be shown as follows: assume that we are at a point $x_k$ and we need to find

$$\alpha_k = \arg\min_{\alpha} f(x_k + \alpha g_k) \tag{7}$$

in order to arrive at the new point

$$x_{k+1} = x_k + \alpha_k g_k. \tag{8}$$

• We can find $\alpha_k$ by setting the derivative of $F(\alpha) = f(x_k + \alpha g_k)$ with respect to $\alpha$ equal to zero, which from (1) we can write as

$$\frac{dF(\alpha)}{d\alpha} = (\nabla f(x_{k+1}), g_k) = (g_{k+1}, g_k) = 0. \tag{9}$$

This immediately shows the orthogonality between the successive directions $g_{k+1}$ and $g_k$.
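The orthogonality in (9) can be observed numerically. The sketch below runs a few exact-line-search steps on a quadratic and records $(g_{k+1}, g_k)$ at each step. The quadratic (matrix `C`, vector `b`) and the closed-form step length are assumptions for illustration.

```python
def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def matvec(C, v):
    return [dot(row, v) for row in C]

# Illustrative quadratic with gradient g(x) = C x + b
C = [[2.0, 0.0], [0.0, 20.0]]
b = [-2.0, -20.0]

def gradient(x):
    return [ci + bi for ci, bi in zip(matvec(C, x), b)]

x = [0.0, 0.0]
g = gradient(x)
inner_products = []
for _ in range(5):
    # Exact minimizer of Q(x + alpha * g) for a quadratic Q
    alpha = -dot(g, g) / dot(g, matvec(C, g))
    x = [xi + alpha * gi for xi, gi in zip(x, g)]
    g_next = gradient(x)
    inner_products.append(dot(g_next, g))  # should vanish, as in (9)
    g = g_next

print(inner_products)
```

Each recorded inner product should be zero up to floating-point rounding.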

• Now, although it may seem like a good idea to minimize along the direction of steepest descent, it turns out that this method is not very efficient.
• For relatively complicated functions, this method will tend to zig-zag towards the minimum.

[Figure: Zig-zagging effect of Cauchy’s method, showing successive gradient directions $g_1$ and $g_2$ in the $(x_1, x_2)$ plane.]

• Note that since successive directions of descent are always orthogonal, in two dimensions the algorithm searches in only two directions.
• This makes the algorithm slow to converge to the minimum, and it is generally not recommended.
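To get a feel for how slow the zig-zag can be, the sketch below counts steepest-descent iterations on the two-variable quadratic $Q(x) = \frac{1}{2}(x_1^2 + \kappa x_2^2)$ for a few values of $\kappa$. The objective, the starting point $(\kappa, 1)$, and the tolerance are assumptions picked for illustration; the starting point is chosen so that the zig-zag is pronounced.

```python
import math

def count_iterations(kappa, g_tol=1e-8, k_max=100000):
    """Steepest descent with exact line search on Q = 0.5*(x1^2 + kappa*x2^2)."""
    x = [kappa, 1.0]                 # illustrative starting point
    g = [x[0], kappa * x[1]]         # gradient of Q
    k = 0
    while k < k_max and math.hypot(g[0], g[1]) > g_tol:
        Cg = [g[0], kappa * g[1]]    # C g with C = diag(1, kappa)
        alpha = -(g[0] * g[0] + g[1] * g[1]) / (g[0] * Cg[0] + g[1] * Cg[1])
        x = [x[0] + alpha * g[0], x[1] + alpha * g[1]]
        g = [x[0], kappa * x[1]]
        k += 1
    return k

counts = {kappa: count_iterations(kappa) for kappa in (1.0, 10.0, 100.0)}
print(counts)
```

With circular contours ($\kappa = 1$) a single line search reaches the minimum, while more elongated contours require many more iterations.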

The Conjugate-Gradient Method
• It turns out that if we have access to the gradient of the objective function, then we can determine conjugate directions relatively efficiently.
• Recall that Powell’s conjugate direction method requires $n$ single-variable minimizations per iteration in order to determine one new conjugate direction at the end of the iteration. This results in approximately $n^2$ line minimizations to find the minimum of a quadratic function.
• In the conjugate-gradient algorithm, access to the gradient of $f(x)$, that is $g(x) = \nabla f(x)$, allows us to set up a new conjugate direction after every line minimization.
• Consider the quadratic function

$$Q(x) = a + b^T x + \frac{1}{2} x^T C x \tag{10}$$

where $x \in R^n$.
• We want to perform successive line minimizations along conjugate directions, say $s(x_k)$, where $x_k$ is the current search point, and we minimize to the next point as

$$x_{k+1} = x_k + \lambda_k s(x_k). \tag{11}$$

• Now, how do we find these conjugate directions $s_k = s(x_k)$ given previous information?

• Expand the search directions in terms of the gradient at the current point, $g_k = \nabla f(x_k)$, and a linear combination of the previous search directions:

$$s_k = -g_k + \sum_{i=0}^{k-1} \gamma_i s_i \tag{12}$$

where we start with the initial search direction as the steepest descent direction, $s_0 = -g_0$.
• The coefficients of the expansion, $\gamma_i$, $i = 0, \ldots, k-1$, are to be chosen so that the $s_k$ are $C$-conjugate.
• Therefore, we have

$$s_1 = -g_1 + \gamma_0 s_0 = -g_1 - \gamma_0 g_0 \tag{13}$$

and we require that

$$(s_1, C s_0) = 0 = (-g_1 - \gamma_0 g_0,\; C s_0) \tag{14}$$

but $x_1 = x_0 + \lambda_0 s_0$, which can be solved for $s_0$ as

$$s_0 = \frac{x_1 - x_0}{\lambda_0} = \frac{\Delta x_0}{\lambda_0} \tag{15}$$

where $\Delta$ is the forward difference operator, so that $\Delta x_0 = x_1 - x_0$. Using this in (14) we have

$$\left( -g_1 - \gamma_0 g_0,\; C \frac{\Delta x_0}{\lambda_0} \right) = 0 \tag{16}$$

but $g(x) = C x + b$, which means that we can set

$$\Delta g_0 = g_1 - g_0 = C (x_1 - x_0) = C \Delta x_0 \tag{17}$$

Substituting this into (16), we have

$$\left( -g_1 - \gamma_0 g_0,\; \frac{\Delta g_0}{\lambda_0} \right) = 0$$

$$\gamma_0 (g_0, \Delta g_0) = -(g_1, \Delta g_0)$$

and finally

$$\gamma_0 = -\frac{(\Delta g_0, g_1)}{(\Delta g_0, g_0)}. \tag{18}$$

• We can further reduce the numerator as follows:

$$(\Delta g_0, g_1) = (g_1 - g_0, g_1) = (g_1, g_1) - (g_0, g_1) = \|g_1\|^2 \tag{19}$$

since $(g_0, g_1) = 0$ (successive directions of minimization are orthogonal).
• The denominator can also be rewritten as

$$(\Delta g_0, g_0) = (g_1 - g_0, g_0) = (g_1, g_0) - (g_0, g_0) = -\|g_0\|^2 \tag{20}$$

and therefore the coefficient $\gamma_0$ can be calculated from

$$\gamma_0 = \frac{\|g_1\|^2}{\|g_0\|^2}. \tag{21}$$

• The conjugate direction, $s_1$, can be calculated as

$$s_1 = -g_1 + \frac{\|g_1\|^2}{\|g_0\|^2}\, s_0. \tag{22}$$
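The result of this derivation can be verified on a small example: take one exact line minimization from $x_0$ along $s_0 = -g_0$, form $s_1$ with $\gamma_0 = \|g_1\|^2 / \|g_0\|^2$, and check that $(s_1, C s_0) = 0$. The sketch below does this. The symmetric positive definite matrix `C`, the vector `b`, and the starting point are assumptions for illustration, as is the closed-form step $\lambda_0 = -(g_0, s_0)/(s_0, C s_0)$, which holds for a quadratic.

```python
def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def matvec(C, v):
    return [dot(row, v) for row in C]

# Illustrative quadratic Q(x) = a + b^T x + 0.5 x^T C x, gradient g(x) = C x + b
C = [[4.0, 1.0, 0.0],
     [1.0, 3.0, 1.0],
     [0.0, 1.0, 2.0]]
b = [-6.0, -10.0, -8.0]

def gradient(x):
    return [ci + bi for ci, bi in zip(matvec(C, x), b)]

x0 = [0.0, 0.0, 0.0]
g0 = gradient(x0)
s0 = [-gi for gi in g0]                       # initial steepest descent direction

lam0 = -dot(g0, s0) / dot(s0, matvec(C, s0))  # exact line minimization along s0
x1 = [xi + lam0 * si for xi, si in zip(x0, s0)]
g1 = gradient(x1)

gamma0 = dot(g1, g1) / dot(g0, g0)            # coefficient from (21)
s1 = [-g1i + gamma0 * s0i for g1i, s0i in zip(g1, s0)]  # direction from (22)

# Same coefficient computed from the unreduced form (18)
dg0 = [g1i - g0i for g1i, g0i in zip(g1, g0)]
gamma0_from_18 = -dot(dg0, g1) / dot(dg0, g0)

conjugacy = dot(s1, matvec(C, s0))            # should vanish: (s1, C s0) = 0
print(gamma0, gamma0_from_18, conjugacy)
```

The two expressions for $\gamma_0$ should agree, and the conjugacy product should be zero up to rounding.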

• Now, although we derived this for $s_1$, we could have derived it for any $s_k$ to get

$$s_k = -g_k + \frac{\|g_k\|^2}{\|g_{k-1}\|^2}\, s_{k-1} \tag{23}$$

• This represents the Fletcher-Reeves scheme (1964) for determining conjugate directions for search from the gradient at the current point, $g_k$, the previous search direction, $s_{k-1}$, and the magnitude of the gradient at the previous point, $\|g_{k-1}\|$.
• Two alternative update equations, with $\Delta g_{k-1} = g_k - g_{k-1}$, are the Hestenes-Stiefel method (1952):

$$s_k = -g_k + \frac{(\Delta g_{k-1}, g_k)}{(\Delta g_{k-1}, s_{k-1})}\, s_{k-1} \tag{24}$$

and the Polak-Ribière method (1969):

$$s_k = -g_k + \frac{(\Delta g_{k-1}, g_k)}{\|g_{k-1}\|^2}\, s_{k-1} \tag{25}$$

• All three schemes are identical for quadratic functions but will differ for non-quadratic functions.
• For quadratic functions, these methods will find the exact minimum in $n$ iterations.
• For non-quadratic functions, the search direction is reset to the steepest descent direction every $n$ iterations.
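The three update schemes can be compared side by side on a quadratic. The sketch below runs $n$ conjugate-gradient iterations with each scheme and checks that all of them reach the same minimizer. It assumes a quadratic objective with symmetric positive definite `C` so that each line minimization has the closed form $\lambda_k = -(g_k, s_k)/(s_k, C s_k)$. The problem data and the function name `conjugate_gradient` are illustrative.

```python
import math

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def matvec(C, v):
    return [dot(row, v) for row in C]

def conjugate_gradient(C, b, x0, scheme="FR"):
    """Minimize Q(x) = a + b^T x + 0.5 x^T C x in at most n line minimizations."""
    x = list(x0)
    g = [ci + bi for ci, bi in zip(matvec(C, x), b)]   # g(x) = C x + b
    s = [-gi for gi in g]                              # s_0 = -g_0
    for _ in range(len(x0)):
        if math.sqrt(dot(g, g)) < 1e-12:
            break                                      # already at the minimum
        lam = -dot(g, s) / dot(s, matvec(C, s))        # exact line minimization
        x = [xi + lam * si for xi, si in zip(x, s)]
        g_new = [ci + bi for ci, bi in zip(matvec(C, x), b)]
        dg = [gn - go for gn, go in zip(g_new, g)]     # forward difference of g
        if scheme == "FR":                             # Fletcher-Reeves
            gamma = dot(g_new, g_new) / dot(g, g)
        elif scheme == "HS":                           # Hestenes-Stiefel
            gamma = dot(dg, g_new) / dot(dg, s)
        elif scheme == "PR":                           # Polak-Ribiere
            gamma = dot(dg, g_new) / dot(g, g)
        else:
            raise ValueError("unknown scheme: " + scheme)
        s = [-gn + gamma * si for gn, si in zip(g_new, s)]
        g = g_new
    return x

# Illustrative problem with minimizer x* = [1, 2, 3], i.e. b = -C x*
C = [[4.0, 1.0, 0.0],
     [1.0, 3.0, 1.0],
     [0.0, 1.0, 2.0]]
b = [-6.0, -10.0, -8.0]
results = {name: conjugate_gradient(C, b, [0.0, 0.0, 0.0], name)
           for name in ("FR", "HS", "PR")}
print(results)
```

For this three-variable quadratic, each scheme should land on the minimizer after three line minimizations. For a non-quadratic objective one would replace the closed-form step with a numerical line search and reset `s` to the steepest descent direction every $n$ iterations, as noted above.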
