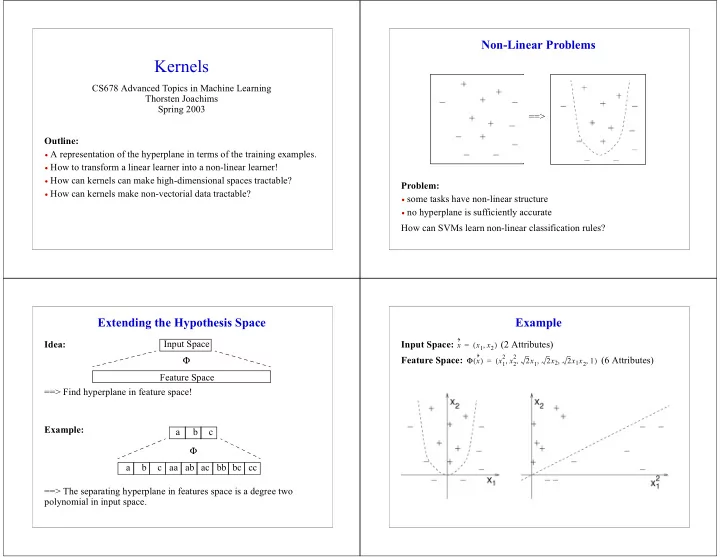

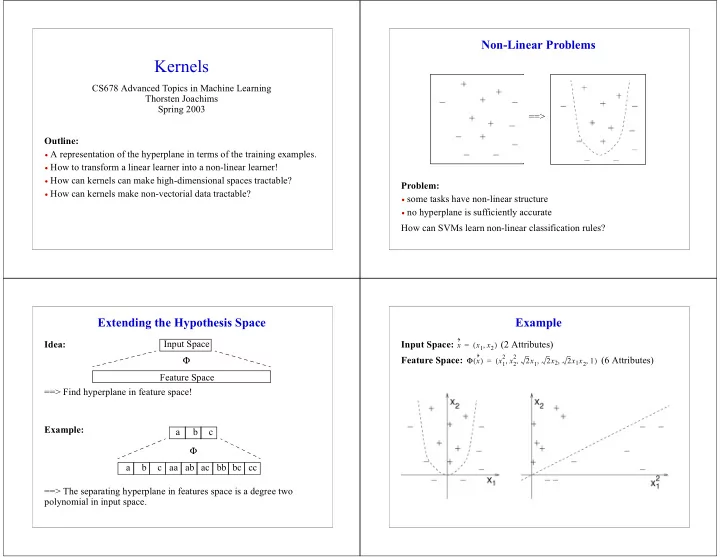

Kernels

CS678 Advanced Topics in Machine Learning
Thorsten Joachims
Spring 2003

Outline:
• A representation of the hyperplane in terms of the training examples.
• How to transform a linear learner into a non-linear learner!
• How can kernels make high-dimensional spaces tractable?
• How can kernels make non-vectorial data tractable?

Non-Linear Problems

Problem:
• some tasks have non-linear structure
• no hyperplane is sufficiently accurate

How can SVMs learn non-linear classification rules?

Extending the Hypothesis Space

Idea: Input Space ==> ($\Phi$) ==> Feature Space

==> Find hyperplane in feature space!

Example: $(a, b, c) \xrightarrow{\Phi} (a, b, c, aa, ab, ac, bb, bc, cc)$

==> The separating hyperplane in feature space is a degree two polynomial in input space.

Example

Input Space: $x = (x_1, x_2)$ (2 Attributes)

Feature Space: $\Phi(x) = (x_1^2, x_2^2, \sqrt{2}\,x_1, \sqrt{2}\,x_2, \sqrt{2}\,x_1 x_2, 1)$ (6 Attributes)
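To make the idea of a hyperplane in feature space concrete, here is a minimal sketch that applies the 6-attribute map $\Phi$ from the example and classifies points with a linear rule on $\Phi(x)$. The weight vector `w`, the helper `linear_rule`, and the test points are hypothetical choices made for illustration; `w` is picked so that $w \cdot \Phi(x) = x_1^2 + x_2^2 - 1$, which is the unit circle in input space.

```python
import math


def phi(x):
    """Degree-2 feature map for a 2-attribute input x = (x1, x2)."""
    x1, x2 = x
    r2 = math.sqrt(2.0)
    return (x1 * x1, x2 * x2, r2 * x1, r2 * x2, r2 * x1 * x2, 1.0)


def linear_rule(w, z):
    """Linear classifier in feature space: sign of the inner product w . z."""
    score = sum(wi * zi for wi, zi in zip(w, z))
    return 1 if score > 0 else -1


# Hypothetical weights (not learned): w . phi(x) = x1^2 + x2^2 - 1.
# The constant last attribute of phi plays the role of the bias term.
w = (1.0, 1.0, 0.0, 0.0, 0.0, -1.0)

points = [(0.0, 0.0), (0.5, 0.5), (1.5, 0.0), (-1.0, 1.0)]
labels = [linear_rule(w, phi(p)) for p in points]
print(labels)  # points inside the unit circle get -1, points outside get +1
```

The rule is linear in the six feature-space attributes, yet its decision boundary in the two input attributes is a circle, which no hyperplane in input space could represent.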

Kernels

Problem: Very many parameters! Polynomials of degree $p$ over $N$ attributes in input space lead to $O(N^p)$ attributes in feature space!

Solution: [Boser et al.] The dual optimization problem (OP) depends only on inner products => Kernel Functions

$$K(a, b) = \Phi(a) \cdot \Phi(b)$$

Example: For $\Phi(x) = (x_1^2, x_2^2, \sqrt{2}\,x_1, \sqrt{2}\,x_2, \sqrt{2}\,x_1 x_2, 1)$, calculating

$$K(a, b) = [a \cdot b + 1]^2 = \Phi(a) \cdot \Phi(b)$$

gives the inner product in feature space.

We do not need to represent the feature space explicitly!

SVM with Kernels

Training:

$$\text{maximize} \quad L(\alpha) = \sum_{i=1}^{n} \alpha_i - \frac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} \alpha_i \alpha_j y_i y_j K(x_i, x_j)$$

$$\text{s.t.} \quad \sum_{i=1}^{n} \alpha_i y_i = 0 \quad \text{and} \quad 0 \le \alpha_i \le C$$

Classification: For new example $x$

$$h(x) = \operatorname{sign}\Big( \sum_{x_i \in SV} \alpha_i y_i K(x_i, x) + b \Big)$$

New hypothesis spaces through new Kernels:
• Linear: $K(x_i, x_j) = x_i \cdot x_j$
• Polynomial: $K(x_i, x_j) = [x_i \cdot x_j + 1]^d$
• Radial Basis Functions: $K(x_i, x_j) = \exp(-\gamma \|x_i - x_j\|^2)$
• Sigmoid: $K(x_i, x_j) = \tanh(\gamma (x_i \cdot x_j) + c)$

Example: SVM with Polynomial of Degree 2

Kernel: $K(x_i, x_j) = [x_i \cdot x_j + 1]^2$

Example: SVM with RBF-Kernel

Kernel: $K(x_i, x_j) = \exp(-\gamma \|x_i - x_j\|^2)$
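The identity $K(a, b) = [a \cdot b + 1]^2 = \Phi(a) \cdot \Phi(b)$ can be checked numerically, and the classification rule $h(x)$ can be written directly in terms of a kernel function. The sketch below does both. The function names and the toy support vectors, `alphas`, and bias are assumptions made for illustration; the coefficients are made up, not the result of solving the dual problem.

```python
import math


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def phi(x):
    """Explicit degree-2 feature map for x = (x1, x2)."""
    x1, x2 = x
    r2 = math.sqrt(2.0)
    return (x1 * x1, x2 * x2, r2 * x1, r2 * x2, r2 * x1 * x2, 1.0)


def k_linear(a, b):
    return dot(a, b)


def k_poly(a, b, d=2):
    return (dot(a, b) + 1.0) ** d


def k_rbf(a, b, gamma=1.0):
    return math.exp(-gamma * sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def predict(x, support_vectors, alphas, ys, bias, kernel):
    """h(x) = sign(sum_i alpha_i * y_i * K(x_i, x) + b) over the support vectors."""
    score = sum(al * y * kernel(xi, x)
                for al, y, xi in zip(alphas, ys, support_vectors)) + bias
    return 1 if score >= 0 else -1


# Kernel trick check: both routes give the same inner product.
a, b_vec = (1.0, 2.0), (3.0, -1.0)
explicit = dot(phi(a), phi(b_vec))   # 6-dimensional inner product
implicit = k_poly(a, b_vec, d=2)     # computed in the 2-dimensional input space
print(explicit, implicit)

# Toy classifier with made-up (untrained) coefficients and an RBF kernel.
svs = [(0.0, 0.0), (2.0, 0.0)]
ys = [-1, 1]
alphas = [1.0, 1.0]
print(predict((1.9, 0.0), svs, alphas, ys, 0.0, k_rbf))
print(predict((0.1, 0.0), svs, alphas, ys, 0.0, k_rbf))
```

Swapping `k_rbf` for `k_poly` or `k_linear` in `predict` changes the hypothesis space without touching the rest of the code, which is the point of the "new hypothesis spaces through new kernels" list.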

What is a Valid Kernel?

Definition: Let $X$ be a nonempty set. A function $K(x_i, x_j)$ is a valid kernel in $X$ if for all $n$ and all $x_1, \ldots, x_n \in X$ it produces a Gram matrix

$$G_{ij} = K(x_i, x_j)$$

that is symmetric

$$G = G^T$$

and positive semi-definite

$$\forall \alpha: \quad \alpha^T G \alpha = \sum_{i=1}^{n} \sum_{j=1}^{n} \alpha_i \alpha_j K(x_i, x_j) \ge 0$$

How to Construct Valid Kernels?

Theorem: Let $K_1$ and $K_2$ be valid Kernels over $X \times X$, $X \subseteq \Re^N$, $a \ge 0$, $0 \le \lambda \le 1$, $f$ a real-valued function on $X$, $\phi: X \to \Re^m$ with $K_3$ a kernel over $\Re^m \times \Re^m$, and $K$ a symmetric positive semi-definite matrix. Then the following functions are valid Kernels:

• $K(x, z) = \lambda K_1(x, z) + (1 - \lambda) K_2(x, z)$
• $K(x, z) = a K_1(x, z)$
• $K(x, z) = K_1(x, z) K_2(x, z)$
• $K(x, z) = f(x) f(z)$
• $K(x, z) = K_3(\phi(x), \phi(z))$
• $K(x, z) = x^T K z$

=> Construct complex Kernels from simple Kernels.

Kernels for Discrete and Structured Representations

Kernels for Sequences: Two sequences are similar if they have many common and consecutive subsequences.

Example [Lodhi et al., 2000]: For $0 \le \lambda \le 1$ consider the following feature space:

| | c-a | c-t | a-t | b-a | b-t | c-r | a-r | b-r |
|---|---|---|---|---|---|---|---|---|
| $\phi(\text{cat})$ | $\lambda^2$ | $\lambda^3$ | $\lambda^2$ | 0 | 0 | 0 | 0 | 0 |
| $\phi(\text{car})$ | $\lambda^2$ | 0 | 0 | 0 | 0 | $\lambda^3$ | $\lambda^2$ | 0 |
| $\phi(\text{bat})$ | 0 | 0 | $\lambda^2$ | $\lambda^2$ | $\lambda^3$ | 0 | 0 | 0 |
| $\phi(\text{bar})$ | 0 | 0 | 0 | $\lambda^2$ | 0 | 0 | $\lambda^2$ | $\lambda^3$ |

=> $K(\text{car}, \text{cat}) = \lambda^4$, efficient computation via dynamic programming.

=> Fisher Kernels [Jaakkola & Haussler, 1998]

Computing String Kernel (I)

Definitions:
• $\Sigma^n$: sequences of length $n$ over alphabet $\Sigma$
• $i = (i_1, \ldots, i_n)$: index sequence (sorted)
• $s(i)$: substring operator
• $r(i) = i_n - i_1 + 1$: range of index sequence

Kernel: Average range of common subsequences of length $n$

$$K_n(s, t) = \sum_{u \in \Sigma^n} \; \sum_{i:\, u = s(i)} \; \sum_{j:\, u = t(j)} \lambda^{\,i_n + j_n - i_1 - j_1 + 2}$$

Auxiliary Function: Average range to end of sequence of common subsequences of length $d$

$$K'_d(s, t) = \sum_{u \in \Sigma^d} \; \sum_{i:\, u = s(i)} \; \sum_{j:\, u = t(j)} \lambda^{\,|s| + |t| - i_1 - j_1 + 2}$$
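The definition of $K_n(s, t)$ can be evaluated directly by enumerating every sorted index sequence, which is a useful reference before turning to dynamic programming. The sketch below is a brute-force version under stated assumptions: the function names are hypothetical, $n \ge 1$ is required, and the cost grows combinatorially with string length. It also builds the Gram matrix for the four example words and spot-checks the valid-kernel definition on random $\alpha$ (a sanity check, not a proof of positive semi-definiteness).

```python
import random
from itertools import combinations


def subsequence_features(s, n, lam):
    """Map each length-n subsequence u of s to the sum of lam**r(i) over all i with s(i) = u."""
    feats = {}
    for idx in combinations(range(len(s)), n):   # sorted index sequences
        u = "".join(s[k] for k in idx)           # substring operator s(i)
        r = idx[-1] - idx[0] + 1                 # range r(i) = i_n - i_1 + 1
        feats[u] = feats.get(u, 0.0) + lam ** r
    return feats


def string_kernel_brute(s, t, n, lam):
    """K_n(s, t) as the inner product of the two sparse feature vectors."""
    fs = subsequence_features(s, n, lam)
    ft = subsequence_features(t, n, lam)
    return sum(v * ft[u] for u, v in fs.items() if u in ft)


def quad_form(G, alpha):
    """alpha^T G alpha for a square matrix given as a list of lists."""
    m = len(alpha)
    return sum(alpha[i] * alpha[j] * G[i][j] for i in range(m) for j in range(m))


lam = 0.5
words = ["cat", "car", "bat", "bar"]
G = [[string_kernel_brute(s, t, 2, lam) for t in words] for s in words]

print(string_kernel_brute("car", "cat", 2, lam), lam ** 4)

# Spot-check the kernel definition: the quadratic form should never be negative.
rng = random.Random(0)
min_q = min(quad_form(G, [rng.uniform(-1, 1) for _ in words]) for _ in range(1000))
print(min_q >= -1e-12)
```

Because each entry of `G` is an inner product of explicit feature vectors, the quadratic form equals a squared norm, which is why the check passes for every $\alpha$ up to rounding.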

Computing String Kernel (II)

Kernel:

$$K_n(s, t) = 0 \quad \text{if } \min(|s|, |t|) < n$$

$$K_n(sx, t) = K_n(s, t) + \sum_{j:\, t_j = x} K'_{n-1}(s, t[1 \ldots j-1]) \, \lambda^2$$

Auxiliary:

$$K'_0(s, t) = 1$$

$$K'_d(s, t) = 0 \quad \text{if } \min(|s|, |t|) < d$$

$$K'_d(sx, t) = \lambda K'_d(s, t) + \sum_{j:\, t_j = x} K'_{d-1}(s, t[1 \ldots j-1]) \, \lambda^{\,|t| - j + 2}$$
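The recursion above translates almost line by line into code. The following is a minimal memoized sketch, assuming $n \ge 1$ and Python strings as sequences; the names `string_kernel`, `k_aux`, and `k_main` are hypothetical. It caches on string prefixes for readability rather than filling an index-based table, so it illustrates the recursion rather than an optimized dynamic program.

```python
from functools import lru_cache


def string_kernel(s, t, n, lam):
    """K_n(s, t) computed with the recursions for K_n and the auxiliary K'_d."""

    @lru_cache(maxsize=None)
    def k_aux(d, s, t):
        # K'_0(s, t) = 1
        if d == 0:
            return 1.0
        # K'_d(s, t) = 0 if min(|s|, |t|) < d
        if min(len(s), len(t)) < d:
            return 0.0
        head, x = s[:-1], s[-1]
        total = lam * k_aux(d, head, t)
        for j0, ch in enumerate(t):          # j0 is 0-based, so j = j0 + 1
            if ch == x:
                # t[1 .. j-1] is t[:j0]; exponent |t| - j + 2 = len(t) - j0 + 1
                total += k_aux(d - 1, head, t[:j0]) * lam ** (len(t) - j0 + 1)
        return total

    @lru_cache(maxsize=None)
    def k_main(s, t):
        # K_n(s, t) = 0 if min(|s|, |t|) < n
        if min(len(s), len(t)) < n:
            return 0.0
        head, x = s[:-1], s[-1]
        total = k_main(head, t)
        for j0, ch in enumerate(t):
            if ch == x:
                total += k_aux(n - 1, head, t[:j0]) * lam ** 2
        return total

    return k_main(s, t)


lam = 0.5
print(string_kernel("car", "cat", 2, lam), lam ** 4)
print(string_kernel("cat", "cat", 2, lam), 2 * lam ** 4 + lam ** 6)
```

For the slide's example, the only common length-2 subsequence of "car" and "cat" is "ca" with range 2 in both strings, so the recursion returns $\lambda^2 \cdot \lambda^2 = \lambda^4$.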
