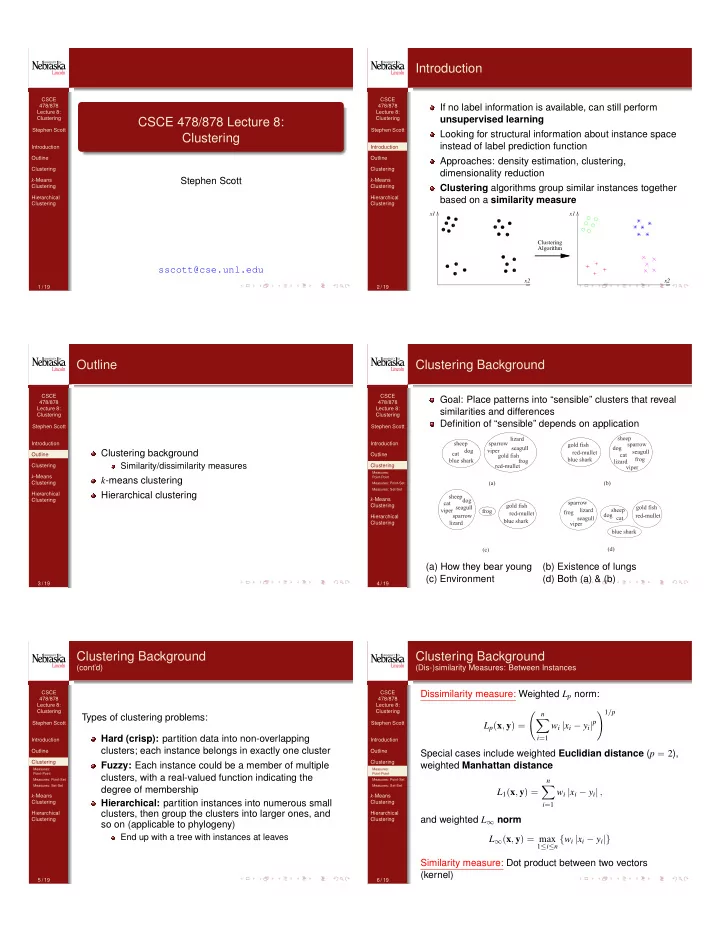

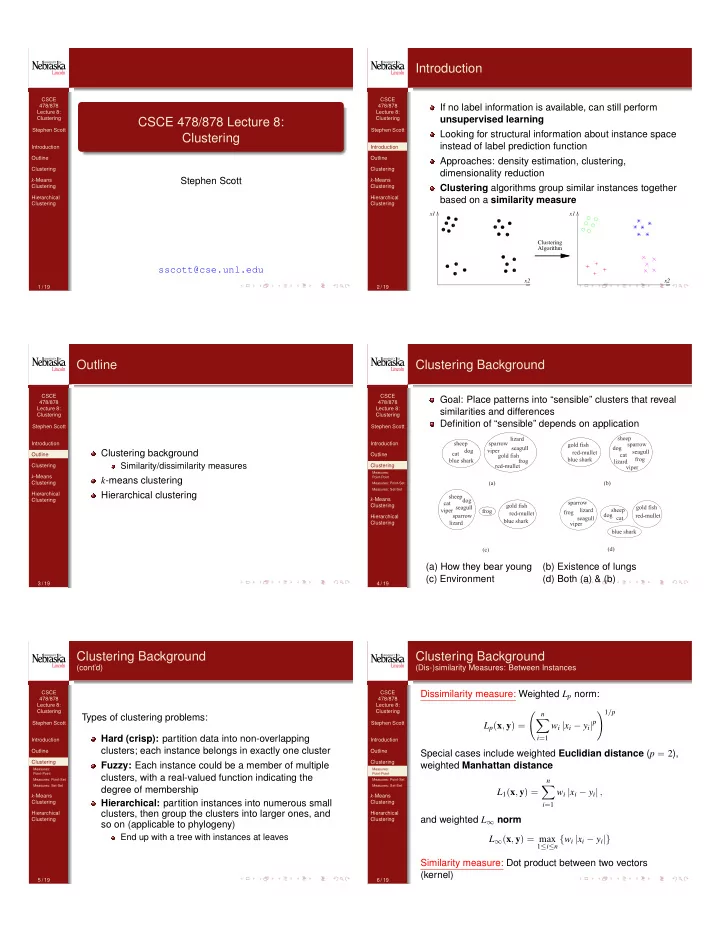

Introduction CSCE CSCE If no label information is available, can still perform 478/878 478/878 Lecture 8: Lecture 8: unsupervised learning Clustering Clustering CSCE 478/878 Lecture 8: Stephen Scott Stephen Scott Looking for structural information about instance space Clustering instead of label prediction function Introduction Introduction Outline Outline Approaches: density estimation, clustering, Clustering Clustering dimensionality reduction Stephen Scott k -Means k -Means Clustering Clustering Clustering algorithms group similar instances together Hierarchical Hierarchical based on a similarity measure Clustering Clustering x1 x1 Clustering Algorithm sscott@cse.unl.edu x2 x2 1 / 19 2 / 19 Outline Clustering Background CSCE CSCE Goal: Place patterns into “sensible” clusters that reveal 478/878 478/878 Lecture 8: Lecture 8: similarities and differences Clustering Clustering Definition of “sensible” depends on application Stephen Scott Stephen Scott Introduction Introduction Clustering background Outline Outline Similarity/dissimilarity measures Clustering Clustering Measures: k -Means k -means clustering Point-Point Clustering Measures: Point-Set Measures: Set-Set Hierarchical Hierarchical clustering k -Means Clustering Clustering Hierarchical Clustering (a) How they bear young (b) Existence of lungs (c) Environment (d) Both (a) & (b) 3 / 19 4 / 19 Clustering Background Clustering Background (cont’d) (Dis-)similarity Measures: Between Instances CSCE CSCE Dissimilarity measure: Weighted L p norm: 478/878 478/878 Lecture 8: Lecture 8: Clustering Clustering ! 1 / p n Types of clustering problems: X w i | x i � y i | p Stephen Scott Stephen Scott L p ( x , y ) = Hard (crisp): partition data into non-overlapping i = 1 Introduction Introduction clusters; each instance belongs in exactly one cluster Outline Outline Special cases include weighted Euclidian distance ( p = 2 ), Clustering Clustering Fuzzy: Each instance could be a member of multiple weighted Manhattan distance Measures: Measures: Point-Point Point-Point clusters, with a real-valued function indicating the Measures: Point-Set Measures: Point-Set n Measures: Set-Set Measures: Set-Set degree of membership X L 1 ( x , y ) = w i | x i � y i | , k -Means k -Means Clustering Hierarchical: partition instances into numerous small Clustering i = 1 clusters, then group the clusters into larger ones, and Hierarchical Hierarchical and weighted L ∞ norm Clustering Clustering so on (applicable to phylogeny) End up with a tree with instances at leaves L ∞ ( x , y ) = max 1 ≤ i ≤ n { w i | x i � y i |} Similarity measure: Dot product between two vectors (kernel) 5 / 19 6 / 19

Clustering Background Clustering Background (Dis-)similarity Measures: Between Instances (cont’d) (Dis-)similarity Measures: Between Instance and Set CSCE CSCE 478/878 478/878 Might want to measure proximity of point x to existing Lecture 8: Lecture 8: Clustering Clustering cluster C If attributes come from { 0 , . . . , k � 1 } , can use measures for Stephen Scott Stephen Scott real-valued attributes, plus: Can measure proximity α by using all points of C or by Introduction Introduction using a representative of C Outline Hamming distance : DM measuring number of places Outline If all points of C used, common choices: Clustering Clustering where x and y differ Measures: Measures: Point-Point Point-Point Tanimoto measure : SM measuring number of places α ps max ( x , C ) = max y ∈ C { α ( x , y ) } Measures: Point-Set Measures: Point-Set Measures: Set-Set Measures: Set-Set where x and y are same, divided by total number of k -Means k -Means places α ps min ( x , C ) = min y ∈ C { α ( x , y ) } Clustering Clustering Ignore places i where x i = y i = 0 Hierarchical Hierarchical Clustering Clustering avg ( x , C ) = 1 Useful for ordinal features where x i is degree to which x X α ps α ( x , y ) , possesses i th feature | C | y ∈ C where α ( x , y ) is any measure between x and y 7 / 19 8 / 19 Clustering Background Clustering Background (Dis-)similarity Measures: Between Instance and Set (cont’d) (Dis-)similarity Measures: Between Sets CSCE Alternative: Measure distance between point x and a CSCE 478/878 478/878 representative of the cluster C Lecture 8: Lecture 8: Clustering Clustering Mean vector m p = 1 Given sets of instances C i and C j and proximity measure Stephen Scott Stephen Scott X y α ( · , · ) | C | Introduction Introduction y ∈ C Mean center m c 2 C : Outline Outline Max : α ss max ( C i , C j ) = x ∈ C i , y ∈ C j { α ( x , y ) } max Clustering Clustering X X d ( m c , y ) d ( z , y ) 8 z 2 C , Measures: Measures: Min : α ss min ( C i , C j ) = x ∈ C i , y ∈ C j { α ( x , y ) } Point-Point Point-Point min Measures: Point-Set y ∈ C y ∈ C Measures: Point-Set Measures: Set-Set Measures: Set-Set where d ( · , · ) is DM (if SM used, reverse ineq.) 1 k -Means k -Means X X Average : α ss avg ( C i , C j ) = α ( x , y ) Clustering Clustering Median center : For each point y 2 C , find median | C i | | C j | Hierarchical Hierarchical x ∈ C i y ∈ C j dissimilarity from y to all other points of C , then take Clustering Clustering Representative (mean) : α ss mean ( C i , C j ) = α ( m C i , m C j ) , min; so m med 2 C is defined as med y ∈ C { d ( m med , y ) } med y ∈ C { d ( z , y ) } 8 z 2 C Now can measure proximity between C ’s representative and x with standard measures 9 / 19 10 / 19 k -Means Clustering k -Means Clustering Algorithm CSCE CSCE 478/878 478/878 Very popular clustering algorithm Lecture 8: Lecture 8: Clustering Clustering Choose value for parameter k Represents cluster i (out of k total) by specifying its Stephen Scott Stephen Scott Initialize k arbitrary representatives m 1 , . . . , m k representative m i (not necessarily part of the original Introduction Introduction E.g., k randomly selected instances from X set of instances X ) Outline Outline Repeat until representatives m 1 , . . . , m k don’t change Each instance x 2 X is assigned to the cluster with Clustering Clustering For all x 2 X 1 nearest representative k -Means k -Means Assign x to cluster C j such that k x � m j k (or other Clustering Clustering Goal is to find a set of k representatives such that sum Algorithm Algorithm measure) is minimized Example Example of distances between instances and their I.e., nearest representative Hierarchical Hierarchical representatives is minimized Clustering Clustering For each j 2 { 1 , . . . , k } 2 NP-hard (intractable) in general m j = 1 X Will use an algorithm that alternates between y C j determining representatives and assigning clusters y 2 C j until convergence (in the style of the EM algorithm ) 11 / 19 12 / 19

Recommend

More recommend