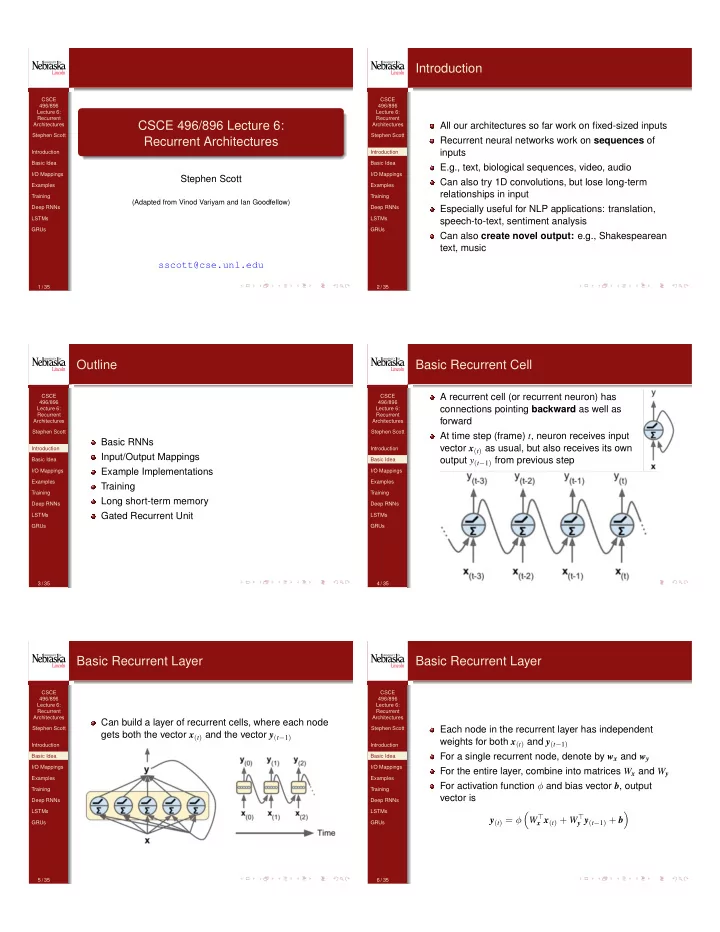

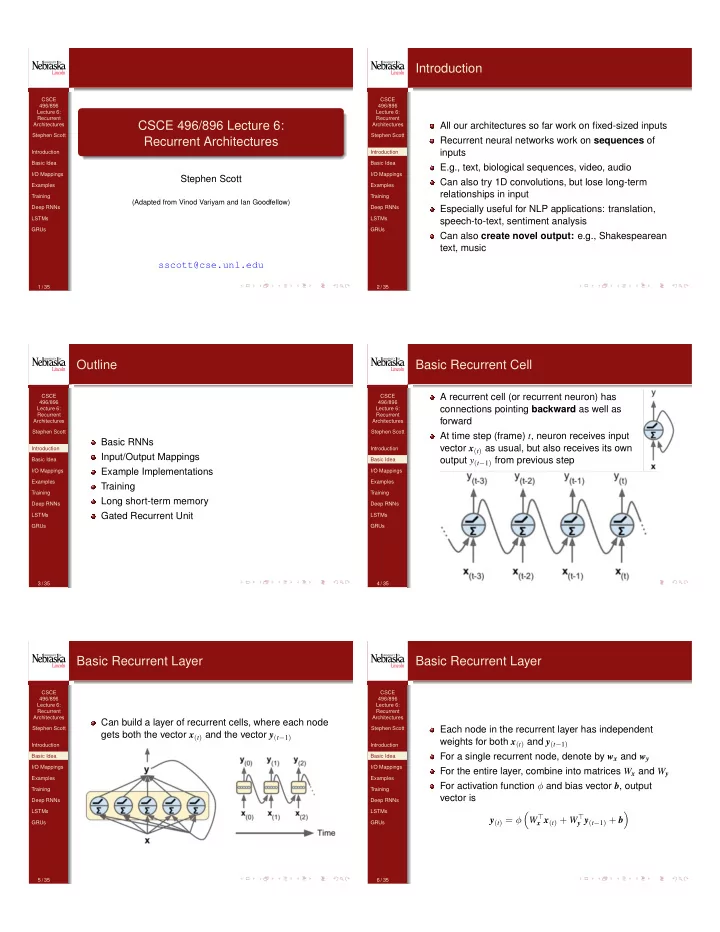

Introduction CSCE CSCE 496/896 496/896 Lecture 6: Lecture 6: Recurrent Recurrent CSCE 496/896 Lecture 6: Architectures Architectures All our architectures so far work on fixed-sized inputs Stephen Scott Stephen Scott Recurrent Architectures Recurrent neural networks work on sequences of inputs Introduction Introduction Basic Idea Basic Idea E.g., text, biological sequences, video, audio I/O Mappings I/O Mappings Stephen Scott Can also try 1D convolutions, but lose long-term Examples Examples relationships in input Training Training (Adapted from Vinod Variyam and Ian Goodfellow) Deep RNNs Deep RNNs Especially useful for NLP applications: translation, LSTMs LSTMs speech-to-text, sentiment analysis GRUs GRUs Can also create novel output: e.g., Shakespearean text, music sscott@cse.unl.edu 1 / 35 2 / 35 Outline Basic Recurrent Cell A recurrent cell (or recurrent neuron) has CSCE CSCE 496/896 496/896 connections pointing backward as well as Lecture 6: Lecture 6: Recurrent Recurrent forward Architectures Architectures Stephen Scott Stephen Scott At time step (frame) t , neuron receives input Basic RNNs vector x ( t ) as usual, but also receives its own Introduction Introduction Input/Output Mappings output y ( t � 1 ) from previous step Basic Idea Basic Idea Example Implementations I/O Mappings I/O Mappings Examples Examples Training Training Training Long short-term memory Deep RNNs Deep RNNs LSTMs Gated Recurrent Unit LSTMs GRUs GRUs 3 / 35 4 / 35 Basic Recurrent Layer Basic Recurrent Layer CSCE CSCE 496/896 496/896 Lecture 6: Lecture 6: Recurrent Recurrent Architectures Architectures Can build a layer of recurrent cells, where each node Each node in the recurrent layer has independent Stephen Scott Stephen Scott gets both the vector x ( t ) and the vector y ( t � 1 ) weights for both x ( t ) and y ( t � 1 ) Introduction Introduction For a single recurrent node, denote by w x and w y Basic Idea Basic Idea I/O Mappings I/O Mappings For the entire layer, combine into matrices W x and W y Examples Examples For activation function φ and bias vector b , output Training Training vector is Deep RNNs Deep RNNs LSTMs LSTMs ⇣ ⌘ W > x x ( t ) + W > y ( t ) = φ y y ( t � 1 ) + b GRUs GRUs 5 / 35 6 / 35

Input/Output Mappings Memory and State Sequence to Sequence CSCE CSCE Since a node’s output depends on its past, it can be 496/896 496/896 Many ways to employ this basic architecture: Lecture 6: Lecture 6: thought of having memory or state Recurrent Recurrent Architectures Architectures State at time t is h ( t ) = f ( h ( t � 1 ) , x ( t ) ) and output Sequence to sequence: Input is a sequence and Stephen Scott Stephen Scott y ( t ) = g ( h ( t � 1 ) , x ( t ) ) output is a sequence State could be the same as the output, or separate Introduction Introduction E.g., series of stock predictions, one day in advance Can think of h ( t ) as storing important information about Basic Idea Basic Idea input sequence I/O Mappings I/O Mappings Examples Examples Analogous to convolutional outputs summarizing Training Training important image features Deep RNNs Deep RNNs LSTMs LSTMs GRUs GRUs 7 / 35 8 / 35 Input/Output Mappings Input/Output Mappings Sequence to Vector Vector to Sequence CSCE CSCE Sequence to vector: Input is sequence and output a 496/896 496/896 Vector to sequence: Input is a single vector (zeroes Lecture 6: Lecture 6: vector/score/ classification Recurrent Recurrent for other times) and output is a sequence Architectures Architectures E.g., sentiment score of movie review Stephen Scott Stephen Scott E.g., image to caption Introduction Introduction Basic Idea Basic Idea I/O Mappings I/O Mappings Examples Examples Training Training Deep RNNs Deep RNNs LSTMs LSTMs GRUs GRUs 9 / 35 10 / 35 Input/Output Mappings Input/Output Mappings Encoder-Decoder Architecture Encoder-Decoder Architecture: NMT Example CSCE CSCE Encoder-decoder: Sequence-to-vector ( encoder ) Pre-trained word embeddings fed into input 496/896 496/896 Lecture 6: Lecture 6: followed by vector-to-sequence ( decoder ) Recurrent Recurrent Encoder maps word sequence to vector, decoder maps Architectures Architectures Input sequence ( x 1 , . . . , x T ) yields hidden outputs to translation via softmax distribution Stephen Scott Stephen Scott ( h 1 , . . . , h T ) , then mapped to context vector After training, do translation by feeding previous Introduction c = f ( h 1 , . . . , h T ) Introduction translated word y 0 ( t � 1 ) to decoder Basic Idea Basic Idea Decoder output y t 0 depends on previously output I/O Mappings I/O Mappings ( y 1 , . . . , y t 0 � 1 ) and c Examples Examples Example application: neural machine translation Training Training Deep RNNs Deep RNNs LSTMs LSTMs GRUs GRUs 11 / 35 12 / 35

Input/Output Mappings Input/Output Mappings Encoder-Decoder Architecture E-D Architecture: Attention Mechanism (Bahdanau et al., 2015) CSCE CSCE 496/896 496/896 Bidirectional RNN reads input Lecture 6: Lecture 6: Recurrent Recurrent Works through an embedded space like an forward and backward Architectures Architectures autoencoder, so can represent the entire input as an Stephen Scott Stephen Scott simultaneously embedded vector prior to decoding Encoder builds annotation h j Introduction Introduction as concatenation of − → h j and ← − Issue: Need to ensure that the context vector fed into Basic Idea Basic Idea h j decoder is sufficiently large in dimension to represent I/O Mappings I/O Mappings ⇒ h j summarizes preceding context required Examples Examples and following inputs Training Training Can address this representation problem via attention i th context vector Deep RNNs mechanism mechanism Deep RNNs c i = P T j = 1 α ij h j , where LSTMs LSTMs Encodes input sequence into a vector sequence rather exp( e ij ) α ij = GRUs GRUs than single vector P T k = 1 exp( e ik ) As it decodes translation, decoder focuses on relevant and e ij is an alignment score between inputs around j and subset of the vectors outputs around i 13 / 35 14 / 35 Input/Output Mappings Example Implementation E-D Architecture: Attention Mechanism (Bahdanau et al., 2015) Static Unrolling for Two Time Steps The i th element of CSCE CSCE 496/896 496/896 attention vector α j tells Lecture 6: Lecture 6: Recurrent Recurrent us the probability that Architectures Architectures target output y i is aligned X0 = tf.placeholder(tf.float32, [None, n_inputs]) Stephen Scott Stephen Scott X1 = tf.placeholder(tf.float32, [None, n_inputs]) to (or translated from) Wx = tf.Variable(tf.random_normal(shape=[n_inputs, n_neurons],dtype=tf.float32)) Wy = tf.Variable(tf.random_normal(shape=[n_neurons,n_neurons],dtype=tf.float32)) Introduction Introduction input x j b = tf.Variable(tf.zeros([1, n_neurons], dtype=tf.float32)) Basic Idea Basic Idea Y0 = tf.tanh(tf.matmul(X0, Wx) + b) Y1 = tf.tanh(tf.matmul(Y0, Wy) + tf.matmul(X1, Wx) + b) Then c i is expected I/O Mappings I/O Mappings annotation over all Examples Examples Input: annotations with Training Training probabilities α j Deep RNNs Deep RNNs # Mini-batch: instance 0, instance 1, instance 2, instance 3 X0_batch = np.array([[0, 1, 2], [3, 4, 5], [6, 7, 8], [9, 0, 1]]) # t = 0 LSTMs LSTMs X1_batch = np.array([[9, 8, 7], [0, 0, 0], [6, 5, 4], [3, 2, 1]]) # t = 1 GRUs Alignment score e ij indicates how much we should GRUs focus on word encoding h j when generating output y i (in decoder state s i � 1 ) Can compute e ij via dot product h > j s i � 1 , bilinear function h > j W s i � 1 , or nonlinear activation 15 / 35 16 / 35 Example Implementation Example Implementation Static Unrolling for Two Time Steps Automatic Static Unrolling Can avoid specifying one placeholder per time step via CSCE CSCE 496/896 496/896 tf.stack and tf.unstack Lecture 6: Lecture 6: Recurrent Recurrent Architectures Architectures X = tf.placeholder(tf.float32, [None, n steps, n_inputs]) X_seqs = tf.unstack(tf.transpose(X, perm=[1, 0, 2])) Stephen Scott Stephen Scott basic_cell = tf.contrib.rnn.BasicRNNCell(num_units=n_neurons) Can achieve the same thing more compactly via output_seqs, states = tf.contrib.rnn.static_rnn(basic_cell, X_seqs, static_rnn() dtype=tf.float32) Introduction Introduction outputs = tf.transpose(tf.stack(output_seqs), perm=[1, 0, 2]) ... Basic Idea X0 = tf.placeholder(tf.float32, [None, n_inputs]) Basic Idea X_batch = np.array([ X1 = tf.placeholder(tf.float32, [None, n_inputs]) # t=0 t=1 I/O Mappings I/O Mappings basic_cell = tf.contrib.rnn.BasicRNNCell(num_units=n_neurons) [[0, 1, 2], [9, 8, 7]], # instance 0 output_seqs, states = tf.contrib.rnn.static rnn(basic_cell, [X0, X1], Examples Examples [[3, 4, 5], [0, 0, 0]], # instance 1 dtype=tf.float32) [[6, 7, 8], [6, 5, 4]], # instance 2 Y0, Y1 = output_seqs Training Training [[9, 0, 1], [3, 2, 1]], # instance 3 ]) Deep RNNs Deep RNNs Automatically unrolls into length-2 sequence RNN LSTMs LSTMs GRUs GRUs Uses static_rnn() again, but on all time steps folded into a single tensor Still forms a large, static graph (possible memory issues) 17 / 35 18 / 35

Recommend

More recommend