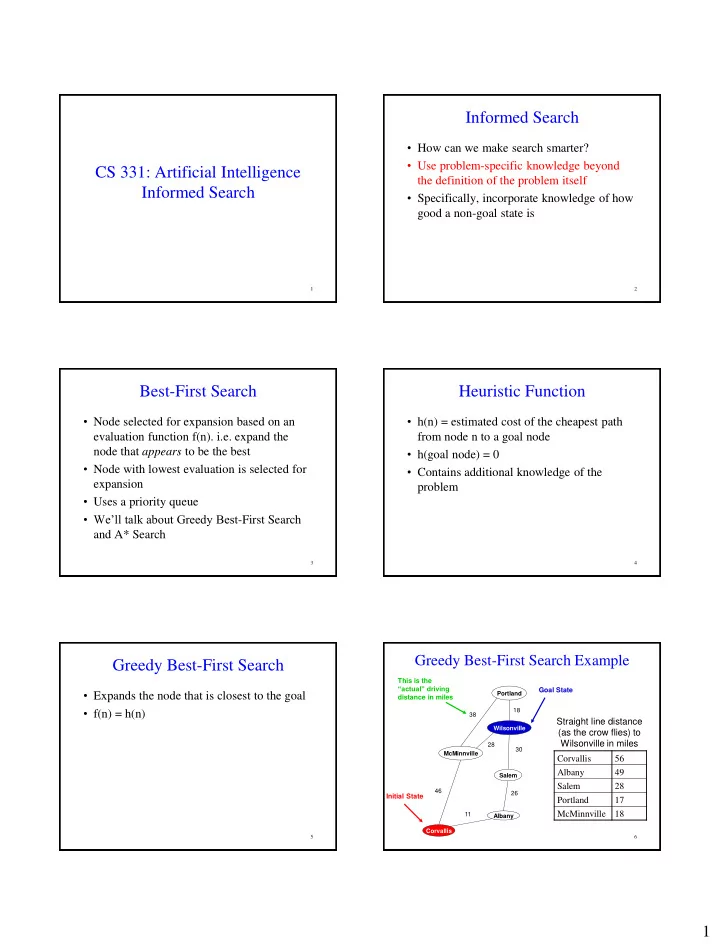

CS 331: Artificial Intelligence
Informed Search

Informed Search

- How can we make search smarter?
- Use problem-specific knowledge beyond the definition of the problem itself
- Specifically, incorporate knowledge of how good a non-goal state is

Best-First Search

- A node is selected for expansion based on an evaluation function f(n), i.e. expand the node that appears to be the best
- The node with the lowest evaluation is selected for expansion
- Uses a priority queue
- We'll talk about Greedy Best-First Search and A* Search

Heuristic Function

- h(n) = estimated cost of the cheapest path from node n to a goal node
- h(goal node) = 0
- Contains additional knowledge of the problem

Greedy Best-First Search

- Expands the node that h(n) estimates to be closest to the goal
- f(n) = h(n)

Greedy Best-First Search Example

Figure: road map with the "actual" driving distances in miles. Roads: Corvallis–McMinnville 46, Corvallis–Albany 11, Albany–Salem 26, Salem–Wilsonville 30, McMinnville–Wilsonville 28, McMinnville–Portland 38, Portland–Wilsonville 18. Initial state: Corvallis. Goal state: Wilsonville.

Straight-line distance (as the crow flies) to Wilsonville, in miles:

| City | h(n) |
|---|---|
| Corvallis | 56 |
| Albany | 49 |
| Salem | 28 |
| Portland | 17 |
| McMinnville | 18 |
| Wilsonville | 0 |
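The greedy strategy above can be sketched in Python as a best-first tree search whose priority queue is ordered by f(n) = h(n). This is a minimal sketch, not the course's reference implementation: the names `ROADS`, `H_SLD`, `neighbors` and `greedy_best_first` are hypothetical, and the road distances are the ones read off the example map, assumed to be two-way roads.

```python
import heapq
from itertools import count

# Hypothetical encoding of the example map: driving distances in miles,
# read off the figure; every road is assumed to be two-way.
ROADS = {
    ("Corvallis", "McMinnville"): 46,
    ("Corvallis", "Albany"): 11,
    ("Albany", "Salem"): 26,
    ("Salem", "Wilsonville"): 30,
    ("McMinnville", "Wilsonville"): 28,
    ("McMinnville", "Portland"): 38,
    ("Portland", "Wilsonville"): 18,
}

# h(n): straight-line distance to Wilsonville in miles.
H_SLD = {"Corvallis": 56, "Albany": 49, "Salem": 28,
         "Portland": 17, "McMinnville": 18, "Wilsonville": 0}


def neighbors(city):
    """Yield (neighbor, step_cost) pairs for a city."""
    for (a, b), miles in ROADS.items():
        if a == city:
            yield b, miles
        elif b == city:
            yield a, miles


def greedy_best_first(start, goal, h):
    """Greedy best-first TREE-SEARCH: always expand the frontier node with the lowest h(n).

    No visited set is kept, so on other maps this loop can cycle forever.
    """
    tie = count()  # tie-breaker so heapq never has to compare paths
    frontier = [(h[start], next(tie), [start])]
    while frontier:
        _, _, path = heapq.heappop(frontier)
        node = path[-1]
        if node == goal:  # goal test when the node is popped
            return path
        for succ, _ in neighbors(node):
            heapq.heappush(frontier, (h[succ], next(tie), path + [succ]))
    return None


print(greedy_best_first("Corvallis", "Wilsonville", H_SLD))
# ['Corvallis', 'McMinnville', 'Wilsonville']
```

The step costs are ignored entirely when choosing what to expand; only h(n) matters, which is why the search can commit to a route that looks close to the goal but costs more.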

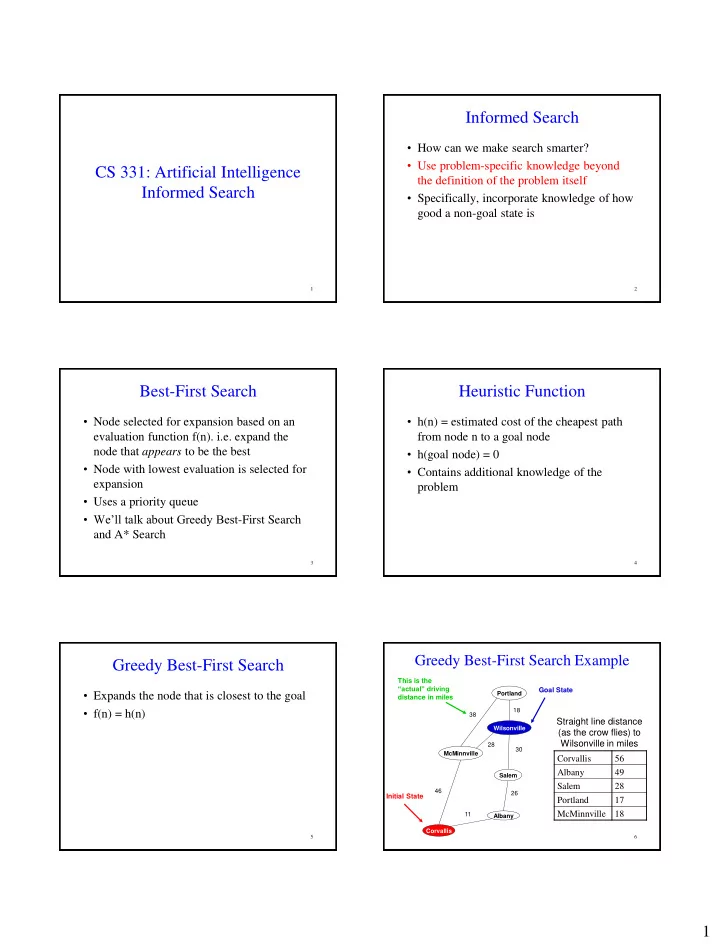

Greedy Best-First Search Example

- Start at Corvallis (h = 56).
- Expand Corvallis: its successors are McMinnville (h = 18) and Albany (h = 49).
- McMinnville has the lowest h, so expand it: its successors are Portland (h = 17), Corvallis (h = 56) and Wilsonville (h = 0).
- Wilsonville has the lowest h and is the goal state, so the search returns Corvallis → McMinnville → Wilsonville.

But the route through Albany and Salem is shorter than the route found by Greedy Best-First Search!

- Corvallis → McMinnville → Wilsonville = 74 miles
- Corvallis → Albany → Salem → Wilsonville = 67 miles
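The two route lengths can be checked by summing the driving distances along each route. A small sketch, assuming a hypothetical `ROADS` table that holds the distances read off the example map (roads treated as two-way):

```python
# Hypothetical encoding of the example map: driving distances in miles.
ROADS = {
    ("Corvallis", "McMinnville"): 46,
    ("Corvallis", "Albany"): 11,
    ("Albany", "Salem"): 26,
    ("Salem", "Wilsonville"): 30,
    ("McMinnville", "Wilsonville"): 28,
    ("McMinnville", "Portland"): 38,
    ("Portland", "Wilsonville"): 18,
}


def road_miles(a, b):
    """Driving distance between two adjacent cities (roads are two-way)."""
    if (a, b) in ROADS:
        return ROADS[(a, b)]
    return ROADS[(b, a)]  # raises KeyError if the cities are not adjacent


def route_cost(route):
    """Total driving distance along a list of cities."""
    return sum(road_miles(a, b) for a, b in zip(route, route[1:]))


greedy_route = ["Corvallis", "McMinnville", "Wilsonville"]
better_route = ["Corvallis", "Albany", "Salem", "Wilsonville"]
print(route_cost(greedy_route))  # 74
print(route_cost(better_route))  # 67
```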

Evaluating Greedy Best-First Search

- Complete? No (could start down an infinite path)
- Optimal? No
- Time complexity: $O(b^m)$
- Space complexity: $O(b^m)$

Here b is the branching factor and m is the maximum depth of the search space. Greedy Best-First Search results in lots of dead ends, which leads to unnecessary nodes being expanded.

A* Search

- A much better alternative to Greedy Best-First Search
- The evaluation function for A* is f(n) = g(n) + h(n), where g(n) = path cost from the start node to n
- If h(n) satisfies certain conditions, A* search is optimal and complete!

Admissible Heuristics

- A* (using TREE-SEARCH) is optimal if h(n) is an admissible heuristic
- An admissible heuristic is one that never overestimates the cost to reach the goal
- Admissible heuristic = optimistic
- Straight-line distance is an admissible heuristic: no driving route between two cities can be shorter than the straight line between them

A* Search Example

The map, initial state (Corvallis), goal state (Wilsonville) and straight-line distances are the same as in the greedy example. The search starts at Corvallis with f(n) = g(n) + h(n) = 0 + 56 = 56.
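One way to confirm that straight-line distance is admissible on this map is to compute the true cost h*(n) from every city to Wilsonville and check h(n) ≤ h*(n) everywhere. The sketch below uses hypothetical names (`ROADS`, `H_SLD`, `true_costs_to`, `is_admissible`) and the road distances read off the example map. It runs Dijkstra's algorithm outward from the goal, which yields each city's true remaining cost only because the roads are assumed to be two-way.

```python
import heapq

# Hypothetical encoding of the example map: driving distances in miles (two-way roads).
ROADS = {
    ("Corvallis", "McMinnville"): 46,
    ("Corvallis", "Albany"): 11,
    ("Albany", "Salem"): 26,
    ("Salem", "Wilsonville"): 30,
    ("McMinnville", "Wilsonville"): 28,
    ("McMinnville", "Portland"): 38,
    ("Portland", "Wilsonville"): 18,
}
# h(n): straight-line distance to Wilsonville.
H_SLD = {"Corvallis": 56, "Albany": 49, "Salem": 28,
         "Portland": 17, "McMinnville": 18, "Wilsonville": 0}


def build_graph(roads):
    """Adjacency list with each road added in both directions."""
    graph = {}
    for (a, b), miles in roads.items():
        graph.setdefault(a, []).append((b, miles))
        graph.setdefault(b, []).append((a, miles))
    return graph


def true_costs_to(goal, graph):
    """Dijkstra from the goal: the cheapest driving distance from each city to the goal."""
    dist = {goal: 0}
    frontier = [(0, goal)]
    while frontier:
        d, node = heapq.heappop(frontier)
        if d > dist[node]:
            continue  # stale queue entry; a shorter distance was already found
        for succ, miles in graph[node]:
            new_d = d + miles
            if new_d < dist.get(succ, float("inf")):
                dist[succ] = new_d
                heapq.heappush(frontier, (new_d, succ))
    return dist


def is_admissible(h, h_star):
    """True if h never overestimates the true cost to the goal."""
    return all(h[n] <= h_star[n] for n in h_star)


h_star = true_costs_to("Wilsonville", build_graph(ROADS))
print(h_star["Corvallis"])           # 67
print(is_admissible(H_SLD, h_star))  # True
```

Raising any single estimate above its true cost (for example, a hypothetical h(Corvallis) = 80) makes `is_admissible` return `False`.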

A* Search Example (continued)

- Expand Corvallis: McMinnville f = 46 + 18 = 64; Albany f = 11 + 49 = 60
- Expand Albany (lowest f = 60): Salem f = 37 + 28 = 65; Corvallis f = 22 + 56 = 78
- Expand McMinnville (f = 64): Portland f = 84 + 17 = 101; Corvallis f = 92 + 56 = 148; Wilsonville f = 74 + 0 = 74
- Expand Salem (f = 65): Wilsonville f = 67 + 0 = 67; Albany f = 63 + 49 = 112
- Pop Wilsonville (f = 67): it is the goal, reached via Corvallis → Albany → Salem → Wilsonville = 67 miles

Note: Don't stop when you put a goal state on the priority queue (otherwise you get a suboptimal solution; here, stopping when Wilsonville is first generated would return the 74-mile route). Proper termination: stop when you pop a goal state from the priority queue.

Proof that A* using TREE-SEARCH is optimal if h(n) is admissible

Figure: the fringe contains a suboptimal goal node $G_2$ and a node $n$ on the optimal path to the goal $G$, whose cost is $C^*$.

- Suppose A* returns a suboptimal goal node $G_2$.
- $G_2$ must have been the least-cost node in the fringe when it was selected. Let the cost of the optimal solution be $C^*$.
- $h(G_2) = 0$ because it is a goal node, and $G_2$ is suboptimal, so: $f(G_2) = g(G_2) + h(G_2) = g(G_2) > C^*$
- Now consider a fringe node $n$ on an optimal solution path to the goal $G$.
- If $h(n)$ is admissible then, since $g(n)$ plus the true remaining cost from $n$ equals $C^*$ and $h(n)$ never exceeds that remaining cost: $f(n) = g(n) + h(n) \le C^*$
- We have shown that $f(n) \le C^* < f(G_2)$, so $G_2$ will not get expanded before $n$. Hence A* must return an optimal solution.

What about search graphs (more than one path to a node)?

- What if we expand a state we've already seen?
- Suppose we use the GRAPH-SEARCH solution and do not expand repeated nodes
- We could then discard the optimal path if it's not the first one generated
- One simple solution: ensure the optimal path to any repeated state is always the first one followed (like in Uniform-cost search)
- This places an extra requirement on h(n), called consistency (or monotonicity)
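The trace above can be reproduced with a short A* tree search ordered by f(n) = g(n) + h(n). This is a sketch under the same assumptions as the example (two-way roads with the map's driving distances, straight-line distance as h); all names are hypothetical, and the `test_on_push` flag is an illustrative addition that stops as soon as a goal is generated, to show the mistake the note warns about.

```python
import heapq
from itertools import count

# Hypothetical encoding of the example map: driving distances in miles (two-way roads).
ROADS = {
    ("Corvallis", "McMinnville"): 46,
    ("Corvallis", "Albany"): 11,
    ("Albany", "Salem"): 26,
    ("Salem", "Wilsonville"): 30,
    ("McMinnville", "Wilsonville"): 28,
    ("McMinnville", "Portland"): 38,
    ("Portland", "Wilsonville"): 18,
}
# h(n): straight-line distance to Wilsonville.
H_SLD = {"Corvallis": 56, "Albany": 49, "Salem": 28,
         "Portland": 17, "McMinnville": 18, "Wilsonville": 0}


def neighbors(city):
    """Yield (neighbor, step_cost) pairs for a city."""
    for (a, b), miles in ROADS.items():
        if a == city:
            yield b, miles
        elif b == city:
            yield a, miles


def a_star_tree(start, goal, h, test_on_push=False):
    """A* TREE-SEARCH ordered by f(n) = g(n) + h(n). Returns (path, cost).

    test_on_push=True stops when a goal is first generated (the wrong rule).
    """
    tie = count()  # tie-breaker so heapq never has to compare paths
    frontier = [(h[start], next(tie), 0, [start])]  # (f, tie, g, path)
    while frontier:
        _, _, g, path = heapq.heappop(frontier)
        node = path[-1]
        if node == goal:  # proper termination: goal test when popped
            return path, g
        for succ, miles in neighbors(node):
            g2 = g + miles
            if test_on_push and succ == goal:
                return path + [succ], g2
            heapq.heappush(frontier, (g2 + h[succ], next(tie), g2, path + [succ]))
    return None, float("inf")


print(a_star_tree("Corvallis", "Wilsonville", H_SLD))
# (['Corvallis', 'Albany', 'Salem', 'Wilsonville'], 67)
print(a_star_tree("Corvallis", "Wilsonville", H_SLD, test_on_push=True))
# (['Corvallis', 'McMinnville', 'Wilsonville'], 74)
```

With the goal test on pop, the 74-mile entry for Wilsonville sits in the queue behind Salem (f = 65), so the 67-mile route is found first; testing on push returns the 74-mile route as soon as McMinnville is expanded.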

Consistency

- A heuristic is consistent if, for every node n and every successor n' of n generated by any action a: h(n) ≤ c(n, a, n') + h(n'), where c(n, a, n') is the step cost of going from n to n' by doing action a
- A form of the triangle inequality: each side of a triangle cannot be longer than the sum of the other two sides
- Every consistent heuristic is also admissible
- A* using GRAPH-SEARCH is optimal if h(n) is consistent
- Most admissible heuristics are also consistent

Figure: two triangles with vertices n, n' and goal G. CONSISTENT: h(n) = 2, c(n, a, n') = 2, h(n') = 1 (2 ≤ 2 + 1). INCONSISTENT: h(n) = 4, c(n, a, n') = 2, h(n') = 1 (4 > 2 + 1).

- Claim: If h(n) is consistent, then the values of f(n) along any path are nondecreasing
- Proof: Suppose n' is a successor of n. We want to show f(n') ≥ f(n). Since g(n') = g(n) + c(n, a, n') for some action a:

$$
\begin{aligned}
f(n') &= g(n') + h(n') \\
      &= g(n) + c(n, a, n') + h(n') \\
      &\ge g(n) + h(n) \quad \text{(from the definition of consistency: } c(n, a, n') + h(n') \ge h(n)\text{)} \\
      &= f(n)
\end{aligned}
$$

- Thus, the sequence of nodes expanded by A* is in nondecreasing order of f(n)
- The first goal selected for expansion must be an optimal solution, since all later nodes will be at least as expensive

A* is Optimally Efficient

- Among optimal algorithms that expand search paths from the root, A* is optimally efficient for any given heuristic function
- Optimally efficient: no other optimal algorithm is guaranteed to expand fewer nodes than A*
  - Fine print: except that A* might possibly expand more nodes with f(n) = C*, where C* is the cost of the optimal path (tie-breaking issues)
- Any algorithm that does not expand all nodes with f(n) < C* runs the risk of missing the optimal solution

Evaluating A* Search

With a consistent heuristic, A* is complete, optimal and optimally efficient. Could this be the answer to our searching problems?
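The consistency condition and its consequence can both be checked on the example map. The sketch below uses hypothetical names and assumes two-way roads with the map's driving distances. It tests h(n) ≤ c(n, a, n') + h(n') on every road in both directions, including the two cases from the figure, and then runs A* with GRAPH-SEARCH (no state is expanded twice), recording f(n) for each node in expansion order so the nondecreasing sequence is visible.

```python
import heapq
from itertools import count

# Hypothetical encoding of the example map: driving distances in miles (two-way roads).
ROADS = {
    ("Corvallis", "McMinnville"): 46,
    ("Corvallis", "Albany"): 11,
    ("Albany", "Salem"): 26,
    ("Salem", "Wilsonville"): 30,
    ("McMinnville", "Wilsonville"): 28,
    ("McMinnville", "Portland"): 38,
    ("Portland", "Wilsonville"): 18,
}
# h(n): straight-line distance to Wilsonville.
H_SLD = {"Corvallis": 56, "Albany": 49, "Salem": 28,
         "Portland": 17, "McMinnville": 18, "Wilsonville": 0}


def neighbors(city):
    """Yield (neighbor, step_cost) pairs for a city."""
    for (a, b), miles in ROADS.items():
        if a == city:
            yield b, miles
        elif b == city:
            yield a, miles


def consistent_step(h_n, step_cost, h_succ):
    """Triangle inequality for one step: h(n) <= c(n, a, n') + h(n')."""
    return h_n <= step_cost + h_succ


def is_consistent(h):
    """Check the inequality for every road, in both directions."""
    return all(
        consistent_step(h[a], miles, h[b]) and consistent_step(h[b], miles, h[a])
        for (a, b), miles in ROADS.items()
    )


def a_star_graph(start, goal, h):
    """A* GRAPH-SEARCH that never re-expands a state.

    Returns (path, cost, f_trace); f_trace holds f(n) of each expanded node
    in expansion order, with the goal's f value included.
    """
    tie = count()  # tie-breaker so heapq never has to compare paths
    frontier = [(h[start], next(tie), 0, [start])]  # (f, tie, g, path)
    expanded = set()
    f_trace = []
    while frontier:
        f, _, g, path = heapq.heappop(frontier)
        node = path[-1]
        if node in expanded:
            continue  # with a consistent h, the first expanded copy was already optimal
        f_trace.append(f)
        if node == goal:  # goal test on pop
            return path, g, f_trace
        expanded.add(node)
        for succ, miles in neighbors(node):
            if succ not in expanded:
                g2 = g + miles
                heapq.heappush(frontier, (g2 + h[succ], next(tie), g2, path + [succ]))
    return None, float("inf"), f_trace


print(consistent_step(2, 2, 1), consistent_step(4, 2, 1))  # True False
print(is_consistent(H_SLD))  # True
path, cost, f_trace = a_star_graph("Corvallis", "Wilsonville", H_SLD)
print(path, cost)  # ['Corvallis', 'Albany', 'Salem', 'Wilsonville'] 67
print(f_trace)     # [56, 60, 64, 65, 67]
```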

The Dark Side of A*…

- Time complexity is exponential (although it can be reduced significantly with a good heuristic)
- The really bad news: space complexity is exponential (A* usually needs to store all generated states), so it typically runs out of space on large-scale problems
