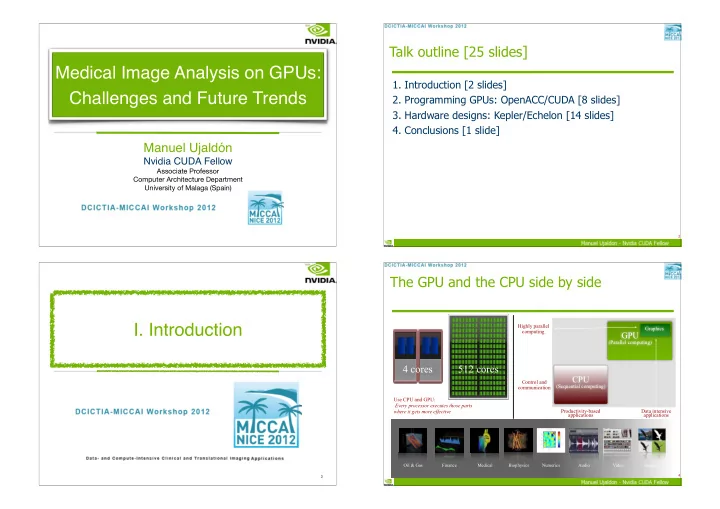

Talk outline [25 slides ] Medical Image Analysis on GPUs: 1. Introduction [2 slides] Challenges and Future Trends 2. Programming GPUs: OpenACC/CUDA [8 slides] 3. Hardware designs: Kepler/Echelon [14 slides] 4. Conclusions [1 slide] Manuel Ujaldón Nvidia CUDA Fellow Associate Professor Computer Architecture Department University of Malaga (Spain) 2 The GPU and the CPU side by side I. Introduction Highly parallel Graphics computing GPU (Parallel computing) 4 cores 512 cores CPU Control and (Sequential computing) communication Use CPU and GPU: Every processor executes those parts where it gets more effective Productivity-based Data intensive applications applications Oil & Gas Finance Medical Biophysics Numerics Audio Video Imaging 4 3

End users for GPUs II. Programming GPUs: Hundreds of researchers More than a million $ Large- scale OpenACC / CUDA clusters Between 50.000 and 1.000.000 Thousand of researchers Cluster of dollars Tesla servers Millions of researchers Less than 5000 dollars Tesla graphics card 5 6 OpenACC is an alternative to computer The OpenACC initiative scientist’s CUDA for average programmers The idea: Introduce a parallel programming standard for accelerators based on directives (like OpenMP), which: Are inserted into C, C++ or Fortran programs to direct the compiler to parallelize certain code sections. Provide a common code base: Multi-platform and multi-vendor. Enhance portability across other accelerators and multicore CPUs. Bring an ideal way to preserve investment in legacy applications by enabling an easy migration path to accelerated computing. Relax programming effort (and expected performance). First supercomputing customers: United States: Oak Ridge National Lab. Europe: Swiss National Supercomputing Centre. 7 8

OpenACC: The way it works OpenACC: Use of directives 9 10 OpenACC: Results CUDA: How we reached the current status Before 2005 2005 - 2007 2008 - 2012 11 12

CUDA: How programming elements CUDA: Hardware targets from a source code are related to the underlying hardware 13 14 Mapping CUDA elements to the GPU III. Hardware designs: GPU Thread Multiprocessor N Kepler and Echelon Thread block !!! Multiprocessor 2 Multiprocessor 1 Shared memory Memory integrated Registers Registers on the GPU Registers SIMD … Control Processor 1 Processor 2 Processor M Unit ! ! ! ! ! ! ! ! ! Grid 0 Constant External cache ! ! ! ! ! ! ! ! ! memory Texture (but cache inside !!! !!! !!! the Grid 1 graphics !!! !!! !!! card) Global memory !!! !!! !!! 16 15

The evolution of the GPU hardware Commercial models Fermi Fermi Kepler Kepler Architecture G80 GT200 GF100 GF104 GK104 GK110 Time frame 2006-07 2008-09 2010 2011 2012 2013 CUDA Compute 1.0 1.2 2.0 2.1 3.0 3.5 Capability (CCC) N (multiprocs.) 16 30 16 7 8 15 M (cores/multip.) 8 8 32 48 192 192 Number of cores 128 240 512 336 1536 2880 17 18 GPU multiprocessors: Kepler GK110: Enhancements versus Fermi From Fermi SMs to Kepler SMXs 19 20

The memory hierarchy: The memory hierarchy Fermi vs. Kepler GPU generation Fer Fermi Kepl Kepler Limi- Limi- Hardware model GF100 GF104 GK104 GK110 Impact tation tation CUDA Compute Capability (CCC) 2.0 2.1 3.0 3.5 Max. 32-bits registers / thread 63 63 63 255 SW. Working set 32-bit registers / Multiprocessor 32 K 32 K 64 K 64 K HW. Working set Shared memory / Multiprocessor 16-48KB 16-48KB 16-32-48KB 16-32-48 KB HW. Tile size Access L1 cache / Multiprocessor 48-16KB 48-16KB 48-32-16KB 48-32-16 KB HW. speed Access L2 cache / GPU HW. 768 KB. 768 KB. 1536 KB. 1536 KB. speed 21 22 GPUDirect Kepler vs. Fermi: Large scale computations Direct transfers between GPUs and network interfaces: GPU generation Fer Fermi Kepler Kepl Hardware model GF100 GF104 GK104 GK110 Limitation Impact Compute Capability (CCC) 2.0 2.1 3.0 3.5 Max. X dimension on CUDA grids 2^16-1 2^16-1 2^32-1 2^32-1 Software Problem size Problem Dynamic parallelism No No No Yes Hardware structure Thread Hyper-Q No No No Yes Hardware scheduling 23 24

The way we did biomedical Dynamic parallelism image analysis in the past High resolution image Image tiles (40x zoom) CPU GPU CPU GPU ` Computational units Mapa de clasificación … … CPU GPU PS 3 Assign classification tasks Label 1 background The GPU as a co-processor The GPU as an autonomous processor Label 2 undetermined 25 26 Parallelism can be deployed depending on data Parallelism can be deployed depending on volume of each computational region run-time evolution It makes easier the GPU computation. It broadens the range of GPU applications. Power can be assigned to regions proportionally to their requirements CUDA until 2012 CUDA on Kepler 27 28

Fermi without Hyper-Q: Temporal division on each SM. A look ahead: The Echelon execution model 100 Object Thread GPU utilization (%) A Swift operations: A B 50 Thread array creation. Global address space B Messages. Block transfers. 0 A B C D E F A Time C Collective operations. M Kepler with Hyper-Q: Simultaneous multip. SMX e m o 100 r D y h D D i e C F r r A a e C f GPU utilization (%) r X c k E l h u C B Load/Store y B B B 50 B E F F E F A B A E 0 D 0 Saved time Active message CPU processes... ...mapped onto the GPU 29 30 Conclusions Thanks for your attention! Kepler represents a new generation of GPU hardware, You can always reach me at: deploying thousands of cores to benefit from the CUDA email: ujaldon@uma.es model in large-scale applications. Major enhancements: Web page at the University of Malaga: http://manuel.ujaldon.es The GPU gets more autonomous, can create threads by itself. Web site at Nvidia: Threads scheduling gets more efficient, particularly on tiny http://research.nvidia.com/users/manuel-ujaldon threads. For additional information, read the Kepler whitepaper: Larger caches and faster memory bandwidth, also between GPUs. http://www.nvidia.com/object/nvidia-kepler.html The GPU can now execute much larger problem sizes and deploy To attend to the official talk given at GTC'12 (webinar): more parallelism. http://www.gputechconf.com/gtcnew/on-demand-gtc.php#1481 Biomedicine and image processing are two fields which To listen additional Nvidia material about GPU computing: can get more benefit from these enhancements. http://www.nvidia.com/object/webinar.html 31 32

Recommend

More recommend