Homework

Lecture 7: Linear Classification Methods

Final projects?
Groups
Topics
Proposal: week 5
Lecture 20 is the poster session, Jacobs Hall Lobby, snacks
Final report: 15 June

What is “linear” classification?
Classification is intrinsically non-linear: it puts non-identical things in the same class, so a difference in the input vector sometimes causes zero change in the answer.
“Linear classification” means that the part that adapts is linear. The adaptive part is followed by a fixed non-linearity. It may also be preceded by a fixed non-linearity (e.g. nonlinear basis functions).
$$y(\mathbf{x}) = \mathbf{w}^T\mathbf{x} + w_0 \qquad \text{(adaptive linear function)}$$
$$\text{Decision} = f(y(\mathbf{x})) \qquad \text{(fixed non-linear function)}$$
[Figure: the fixed non-linear function plotted as y against z, with y running from 0 to 1 and 0.5 marked.]
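A minimal sketch of this split, assuming NumPy, a hand-picked (hypothetical) weight vector, and a hard threshold at zero as the fixed non-linearity f. Only `w` and `w0` would be adapted during learning; `step` stays fixed.

```python
import numpy as np

def linear_score(X, w, w0):
    """Adaptive part: y(x) = w^T x + w0 for every row x of X."""
    return X @ w + w0

def step(y):
    """Fixed non-linearity: label 1 if y >= 0, else 0."""
    return (y >= 0).astype(int)

# Hypothetical parameters, chosen by hand for illustration.
w = np.array([1.0, -2.0])
w0 = 0.5

X = np.array([[0.0, 0.0],   # score  0.5 -> class 1
              [1.0, 1.0],   # score -0.5 -> class 0
              [3.0, 1.0]])  # score  1.5 -> class 1
labels = step(linear_score(X, w, w0))
print(labels)  # [1 0 1]

# Small changes to the input leave the decision unchanged.
perturbed = step(linear_score(X + 0.01, w, w0))
print((perturbed == labels).all())  # True
```

Swapping `step` for the logistic function would give a graded output in (0, 1) instead of a hard label, while the adaptive part stays the same.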

Representing the target values for classification
For two classes, we use a single-valued output that has target value 1 for the “positive” class and 0 (or −1) for the other class.
For probabilistic class labels, the target value can then be P(t=1), and the model output can also represent P(y=1).
For N classes, we often use a vector of N target values containing a single 1 for the correct class and zeros elsewhere.
For probabilistic labels, we can then use a vector of class probabilities as the target vector.
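A small sketch of these target encodings, assuming NumPy; `one_hot` is a hypothetical helper name, not something from the lecture.

```python
import numpy as np

# Two classes: targets in {0, 1}, or mapped to {-1, +1}.
t01 = np.array([1, 0, 1])
t_pm = 2 * t01 - 1          # 1 -> +1, 0 -> -1

def one_hot(labels, n_classes):
    """N-class targets: one row per example, a single 1 in the column of its class."""
    T = np.zeros((len(labels), n_classes))
    T[np.arange(len(labels)), labels] = 1.0
    return T

T = one_hot(np.array([2, 0, 1]), 3)
print(T)
# [[0. 0. 1.]
#  [1. 0. 0.]
#  [0. 1. 0.]]

# Probabilistic labels: each row is a vector of class probabilities (hypothetical values).
T_soft = np.array([[0.7, 0.2, 0.1],
                   [0.0, 0.5, 0.5]])
```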

Three approaches to classification
1. Use discriminant functions directly, without probabilities: convert the input vector into real values so that a simple operation (like thresholding) gives the class. Choose the real values to maximize the usable information about the class label that they contain.
2. Infer the conditional class probabilities $p(\text{class } C_k \mid \mathbf{x})$: compute the conditional probability of each class, then make a decision that minimizes some loss function.
3. Compare the probability of the input under separate, class-specific, generative models. E.g. fit a multivariate Gaussian to the input vectors of each class and see which Gaussian makes a test data vector most probable. (Is this the best bet?)
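A sketch of the third (generative) approach, assuming NumPy, synthetic 2-D data, and equal class priors, so the decision simply picks the Gaussian under which the test vector has the highest density. The helper names are hypothetical.

```python
import numpy as np

def fit_gaussian(X):
    """ML estimates of the mean and covariance of one class's input vectors."""
    mu = X.mean(axis=0)
    diff = X - mu
    return mu, diff.T @ diff / len(X)

def gaussian_log_density(x, mu, cov):
    """log N(x | mu, cov) for a single vector x."""
    d = len(mu)
    diff = x - mu
    _, logdet = np.linalg.slogdet(cov)
    maha = diff @ np.linalg.solve(cov, diff)   # squared Mahalanobis distance
    return -0.5 * (d * np.log(2 * np.pi) + logdet + maha)

# Synthetic data (assumption): two classes with different means.
rng = np.random.default_rng(0)
X0 = rng.normal(loc=[0.0, 0.0], scale=1.0, size=(200, 2))
X1 = rng.normal(loc=[3.0, 3.0], scale=1.0, size=(200, 2))
params = [fit_gaussian(X0), fit_gaussian(X1)]

def classify(x):
    """Pick the class whose fitted Gaussian makes x most probable (equal priors assumed)."""
    scores = [gaussian_log_density(x, mu, cov) for mu, cov in params]
    return int(np.argmax(scores))

print(classify(np.array([0.2, -0.1])), classify(np.array([2.9, 3.3])))  # 0 1
```

Ignoring the priors is exactly the assumption the slide's closing question invites you to examine: with unequal priors, the posterior would also weight each density by p(C_k).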

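Discriminant functions
The planar decision surface in data space for the simple linear discriminant function:
$$y(\mathbf{x}) = \mathbf{w}^T\mathbf{x} + w_0 \ge 0 \;\Rightarrow\; \text{class } C_1$$
For $\mathbf{x}$ on the plane, $y(\mathbf{x}) = 0$, so $\dfrac{\mathbf{w}^T\mathbf{x}}{\|\mathbf{w}\|} = -\dfrac{w_0}{\|\mathbf{w}\|}$, which is the distance of the plane from the origin.
Distance of a general point $\mathbf{x}$ from the plane: $y(\mathbf{x})/\|\mathbf{w}\|$ (signed, positive on the $C_1$ side).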
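A sketch of the signed-distance computation, assuming NumPy and hypothetical `w` and `w0` chosen so that ||w|| = 5.

```python
import numpy as np

def signed_distance(x, w, w0):
    """Signed distance from x to the plane w^T x + w0 = 0, i.e. y(x) / ||w||."""
    return (x @ w + w0) / np.linalg.norm(w)

w = np.array([3.0, 4.0])   # ||w|| = 5
w0 = -5.0

print(signed_distance(np.array([0.0, 0.0]), w, w0))  # -1.0: origin is on the negative side
print(signed_distance(np.array([3.0, 4.0]), w, w0))  # (9 + 16 - 5) / 5 = 4.0

# The point of the plane closest to the origin, -w0 * w / ||w||^2, has y(x) = 0.
x_on_plane = -w0 * w / (w @ w)                        # [0.6, 0.8]
```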

Discriminant functions for N > 2 classes
One possibility is to use N two-way discriminant functions, each of which discriminates one class from the rest.
Another possibility is to use N(N−1)/2 two-way discriminant functions, each of which discriminates between two particular classes.
Both of these methods have problems. [Figure panels: “More than one good answer” and “Two-way preferences need not be transitive!”]

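A simple solution (4.1.2)
Use N discriminant functions $y_i, y_j, y_k, \ldots$ and pick the max.
This is guaranteed to give consistent and convex decision regions if the $y$ are linear, $y_k(\mathbf{x}) = \mathbf{w}_k^T\mathbf{x} + w_{k0}$:
$$y_k(\mathbf{x}_A) > y_j(\mathbf{x}_A) \quad \text{and} \quad y_k(\mathbf{x}_B) > y_j(\mathbf{x}_B)$$
implies (for $0 \le \alpha \le 1$) that
$$y_k(\alpha\mathbf{x}_A + (1-\alpha)\mathbf{x}_B) > y_j(\alpha\mathbf{x}_A + (1-\alpha)\mathbf{x}_B),$$
because $y_k - y_j$ is linear, so its value at the mixed point is $\alpha$ times its (positive) value at $\mathbf{x}_A$ plus $(1-\alpha)$ times its (positive) value at $\mathbf{x}_B$.
Decision boundary? Between classes $k$ and $j$ it is where $y_k(\mathbf{x}) = y_j(\mathbf{x})$, i.e. the hyperplane $(\mathbf{w}_k - \mathbf{w}_j)^T\mathbf{x} + (w_{k0} - w_{j0}) = 0$.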
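A sketch, assuming NumPy and randomly drawn (hypothetical) weights, that takes the max over K linear discriminants and then checks the convexity argument empirically: for pairs of points assigned the same class, every sampled point on the segment between them gets that class too.

```python
import numpy as np

rng = np.random.default_rng(1)
K, D = 3, 2
W = rng.normal(size=(D, K))   # hypothetical weights: column k is w_k
w0 = rng.normal(size=K)       # hypothetical biases w_k0

def predict(X):
    """Evaluate y_k(x) = w_k^T x + w_k0 for all k and pick the largest."""
    return np.argmax(X @ W + w0, axis=1)

# Random pairs of points; keep only the pairs that receive the same class.
XA = 3 * rng.normal(size=(500, D))
XB = 3 * rng.normal(size=(500, D))
same = predict(XA) == predict(XB)

# Every sampled point on the segment between such a pair should get the same class.
violations = 0
for a in np.linspace(0.0, 1.0, 11):
    X_mid = a * XA + (1 - a) * XB
    violations += int(np.sum(predict(X_mid)[same] != predict(XA)[same]))
print(violations)  # 0
```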

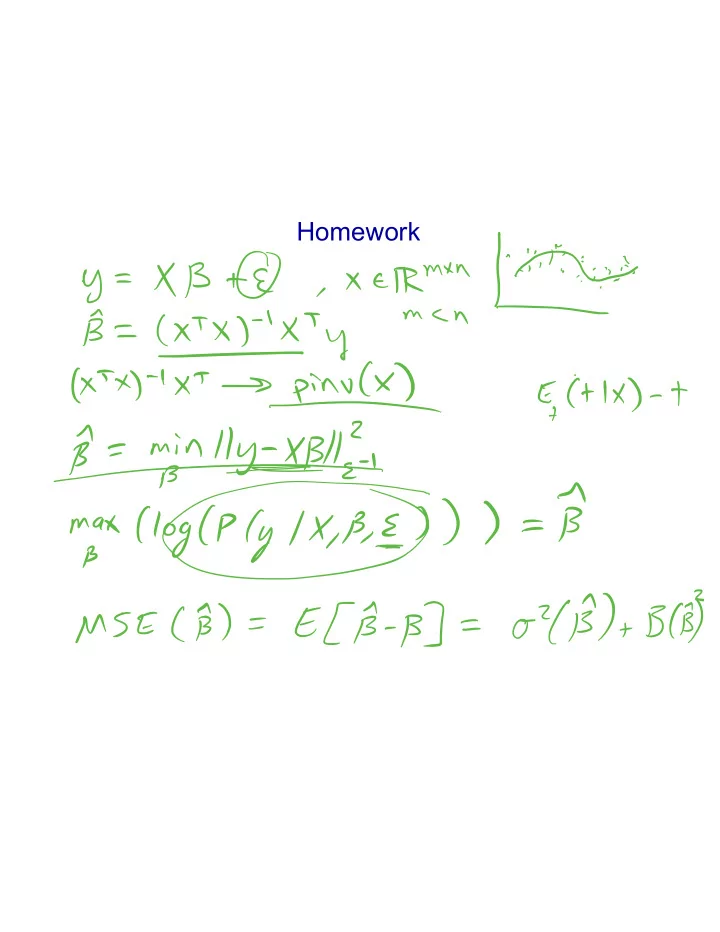

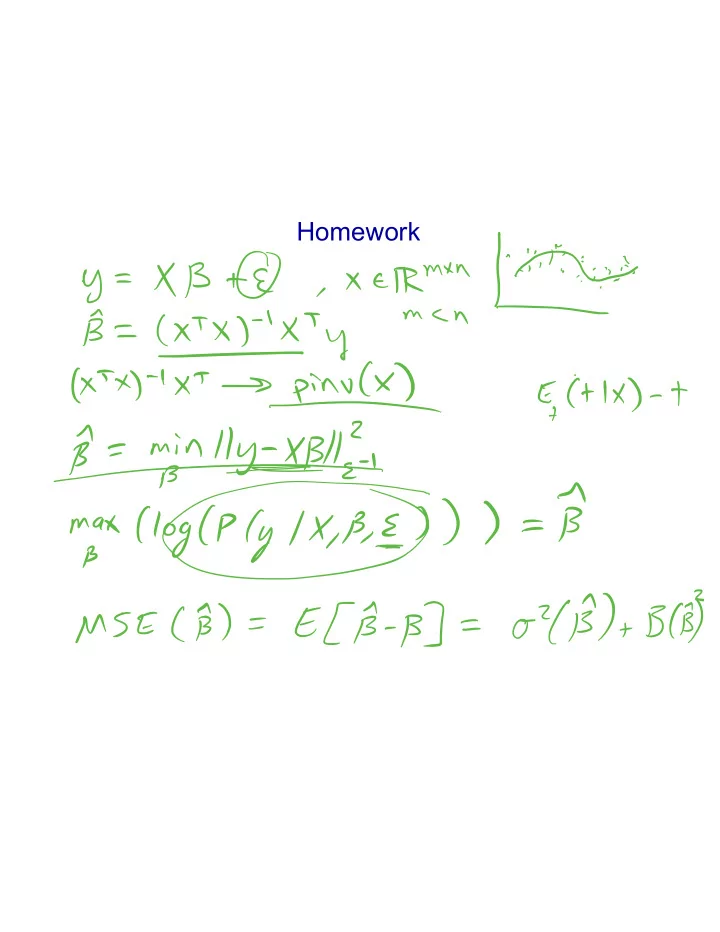

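Maximum Likelihood and Least Squares (from lecture 3)
Computing the gradient of the log likelihood and setting it to zero yields
$$0 = \sum_{n=1}^{N}\left(t_n - \mathbf{w}^T\boldsymbol{\phi}(\mathbf{x}_n)\right)\boldsymbol{\phi}(\mathbf{x}_n)^T$$
Solving for $\mathbf{w}$,
$$\mathbf{w}_{ML} = \left(\boldsymbol{\Phi}^T\boldsymbol{\Phi}\right)^{-1}\boldsymbol{\Phi}^T\mathbf{t} = \boldsymbol{\Phi}^\dagger\mathbf{t}$$
where $\boldsymbol{\Phi}^\dagger \equiv \left(\boldsymbol{\Phi}^T\boldsymbol{\Phi}\right)^{-1}\boldsymbol{\Phi}^T$ is the Moore-Penrose pseudo-inverse and $\boldsymbol{\Phi}$ is the design matrix with elements $\Phi_{nj} = \phi_j(\mathbf{x}_n)$.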
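A quick numerical check, assuming NumPy and synthetic 1-D data with basis functions 1 and x, that the normal-equation solution and the pseudo-inverse solution coincide when the design matrix has full column rank.

```python
import numpy as np

rng = np.random.default_rng(0)
N = 50
x = rng.uniform(-1, 1, size=N)
t = 0.5 - 2.0 * x + 0.1 * rng.normal(size=N)   # synthetic targets (assumption)

# Design matrix with basis functions phi_0(x) = 1 and phi_1(x) = x.
Phi = np.column_stack([np.ones(N), x])

w_normal = np.linalg.solve(Phi.T @ Phi, Phi.T @ t)   # (Phi^T Phi)^{-1} Phi^T t
w_pinv = np.linalg.pinv(Phi) @ t                     # Phi^dagger t
print(np.allclose(w_normal, w_pinv))  # True
```

Solving the normal equations with `solve` avoids forming an explicit inverse; `pinv` (computed via an SVD) also handles a rank-deficient design matrix, where the normal equations break down.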

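LSQ for classification
Each class $C_k$ is described by its own linear model, so that
$$y_k(\mathbf{x}) = \mathbf{w}_k^T\mathbf{x} + w_{k0} \qquad (4.13)$$
where $k = 1, \ldots, K$. We can conveniently group these together using vector notation, so that
$$\mathbf{y}(\mathbf{x}) = \widetilde{\mathbf{W}}^T\tilde{\mathbf{x}} \qquad (4.14)$$
where the $k$th column of $\widetilde{\mathbf{W}}$ is $\tilde{\mathbf{w}}_k = (w_{k0}, \mathbf{w}_k^T)^T$ and $\tilde{\mathbf{x}} = (1, \mathbf{x}^T)^T$.
Consider a training set $\{\mathbf{x}_n, \mathbf{t}_n\}$, $n = 1, \ldots, N$. Define $\widetilde{\mathbf{X}}$ as the matrix whose $n$th row is $\tilde{\mathbf{x}}_n^T$, and $\mathbf{T}$ as the matrix whose $n$th row is $\mathbf{t}_n^T$.
LSQ solution:
$$\widetilde{\mathbf{W}} = \left(\widetilde{\mathbf{X}}^T\widetilde{\mathbf{X}}\right)^{-1}\widetilde{\mathbf{X}}^T\mathbf{T} = \widetilde{\mathbf{X}}^\dagger\mathbf{T} \qquad (4.16)$$
And prediction:
$$\mathbf{y}(\mathbf{x}) = \widetilde{\mathbf{W}}^T\tilde{\mathbf{x}} = \mathbf{T}^T\left(\widetilde{\mathbf{X}}^\dagger\right)^T\tilde{\mathbf{x}} \qquad (4.17)$$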
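A sketch of least-squares classification following (4.16) and (4.17), assuming NumPy, three synthetic Gaussian clusters, and one-hot targets. The last line checks a known property of this setup: when every target vector sums to 1 and a bias is included, the K outputs sum to 1 for any input, even though individual outputs are not constrained to lie in [0, 1].

```python
import numpy as np

rng = np.random.default_rng(0)
means = np.array([[0.0, 0.0], [4.0, 0.0], [0.0, 4.0]])   # synthetic class centres
X = np.vstack([rng.normal(m, 0.7, size=(100, 2)) for m in means])
labels = np.repeat(np.arange(3), 100)
T = np.eye(3)[labels]                            # one-hot targets, shape (N, K)

X_tilde = np.column_stack([np.ones(len(X)), X])  # prepend x_0 = 1 for the bias
W_tilde = np.linalg.pinv(X_tilde) @ T            # (4.16): W~ = X~^dagger T

def predict(X_new):
    """Apply (4.17) to each row, then pick the class with the largest output."""
    Xn = np.column_stack([np.ones(len(X_new)), X_new])
    return np.argmax(Xn @ W_tilde, axis=1)

accuracy = np.mean(predict(X) == labels)
outputs_sum_to_one = np.allclose((X_tilde @ W_tilde).sum(axis=1), 1.0)
```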

Using “least squares” for classification
It does not work as well as better methods, but it is easy: it reduces classification to least squares regression.
[Figure panels: “logistic regression” and “least squares regression”.]

PCA doesn’t work well

A picture showing the advantage of Fisher’s linear discriminant.
When projected onto the line joining the class means, the classes are not well separated.
Fisher chooses a direction that makes the projected classes much tighter, even though their projected means are less far apart.

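Math of Fisher’s linear discriminants
What linear transformation is best for discrimination? Project each input onto a vector $\mathbf{w}$:
$$y = \mathbf{w}^T\mathbf{x}$$
The projection onto the vector separating the class means seems sensible:
$$\mathbf{w} \propto \mathbf{m}_2 - \mathbf{m}_1$$
But we also want small variance within each class:
$$s_1^2 = \sum_{n \in C_1}(y_n - m_1)^2, \qquad s_2^2 = \sum_{n \in C_2}(y_n - m_2)^2$$
where $m_k = \mathbf{w}^T\mathbf{m}_k$ is the projected mean of class $C_k$.
Fisher’s objective function is:
$$J(\mathbf{w}) = \frac{(m_2 - m_1)^2}{s_1^2 + s_2^2} = \frac{\text{between}}{\text{within}}$$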

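More math of Fisher’s linear discriminants
$$J(\mathbf{w}) = \frac{(m_2 - m_1)^2}{s_1^2 + s_2^2} = \frac{\mathbf{w}^T\mathbf{S}_B\mathbf{w}}{\mathbf{w}^T\mathbf{S}_W\mathbf{w}}$$
$$\mathbf{S}_B = (\mathbf{m}_2 - \mathbf{m}_1)(\mathbf{m}_2 - \mathbf{m}_1)^T$$
$$\mathbf{S}_W = \sum_{n \in C_1}(\mathbf{x}_n - \mathbf{m}_1)(\mathbf{x}_n - \mathbf{m}_1)^T + \sum_{n \in C_2}(\mathbf{x}_n - \mathbf{m}_2)(\mathbf{x}_n - \mathbf{m}_2)^T$$
Optimal solution:
$$\mathbf{w} \propto \mathbf{S}_W^{-1}(\mathbf{m}_2 - \mathbf{m}_1)$$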
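A sketch comparing the two directions, assuming NumPy and two synthetic classes that share an elongated, correlated covariance (the means and covariance are assumptions chosen so that the mean difference is not aligned with an axis of the covariance). `fisher_J` evaluates the objective above on the projected values.

```python
import numpy as np

rng = np.random.default_rng(0)
cov = np.array([[3.0, 2.5], [2.5, 3.0]])            # shared, correlated covariance (assumption)
X1 = rng.multivariate_normal([0.0, 0.0], cov, size=300)
X2 = rng.multivariate_normal([2.0, 0.0], cov, size=300)

m1, m2 = X1.mean(axis=0), X2.mean(axis=0)
S_W = (X1 - m1).T @ (X1 - m1) + (X2 - m2).T @ (X2 - m2)   # within-class scatter

def fisher_J(w):
    """J(w) = (m2 - m1)^2 / (s1^2 + s2^2), computed on the projections y = w^T x."""
    y1, y2 = X1 @ w, X2 @ w
    between = (y2.mean() - y1.mean()) ** 2
    within = np.sum((y1 - y1.mean()) ** 2) + np.sum((y2 - y2.mean()) ** 2)
    return between / within

w_means = m2 - m1                          # line joining the class means
w_fisher = np.linalg.solve(S_W, m2 - m1)   # w proportional to S_W^{-1}(m2 - m1)
print(fisher_J(w_means) < fisher_J(w_fisher))  # True
```

Since J(w) is unchanged by rescaling w, only the direction of the Fisher solution matters.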

We have probabilistic classification!

Probabilistic Generative Models for Discrimination (Bishop p 196)
Use a generative model of the input vectors for each class, and see which model makes a test input vector most probable.
The posterior probability of class 1 is:
$$p(C_1 \mid \mathbf{x}) = \frac{p(C_1)\,p(\mathbf{x} \mid C_1)}{p(C_1)\,p(\mathbf{x} \mid C_1) + p(C_0)\,p(\mathbf{x} \mid C_0)} = \frac{1}{1 + e^{-z}}$$
where
$$z = \ln\frac{p(C_1)\,p(\mathbf{x} \mid C_1)}{p(C_0)\,p(\mathbf{x} \mid C_0)} = \ln\frac{p(C_1 \mid \mathbf{x})}{1 - p(C_1 \mid \mathbf{x})}$$
$z$ is called the logit and is given by the log odds.
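A numerical check of this identity, assuming NumPy and hypothetical prior and likelihood values: the direct Bayes formula and the logistic of the log odds give the same posterior.

```python
import numpy as np

def posterior_direct(prior1, lik1, prior0, lik0):
    """p(C1|x) = p(C1)p(x|C1) / (p(C1)p(x|C1) + p(C0)p(x|C0))."""
    return prior1 * lik1 / (prior1 * lik1 + prior0 * lik0)

def posterior_via_logit(prior1, lik1, prior0, lik0):
    """Compute the logit z (log odds), then squash it with 1 / (1 + e^{-z})."""
    z = np.log(prior1 * lik1) - np.log(prior0 * lik0)
    return 1.0 / (1.0 + np.exp(-z)), z

# Hypothetical numbers: p(C1) = 0.3, p(x|C1) = 0.8, p(C0) = 0.7, p(x|C0) = 0.1.
p_direct = posterior_direct(0.3, 0.8, 0.7, 0.1)       # 0.24 / 0.31
p_logit, z = posterior_via_logit(0.3, 0.8, 0.7, 0.1)
```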

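An example for continuous inputs
Assume the input vectors for each class are Gaussian, and all classes have the same covariance matrix:
$$p(\mathbf{x} \mid C_k) = a \exp\left\{-\frac{1}{2}(\mathbf{x} - \boldsymbol{\mu}_k)^T\boldsymbol{\Sigma}^{-1}(\mathbf{x} - \boldsymbol{\mu}_k)\right\}$$
where $a$ is a normalizing constant and $\boldsymbol{\Sigma}^{-1}$ is the inverse covariance matrix.
For two classes, $C_1$ and $C_0$, the posterior is a logistic:
$$p(C_1 \mid \mathbf{x}) = \sigma(\mathbf{w}^T\mathbf{x} + w_0)$$
$$\mathbf{w} = \boldsymbol{\Sigma}^{-1}(\boldsymbol{\mu}_1 - \boldsymbol{\mu}_0)$$
$$w_0 = -\frac{1}{2}\boldsymbol{\mu}_1^T\boldsymbol{\Sigma}^{-1}\boldsymbol{\mu}_1 + \frac{1}{2}\boldsymbol{\mu}_0^T\boldsymbol{\Sigma}^{-1}\boldsymbol{\mu}_0 + \ln\frac{p(C_1)}{p(C_0)}$$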
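A numerical check, assuming NumPy and hypothetical means, shared covariance, and priors, that Bayes' rule with the two Gaussians gives exactly sigma(w^T x + w_0) with w and w_0 as above.

```python
import numpy as np

def gauss_pdf(x, mu, Sigma):
    """Multivariate Gaussian density N(x | mu, Sigma)."""
    d = len(mu)
    diff = x - mu
    norm = 1.0 / np.sqrt((2 * np.pi) ** d * np.linalg.det(Sigma))
    return norm * np.exp(-0.5 * diff @ np.linalg.solve(Sigma, diff))

# Hypothetical parameters.
mu1, mu0 = np.array([1.0, 2.0]), np.array([-1.0, 0.5])
Sigma = np.array([[2.0, 0.3], [0.3, 1.0]])
p1, p0 = 0.4, 0.6

Sinv = np.linalg.inv(Sigma)
w = Sinv @ (mu1 - mu0)
w0 = -0.5 * mu1 @ Sinv @ mu1 + 0.5 * mu0 @ Sinv @ mu0 + np.log(p1 / p0)

def posterior_bayes(x):
    a1 = p1 * gauss_pdf(x, mu1, Sigma)
    a0 = p0 * gauss_pdf(x, mu0, Sigma)
    return a1 / (a1 + a0)

def posterior_logistic(x):
    return 1.0 / (1.0 + np.exp(-(w @ x + w0)))

x = np.array([0.3, -0.7])
print(np.isclose(posterior_bayes(x), posterior_logistic(x)))  # True
```

The quadratic terms in x cancel only because both classes use the same Sigma; that is what makes the log odds linear.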

$$z = \ln\frac{p(\mathbf{x} \mid C_1)\,p(C_1)}{p(\mathbf{x} \mid C_0)\,p(C_0)}$$

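The role of the inverse covariance matrix
If the Gaussian is spherical, there is no need to worry about the covariance matrix.
So, start by transforming the data space to make the Gaussian spherical. This is called “whitening” the data. It pre-multiplies by the matrix square root of the inverse covariance matrix.
In transformed space, the weight vector is the difference between the transformed means:
$$\mathbf{w} = \boldsymbol{\Sigma}^{-1}(\boldsymbol{\mu}_1 - \boldsymbol{\mu}_0)$$
gives the same value for $\mathbf{w}^T\mathbf{x}$ as
$$\mathbf{w}_{aff} = \boldsymbol{\Sigma}^{-1/2}\boldsymbol{\mu}_1 - \boldsymbol{\Sigma}^{-1/2}\boldsymbol{\mu}_0 \quad \text{and} \quad \mathbf{x}_{aff} = \boldsymbol{\Sigma}^{-1/2}\mathbf{x}$$
give for $\mathbf{w}_{aff}^T\mathbf{x}_{aff}$, since (with $\boldsymbol{\Sigma}^{-1/2}$ symmetric) $\mathbf{w}_{aff}^T\mathbf{x}_{aff} = (\boldsymbol{\mu}_1 - \boldsymbol{\mu}_0)^T\boldsymbol{\Sigma}^{-1/2}\boldsymbol{\Sigma}^{-1/2}\mathbf{x} = \mathbf{w}^T\mathbf{x}$.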
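A sketch of the whitening argument, assuming NumPy and a hypothetical covariance and means. The inverse square root used here is the symmetric one obtained from an eigendecomposition, which is one of several valid choices.

```python
import numpy as np

Sigma = np.array([[2.0, 0.3], [0.3, 1.0]])
mu1, mu0 = np.array([1.0, 2.0]), np.array([-1.0, 0.5])

# Symmetric inverse square root: Sigma^{-1/2} = V diag(1 / sqrt(lam)) V^T.
lam, V = np.linalg.eigh(Sigma)
S_inv_half = V @ np.diag(1.0 / np.sqrt(lam)) @ V.T

w = np.linalg.solve(Sigma, mu1 - mu0)          # w = Sigma^{-1}(mu1 - mu0)
w_aff = S_inv_half @ mu1 - S_inv_half @ mu0    # difference of whitened means

x = np.array([0.3, -0.7])
x_aff = S_inv_half @ x                         # whitened input
print(np.isclose(w @ x, w_aff @ x_aff))  # True
```

In whitened space the covariance becomes the identity, `S_inv_half @ Sigma @ S_inv_half`, which is why the plain difference of means is the right weight vector there.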

The posterior when the covariance matrices are different for different classes (Bishop Fig )
The decision surface is planar when the covariance matrices are the same and quadratic when they are not.
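When the class covariances differ, the log odds z(x) is quadratic in x. The sketch below, assuming NumPy and hypothetical parameters, expands z(x) as x^T A x + b^T x + c with A = -(1/2)(inv(S1) - inv(S0)) and checks the expansion against the direct computation; A vanishes when the covariances are equal, which leaves the planar case.

```python
import numpy as np

def log_gauss(x, mu, S):
    """log N(x | mu, S)."""
    d = len(mu)
    diff = x - mu
    _, logdet = np.linalg.slogdet(S)
    return -0.5 * (d * np.log(2 * np.pi) + logdet + diff @ np.linalg.solve(S, diff))

def quadratic_coeffs(mu1, S1, mu0, S0, p1, p0):
    """Coefficients of the log odds z(x) = x^T A x + b^T x + c."""
    P1, P0 = np.linalg.inv(S1), np.linalg.inv(S0)
    A = -0.5 * (P1 - P0)
    b = P1 @ mu1 - P0 @ mu0
    c = (-0.5 * mu1 @ P1 @ mu1 + 0.5 * mu0 @ P0 @ mu0
         - 0.5 * np.linalg.slogdet(S1)[1] + 0.5 * np.linalg.slogdet(S0)[1]
         + np.log(p1 / p0))
    return A, b, c

# Hypothetical parameters with different covariances.
mu1, mu0 = np.array([1.0, 0.0]), np.array([-1.0, 0.5])
S1 = np.array([[2.0, 0.3], [0.3, 1.0]])
S0 = np.array([[0.5, 0.0], [0.0, 1.5]])
p1, p0 = 0.5, 0.5
A, b, c = quadratic_coeffs(mu1, S1, mu0, S0, p1, p0)

x = np.array([0.3, -0.7])
z_direct = log_gauss(x, mu1, S1) + np.log(p1) - log_gauss(x, mu0, S0) - np.log(p0)
z_quad = x @ A @ x + b @ x + c
```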

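Bernoulli distribution
Random variable $x \in \{0, 1\}$. Coin flipping: heads = 1, tails = 0.
Bernoulli distribution:
$$\mathrm{Bern}(x \mid \mu) = \mu^x(1 - \mu)^{1 - x}, \qquad p(x = 1 \mid \mu) = \mu$$
ML for Bernoulli: given a data set $\mathcal{D} = \{x_1, \ldots, x_N\}$,
$$\ln p(\mathcal{D} \mid \mu) = \sum_{n=1}^{N}\left[x_n\ln\mu + (1 - x_n)\ln(1 - \mu)\right], \qquad \mu_{ML} = \frac{1}{N}\sum_{n=1}^{N}x_n$$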
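A sketch of the ML estimate on hypothetical coin flips, assuming NumPy: the sample mean, cross-checked by a brute-force search over a grid of mu values.

```python
import numpy as np

def bernoulli_pmf(x, mu):
    """Bern(x | mu) = mu^x (1 - mu)^(1 - x) for x in {0, 1}."""
    return mu ** x * (1 - mu) ** (1 - x)

def bernoulli_log_likelihood(data, mu):
    """Sum over the data of x_n ln(mu) + (1 - x_n) ln(1 - mu)."""
    return np.sum(data * np.log(mu) + (1 - data) * np.log(1 - mu))

flips = np.array([1, 0, 1, 1, 0, 1, 1, 0])   # hypothetical coin flips, heads = 1
mu_ml = flips.mean()                          # ML estimate: fraction of heads
print(mu_ml)  # 0.625

# Brute-force check: the best mu on a grid lies next to mu_ml.
grid = np.linspace(0.01, 0.99, 99)
best_on_grid = grid[np.argmax([bernoulli_log_likelihood(flips, m) for m in grid])]
```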

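The logistic function
The output is a smooth function of the inputs and the weights:
$$z = \mathbf{w}^T\mathbf{x} + w_0, \qquad y = \sigma(z) = \frac{1}{1 + e^{-z}}$$
$$\frac{\partial z}{\partial w_i} = x_i, \qquad \frac{\partial z}{\partial x_i} = w_i, \qquad \frac{dy}{dz} = y(1 - y)$$
[Figure: y = σ(z), rising from 0 to 1 and passing through 0.5 at z = 0.]
It’s odd to express the derivative in terms of y.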
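A finite-difference check of dy/dz = y(1 - y), assuming NumPy.

```python
import numpy as np

def sigmoid(z):
    """Logistic function 1 / (1 + e^{-z})."""
    return 1.0 / (1.0 + np.exp(-z))

z = np.linspace(-5, 5, 11)
y = sigmoid(z)
h = 1e-6
numeric = (sigmoid(z + h) - sigmoid(z - h)) / (2 * h)   # central difference
analytic = y * (1 - y)                                   # dy/dz written in terms of y
print(np.allclose(numeric, analytic, atol=1e-8))  # True
print(sigmoid(0.0))  # 0.5
```

By the chain rule, the derivative with respect to a weight is then y(1 - y) x_i, using the partial derivative of z above.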

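Logistic regression (Bishop 205)
$$p(C_1 \mid \mathbf{x}) = \sigma(\mathbf{w}^T\mathbf{x})$$
Observations: input vectors $\mathbf{x}_n$ with targets $t_n \in \{0, 1\}$, $n = 1, \ldots, N$.
Likelihood: with $y_n = \sigma(\mathbf{w}^T\mathbf{x}_n)$,
$$p(t_n \mid \mathbf{x}_n, \mathbf{w}) = y_n^{t_n}(1 - y_n)^{1 - t_n}, \qquad p(\mathbf{t} \mid \mathbf{X}, \mathbf{w}) = \prod_{n=1}^{N}y_n^{t_n}(1 - y_n)^{1 - t_n}$$
Log-likelihood: minimize the negative log likelihood
$$E(\mathbf{w}) = -\ln p(\mathbf{t} \mid \mathbf{X}, \mathbf{w}) = -\sum_{n=1}^{N}\left[t_n\ln y_n + (1 - t_n)\ln(1 - y_n)\right]$$
Derivative:
$$\nabla_{\mathbf{w}}E(\mathbf{w}) = \sum_{n=1}^{N}(y_n - t_n)\,\mathbf{x}_n$$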
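A sketch, assuming NumPy and random (hypothetical) data, that codes E(w) and its gradient and checks the gradient formula against central finite differences. A column of ones in X plays the role of the bias.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def neg_log_likelihood(w, X, t):
    """E(w) = -sum_n [t_n ln y_n + (1 - t_n) ln(1 - y_n)], with y_n = sigma(w^T x_n)."""
    y = sigmoid(X @ w)
    return -np.sum(t * np.log(y) + (1 - t) * np.log(1 - y))

def gradient(w, X, t):
    """grad E(w) = sum_n (y_n - t_n) x_n."""
    return X.T @ (sigmoid(X @ w) - t)

rng = np.random.default_rng(0)
X = np.column_stack([np.ones(20), rng.normal(size=(20, 2))])  # first column = 1 acts as the bias
t = rng.integers(0, 2, size=20).astype(float)
w = rng.normal(size=3)

eps = 1e-6
numeric = np.array([
    (neg_log_likelihood(w + eps * e, X, t) - neg_log_likelihood(w - eps * e, X, t)) / (2 * eps)
    for e in np.eye(3)
])
print(np.allclose(numeric, gradient(w, X, t), atol=1e-5))  # True
```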

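Logistic regression (page 205)
When there are only two classes, we can model the conditional probability of the positive class as
$$p(C_1 \mid \mathbf{x}) = \sigma(\mathbf{w}^T\mathbf{x} + w_0), \quad \text{where} \quad \sigma(z) = \frac{1}{1 + \exp(-z)}$$
If we use the right error function, something nice happens: the gradient of the logistic and the gradient of the error function cancel each other. Differentiating the error with respect to $y_n$ gives $(y_n - t_n)/\left(y_n(1 - y_n)\right)$, and the logistic contributes $dy_n/dz_n = y_n(1 - y_n)$, so
$$E(\mathbf{w}) = -\ln p(\mathbf{t} \mid \mathbf{w}), \qquad \nabla E(\mathbf{w}) = \sum_{n=1}^{N}(y_n - t_n)\,\mathbf{x}_n$$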
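A minimal training loop that uses this gradient, assuming NumPy, synthetic two-class data, and plain batch gradient descent; the learning rate and iteration count are arbitrary choices, not from the lecture. The gradient is averaged over examples so that the step size does not depend on N.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def fit_logistic(X, t, lr=0.1, n_iters=2000):
    """Batch gradient descent on E(w) using grad E = sum_n (y_n - t_n) x_n.
    X is assumed to already contain a column of ones for the bias w0."""
    w = np.zeros(X.shape[1])
    for _ in range(n_iters):
        grad = X.T @ (sigmoid(X @ w) - t)
        w -= lr * grad / len(t)        # averaged gradient step
    return w

# Synthetic data (assumption): two Gaussian blobs.
rng = np.random.default_rng(0)
X0 = rng.normal([-1.5, -1.5], 1.0, size=(100, 2))
X1 = rng.normal([1.5, 1.5], 1.0, size=(100, 2))
X = np.column_stack([np.ones(200), np.vstack([X0, X1])])
t = np.concatenate([np.zeros(100), np.ones(100)])

w = fit_logistic(X, t)
accuracy = np.mean((sigmoid(X @ w) >= 0.5) == t)
```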

Recommended

More recommended