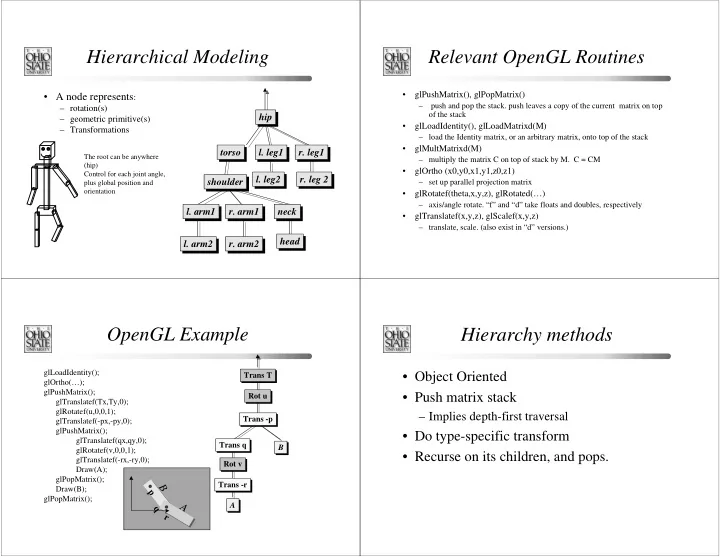

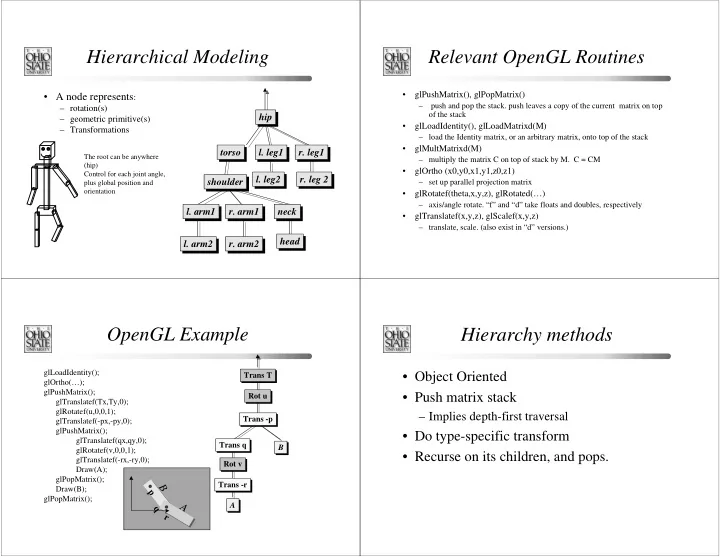

Hierarchical Modeling
• A node represents:
  – rotation(s)
  – geometric primitive(s)
  – Transformations
• The root can be anywhere (hip)
• Control for each joint angle, plus global position and orientation

[Figure: hierarchy tree. Node labels: hip, torso, l. leg1, r. leg1, l. leg2, r. leg2, shoulder, l. arm1, r. arm1, neck, head, l. arm2, r. arm2.]

Relevant OpenGL Routines
• glPushMatrix(), glPopMatrix()
  – push and pop the stack. push leaves a copy of the current matrix on top of the stack
• glLoadIdentity(), glLoadMatrixd(M)
  – load the identity matrix, or an arbitrary matrix, onto the top of the stack
• glMultMatrixd(M)
  – multiply the matrix C on top of the stack by M. C = CM
• glOrtho(x0,x1,y0,y1,z0,z1)
  – set up a parallel projection matrix (arguments are left, right, bottom, top, near, far)
• glRotatef(theta,x,y,z), glRotated(…)
  – axis/angle rotate. "f" and "d" take floats and doubles, respectively
• glTranslatef(x,y,z), glScalef(x,y,z)
  – translate, scale (both also exist in "d" versions)

OpenGL Example

    glLoadIdentity();
    glOrtho(…);
    glPushMatrix();
      glTranslatef(Tx,Ty,0);
      glRotatef(u,0,0,1);
      glTranslatef(-px,-py,0);
      glPushMatrix();
        glTranslatef(qx,qy,0);
        glRotatef(v,0,0,1);
        glTranslatef(-rx,-ry,0);
        Draw(A);
      glPopMatrix();
      Draw(B);
    glPopMatrix();

[Figure: transform chain for the example. Labels: Trans T, Rot u, Trans -p, Trans q, Rot v, Trans -r, objects A and B, points p, q, r.]

Hierarchy methods
• Object Oriented
• Push matrix stack
  – Implies depth-first traversal
• Do type-specific transform
• Recurse on its children, then pop.

Interactive Applications
• How do we add interactive control?
• Many different paradigms
  – Examiner => Object in hand
  – Fly-thru => In a virtual vehicle pod
  – Walk-thru => Constrained to stay on ground.
  – Move-to / re-center => Pick a location to fly to.
• Collision detection?
  – Can we pass thru objects like ghosts?

Interactive Applications (continued)
• What do we use to control the motion?
  – Mouse
    • One-button, two-button, three-button
    • What button does what?
    • Only when mouse is clicked down, released up, or continuously as the mouse moves?
  – Keyboard
    • Arrow keys?

Input Devices
• Interactive user control devices
  – Mouse
  – 3D pointer - Polhemus, Microscribe, …
  – Spaceball
  – Hand-held wand
  – Data Glove
  – Gesture
  – Custom

A Virtual Trackball
• A rather standard and easy-to-use interface.
• Examiner type of interaction.
• Consider a hemi-sphere over the image-plane.
• Each point in the image is projected onto the hemi-sphere.

A Virtual Trackball (continued)
• Points inside the projection of the hemi-sphere are mapped up to the surface.
  – Determine distance from point (mouse position) to the image-plane center.
  – Scale such that points on the silhouette of the sphere have unit length.
  – Add the z-coordinate that normalizes the vector, $z = \sqrt{1 - x^2 - y^2}$.

A Virtual Trackball (continued)
• Do this for all points.
• Keep track of the last trackball (mouse) location and the current location.
• This is the direction we want the scene to move in.
• Take the direction perpendicular to this and use it as the axis of rotation.
• Use the distance between the two points to determine the rotation angle (or amount).

A Virtual Trackball (continued)
• Rotation axis: $\vec{u} = \vec{v}_1 \times \vec{v}_2$
• Where $\vec{v}_1$ and $\vec{v}_2$ are the mouse points mapped to the sphere.

A Virtual Trackball (continued)
• Use glRotatef( angle, u_x, u_y, u_z )
• Slight problem: We want the rotation to be the last operation performed.
• Easily fixed:
  – Read out the current GL_MODELVIEW matrix
  – Load the identity matrix
  – Rotate
  – Multiply by the saved GL_MODELVIEW matrix

Virtual Reality
Roger Crawfis

Virtual reality technology
• many definitions of virtual reality (VR), for example:
  – "the creation of the effect of immersion in a computer-generated three-dimensional environment in which objects have spatial presence" [Bryson & Feiner, 1994]
• "things as opposed to pictures of things"
• interaction, not content
• many variations: desktop VR, fish tank VR, augmented reality

Related terminology
• virtual environment
• virtual world
• artificial reality
• augmented reality
• telepresence
• Teleoperation
• Collaborative Spaces

Performance requirements
• wide-field stereoscopic display fills the user's field of view
• head-tracking supports the illusion that the user is looking around in an environment
• 3D computer graphics fills the environment with objects
• 3D interaction gives users the feeling that they are interacting with real objects
• overall frame rate must be > 10 frames/sec
• end-to-end delays must be < 0.1 sec for interactive control

The problem with VR is…
• that it is apparently simple
• NOT the unusual hardware
• many components must work together in real-time
• many criteria must be met
• unclear how to use the interface
• human factors issues not well understood

The evolution of VR
• 1960 Morton Heilig files patent to the US Patent Office: "Stereoscopic TV Apparatus for Individual Use"
  "My invention generally speaking comprises the following elements: a hollow casing, a pair of optical units, a pair of television tube units, a pair of earphones and a pair of air discharge nozzles, all coacting to cause the user to comfortably see the images, hear the sound effects and to be sensitive to the air discharge of the said nozzles."
• 1960-70 Sutherland's head-mounted display
• 1984 NASA Ames VIVED project
• 1986-90 NASA Ames VIEW lab and VPI
• 1990-onwards VR community fully formed and flourishing…

Degrees of immersion

| DISPLAY | INTERACTION | IMMERSION |
| --- | --- | --- |
| colour | keyboard | low |
| high resolution | 2D mouse | |
| stereo | 6D input device | |
| head tracking | 6D tracking + buttons | |
| wide field of view | 6D tracking + gloves | |
| head coupled | force tracking | high |

Virtual Environments
• Immersive
• Interactive
• User Centered

Typical configuration
[Figure: block diagram of a typical VR system. Recoverable labels: tracker, tracker electronics, source, main computer (computation), speech (synthesis, recognition), glove, HMD, electronics, graphics, microphone, sound, headphones.]

Displays
• primary technology underlying immersion
• many aspects: colour, resolution, field of view…
• display paradigms:
  – stereo via two displays
  – stereo via one display, images synchronised (eyewear)
  – CAVE: immersion via surrounding large screens
  – head tracking (fish tank VR)
  – head tracking, head-mounted

Virtual Environments
• Display Technologies
  – HMDs - Head Mounted Displays
  – Large theater - Imax, Omnimax
  – Stereo displays
  – HUDs - Head-Up Displays
    • windshields
    • goggles
  – CAVE - Surround video projections

Tracking paradigms
• usually a sensor determines position and orientation relative to source (calibration renders position of source irrelevant)
• sensor detects a signal from the source in such a way that the position and orientation can be determined
• either the source or the sensor can be fixed
• numerous technologies: electromagnetic, ultrasonic, mechanical, video, inertial

What to track?
• head position and orientation
• any significant body part
• any articulations

Other tracking technologies
• passive stereo vision systems
• marker systems (used in motion capture)
• structured light methods (light stripe)
• inertial tracking (using accelerometers)
• eye tracking (commonly optical - corneal reflection)

Virtual Environments
• Interactive user control devices
  – Mouse
  – 3D pointer - Polhemus, Microscribe, …
  – Spaceball
  – Hand-held wand
  – Data Glove
  – Gesture
  – Custom

Virtual Environments
• Interactive user navigation devices
  – Head tracker
  – Treadmill
  – Bicycle
  – Wheelchair
  – Boom
  – Video detection
• Anyone seen the new game at GameWorks?

Fakespace BOOM 3C
• Video Output: Full Color Stereo or Monoscopic.
• Resolution: Up to 1280 x 1024 pixels per eye.
• Optics: User interchangeable modules offer from 40 to 110 degrees horizontal FOV
• Tracking: Opto-mechanical
• Accuracy: 0.015" at 30"
• Latency: 200ns
• Sampling Frequency: >70Hz
• Range: 6' diameter horizontal circle (center 1 foot unavailable), 2.5' vertical.

Human factors of virtual reality
• Limits on motion frequencies:
  – head (5 Hz)
  – hand (10 Hz)
  – full body (5 Hz)
  – eye (100 Hz)

Human factors of virtual reality (continued)
• Limits on Vision (optical resolution):
  – angular size of the smallest object that can be resolved:
    • essentially the angular size of a colour pixel
    • measured as a linear size in minutes of arc
    • full moon is 30 minutes of arc across its diameter
    • human visual system can resolve 0.5 minutes of arc in the central visual field
    • 2-3 minutes of arc in the peripheral visual field
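To make the vision limit concrete, the angular size of a pixel can be estimated from a display's field of view and pixel count and compared with the 0.5 minutes of arc quoted above. The sketch below is a rough estimate only: it assumes pixels are spread uniformly across the horizontal field of view, which real optics only approximate, and the function names `arcmin_per_pixel` and `pixels_for_acuity` are hypothetical.

```c
#include <assert.h>

/* Approximate angular size of one pixel, in minutes of arc.
   Assumption: pixels are spread uniformly across the field of view. */
static double arcmin_per_pixel(double fov_degrees, int pixels_across) {
    assert(pixels_across > 0);
    return fov_degrees * 60.0 / pixels_across;   /* 60 arcmin per degree */
}

/* Number of pixels across the field of view needed so that one pixel
   subtends the given acuity (in minutes of arc), same assumption. */
static double pixels_for_acuity(double fov_degrees, double acuity_arcmin) {
    assert(acuity_arcmin > 0.0);
    return fov_degrees * 60.0 / acuity_arcmin;
}
```

With the BOOM 3C figures listed above (1280 pixels across, 40 to 110 degrees horizontal FOV), this estimate gives 1.875 minutes of arc per pixel at 40 degrees and about 5.16 at 110 degrees, both coarser than the 0.5 minutes of arc the central visual field can resolve. Matching 0.5 minutes of arc over 110 degrees would take about 13,200 pixels across under the same assumption.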
