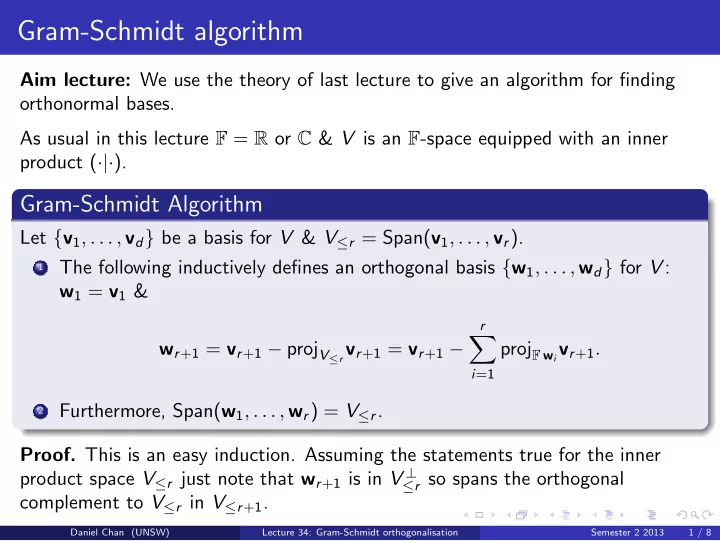

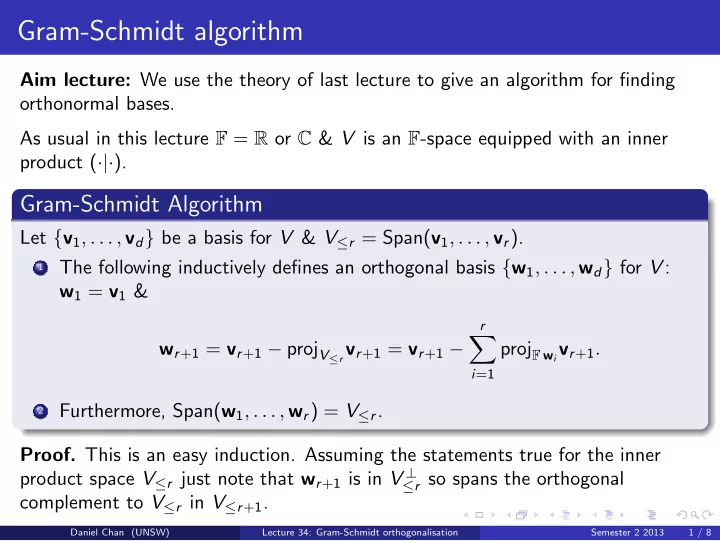

Gram-Schmidt algorithm

Aim of lecture: We use the theory of the last lecture to give an algorithm for finding orthonormal bases.

As usual in this lecture, $F = \mathbb{R}$ or $\mathbb{C}$ and $V$ is an $F$-space equipped with an inner product $(\cdot|\cdot)$.

Gram-Schmidt Algorithm

Let $\{v_1, \ldots, v_d\}$ be a basis for $V$ and $V_{\leq r} = \operatorname{Span}(v_1, \ldots, v_r)$.

1. The following inductively defines an orthogonal basis $\{w_1, \ldots, w_d\}$ for $V$: $w_1 = v_1$ and
$$w_{r+1} = v_{r+1} - \operatorname{proj}_{V_{\leq r}} v_{r+1} = v_{r+1} - \sum_{i=1}^{r} \operatorname{proj}_{F w_i} v_{r+1}.$$
2. Furthermore, $\operatorname{Span}(w_1, \ldots, w_r) = V_{\leq r}$.

Proof. This is an easy induction. Assuming the statements true for the inner product space $V_{\leq r}$, just note that $w_{r+1}$ lies in $V_{\leq r}^{\perp}$ and is non-zero because $v_{r+1} \notin V_{\leq r}$, so it spans the orthogonal complement to $V_{\leq r}$ in $V_{\leq r+1}$.

Daniel Chan (UNSW), Lecture 34: Gram-Schmidt orthogonalisation, Semester 2 2013
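The inductive definition above can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions: it takes $V = \mathbb{R}^n$ with the standard dot product (the slides allow any inner product), uses exact rational arithmetic, and the names `inner`, `gram_schmidt` and the sample `basis` are hypothetical choices, not from the lecture.

```python
from fractions import Fraction


def inner(u, v):
    """Standard dot product on R^n (assumed inner product for this sketch)."""
    return sum(a * b for a, b in zip(u, v))


def gram_schmidt(vectors):
    """Return orthogonal w_1, ..., w_d with Span(w_1..w_r) = Span(v_1..v_r)."""
    ws = []
    for v in vectors:
        v = [Fraction(x) for x in v]
        w = v[:]
        # Subtract proj_{F w_i} v = ((w_i|v) / ||w_i||^2) w_i for each earlier w_i.
        for u in ws:
            coeff = inner(u, v) / inner(u, u)
            w = [wi - coeff * ui for wi, ui in zip(w, u)]
        if all(x == 0 for x in w):
            raise ValueError("input vectors are linearly dependent")
        ws.append(w)
    return ws


# Hypothetical sample basis of R^3, chosen only for illustration.
basis = [(1, 1, 0), (1, 0, 1), (0, 1, 1)]
ws = gram_schmidt(basis)
for w in ws:
    print([str(x) for x in w])
```

The vectors are left unnormalised so that everything stays rational; dividing each $w_i$ by $\|w_i\|$ afterwards would give an orthonormal basis.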

Polynomial example

E.g. Find an orthonormal basis for $V = \mathbb{R}[x]_{\leq 1}$ equipped with the inner product
$$(f|g) = \int_0^1 f(t)\,g(t)\,dt.$$
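The slide does not record the solution. One possible worked computation, assuming we start Gram-Schmidt from the basis $v_1 = 1$, $v_2 = x$ (this starting basis is an assumption, not stated on the slide) and integrate over $[0,1]$:

```latex
\begin{align*}
w_1 &= v_1 = 1, & \|w_1\|^2 &= \int_0^1 1\,dt = 1, \\
(w_1|v_2) &= \int_0^1 t\,dt = \tfrac{1}{2}, & w_2 &= x - \tfrac{(w_1|v_2)}{\|w_1\|^2}\,w_1 = x - \tfrac{1}{2}, \\
\|w_2\|^2 &= \int_0^1 \left(t - \tfrac{1}{2}\right)^2 dt = \tfrac{1}{12}.
\end{align*}
```

Normalising $w_1$ and $w_2$ would then give the orthonormal basis $\{1,\ \sqrt{3}\,(2x - 1)\}$.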

Example in $\mathbb{R}^4$

E.g. Find an orthogonal basis for the image of the following matrix
$$A = \begin{pmatrix} 1 & 1 & 2 \\ 0 & 2 & 0 \\ 0 & 1 & 0 \\ 0 & 2 & 1 \end{pmatrix}.$$
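A possible worked solution, offered as a sketch: it assumes the matrix is $4 \times 3$ with rows $(1,1,2)$, $(0,2,0)$, $(0,1,0)$, $(0,2,1)$, so that its columns are $v_1 = (1,0,0,0)^T$, $v_2 = (1,2,1,2)^T$, $v_3 = (2,0,0,1)^T$, and it uses the standard dot product on $\mathbb{R}^4$.

```latex
\begin{align*}
w_1 &= v_1 = (1,0,0,0)^T, \qquad \|w_1\|^2 = 1, \\
w_2 &= v_2 - \frac{(w_1|v_2)}{\|w_1\|^2}\,w_1 = v_2 - w_1 = (0,2,1,2)^T, \qquad \|w_2\|^2 = 9, \\
w_3 &= v_3 - \frac{(w_1|v_3)}{\|w_1\|^2}\,w_1 - \frac{(w_2|v_3)}{\|w_2\|^2}\,w_2
     = v_3 - 2\,w_1 - \tfrac{2}{9}\,w_2
     = \tfrac{1}{9}\,(0,-4,-2,5)^T.
\end{align*}
```

Since rescaling preserves orthogonality, $\{(1,0,0,0)^T,\ (0,2,1,2)^T,\ (0,-4,-2,5)^T\}$ would be an orthogonal basis for the image under this reading of the matrix.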


Projection formula revisited

Let $\{w_1, \ldots, w_d\} \subset V$ be an orthogonal set spanning $W \leq V$. Recall for any $v \in V$ we have
$$\operatorname{proj}_W v = \frac{(w_1|v)}{\|w_1\|^2}\,w_1 + \ldots + \frac{(w_d|v)}{\|w_d\|^2}\,w_d.$$

Prop

Consider the orthogonal co-ordinate system $Q = (w_1 \ldots w_d) : F^d \to W$. Let
$$P = \left( \frac{(w_1|\cdot)}{\|w_1\|^2}, \ldots, \frac{(w_d|\cdot)}{\|w_d\|^2} \right)^T : V \to F^d.$$

1. Then $\operatorname{proj}_W = Q \circ P$.
2. If $V = F^m$, so $Q \in M_{md}(F)$, then we may rewrite $P = D^{-1}Q^*$ where $D$ is the diagonal matrix $D = (\|w_1\|^2) \oplus \ldots \oplus (\|w_d\|^2)$. Hence $\operatorname{proj}_W = QD^{-1}Q^*$.

Proof. 1) is just a restatement of the projection formula of lecture 33 above. For 2), just note that the linear map $(w_i|\cdot)$ is given by left multiplication by $w_i^*$, so calculating $D^{-1}Q^*$ gives the result.

Example of a projection matrix

E.g. Let $W = \operatorname{Span}((2,1,2)^T, (1,2,-2)^T)$. Find the matrix representing $\operatorname{proj}_W : \mathbb{R}^3 \to \mathbb{R}^3$.

Rem Note the formula in prop 2) above generalises the formula $\operatorname{proj}_u = uu^T$ for $u \in \mathbb{R}^n$ a unit vector.
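The two spanning vectors here are already orthogonal, so the formula $\operatorname{proj}_W = QD^{-1}Q^*$ applies directly. The following is a small sketch of that computation in exact arithmetic; the helper names `dot` and `projection_matrix` are hypothetical, and the code assumes real vectors with the standard dot product.

```python
from fractions import Fraction


def dot(a, b):
    """Standard dot product on R^n."""
    return sum(x * y for x, y in zip(a, b))


def projection_matrix(ws):
    """Matrix Q D^{-1} Q^T for mutually orthogonal non-zero real vectors ws."""
    for i in range(len(ws)):
        for j in range(i + 1, len(ws)):
            assert dot(ws[i], ws[j]) == 0, "vectors must be orthogonal"
    m = len(ws[0])
    P = [[Fraction(0)] * m for _ in range(m)]
    # Q D^{-1} Q^T is the sum over k of w_k w_k^T / ||w_k||^2.
    for w in ws:
        norm_sq = dot(w, w)
        for i in range(m):
            for j in range(m):
                P[i][j] += Fraction(w[i] * w[j]) / norm_sq
    return P


P = projection_matrix([(2, 1, 2), (1, 2, -2)])
for row in P:
    print([str(x) for x in row])
```

Under these assumptions the result is $\tfrac{1}{9}\begin{pmatrix} 5 & 4 & 2 \\ 4 & 5 & -2 \\ 2 & -2 & 8 \end{pmatrix}$, which is symmetric and has trace $2 = \dim W$, as a projection onto a plane should.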

QR-factorisation

Let $A = (v_1 \ldots v_n) \in M_{mn}(\mathbb{C})$ be an $m \times n$ matrix with linearly independent columns $v_1, \ldots, v_n$. Let $w_1, \ldots, w_n$ be an orthogonal basis of $\operatorname{im} A$ such that $\operatorname{Span}(v_1, \ldots, v_i) = \operatorname{Span}(w_1, \ldots, w_i)$ for $i = 1, \ldots, n$, e.g. the one that Gram-Schmidt gives, or any derived from that by scaling the vectors.

Prop-Defn [QR-factorisation]

If $Q = (w_1 \ldots w_n)$ and $D = (\|w_1\|^2) \oplus \ldots \oplus (\|w_n\|^2)$, then $A = QR$ where $R = D^{-1}Q^*A$ is invertible and upper triangular. When $Q$ has orthonormal columns, we call this a QR-factorisation of $A$.

Proof. Our new projection formula shows $\operatorname{proj}_{\operatorname{im} A} = QD^{-1}Q^*$, so
$$A = (v_1 \ldots v_n) = (QD^{-1}Q^*v_1 \ \ldots \ QD^{-1}Q^*v_n) = QD^{-1}Q^*A$$
and it suffices to show that $R = D^{-1}Q^*A$ is upper triangular with all diagonal entries non-zero. If $(\beta_1, \ldots, \beta_n)^T$ is the $i$-th column of $R$, then comparing the $i$-th columns of $A$ and $QR$ gives $v_i = \beta_1 w_1 + \ldots + \beta_n w_n$. Our condition on spans ensures $0 = \beta_{i+1} = \beta_{i+2} = \ldots = \beta_n$ whilst $\beta_i \neq 0$.

Example

Rem An easy way to remember the formula is to note $Q^*Q = D$, so $Q^*A = Q^*QR = DR$.

E.g. QR-factorise the matrix
$$A = \begin{pmatrix} 1 & 0 \\ 1 & 1 \\ 1 & 1 \end{pmatrix}.$$
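A sketch of how this example might be computed, assuming the matrix is $3 \times 2$ with columns $v_1 = (1,1,1)^T$ and $v_2 = (0,1,1)^T$. To stay in exact arithmetic the code keeps the Gram-Schmidt vectors unnormalised, so it produces the $A = QR$ of the Prop-Defn with $R = D^{-1}Q^TA$ rather than the orthonormal version; the function names are hypothetical.

```python
from fractions import Fraction


def dot(a, b):
    """Standard dot product on R^n (real case assumed)."""
    return sum(x * y for x, y in zip(a, b))


def gram_schmidt(vs):
    """Orthogonalise linearly independent vectors without normalising."""
    ws = []
    for v in vs:
        w = list(v)
        for u in ws:
            c = dot(u, v) / dot(u, u)
            w = [wi - c * ui for wi, ui in zip(w, u)]
        ws.append(w)
    return ws


def qr_unnormalised(A):
    """Return (Q, R) with A = QR, Q having orthogonal columns, R = D^{-1} Q^T A."""
    m, n = len(A), len(A[0])
    cols = [[Fraction(A[i][j]) for i in range(m)] for j in range(n)]
    ws = gram_schmidt(cols)
    # Entry (i, j) of D^{-1} Q^T A is (w_i | v_j) / ||w_i||^2.
    R = [[dot(ws[i], cols[j]) / dot(ws[i], ws[i]) for j in range(n)]
         for i in range(n)]
    Q = [[ws[j][i] for j in range(n)] for i in range(m)]
    return Q, R


A = [[1, 0], [1, 1], [1, 1]]
Q, R = qr_unnormalised(A)
print("Q =", [[str(x) for x in row] for row in Q])
print("R =", [[str(x) for x in row] for row in R])
```

Here $D = (3) \oplus (\tfrac{2}{3})$. Rescaling to $QD^{-1/2}$ and $D^{1/2}R$ would give a factorisation with orthonormal columns, namely columns $\tfrac{1}{\sqrt{3}}(1,1,1)^T$ and $\tfrac{1}{\sqrt{6}}(-2,1,1)^T$ with upper triangular factor $\begin{pmatrix} \sqrt{3} & 2/\sqrt{3} \\ 0 & \sqrt{2/3} \end{pmatrix}$.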
