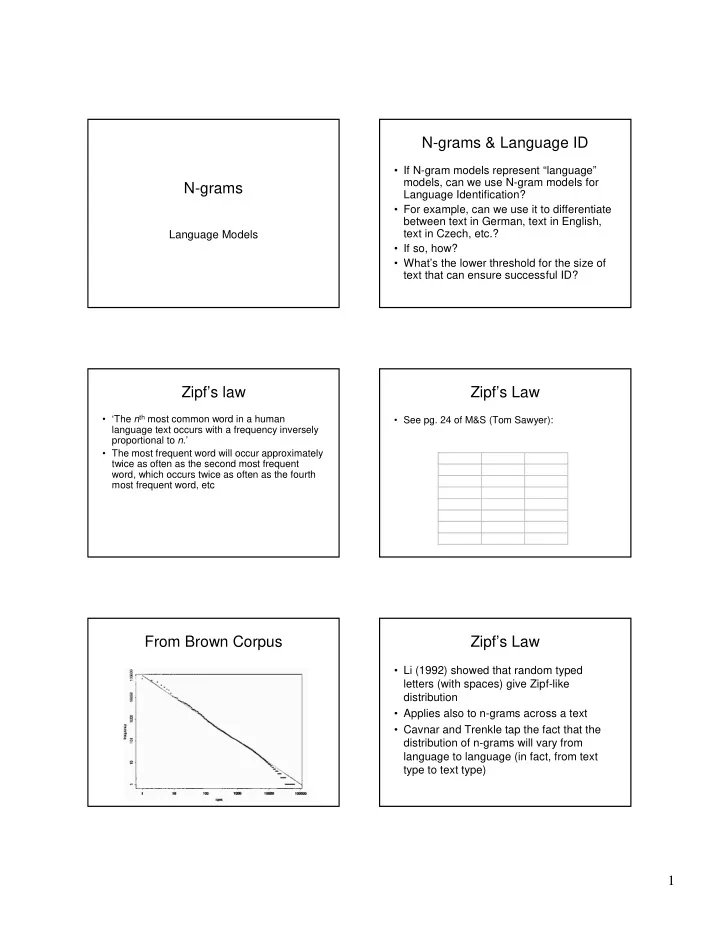

N-grams & Language ID
• If N-gram models represent "language" models, can we use N-gram models for Language Identification?
• For example, can we use them to differentiate between text in German, text in English, text in Czech, etc.?
• If so, how?
• What is the lower threshold for the size of text that can ensure successful identification?

Zipf's Law
• "The nth most common word in a human language text occurs with a frequency inversely proportional to n."
• The most frequent word will occur approximately twice as often as the second most frequent word, which occurs twice as often as the fourth most frequent word, etc.
• See pg. 24 of M&S (Tom Sawyer):

| Word  | Freq | Rank |
|-------|-----:|-----:|
| the   | 3332 | 1    |
| and   | 2972 | 2    |
| a     | 1775 | 3    |
| he    | 877  | 10   |
| but   | 410  | 20   |
| be    | 294  | 30   |
| there | 222  | 40   |

(Figure from the Brown Corpus.)

• Li (1992) showed that randomly typed letters (with spaces) give a Zipf-like distribution.
• The law also applies to n-grams across a text.
• Cavnar and Trenkle exploit the fact that the distribution of n-grams varies from language to language (and, in fact, from text type to text type).
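A quick worked check of the Zipf claim: if frequency is inversely proportional to rank, then frequency × rank should stay roughly constant. This short Python sketch multiplies the two columns of the Tom Sawyer table from M&S; the helper name `zipf_products` is ours, not from the source.

```python
# (word, frequency, rank) rows from the Tom Sawyer table (M&S pg. 24).
TOM_SAWYER = [
    ("the", 3332, 1),
    ("and", 2972, 2),
    ("a", 1775, 3),
    ("he", 877, 10),
    ("but", 410, 20),
    ("be", 294, 30),
    ("there", 222, 40),
]

def zipf_products(table):
    """Return (word, f * r) pairs; Zipf's law predicts f * r is roughly constant."""
    return [(word, freq * rank) for word, freq, rank in table]

for word, product in zipf_products(TOM_SAWYER):
    print(f"{word:>6} {product}")
```

For these rows the products run from 3332 (the) to 8880 (there): fairly stable from rank 10 onward (8200 to 8880), but noticeably smaller for the top three ranks, so the law is only an approximation at the head of the list.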

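Li's random-typing result can be illustrated without sampling, under a simplified model that is an assumption here: each keystroke is a space with probability `p_space`, otherwise one of `alphabet_size` letters chosen uniformly. Every word of length L then has the same probability, and longer words are always rarer, so listing words by length already lists them in rank order. The sketch below computes those exact probabilities and estimates the slope of log frequency against log rank; the function names and parameter values are hypothetical.

```python
import math

def random_typing_probs(alphabet_size, p_space, max_len):
    """Probability of every distinct word up to max_len letters, in rank order.

    Hypothetical model: a specific word of length L has probability
    ((1 - p_space) / alphabet_size) ** L * p_space.
    """
    probs = []
    for length in range(1, max_len + 1):
        p_word = ((1 - p_space) / alphabet_size) ** length * p_space
        # All alphabet_size ** length words of this length tie in probability.
        probs.extend([p_word] * alphabet_size ** length)
    return probs

def zipf_exponent(probs, rank_a, rank_b):
    """Slope of -log(probability) against log(rank) between two 1-based ranks."""
    pa, pb = probs[rank_a - 1], probs[rank_b - 1]
    return -(math.log(pb) - math.log(pa)) / (math.log(rank_b) - math.log(rank_a))

probs = random_typing_probs(alphabet_size=26, p_space=0.2, max_len=3)
# Compare the lowest-ranked 2-letter word with the lowest-ranked 3-letter word.
alpha = zipf_exponent(probs, 26 + 26**2, 26 + 26**2 + 26**3)
print(round(alpha, 3))
```

The estimated exponent comes out slightly above 1, close to the 1/n relationship; in this model it approaches 1 + ln(1/(1 - p_space)) / ln(alphabet_size). The staircase of tied probabilities is why the fit is only Zipf-like rather than exact.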
Cavnar & Trenkle Methodology
• Split the text into separate tokens consisting only of letters and apostrophes. Digits and punctuation are discarded. Pad each token with sufficient blanks before and after.
• Generate all possible N-grams, for N = 1 to 5. Use positions that span the padding blanks, as well.
• Hash into a table to find the counter for the N-gram, and increment it.
• When done, output all N-grams and their counts.
• Sort those counts into reverse order by the number of occurrences.

(Figure: the hash table.)

• N-gram profiles are created from a body of text for each target language (or document type, etc.).
• A profile is created for the text to be evaluated.
• This profile is compared against the stored profiles using a simple rank-order statistic (Cavnar & Trenkle's "out-of-place" measure: how far each N-gram's rank in the text's profile is from its rank in a stored profile, summed over all N-grams).
• The closest profile is the one with the smallest distance measure.

Cavnar & Trenkle
• Download an implementation from: http://software.wise-guys.nl/libtextcat/

Damashek Methodology
• Damashek 1995: "Gauging Similarity with n-Grams: Language-Independent Categorization of Text"
• Taps into a similar Zipfian notion, but uses a Vector Space Model instead.
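The Cavnar & Trenkle steps above can be sketched in Python as follows. This is not libtextcat's code. The padding scheme (one blank, written `_`, before each token and N-1 after), the 300-entry profile cut-off, the alphabetical tie-breaking, and the penalty charged for an N-gram missing from a stored profile are all assumptions made for illustration, as are the tiny training texts.

```python
import re
from collections import Counter

# Runs of letters (any script) and apostrophes; digits and punctuation are dropped.
TOKEN_RE = re.compile(r"(?:[^\W\d_]|')+")

def ngram_profile(text, max_n=5, top_k=300):
    """Return the top_k N-grams (N = 1..max_n), most frequent first."""
    counts = Counter()  # a hash table from N-gram to its counter
    for token in TOKEN_RE.findall(text):
        for n in range(1, max_n + 1):
            # Assumed padding: one blank ("_") before the token, n - 1 after,
            # so that windows also span the padding blanks.
            padded = "_" + token + "_" * (n - 1)
            for i in range(len(padded) - n + 1):
                counts[padded[i:i + n]] += 1
    # Reverse order by number of occurrences (ties broken alphabetically).
    ranked = sorted(counts, key=lambda gram: (-counts[gram], gram))
    return ranked[:top_k]

def out_of_place(doc_profile, category_profile):
    """Sum over the document's N-grams of |rank in doc - rank in category|."""
    category_rank = {gram: r for r, gram in enumerate(category_profile)}
    max_penalty = len(category_profile)  # assumed cost of a missing N-gram
    return sum(
        abs(r - category_rank[gram]) if gram in category_rank else max_penalty
        for r, gram in enumerate(doc_profile)
    )

def identify(text, category_profiles):
    """Name of the stored profile with the smallest distance to the text."""
    doc_profile = ngram_profile(text)
    return min(category_profiles,
               key=lambda name: out_of_place(doc_profile, category_profiles[name]))

# Tiny, made-up training texts; real profiles need far more text.
training = {
    "english": "the quick brown fox jumps over the lazy dog and the cat",
    "german": "der schnelle braune fuchs springt über den faulen hund und die katze",
}
profiles = {name: ngram_profile(text) for name, text in training.items()}
print(identify("the dog and the fox", profiles))
```

Python's `Counter` is itself a hash table, so it plays the role of the "hash into a table" step; only the ranks, not the raw counts, enter the comparison.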

Vector Space Models
• Often used in IR and search tasks (we'll be covering these more this term).
• Essentially: represent some source data (text document, Web page, e-mail) by some vector representation
  – Vectors composed of counts/frequencies of particular words (esp. certain content words) or other objects of interest
  – A 'search' vector is compared against 'target' vectors
  – The most closely related vectors cluster together
• Distance is measured by the cosine measure, which, if the vectors are normalized, is their dot product:

$$\mathrm{sim}(\vec{q}_k, \vec{d}_j) = \vec{q}_k \cdot \vec{d}_j = \sum_{i=1}^{N} w_{i,k} \times w_{i,j} = \cos\theta_{qd}$$

• cos = 1 means the vectors point in the same direction (identical once normalized); 0 means they have nothing in common (orthogonal).
• Perl has built-in methods for working with vectors.

Damashek's Methodology
• Step an n-gram window through the document, one character at a time.
• Convert each n-gram into an indexing key (for eventual hashing).
• Concatenate all such keys into a list and note its length.
• Order the list by key value (sort).
• Count and store the number of occurrences of each distinct key.
• Divide the number of occurrences of each distinct key by the length of the original list (normalization).

Damashek
• Vectors built from documents are compared against known vectors.
• The highest cosine measure means the closest language (or document).
• Damashek's method works better with longer documents.
• See the following URL for a demo: http://epsilon3.georgetown.edu/~ballc/languageid/index.html
• For his original Science paper, see JSTOR: http://www.jstor.org/view/00368075/di002302/00p0139l/0?currentResult=00368075%2bdi002302%2b00p0139l%2b0%2c03&searchUrl=http%3A%2F%2Fwww.jstor.org%2Fsearch%2FBasicResults%3Fhp%3D25%26si%3D1%26Query%3Ddamashek
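A small Python sketch of the cosine measure on word-count vectors, stored sparsely as `{word: count}` dicts. Using raw counts as the weights w, and the two example sentences, are assumptions for illustration. It also shows the point made in the formula: once both vectors are normalized to unit length, the cosine is just their dot product.

```python
import math
from collections import Counter

def dot(u, v):
    """Sum of u_i * v_i over sparse vectors (missing features count as 0)."""
    return sum(w * v.get(f, 0) for f, w in u.items())

def norm(v):
    """Euclidean length of a sparse vector."""
    return math.sqrt(dot(v, v))

def cosine(u, v):
    """cos(theta) between two sparse vectors; 0.0 if either is empty."""
    nu, nv = norm(u), norm(v)
    return dot(u, v) / (nu * nv) if nu and nv else 0.0

def normalize(v):
    """Scale a sparse vector to unit length (left unchanged if all zero)."""
    n = norm(v)
    return {f: w / n for f, w in v.items()} if n else dict(v)

query = Counter("the cat sat on the mat".split())
doc = Counter("the cat lay on the rug".split())
print(cosine(query, doc))                     # about 0.75
print(dot(normalize(query), normalize(doc)))  # same value, up to rounding
```

Here the shared words "the", "cat" and "on" give a dot product of 6, and each vector has length √8, so the cosine is 6/8.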

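Damashek's steps can be sketched in Python roughly as follows, with the highest cosine picking the closest reference. The window length n = 5, the CRC-32-modulo-table-size indexing key, the function names, and the two reference sentences are illustrative assumptions, not details taken from Damashek's paper.

```python
import math
import zlib
from itertools import groupby

def damashek_vector(text, n=5, table_size=2**20):
    """Normalized n-gram frequency vector as a sparse {key: frequency} dict.

    n = 5 and the CRC-32-mod-table_size key are illustrative assumptions.
    """
    # Step an n-character window through the text one character at a time,
    # converting each n-gram into an integer indexing key.
    keys = [zlib.crc32(text[i:i + n].encode("utf-8")) % table_size
            for i in range(len(text) - n + 1)]
    length = len(keys)  # note the length of the key list
    if length == 0:
        return {}
    keys.sort()  # order the list by key value
    # Equal keys are now adjacent: count each run, then divide by the length.
    return {key: len(list(run)) / length for key, run in groupby(keys)}

def cosine(u, v):
    """Cosine of the angle between two sparse vectors."""
    dot = sum(w * v.get(k, 0.0) for k, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def closest(text, references):
    """Reference name whose vector has the highest cosine with the text's vector."""
    vector = damashek_vector(text)
    return max(references, key=lambda name: cosine(vector, references[name]))

english_text = "the quick brown fox jumps over the lazy dog"
german_text = "der schnelle braune fuchs springt über den faulen hund"
references = {
    "english": damashek_vector(english_text),
    "german": damashek_vector(german_text),
}
print(closest("the lazy dog sleeps", references))
```

Because dividing by the list length makes each vector's entries sum to 1 rather than giving it unit length, the sketch divides by both vector lengths instead of relying on a bare dot product.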