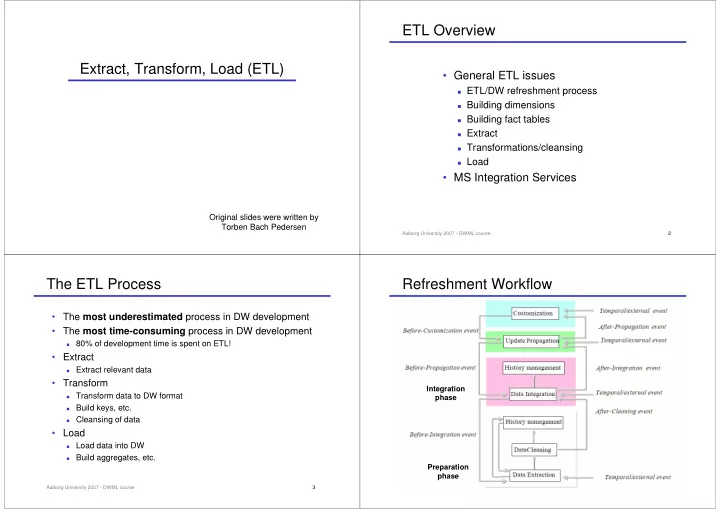

ETL Overview Extract, Transform, Load (ETL) • General ETL issues � ETL/DW refreshment process � Building dimensions � Building fact tables � Extract � Transformations/cleansing � Load • MS Integration Services Original slides were written by Torben Bach Pedersen Aalborg University 2007 - DWML course 2 The ETL Process Refreshment Workflow • The most underestimated process in DW development • The most time-consuming process in DW development � 80% of development time is spent on ETL! • Extract � Extract relevant data • Transform Integration � Transform data to DW format phase � Build keys, etc. � Cleansing of data • Load � Load data into DW � Build aggregates, etc. Preparation phase Aalborg University 2007 - DWML course 3 Aalborg University 2007 - DWML course 4

ETL In The Architecture Data Staging Area (DSA) • Transit storage for data in the ETL process � Transformations/cleansing done here ETL side Query side • No user queries Metadata Data • Sequential operations on large data volumes sources Query Reporting Tools Services � Performed by central ETL logic Presentation servers Desktop Data -Warehouse Browsing Access Tools - Extract - Access and Security � No need for locking, logging, etc. Data marts with - Query Management - Transform aggregate-only data - Standard Reporting � RDBMS or flat files? (DBMS have become better at this) Data mining - Load - Activity Monitor Conformed Data Data Staging • Finished dimensions copied from DSA to relevant marts Warehouse dimensions Area Operational Bus and facts system • Allows centralized backup/recovery Data marts with atomic data � Often too time consuming to initial load all data marts by failure � Backup/recovery facilities needed Service � Better to do this centrally in DSA than in all data marts Data Element Aalborg University 2007 - DWML course 5 Aalborg University 2007 - DWML course 6 ETL Construction Process Building Dimensions � Plan • Static dimension table Make high-level diagram of source-destination flow � DW key assignment: production keys to DW keys using table 1) Test, choose and implement ETL tool � Combination of data sources: find common key? 2) Outline complex transformations, key generation and job � Check one-one and one-many relationships using sorting 3) sequence for every destination table • Handling dimension changes � Construction of dimensions � Described in last lecture Construct and test building static dimension � Find the newest DW key for a given production key 4) Construct and test change mechanisms for one dimension � Table for mapping production keys to DW keys must be updated 5) Construct and test remaining dimension builds 6) • Load of dimensions � Construction of fact tables and automation � Small dimensions: replace Construct and test initial fact table build � Large dimensions: load only changes 7) Construct and test incremental update 8) Construct and test aggregate build ( you do this later) 9) 10) Design, construct, and test ETL automation Aalborg University 2007 - DWML course 7 Aalborg University 2007 - DWML course 8

Building Fact Tables Types of Data Sources • Two types of load • Initial load • Non-cooperative sources � ETL for all data up till now � Snapshot sources – provides only full copy of source, e.g., files � Done when DW is started the first time � Specific sources – each is different, e.g., legacy systems � Very heavy - large data volumes � Logged sources – writes change log, e.g., DB log • Incremental update � Queryable sources – provides query interface, e.g., RDBMS � Move only changes since last load • Cooperative sources � Done periodically (e.g., month or week) after DW start � Replicated sources – publish/subscribe mechanism � Less heavy - smaller data volumes � Call back sources – calls external code (ETL) when changes occur • Dimensions must be updated before facts � Internal action sources – only internal actions when changes occur � The relevant dimension rows for new facts must be in place ◆ DB triggers is an example � Special key considerations if initial load must be performed again • Extract strategy depends on the source types Aalborg University 2007 - DWML course 9 Aalborg University 2007 - DWML course 10 Extract Computing Deltas • Delta = changes since last load • Goal: fast extract of relevant data • Store sorted total extracts in DSA � Extract from source systems can take long time � Delta can easily be computed from current+last extract • Types of extracts: � + Always possible � + Handles deletions � Extract applications (SQL): co-existence with other applications � - High extraction time � DB unload tools: faster than SQL-based extracts • Put update timestamp on all rows (in sources) • Extract applications the only solution in some scenarios � Updated by DB trigger • Too time consuming to ETL all data at each load � Extract only where “timestamp > time for last extract” � Extraction can take days/weeks � + Reduces extract time � Drain on the operational systems � - Cannot (alone) handle deletions � Drain on DW systems � - Source system must be changed, operational overhead � => Extract/ETL only changes since last load (delta) Aalborg University 2007 - DWML course 11 Aalborg University 2007 - DWML course 12

Changed Data Capture Common Transformations • Messages � Applications insert messages in a “queue” at updates � + Works for all types of updates and systems • Data type conversions � - Operational applications must be changed+operational overhead � EBCDIC � ASCII/UniCode • DB triggers � String manipulations � Triggers execute actions on INSERT/UPDATE/DELETE � Date/time format conversions � + Operational applications need not be changed • Normalization/denormalization � + Enables real-time update of DW � To the desired DW format � - Operational overhead � Depending on source format • Replication based on DB log • Building keys � Find changes directly in DB log which is written anyway � Table matches production keys to surrogate DW keys � + Operational applications need not be changed � Correct handling of history - especially for total reload � + No operational overhead � - Not possible in some DBMS Aalborg University 2007 - DWML course 13 Aalborg University 2007 - DWML course 14 Data Quality Cleansing • Data almost never has decent quality • BI does not work on “raw” data • Data in DW must be: � Pre-processing necessary for BI analysis � Precise • Handle inconsistent data formats ◆ DW data must match known numbers - or explanation needed � Spellings, codings, … � Complete • Remove unnecessary attributes ◆ DW has all relevant data and the users know � Production keys, comments,… � Consistent • Replace codes with text ( Why?) ◆ No contradictory data: aggregates fit with detail data � Unique � City name instead of ZIP code,… ◆ The same things is called the same and has the same key • Combine data from multiple sources with common key (customers) � E.g., customer data from customer address, customer name, … � Timely ◆ Data is updated ”frequently enough” and the users know when Aalborg University 2007 - DWML course 15 Aalborg University 2007 - DWML course 16

Types Of Cleansing Cleansing • Conversion and normalization � Text coding, date formats, etc. • Mark facts with Data Status dimension � Most common type of cleansing � Normal, abnormal, outside bounds, impossible,… • Special-purpose cleansing � Facts can be taken in/out of analyses � Normalize spellings of names, addresses, etc. • Uniform treatment of NULL � Remove duplicates, e.g., duplicate customers � Use explicit NULL value rather than “special” value (0,-1,…) • Domain-independent cleansing � Use NULLs only for measure values (estimates instead?) � Approximate, “fuzzy” joins on records from different sources � Use special dimension keys for NULL dimension values • Rule-based cleansing ◆ Avoid problems in joins, since NULL is not equal to NULL � User-specifed rules, if-then style • Mark facts with changed status � Automatic rules: use data mining to find patterns in data � New customer, Customer about to cancel contract, …… ◆ Guess missing sales person based on customer and item Aalborg University 2007 - DWML course 17 Aalborg University 2007 - DWML course 18 Improving Data Quality Load • Goal: fast loading into DW • Appoint “data quality administrator” � Loading deltas is much faster than total load � Responsibility for data quality • SQL-based update is slow � Includes manual inspections and corrections! � Large overhead (optimization, locking, etc.) for every SQL call • Source-controlled improvements � DB load tools are much faster � The optimal? • Index on tables slows load a lot • Construct programs that check data quality � Drop index and rebuild after load � Are totals as expected? � Can be done per index partition � Do results agree with alternative source? � Number of NULL values? • Parallellization • Do not fix all problems with data quality � Dimensions can be loaded concurrently � Allow management to see “weird” data in their reports? � Fact tables can be loaded concurrently � Such data may be meaningful for them? (e.g., fraud detection) � Partitions can be loaded concurrently Aalborg University 2007 - DWML course 19 Aalborg University 2007 - DWML course 20

Recommend

More recommend