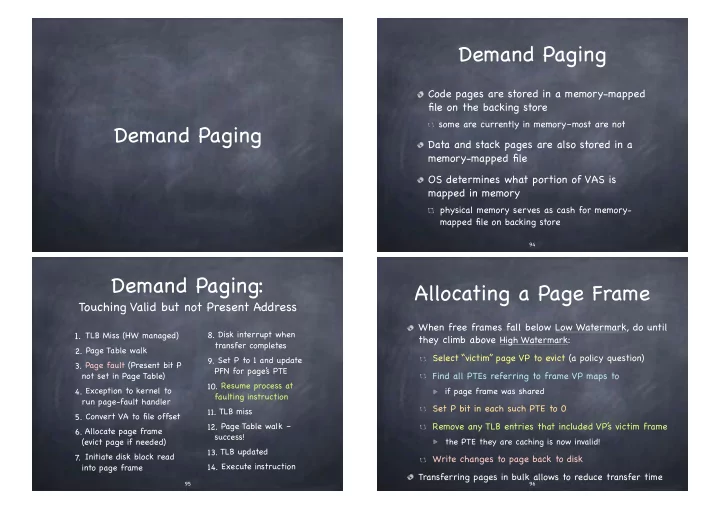

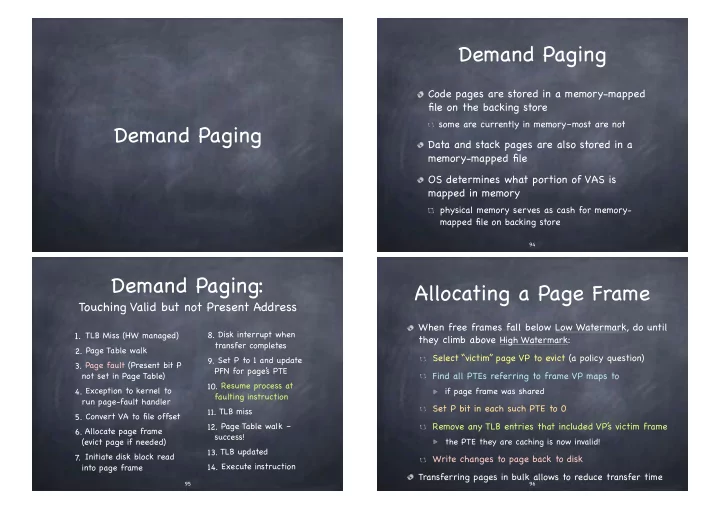

Demand Paging

- Code pages are stored in a memory-mapped file on the backing store; some are currently in memory, but most are not.
- Data and stack pages are also stored in a memory-mapped file.
- The OS determines what portion of the VAS is mapped in memory.
- Physical memory serves as a cache for the memory-mapped file on the backing store.

Demand Paging: Allocating a Page Frame

When free frames fall below the Low Watermark, repeat until they climb above the High Watermark:

- Select a "victim" page VP to evict (a policy question).
- Find all PTEs referring to the frame VP maps to (there may be several if the page frame was shared).
- Set the P bit in each such PTE to 0.
- Remove any TLB entries that included VP's victim frame: the PTE they are caching is now invalid!
- Write changes to the page back to disk.

Transferring pages in bulk reduces transfer time.

Demand Paging: Touching a Valid but not Present Address

1. TLB miss (HW managed)
2. Page table walk
3. Page fault (Present bit P not set in the page table)
4. Exception to kernel to run the page-fault handler
5. Convert VA to file offset
6. Allocate page frame (evict a page if needed)
7. Initiate disk block read into the page frame
8. Disk interrupt when the transfer completes
9. Set P to 1 and update the PFN in the page's PTE
10. Resume the process at the faulting instruction
11. TLB miss
12. Page table walk: success!
13. TLB updated
14. Execute instruction
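The watermark-driven reclaim loop can be sketched as a toy Python model. This is a minimal illustration under stated assumptions, not kernel code: the `PTE` and `FrameTable` names are hypothetical, the replacement policy is a caller-supplied `choose_victim` function, the TLB is modeled as a dict from virtual page number to frame number, and only dirty pages are assumed to need write-back.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class PTE:
    vpn: int                 # virtual page number this entry translates
    pfn: int                 # physical frame number
    present: bool = True     # the P bit
    dirty: bool = False      # assumption: only dirty pages need write-back


@dataclass
class FrameTable:
    low_watermark: int
    high_watermark: int
    free_frames: list = field(default_factory=list)
    mappings: dict = field(default_factory=dict)      # pfn -> PTEs mapping it (several if shared)
    tlb: dict = field(default_factory=dict)           # vpn -> pfn (cached translations)
    written_back: list = field(default_factory=list)  # frames whose contents went to disk

    def reclaim(self, choose_victim: Callable[[dict], int]) -> None:
        """Once free frames fall below the low watermark, evict pages
        until the free count climbs above the high watermark."""
        if len(self.free_frames) >= self.low_watermark:
            return
        while len(self.free_frames) <= self.high_watermark and self.mappings:
            pfn = choose_victim(self.mappings)        # the policy question
            ptes = self.mappings.pop(pfn)
            for pte in ptes:                          # every PTE that maps the frame
                pte.present = False
            # Drop TLB entries caching a translation that is now invalid.
            self.tlb = {vpn: f for vpn, f in self.tlb.items() if f != pfn}
            if any(pte.dirty for pte in ptes):
                self.written_back.append(pfn)         # write changes back to disk
            self.free_frames.append(pfn)
```

For example, with frame 0 shared by two PTEs and `min` as a placeholder policy (evict the lowest-numbered frame), reclaim frees frames 0, 1 and 2, writes back only frame 0, and purges both TLB entries that pointed at frame 0.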

Page Replacement

How do we pick a victim? We want:

- a low fault rate for pages
- page faults that are as inexpensive as possible

We need:

- a way to compare the relative performance of different page replacement algorithms
- some absolute notion of what a "good" page replacement algorithm should accomplish

Local vs. Global Replacement

- Local: the victim is chosen from the frames of the process experiencing the page fault (fixed allocation per process).
- Global: the victim is chosen from the frames allocated to any process (variable allocation per process).

There are many replacement policies: Random, FIFO, LRU, Clock, Working set, etc. Goal: minimize the number of page faults.

Comparing Page Replacement Algorithms

- Record a trace of the pages accessed by a process, e.g. 3,1,4,2,5,2,1,2,3,4 (or c,a,d,b,e,b,a,b,c,d).
- Simulate the behavior of the page replacement algorithm on the trace.
- Record the number of page faults generated.

Optimal Page Replacement

Replace the page needed furthest in the future.

| Time | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Requests | | c | a | d | b | e | b | a | b | c | d |
| Frame 0 | a | a | a | a | a | a | a | a | a | a | d |
| Frame 1 | b | b | b | b | b | b | b | b | b | b | b |
| Frame 2 | c | c | c | c | c | c | c | c | c | c | c |
| Frame 3 | d | d | d | d | d | e | e | e | e | e | e |
| Faults | | | | | | X | | | | | X |

Time each page is needed next: at time 5, a = 7, b = 6, c = 9, d = 10, so d is replaced; at time 10, a = ∞, b = 11, c = 13, e = 15, so a is replaced.
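To make the trace-driven comparison concrete, here is a minimal Python simulator for optimal replacement. It is a sketch under assumptions: `simulate_opt` is a hypothetical name, each list slot is one page frame, `None` marks an empty frame, and fault times are 1-based to match the table. Because the recorded trace ends at time 10, every resident page ties at "never used again" there; the sketch breaks ties by lowest frame number, so it still evicts a, as the table does.

```python
def simulate_opt(frames, requests):
    """Optimal replacement: on a fault, evict the resident page whose
    next use lies furthest in the future (never used again = infinity)."""
    frames = list(frames)          # copy; frame i holds frames[i]
    faults = []                    # times (1-based) at which a fault occurs
    for t, page in enumerate(requests, start=1):
        if page in frames:
            continue               # hit
        faults.append(t)
        if None in frames:         # a free frame is available
            frames[frames.index(None)] = page
            continue
        future = requests[t:]      # requests after time t
        # max() returns the first maximal element, i.e. the lowest frame index on ties.
        victim = max(frames, key=lambda p: future.index(p) if p in future else float("inf"))
        frames[frames.index(victim)] = page
    return frames, faults


if __name__ == "__main__":
    print(simulate_opt(["a", "b", "c", "d"], list("cadbebabcd")))
    # (['d', 'b', 'c', 'e'], [5, 10])
```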

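More Frames, Fewer Page Faults

[Figure: number of page faults vs. number of frames]

FIFO Replacement

Replace pages in the order they came into memory. Assume the pages were loaded at: a @ −3, b @ −2, c @ −1, d @ 0.

| Time | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Requests | | c | a | d | b | e | b | a | b | c | d |
| Frame 0 | a | a | a | a | a | e | e | e | e | e | d |
| Frame 1 | b | b | b | b | b | b | b | a | a | a | a |
| Frame 2 | c | c | c | c | c | c | c | c | b | b | b |
| Frame 3 | d | d | d | d | d | d | d | d | d | c | c |
| Faults | | | | | | X | | X | X | X | X |

Belady's Anomaly

For example, run FIFO on the trace a b c d a b e a b c d e, starting with empty frames.

3 frames:

| Time | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Requests | a | b | c | d | a | b | e | a | b | c | d | e |
| Frame 0 | a | a | a | d | d | d | e | e | e | e | e | e |
| Frame 1 | | b | b | b | a | a | a | a | a | c | c | c |
| Frame 2 | | | c | c | c | b | b | b | b | b | d | d |
| Faults | X | X | X | X | X | X | X | | | X | X | |

3 frames: 9 page faults!

4 frames:

| Time | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Requests | a | b | c | d | a | b | e | a | b | c | d | e |
| Frame 0 | a | a | a | a | a | a | e | e | e | e | d | d |
| Frame 1 | | b | b | b | b | b | b | a | a | a | a | e |
| Frame 2 | | | c | c | c | c | c | c | b | b | b | b |
| Frame 3 | | | | d | d | d | d | d | d | c | c | c |
| Faults | X | X | X | X | | | X | X | X | X | X | X |

4 frames: 10 page faults!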
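Both FIFO traces above can be reproduced with a short Python simulator. This is a sketch, not a reference implementation: `simulate_fifo` is a hypothetical name, `None` marks an empty frame, and, unless a load order is passed, pages already resident are assumed to have been loaded in frame order (which matches a @ −3, b @ −2, c @ −1, d @ 0).

```python
from collections import deque


def simulate_fifo(frames, requests, load_order=None):
    """FIFO replacement: evict the page that has been in memory the longest."""
    frames = list(frames)
    # Queue of resident pages, oldest first (assumption: frame order if not given).
    queue = deque(load_order if load_order is not None
                  else [p for p in frames if p is not None])
    faults = []
    for t, page in enumerate(requests, start=1):
        if page in frames:
            continue                               # hit: FIFO ignores references
        faults.append(t)
        if None in frames:
            slot = frames.index(None)              # use a free frame first
        else:
            slot = frames.index(queue.popleft())   # oldest page is the victim
        frames[slot] = page
        queue.append(page)
    return frames, faults


if __name__ == "__main__":
    print(simulate_fifo(["a", "b", "c", "d"], list("cadbebabcd")))
    # (['d', 'a', 'b', 'c'], [5, 7, 8, 9, 10])
    trace = list("abcdabeabcde")
    print(len(simulate_fifo([None] * 3, trace)[1]),
          len(simulate_fifo([None] * 4, trace)[1]))
    # 9 10  (Belady's anomaly: more frames, more faults)
```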

More Frames, Fewer Page Faults?

[Figure: number of page faults vs. number of frames]

Yes, but only for stack page replacement policies: the set of pages in memory with n frames is always a subset of the set of pages in memory with n+1 frames.

Locality of Reference

If a process accesses a memory location, then it is likely that:

- the same memory location is going to be accessed again in the near future (temporal locality)
- nearby memory locations are going to be accessed in the near future (spatial locality)

90% of the execution of a program is sequential. Most iterative constructs consist of a relatively small number of instructions.

LRU: Least Recently Used

Replace the page not referenced for the longest time.

| Time | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Requests | | c | a | d | b | e | b | a | b | c | d |
| Frame 0 | a | a | a | a | a | a | a | a | a | a | a |
| Frame 1 | b | b | b | b | b | b | b | b | b | b | b |
| Frame 2 | c | c | c | c | c | e | e | e | e | e | d |
| Frame 3 | d | d | d | d | d | d | d | d | d | c | c |
| Faults | | | | | | X | | | | X | X |

Time each page was last used: at time 5, a = 2, b = 4, c = 1, d = 3 (replace c); at time 9, a = 7, b = 8, e = 5, d = 3 (replace d); at time 10, a = 7, b = 8, e = 5, c = 9 (replace e).

Implementing LRU

Maintain a "stack" of recently used pages, most recently used on top:

| Time | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Requests | c | a | d | b | e | b | a | b | c | d |
| Stack top | c | a | d | b | e | b | a | b | c | d |
| | | c | a | d | b | e | b | a | b | c |
| | | | c | a | d | d | e | e | a | b |
| Stack bottom | | | | c | a | a | d | d | e | a |
| Page to replace | | | | | c | | | | d | e |
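The LRU "stack" can be simulated directly with a Python list kept in recency order. This is a minimal sketch: `simulate_lru_stack` is a hypothetical name, `None` marks an empty frame, and the initial recency order of already-resident pages is assumed to follow frame order (irrelevant for the trace above, since every resident page is referenced before the first fault).

```python
def simulate_lru_stack(frames, requests):
    """LRU via a recency stack: stack[0] is most recently used,
    stack[-1] is least recently used and is the victim on a fault."""
    frames = list(frames)
    stack = [p for p in frames if p is not None]   # assumption: initial order = frame order
    faults, victims = [], []
    for t, page in enumerate(requests, start=1):
        if page in stack:
            stack.remove(page)                     # hit: pull page out of the stack
        else:
            faults.append(t)
            if None in frames:
                frames[frames.index(None)] = page
            else:
                victim = stack.pop()               # bottom of stack = LRU page
                victims.append(victim)
                frames[frames.index(victim)] = page
        stack.insert(0, page)                      # referenced page goes on top
    return frames, faults, victims, stack


if __name__ == "__main__":
    print(simulate_lru_stack(["a", "b", "c", "d"], list("cadbebabcd")))
    # (['a', 'b', 'd', 'c'], [5, 9, 10], ['c', 'd', 'e'], ['d', 'c', 'b', 'a'])
```

Run on the Belady trace a b c d a b e a b c d e from empty frames, this sketch gives 10 faults with 3 frames and 8 with 4. Unlike FIFO, adding a frame does not increase the fault count, as expected for a stack policy.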

No-Locality Workload

10,000 references to 100 unique pages over time; the next page is chosen at random.

[Figure: hit rate vs. cache size (blocks) for OPT, LRU, FIFO, and RAND]

What do you notice?

80%-20% Workload

10,000 references, but with some locality: 80% of references go to 20% of the pages, and 20% of references go to the remaining 80% of the pages.

[Figure: hit rate vs. cache size (blocks) for OPT, LRU, FIFO, and RAND]

What do you notice?

Sequential-in-a-loop Workload

10,000 references: we access 50 pages in sequence, then repeat, in a loop.

[Figure: hit rate vs. cache size (blocks) for OPT, LRU, FIFO, and RAND]

What do you notice?

Implementing LRU

- Add a (64-bit) timestamp to each page table entry.
- A HW counter is incremented on each instruction.
- A page table entry is timestamped with the counter when referenced.
- Replace the page with the lowest timestamp.
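The timestamp scheme can be sketched in Python with a dict standing in for the per-PTE timestamps. Assumptions: `simulate_lru_timestamps` is a hypothetical name, each reference counts as one instruction (so the "HW counter" advances once per request), and pages resident at the start are stamped 0.

```python
def simulate_lru_timestamps(frames, requests):
    """LRU via timestamps: stamp each referenced page with a counter,
    and on a fault replace the resident page with the lowest timestamp."""
    frames = list(frames)
    counter = 0                                    # stand-in for the HW counter
    timestamp = {p: 0 for p in frames if p is not None}  # assumption: initial stamps are 0
    faults = []
    for t, page in enumerate(requests, start=1):
        counter += 1                               # assumption: one instruction per reference
        if page not in frames:
            faults.append(t)
            if None in frames:
                slot = frames.index(None)
            else:
                victim = min(timestamp, key=timestamp.get)   # lowest timestamp = LRU
                del timestamp[victim]
                slot = frames.index(victim)
            frames[slot] = page
        timestamp[page] = counter                  # PTE stamped on every reference
    return frames, faults


if __name__ == "__main__":
    print(simulate_lru_timestamps(["a", "b", "c", "d"], list("cadbebabcd")))
    # (['a', 'b', 'd', 'c'], [5, 9, 10])
```

The result matches the stack-based LRU trace; the cost moves from maintaining a stack on every reference to scanning all timestamps on every fault.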

Implementing LRU: Approximate LRU through Aging

- Keep a k-bit tag in each page table entry.
- At every "tick":
  1. Shift the tag right one bit.
  2. Copy the Referenced (R) bit into the leftmost bit of the tag.
  3. Reset the Referenced bits to 0.
- If needed, evict the page with the lowest tag.

| | Tick 0 | Tick 1 | Tick 2 | Tick 3 | Tick 4 |
|---|---|---|---|---|---|
| R bits (pages 0-5) | 1 0 1 0 1 1 | 1 1 0 0 1 0 | 1 1 0 1 0 1 | 1 0 0 0 1 0 | 0 1 1 0 0 0 |
| Page 0 | 10000000 | 11000000 | 11100000 | 11110000 | 01111000 |
| Page 1 | 00000000 | 10000000 | 11000000 | 01100000 | 10110000 |
| Page 2 | 10000000 | 01000000 | 00100000 | 00010000 | 10001000 |
| Page 3 | 00000000 | 00000000 | 10000000 | 01000000 | 00100000 |
| Page 4 | 10000000 | 11000000 | 01100000 | 10110000 | 01011000 |
| Page 5 | 10000000 | 01000000 | 10100000 | 01010000 | 00101000 |

The Clock Algorithm

- Organize the pages in memory as a circular list.
- When a page is referenced, set its reference bit R to 1.
- On a page fault, look at the page the hand points to:
  - if R = 0: evict the page, and set the R bit of the newly loaded page to 1
  - else (R = 1): clear R and advance the hand

[Figure: Pages 0-5 arranged in a circle, each page table entry showing its R bit and frame #, with the clock hand]

Clock Page Replacement

| Time | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Requests | | c | a | d | b | e | b | a | b | c | d |
| Frame 0 | a | a | a | a | a | e | e | e | e | e | d |
| Frame 1 | b | b | b | b | b | b | b | b | b | b | b |
| Frame 2 | c | c | c | c | c | c | c | a | a | a | a |
| Frame 3 | d | d | d | d | d | d | d | d | d | c | c |
| Faults | | | | | | X | | X | | X | X |

Page table entries for resident pages (R bit, page):

| Time | 0-4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|
| Frame 0 | 1 a | 1 e | 1 e | 1 e | 1 e | 1 e | 1 d |
| Frame 1 | 1 b | 0 b | 1 b | 0 b | 1 b | 1 b | 0 b |
| Frame 2 | 1 c | 0 c | 0 c | 1 a | 1 a | 1 a | 0 a |
| Frame 3 | 1 d | 0 d | 0 d | 0 d | 0 d | 1 c | 0 c |

The Second Chance Algorithm

Dirty pages get a "second chance" before eviction: synchronously replacing dirty pages is expensive!

| If the clock's hand points at P and this is P's state (dirty, R)... | ...this is what happens (dirty, R) |
|---|---|
| 0 0 | replace page |
| 0 1 | 0 0 |
| 1 0 | 0 0 [start asynchronous transfer of dirty page to disk] |
| 1 1 | 1 0 |
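Both LRU approximations above (aging and the clock) can be sketched in a few lines of Python. The function names `age` and `clock` are hypothetical. `age` performs one aging tick on 8-bit tags, with "reset R" modeled by the caller supplying fresh R bits for each tick. `clock` assumes every frame is occupied, every resident page starts with R = 1, and the hand starts at frame 0. It also advances the hand past a newly loaded page, which is what the R-bit snapshots in the clock trace imply.

```python
def age(tags, r_bits, k=8):
    """One aging tick: shift each k-bit tag right, then copy the page's
    R bit into the most significant bit of its tag."""
    return [(tag >> 1) | (r << (k - 1)) for tag, r in zip(tags, r_bits)]


def clock(frames, requests, hand=0):
    """Clock replacement over fully occupied frames, one R bit per frame."""
    frames = list(frames)
    ref = [1] * len(frames)                 # assumption: all R bits start at 1
    faults = []
    for t, page in enumerate(requests, start=1):
        if page in frames:
            ref[frames.index(page)] = 1     # a reference sets R
            continue
        faults.append(t)
        while ref[hand] == 1:               # R = 1: clear it and advance
            ref[hand] = 0
            hand = (hand + 1) % len(frames)
        frames[hand] = page                 # R = 0: evict, load the new page
        ref[hand] = 1
        hand = (hand + 1) % len(frames)     # move past the newly loaded page
    return frames, ref, faults


if __name__ == "__main__":
    ticks = [[1, 0, 1, 0, 1, 1], [1, 1, 0, 0, 1, 0], [1, 1, 0, 1, 0, 1],
             [1, 0, 0, 0, 1, 0], [0, 1, 1, 0, 0, 0]]
    tags = [0] * 6
    for r_bits in ticks:
        tags = age(tags, r_bits)
    print([format(tag, "08b") for tag in tags])
    print("evict page", tags.index(min(tags)))     # evict page 3
    print(clock(["a", "b", "c", "d"], list("cadbebabcd")))
    # (['d', 'b', 'a', 'c'], [1, 0, 0, 0], [5, 7, 9, 10])
```

After Tick 4, page 3 has the lowest tag (00100000), so it would be the aging victim. The clock run ends in the same state as the last column of the clock trace.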

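Second Chance Page Replacement

Requests marked (w) are writes.

| Time | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Requests | | c | a (w) | d | b (w) | e | b | a (w) | b | c | d |
| Frame 0 | a | a | a | a | a | a | a | a | a | a | a |
| Frame 1 | b | b | b | b | b | b | b | b | b | b | d |
| Frame 2 | c | c | c | c | c | e | e | e | e | e | e |
| Frame 3 | d | d | d | d | d | d | d | d | d | c | c |
| Faults | | | | | | X | | | | X | X |

Page table entries for resident pages (dirty bit, R bit, page), showing the state after each time step's request:

| Time | 0 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|
| Frame 0 | 01 a | 11 a | 00 a | 00 a | 11 a | 11 a | 11 a | 00 a |
| Frame 1 | 01 b | 11 b | 00 b | 01 b | 01 b | 01 b | 01 b | 01 d |
| Frame 2 | 01 c | 01 c | 01 e | 01 e | 01 e | 01 e | 01 e | 00 e |
| Frame 3 | 01 d | 01 d | 00 d | 00 d | 00 d | 00 d | 01 c | 00 c |
| Async copy | | | a, b | | | | | a |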
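The second-chance rules can be sketched as a Python simulator that tracks a (dirty, R) pair per frame. This is a minimal model under assumptions: `second_chance` is a hypothetical name, requests are `(page, is_write)` pairs, all frames are occupied, resident pages start clean with R = 1, the hand starts at frame 0, and an asynchronous write-back is modeled as clearing the dirty bit immediately (as the rule table's 1 0 → 0 0 row does) while recording `(time, page)` in `async_writes`.

```python
def second_chance(frames, requests, hand=0):
    """Clock with dirty bits: a dirty page is scheduled for asynchronous
    write-back and skipped, instead of being replaced synchronously."""
    frames = list(frames)
    dirty = [0] * len(frames)        # assumption: resident pages start clean
    ref = [1] * len(frames)          # ...and with R = 1
    faults, async_writes = [], []
    for t, (page, is_write) in enumerate(requests, start=1):
        if page in frames:
            i = frames.index(page)
        else:
            faults.append(t)
            while True:
                state = (dirty[hand], ref[hand])
                if state == (0, 0):
                    break                                    # replace this page
                if state == (1, 0):
                    async_writes.append((t, frames[hand]))   # start async transfer
                    dirty[hand] = 0                          # (1,0) -> (0,0)
                else:
                    ref[hand] = 0                            # (0,1)->(0,0), (1,1)->(1,0)
                hand = (hand + 1) % len(frames)
            i = hand
            frames[i], dirty[i] = page, 0
            hand = (hand + 1) % len(frames)
        ref[i] = 1                   # every reference sets R
        if is_write:
            dirty[i] = 1             # writes set the dirty bit
    return frames, list(zip(dirty, ref)), faults, async_writes


if __name__ == "__main__":
    reqs = [("c", False), ("a", True), ("d", False), ("b", True), ("e", False),
            ("b", False), ("a", True), ("b", False), ("c", False), ("d", False)]
    print(second_chance(["a", "b", "c", "d"], reqs))
    # (['a', 'd', 'e', 'c'], [(0, 0), (0, 1), (0, 0), (0, 0)], [5, 9, 10],
    #  [(5, 'a'), (5, 'b'), (10, 'a')])
```

On the trace above, this yields 3 faults instead of the 4 that plain clock incurs. This is because dirty pages a and b get an extra sweep while their write-backs proceed.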

