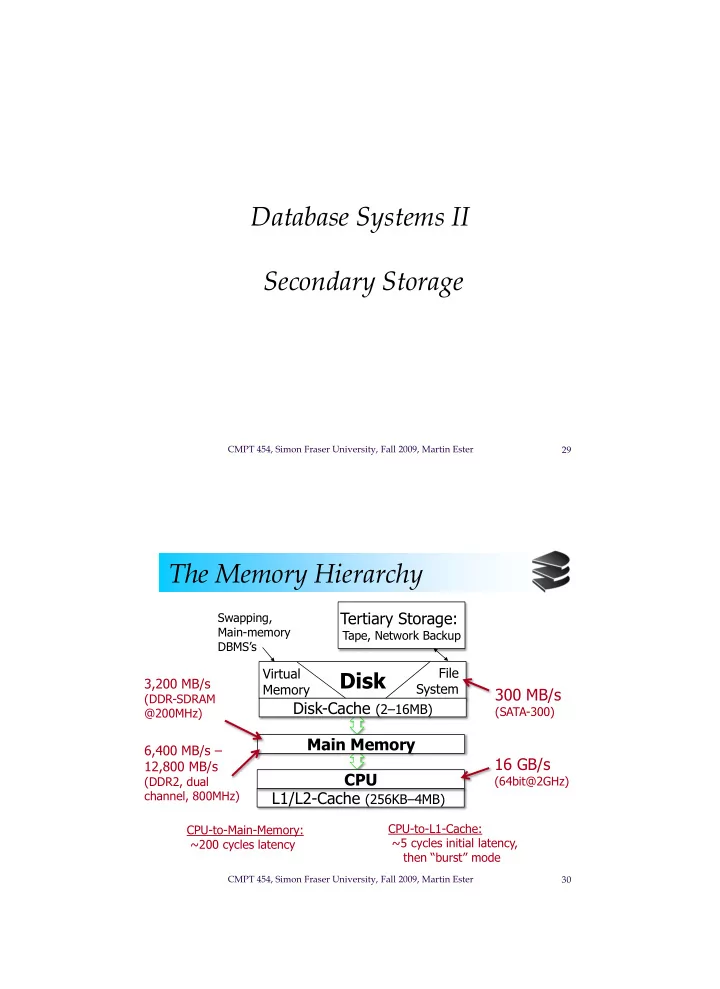

Database Systems II

Secondary Storage

CMPT 454, Simon Fraser University, Fall 2009, Martin Ester

The Memory Hierarchy

[Figure: the memory hierarchy with typical capacities and transfer rates]

- Tertiary storage: tape, network backup.
- Disk with disk cache (2 to 16 MB): 300 MB/s (SATA-300).
- Paths between disk and main memory: virtual memory (swapping, main-memory DBMSs) and the file system.
- Main memory: 3,200 MB/s (DDR-SDRAM @200MHz); 6,400 MB/s to 12,800 MB/s (DDR2, dual channel, 800MHz).
- CPU with L1/L2 cache (256 KB to 4 MB): 16 GB/s (64bit@2GHz).
- CPU-to-L1-cache: ~5 cycles initial latency, then "burst" mode.
- CPU-to-main-memory: ~200 cycles latency.

The Memory Hierarchy

Cache

- Data and instructions are held in the cache when needed by the CPU.
- On-board (L1) cache is on the same chip as the CPU; L2 cache is on a separate chip.
- Capacity ~ 1 MB, access time a few nanoseconds.

Main memory

- All active programs and data need to be in main memory.
- Capacity ~ 1 GB, access time 10-100 nanoseconds.

Secondary storage

- Secondary storage is used for permanent storage of large amounts of data, typically on a magnetic disk.
- Capacity up to 1 TB, access time ~ 10 milliseconds.

Tertiary storage

- Stores data collections that do not fit onto secondary storage, e.g. on magnetic tapes or optical disks.
- Capacity ~ 1 PB, access time seconds to minutes.

The Memory Hierarchy

Trade-off

- The larger the capacity of a storage device, the slower the access (and vice versa).
- A volatile storage device forgets its contents when power is switched off; a non-volatile device retains its contents.
- Secondary storage and tertiary storage are non-volatile; all other levels are volatile.
- A DBS needs non-volatile (secondary) storage devices to store data permanently.

Use of the levels by a DBS

- RAM (main memory) holds the subset of the database used by current transactions.
- Disk stores the current version of the entire database (secondary storage).
- Tapes archive older versions of the database (tertiary storage).

The Memory Hierarchy

- Typically, programs are executed in virtual memory whose size equals the address space of the processor.
- Virtual memory is managed by the operating system, which keeps the most relevant part in main memory and the rest on disk.
- A DBS manages its data itself and does not rely on virtual memory.
- However, main-memory DBSs do manage their data through virtual memory.

Moore's Law

Gordon Moore observed in 1965 that the density of integrated circuits (i.e., the number of transistors per unit area) increases at an exponential rate; the rate is commonly quoted as a doubling roughly every 18 months.

Parameters that follow Moore's law:

- number of instructions per second that can be executed for unit cost,
- number of main memory bits that can be bought for unit cost,
- number of bytes on a disk that can be bought for unit cost.
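To get a feeling for what a doubling every 18 months means, the following minimal sketch computes the growth factor after a given number of years. The function name `growth_factor` and its parameters are illustrative only, and the 18-month period is simply the figure quoted on the slide.

```python
def growth_factor(years: float, doubling_months: float = 18.0) -> float:
    """Factor by which a quantity grows if it doubles every `doubling_months`."""
    return 2.0 ** (years * 12.0 / doubling_months)


if __name__ == "__main__":
    for years in (1.5, 3, 15):
        # 15 years correspond to 10 doublings, i.e. roughly three orders of magnitude.
        print(f"after {years} years: factor {growth_factor(years):.0f}")
```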

Moore's Law

[Figure: number of transistors on an integrated circuit]

[Figure: disk capacity]

Moore's Law

Some other important hardware parameters do not follow Moore's law and grow much more slowly. These are, in particular,

- speed of main memory access, and
- speed of disk access.

For example, disk latencies (seek times) have almost stagnated for the past 5 years. Thus, the relative cost of moving data from one level of the memory hierarchy to the next becomes progressively larger.

Disks

- Secondary storage device of choice.
- Data is stored and retrieved in units called disk blocks or pages.
- Main advantage over tapes: random access vs. sequential access.
- Unlike RAM, the time to retrieve a disk page varies depending on its location on disk.
- Therefore, the relative placement of pages on disk has a major impact on DBMS performance!

Disks

A disk consists of two main moving parts: the disk assembly and the head assembly. The disk assembly stores information; the head assembly reads and writes information.

[Figure: disk components, labelled spindle, tracks, platters, disk head, arm movement, arm assembly]

- The platters rotate around a central spindle.
- Upper and lower platter surfaces are covered with magnetic material, which is used to store bits.
- The arm assembly is moved in or out to position a head on a desired track.
- All tracks under the heads at the same time make up a cylinder (imaginary!).
- Only one head reads/writes at any one time.

Disks

[Figure: top view of a platter surface, showing tracks, sectors, and gaps]

Block size is a multiple of the sector size (which is fixed).

The time to access (read/write) a disk block (disk latency) consists of three components:

- seek time (moving the arms to position the disk head on the track),
- rotational delay (waiting for the block to rotate under the head), and
- transfer time (actually moving data to/from the disk surface).

Seek time and rotational delay dominate.

Disks

Seek time

[Figure: seek time as a function of cylinders traveled; traveling 1 cylinder takes time x, traveling N cylinders takes 3x or 5x]

Average seek time

$$S = \frac{\sum_{i=1}^{N} \sum_{j=1,\, j \neq i}^{N} \mathrm{SEEKTIME}(i \to j)}{N(N-1)}$$

Typical average seek time = 5 ms
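The average seek time is the mean of SEEKTIME(i → j) over all ordered pairs of distinct cylinders. A minimal sketch of that computation follows; the linear seek model `linear_seek_ms` and its constants are hypothetical and only serve to make the example runnable, since the slides do not give a concrete SEEKTIME function.

```python
from typing import Callable


def average_seek_time(n_cylinders: int, seek_time: Callable[[int, int], float]) -> float:
    """Mean of seek_time(i, j) over all ordered pairs i != j of N cylinders."""
    total = 0.0
    for i in range(1, n_cylinders + 1):
        for j in range(1, n_cylinders + 1):
            if i != j:
                total += seek_time(i, j)
    return total / (n_cylinders * (n_cylinders - 1))


def linear_seek_ms(i: int, j: int, startup_ms: float = 1.0,
                   ms_per_cylinder: float = 0.01) -> float:
    """Hypothetical model: fixed start-up cost plus a cost per cylinder traveled."""
    return startup_ms + ms_per_cylinder * abs(i - j)


if __name__ == "__main__":
    print(f"average seek time: {average_seek_time(1000, linear_seek_ms):.2f} ms")
```

With a purely distance-based model, the average distance over distinct pairs works out to (N + 1) / 3 cylinders, which is why the average seek is roughly the time needed to cross one third of the disk.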

Disks

Average rotational delay

[Figure: platter with the current head position and the position of the wanted block]

- Average rotational delay R = 1/2 revolution
- Typical R = 5 ms

Transfer time

- Typical transfer rate: 100 MB/sec
- Typical block size: 16 KB
- Transfer time = block size / transfer rate
- Typical transfer time = 0.16 ms
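The three latency components can be added up with the typical values from the slides (5 ms seek, 5 ms rotational delay, 16 KB blocks, 100 MB/s). The sketch below does this; the function names are illustrative, and decimal units (1 KB = 1000 bytes, 1 MB = 1000 KB) are an assumption chosen to match the slide's rounded 0.16 ms.

```python
KB = 1000          # assumption: decimal units, as the slide's rounding suggests
MB = 1000 * KB


def transfer_time_ms(block_size_bytes: float, rate_bytes_per_s: float) -> float:
    """Transfer time = block size / transfer rate, in milliseconds."""
    return block_size_bytes / rate_bytes_per_s * 1000.0


def disk_latency_ms(seek_ms: float, rotational_ms: float,
                    block_size_bytes: float, rate_bytes_per_s: float) -> float:
    """Disk latency = seek time + rotational delay + transfer time."""
    return seek_ms + rotational_ms + transfer_time_ms(block_size_bytes, rate_bytes_per_s)


if __name__ == "__main__":
    t = transfer_time_ms(16 * KB, 100 * MB)
    total = disk_latency_ms(5.0, 5.0, 16 * KB, 100 * MB)
    print(f"transfer: {t:.2f} ms, total latency: {total:.2f} ms")
```

The transfer time is a small fraction of the total, which illustrates why seek time and rotational delay dominate.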

Disks

- Typical average disk latency is 10 ms, maximum latency 20 ms.
- In 10 ms, a modern microprocessor can execute millions of instructions.
- Thus, the time for a block access by far dominates the time typically needed for processing the data in memory.
- The number of disk I/Os (block accesses) is a good approximation of the cost of a database operation.

Accelerating Disk Access

Organize data by cylinders to minimize the seek time and rotational delay.

'Next' block concept:

- blocks on same track, followed by
- blocks on same cylinder, followed by
- blocks on adjacent cylinder.

Blocks in a file are placed sequentially on disk (by 'next'). The effective rate of reading such a file can then approach the transfer rate of the disk.
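The 'next' block order can be sketched as a mapping from a block's position in the file to a disk address: fill a track first, then the other tracks of the same cylinder, then move to the adjacent cylinder. The names `BlockAddress` and `sequential_layout`, and the geometry parameters, are hypothetical and chosen only for illustration.

```python
from typing import List, NamedTuple


class BlockAddress(NamedTuple):
    cylinder: int
    track: int      # which surface within the cylinder
    slot: int       # position of the block on the track


def sequential_layout(n_blocks: int, blocks_per_track: int,
                      tracks_per_cylinder: int) -> List[BlockAddress]:
    """Place file blocks 0..n_blocks-1 in 'next' order."""
    blocks_per_cylinder = blocks_per_track * tracks_per_cylinder
    layout = []
    for k in range(n_blocks):
        cylinder = k // blocks_per_cylinder              # changes last
        track = (k // blocks_per_track) % tracks_per_cylinder
        slot = k % blocks_per_track                      # changes first
        layout.append(BlockAddress(cylinder, track, slot))
    return layout


if __name__ == "__main__":
    for address in sequential_layout(5, blocks_per_track=2, tracks_per_cylinder=2):
        print(address)
```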

Accelerating Disk Access

Example

Assuming 10 ms average seek time, no rotational delay, 40 MB/s transfer rate.

Read a single 4 KB block:

- Random I/O: about 10 ms
- Sequential I/O: about 10 ms

Read 4 MB in 4 KB blocks (amortized):

- Random I/O: about 10 s
- Sequential I/O: about 0.1 s

Speedup factor of about 100.

Block size selection

- Bigger blocks amortize the I/O cost.
- Bigger blocks read in more data that is not needed and take longer to read.
- A good trade-off is a block size from 4 KB to 16 KB.
- With decreasing memory costs, blocks are becoming bigger!
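The numbers in this example can be reproduced with a short calculation. The sketch below assumes decimal units (4 MB = 1000 blocks of 4 KB), that random I/O pays one seek per block, and that sequential I/O pays a single seek for the whole run; the function names are illustrative.

```python
SEEK_MS = 10.0                  # average seek time from the example
RATE_BYTES_PER_S = 40_000_000   # 40 MB/s, decimal units assumed
BLOCK_BYTES = 4_000             # 4 KB, decimal units assumed


def transfer_ms(n_bytes: float) -> float:
    return n_bytes / RATE_BYTES_PER_S * 1000.0


def random_io_ms(n_blocks: int) -> float:
    """Every block pays its own seek plus its transfer time."""
    return n_blocks * (SEEK_MS + transfer_ms(BLOCK_BYTES))


def sequential_io_ms(n_blocks: int) -> float:
    """One seek, then the blocks are transferred back to back."""
    return SEEK_MS + n_blocks * transfer_ms(BLOCK_BYTES)


if __name__ == "__main__":
    n = 1000  # 4 MB in 4 KB blocks
    rnd, seq = random_io_ms(n), sequential_io_ms(n)
    print(f"random: {rnd / 1000:.2f} s, sequential: {seq / 1000:.2f} s, "
          f"speedup: {rnd / seq:.0f}x")
```

Without rounding, the model gives 10.1 s versus 0.11 s; the slide's factor of 100 comes from rounding both values.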

Accelerating Disk Access

Using multiple disks

- Replace one disk (with one independent head) by many disks (with many independent heads).
- Striping a relation R: distribute its blocks over n disks in a round-robin fashion.
- Assuming that the disk controller, bus and main memory can handle n times the transfer rate, striping a relation across n disks can lead to a speedup factor of up to n.

Disk scheduling

- For I/O requests from different processes, let the disk controller choose the processing order.
- According to the elevator algorithm, the disk controller keeps sweeping back and forth between the innermost and the outermost cylinder, stopping at each cylinder for which there is an I/O request.
- It can reverse the sweep direction as soon as there is no I/O request ahead in the current direction.
- This optimizes the throughput and the average response time.
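A minimal sketch of the elevator algorithm for a fixed set of pending requests is shown below. It is a simplification: a real controller also accepts requests that arrive during a sweep. The function name `elevator_order`, the convention that direction +1 means moving toward higher cylinder numbers, and the sample request list are all assumptions made for this illustration.

```python
from typing import List


def elevator_order(requests: List[int], head: int, direction: int = 1) -> List[int]:
    """Order in which pending cylinder requests are served.

    direction = +1 sweeps toward higher cylinder numbers, -1 toward lower ones.
    The sweep reverses as soon as no request lies ahead of the head.
    """
    pending = sorted(requests)
    order: List[int] = []
    while pending:
        if direction > 0:
            ahead = [c for c in pending if c >= head]
        else:
            ahead = [c for c in reversed(pending) if c <= head]
        if not ahead:
            direction = -direction   # nothing ahead: reverse the sweep
            continue
        order.extend(ahead)          # serve requests in sweep order
        head = ahead[-1]
        pending = [c for c in pending if c not in ahead]
    return order


if __name__ == "__main__":
    print(elevator_order([98, 183, 37, 122, 14, 124, 65, 67], head=53))
```

Starting at cylinder 53 and moving upward, the requests at 65 through 183 are served first, and the remaining ones at 37 and 14 are served on the way back.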

Accelerating Disk Access

Double buffering

- In some scenarios, we can predict the order in which blocks will be requested from disk by some process.
- Prefetching (double buffering) is the method of fetching the necessary blocks into the buffer in advance.
- Requires enough buffer space.
- Speedup factor up to n, where n is the number of blocks requested by a process.

Single buffering

(1) Read B1 into the buffer
(2) Process the data in the buffer
(3) Read B2 into the buffer
(4) Process the data in the buffer
...

Execution time = n(P+R), where

- P = time to process one block,
- R = time to read in one block,
- n = number of blocks read.
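The single-buffering cost n(P+R) can be compared with a simple overlap model. The formula used for the overlapped case is an assumption, not taken from this section: it supposes two buffers, so that reading block k+1 proceeds fully in parallel with processing block k, and only the first read and the last processing step are not overlapped.

```python
def single_buffering_time(n: int, p: float, r: float) -> float:
    """Read and process strictly alternate: n * (P + R)."""
    return n * (p + r)


def overlapped_time(n: int, p: float, r: float) -> float:
    """Assumed two-buffer model: the read of block k+1 overlaps the
    processing of block k, so each middle step costs max(P, R)."""
    if n == 0:
        return 0.0
    return r + (n - 1) * max(p, r) + p


if __name__ == "__main__":
    n, p, r = 100, 1.0, 1.0   # hypothetical times in milliseconds
    print(f"single buffering: {single_buffering_time(n, p, r):.0f} ms")
    print(f"with overlap:     {overlapped_time(n, p, r):.0f} ms")
```

Under this model, the total time approaches n · max(P, R) for large n, so the benefit is greatest when P and R are of similar size.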
