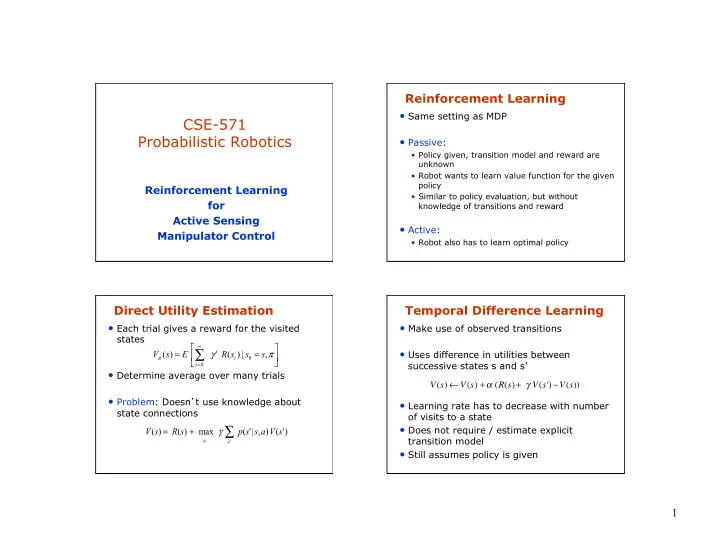

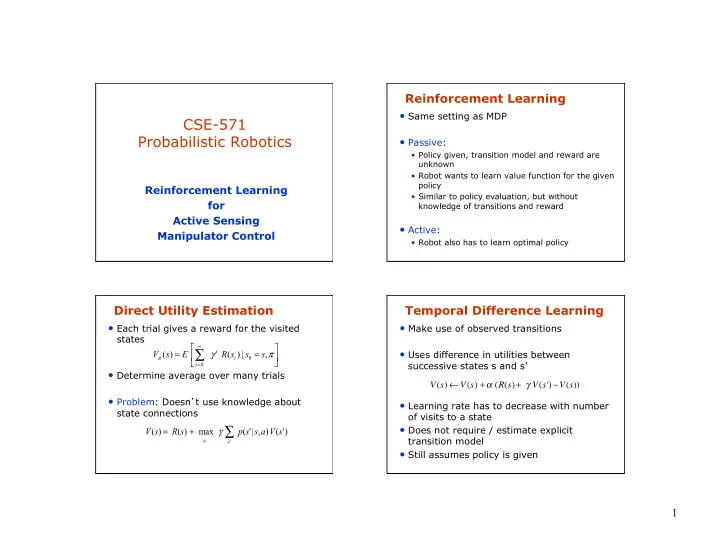

CSE-571 Probabilistic Robotics

Reinforcement Learning, Active Sensing, Manipulator Control

Reinforcement Learning

- Same setting as MDP
- Passive:
  - Policy given, transition model and reward are unknown
  - Robot wants to learn value function for the given policy
  - Similar to policy evaluation, but without knowledge of transitions and reward
- Active:
  - Robot also has to learn optimal policy

Direct Utility Estimation

- Each trial gives a reward for the visited states:

$$V^\pi(s) = E\left[\sum_{t=0}^{\infty} \gamma^t R(s_t) \,\middle|\, \pi, s_0 = s\right]$$

- Determine average over many trials
- Problem: Doesn't use knowledge about state connections:

$$V(s) = R(s) + \gamma \max_a \sum_{s'} p(s' \mid s, a)\, V(s')$$

Temporal Difference Learning

- Make use of observed transitions
- Uses difference in utilities between successive states s and s':

$$V(s) \leftarrow V(s) + \alpha \big(R(s) + \gamma V(s') - V(s)\big)$$

- Learning rate has to decrease with number of visits to a state
- Does not require / estimate explicit transition model
- Still assumes policy is given
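To make the passive setting concrete, here is a minimal sketch that evaluates a fixed policy on a hypothetical three-state chain (the transition matrix `P`, rewards `R`, and γ = 0.9 are assumptions chosen for illustration). It compares the exact solution of the policy's linear Bellman system with direct utility estimation (averaging observed returns at every visit) and with TD learning. The step size α = n^(-0.6), where n counts visits to the state, is one assumed schedule that decreases with visits as the slide requires; both learners only see sampled trajectories, never `P`.

```python
import numpy as np

GAMMA = 0.9
TERMINAL = 2
R = np.array([0.0, 0.0, 1.0])           # reward R(s) received in state s (assumed)
P = np.array([[0.5, 0.5, 0.0],          # p(s' | s) under the fixed policy pi (assumed)
              [0.5, 0.0, 0.5],
              [0.0, 0.0, 1.0]])         # state 2 is terminal

def exact_values():
    """Solve V = R + gamma * P V for non-terminal states; V(terminal) = R(terminal)."""
    n = TERMINAL                                    # states 0..n-1 are non-terminal
    A = np.eye(n) - GAMMA * P[:n, :n]
    b = R[:n] + GAMMA * P[:n, TERMINAL] * R[TERMINAL]
    return np.append(np.linalg.solve(A, b), R[TERMINAL])

def run_episode(rng, start=0):
    """Sample one trajectory under the fixed policy until the terminal state."""
    states = [start]
    while states[-1] != TERMINAL:
        states.append(int(rng.choice(3, p=P[states[-1]])))
    return states

def direct_utility_estimation(n_episodes, rng):
    """Average the observed discounted return from every visit to each state."""
    totals, counts = np.zeros(3), np.zeros(3)
    for _ in range(n_episodes):
        G = 0.0
        for s in reversed(run_episode(rng)):       # accumulate returns backwards
            G = R[s] + GAMMA * G
            totals[s] += G
            counts[s] += 1
    return totals / np.maximum(counts, 1)

def td_learning(n_episodes, rng, omega=0.6):
    """TD(0): V(s) <- V(s) + alpha * (R(s) + gamma * V(s') - V(s))."""
    V = np.zeros(3)
    V[TERMINAL] = R[TERMINAL]                       # terminal value is known
    visits = np.zeros(3)
    for _ in range(n_episodes):
        states = run_episode(rng)
        for s, s_next in zip(states[:-1], states[1:]):
            visits[s] += 1
            alpha = visits[s] ** -omega             # decreases with visits to s
            V[s] += alpha * (R[s] + GAMMA * V[s_next] - V[s])
    return V

rng = np.random.default_rng(0)
print(exact_values())
print(direct_utility_estimation(5000, rng))
print(td_learning(5000, rng))
```

All three estimates should agree to within sampling noise (roughly 0.58, 0.71, and 1.0 for states 0 to 2). Note that the TD learner never touches `P`: it only uses observed transitions (s, s').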

Active Reinforcement Learning

- First learn model, then use Bellman equation:

$$V(s) = R(s) + \gamma \max_a \sum_{s'} p(s' \mid s, a)\, V(s')$$

- Use this model to compute the optimal policy
- Problem?
  - Robot must trade off exploration (try new actions/states) and exploitation (follow current policy)

Q-Learning

- Model-free: learn action-value function
- Equilibrium for Q-values:

$$Q(a, s) = R(s) + \gamma \sum_{s'} p(s' \mid a, s) \max_{a'} Q(a', s')$$

- Updates:

$$Q(a, s) \leftarrow Q(a, s) + \alpha \big(R(s) + \gamma \max_{a'} Q(a', s') - Q(a, s)\big)$$

RL for Active Sensing

Active Sensing

- Sensors have limited coverage & range
- Question: Where to move / point sensors?
- Typical scenario: Uncertainty in only one type of state variable
  - Robot location [Fox et al., 98; Kroese & Bunschoten, 99; Roy & Thrun 99]
  - Object / target location(s) [Denzler & Brown, 02; Kreucher et al., 04; Chung et al., 04]
- Predominant approach: Minimize expected uncertainty (entropy)
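A minimal tabular sketch of the Q-learning update above, using ε-greedy action selection as one common way to trade off exploration and exploitation (the slides do not prescribe a particular scheme). The five-cell corridor, its rewards, ε = 0.2, and the step-size schedule are all hypothetical. Q is stored as `Q[a, s]` to match the slide's Q(a, s) notation.

```python
import numpy as np

N_STATES, GOAL, GAMMA = 5, 4, 0.9      # hypothetical corridor: cells 0..4, goal at 4
LEFT, RIGHT = 0, 1
R = np.zeros(N_STATES)                 # reward R(s) for being in state s
R[GOAL] = 1.0

def step(s, a):
    """Deterministic move one cell left or right, clipped to the corridor."""
    return min(max(s + (1 if a == RIGHT else -1), 0), GOAL)

def greedy(Q, s, rng):
    """argmax_a Q(a, s), breaking ties at random."""
    best = np.flatnonzero(Q[:, s] == Q[:, s].max())
    return int(rng.choice(best))

def q_learning(n_episodes=2000, epsilon=0.2, omega=0.6, seed=0):
    rng = np.random.default_rng(seed)
    Q = np.zeros((2, N_STATES))        # Q[a, s], matching the slide's Q(a, s)
    visits = np.zeros((2, N_STATES))
    for _ in range(n_episodes):
        s = 0
        while s != GOAL:
            # epsilon-greedy: explore with probability epsilon, otherwise exploit
            if rng.random() < epsilon:
                a = int(rng.integers(2))
            else:
                a = greedy(Q, s, rng)
            s_next = step(s, a)
            # the terminal goal is worth R(goal); otherwise bootstrap with max_a' Q(a', s')
            v_next = R[GOAL] if s_next == GOAL else Q[:, s_next].max()
            visits[a, s] += 1
            alpha = visits[a, s] ** -omega   # step size decreases with visits to (a, s)
            Q[a, s] += alpha * (R[s] + GAMMA * v_next - Q[a, s])
            s = s_next
    return Q

Q = q_learning()
print(np.round(Q, 3))
```

After training, the greedy action is "right" in every cell and Q(right, s) approaches γ^(4−s). Because the update bootstraps with the max over a', the learned values describe the greedy policy even though the agent behaved ε-greedily.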

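The "minimize expected uncertainty (entropy)" approach can be sketched for a discrete belief over a target's location: for each candidate sensing action (here, hypothetically, "point the sensor at cell c"), average the posterior entropy over the possible observations and pick the action with the lowest value. The binary detector with hit rate 0.9 and false-alarm rate 0.1 is an assumed sensor model, not one taken from the cited work.

```python
import numpy as np

def entropy(b):
    """Shannon entropy (nats) of a discrete distribution."""
    p = b[b > 0]
    return float(-(p * np.log(p)).sum())

def likelihood(n_cells, cell, detected, p_hit=0.9, p_false=0.1):
    """p(z | target in j) for a hypothetical binary detector pointed at `cell`."""
    like = np.full(n_cells, p_false)
    like[cell] = p_hit
    return like if detected else 1.0 - like

def expected_entropy(b, cell, **sensor):
    """E_z[H(posterior | z)] for pointing the sensor at `cell`."""
    total = 0.0
    for detected in (True, False):
        joint = likelihood(len(b), cell, detected, **sensor) * b   # p(z, target = j)
        p_z = joint.sum()
        if p_z > 0:
            total += p_z * entropy(joint / p_z)                      # Bayes update
    return total

def best_sensing_action(b, **sensor):
    """Greedy one-step choice: the cell whose observation minimizes expected entropy."""
    return int(np.argmin([expected_entropy(b, c, **sensor) for c in range(len(b))]))

belief = np.array([0.05, 0.45, 0.45, 0.05])
print(entropy(belief), best_sensing_action(belief))
```

With identical detectors everywhere, the best action is the cell whose detection outcome is least predictable (here one of the two likely cells). This is a single-step greedy rule; it does not reason about sequences of sensing actions.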
Active Sensing in Multi-State Domains

- Uncertainty in multiple, different state variables
  - RoboCup: robot & ball location, relative goal location, …
- Which uncertainties should be minimized?
- Importance of uncertainties changes over time:
  - Ball location has to be known very accurately before a kick.
  - Accuracy not important if ball is on other side of the field.
- Has to consider sequence of sensing actions!
- RoboCup: typically use hand-coded strategies.

Converting Beliefs to Augmented States

[Figure: a belief is converted into an augmented state made up of state variables and uncertainty variables]

Why Reinforcement Learning?

- No accurate model of the robot and the environment.
- Particularly difficult to assess how (projected) entropies evolve over time.
- Possible to simulate robot and noise in actions and observations.

Projected Uncertainty (Goal Orientation)

[Figure: projected uncertainty of the goal orientation, panels (a)–(d)]
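One way to realize the belief-to-augmented-state conversion is to keep a point estimate of each state variable and append a scalar uncertainty variable. This sketch assumes each belief is a particle set and summarizes its uncertainty by the entropy of a Gaussian fitted to the particles, H = ½ ln((2πe)^k det Σ). The Gaussian approximation and the toy particle sets are assumptions for illustration, not the method used on the slides.

```python
import numpy as np

def gaussian_entropy(cov):
    """Differential entropy (nats) of a k-dim Gaussian: 0.5 * ln((2*pi*e)^k * det(cov))."""
    cov = np.atleast_2d(cov)
    sign, logdet = np.linalg.slogdet(cov)
    if sign <= 0:
        raise ValueError("covariance must be positive definite")
    k = cov.shape[0]
    return 0.5 * (k * np.log(2 * np.pi * np.e) + logdet)

def augmented_state(beliefs):
    """Concatenate [mean, entropy] for each particle-set belief (one per state variable)."""
    features = []
    for particles in beliefs:
        particles = np.asarray(particles, dtype=float)
        features.extend(np.atleast_1d(particles.mean(axis=0)))               # state variables
        features.append(gaussian_entropy(np.cov(particles, rowvar=False)))  # uncertainty variable
    return np.array(features)

rng = np.random.default_rng(0)
ball = rng.normal([1.0, 0.5], 0.05, size=(500, 2))    # well-localized ball (hypothetical)
robot = rng.normal([0.0, 0.0], 0.5, size=(500, 2))    # uncertain robot position (hypothetical)
print(augmented_state([ball, robot]))                 # [x_b, y_b, H_b, x_r, y_r, H_r]
```

The resulting vector is an ordinary MDP state, so a standard RL method can learn when reducing a particular entropy actually pays off.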

Least-squares Policy Iteration

- Model-free approach
- Approximates Q-function by linear function of state features:

$$\hat{Q}^\pi(s, a) \approx Q^\pi(s, a; w) = \sum_{j=1}^{k} \phi_j(s, a)\, w_j$$

- No discretization needed
- No iterative procedure needed for policy evaluation
- Off-policy: can re-use samples

[Lagoudakis and Parr 01, 03]

Active Sensing for Goal Scoring

- Task: AIBO trying to score goals
- Sensing actions: look at ball, or the goals, or the markers
- Fixed motion control policy: Uses most likely states to dock the robot to the ball, then kicks the ball into the goal.
- Find sensing strategy that "best" supports the given control policy.

[Figure: robot, ball, goal, and markers on the field]

Augmented State Space and Features

- State variables:
  - Distance to ball
  - Ball orientation
- Uncertainty variables:
  - Entropy of ball location
  - Entropy of robot location
  - Entropy of goal orientation
- Features:

$$\phi(s, a) = \big(d_b,\ \theta_b,\ H_b,\ H_r,\ H_{\theta_g},\ \theta_a,\ 1\big)$$

[Figure: robot, ball, and goal with angles θ_b and θ_g]

Experiments

- Strategy learned from simulation
- Episode ends when:
  - Scores (reward +5)
  - Misses (reward 1.5 – 0.1)
  - Loses track of the ball (reward -5)
  - Fails to dock / accidentally kicks the ball away (reward -5)
- Applied to real robot
- Compared with 2 hand-coded strategies:
  - Panning: robot periodically scans
  - Pointing: robot periodically looks up at markers/goals
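A minimal sketch of LSPI: LSTD-Q evaluates the current policy in one linear solve, A w = b with A = Σ φ(s,a)(φ(s,a) − γ φ(s′, π(s′)))ᵀ and b = Σ φ(s,a) r, and the policy is then made greedy with respect to the fitted Q. The corridor task, the reward of 1 on reaching the goal, and the one-hot features (the tabular special case of the linear form above) are hypothetical; the AIBO features are not reproduced here.

```python
import numpy as np

N_STATES, GOAL, N_ACTIONS, GAMMA = 5, 4, 2, 0.9   # hypothetical corridor; action 1 = right
K = N_STATES * N_ACTIONS                          # number of features k

def phi(s, a):
    """Hypothetical one-hot features phi_j(s, a); all zero at the terminal goal."""
    f = np.zeros(K)
    if s != GOAL:
        f[a * N_STATES + s] = 1.0
    return f

def collect_samples(n, rng):
    """Random (s, a, r, s') transitions; reward 1 on reaching the goal (assumed)."""
    samples = []
    for _ in range(n):
        s, a = int(rng.integers(GOAL)), int(rng.integers(N_ACTIONS))
        s_next = min(max(s + (1 if a == 1 else -1), 0), GOAL)
        samples.append((s, a, 1.0 if s_next == GOAL else 0.0, s_next))
    return samples

def greedy_policy(w):
    """pi(s) = argmax_a phi(s, a) . w"""
    return lambda s: int(np.argmax([phi(s, a) @ w for a in range(N_ACTIONS)]))

def lstdq(samples, policy, reg=1e-6):
    """Policy evaluation by a single linear solve (no iterative sweeps)."""
    A, b = reg * np.eye(K), np.zeros(K)
    for s, a, r, s_next in samples:
        f = phi(s, a)
        A += np.outer(f, f - GAMMA * phi(s_next, policy(s_next)))
        b += r * f
    return np.linalg.solve(A, b)

def lspi(samples, max_iters=20, tol=1e-8):
    """Alternate LSTD-Q evaluation and greedy improvement, reusing the same samples."""
    w = np.zeros(K)
    for _ in range(max_iters):
        w_new = lstdq(samples, greedy_policy(w))
        if np.linalg.norm(w_new - w) < tol:
            break
        w = w_new
    return w_new

w = lspi(collect_samples(2000, np.random.default_rng(0)))
print(np.round(w.reshape(N_ACTIONS, N_STATES), 3))
```

Because `lstdq` only consumes (s, a, r, s′) tuples, the same randomly collected sample set is reused at every iteration, which is the off-policy property listed above. With richer features, only `phi` would change.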

Rewards (simulation)

[Figure: average rewards vs. episodes (0–700) for the Learned, Pointing, and Panning strategies]

Success Ratio (simulation)

[Figure: success ratio vs. episodes (0–700) for the Learned, Pointing, and Panning strategies]

Learned Strategy

- Initially, robot learns to dock (only looks at ball)
- Then, robot learns to look at goal and markers
- Robot looks at ball when docking
- Briefly before docking, adjusts by looking at the goal
- Prefers looking at the goal instead of markers for location information

Results on Real Robots

- 45 episodes of goal kicking

| Strategy | Goals | Misses | Avg. Miss Distance | Kick Failures |
|---|---|---|---|---|
| Learned | 31 | 10 | 6 ± 0.3 cm | 4 |
| Pointing | 22 | 19 | 9 ± 2.2 cm | 4 |
| Panning | 15 | 21 | 22 ± 9.4 cm | 9 |
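As a quick consistency check on the real-robot results listed above: for each strategy, goals, misses, and kick failures sum to the 45 episodes, so the goal rates follow directly.

```python
# Real-robot results from the slide: (goals, misses, kick failures) out of 45 episodes
RESULTS = {"Learned": (31, 10, 4), "Pointing": (22, 19, 4), "Panning": (15, 21, 9)}

def success_ratios(results=RESULTS, n_episodes=45):
    ratios = {}
    for name, (goals, misses, failures) in results.items():
        assert goals + misses + failures == n_episodes   # every episode is accounted for
        ratios[name] = goals / n_episodes
    return ratios

for name, ratio in success_ratios().items():
    print(f"{name}: {ratio:.2f}")   # Learned: 0.69, Pointing: 0.49, Panning: 0.33
```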

Adding Opponents

[Figure: robot, opponent, ball, and goal]

- Additional features: ball velocity, knowledge about other robots

Learning With Opponents

[Figure: lost-ball ratio vs. episodes (0–700) for learning with pre-trained data, learning from scratch, and the pre-trained strategy]

- Robot learned to look at ball when opponent is close to it, thereby avoiding losing track of it.

Policy Search

- Works directly on parameterized representation of policy
- Compute gradient of expected reward wrt. policy parameters
- Get gradient analytically or empirically via sampling (simulation)

PILCO: Probabilistic Inference for Learning Control

- Model-based policy search
- Learn Gaussian process dynamics model
- Goal-directed exploration → no "motor babbling" required
- Consider model uncertainties → robustness to model errors
- Extremely data efficient
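The "learn Gaussian process dynamics model" step can be sketched with plain GP regression from a state-action pair (x, u) to the state change Δx. Everything here is an assumption for illustration: the 1-D toy system `true_dx`, the squared-exponential kernel, and the hyperparameters, which are fixed by hand rather than fitted to data. The sketch also omits PILCO's analytic propagation of uncertain (Gaussian) inputs through the model during a rollout.

```python
import numpy as np

def rbf(A, B, lengthscale, signal_var):
    """Squared-exponential kernel matrix between the rows of A and B."""
    d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1)
    return signal_var * np.exp(-0.5 * d2 / lengthscale**2)

class GPDynamics:
    """GP regression from (x, u) to the state change dx (single output, fixed hyperparameters)."""

    def __init__(self, lengthscale=1.0, signal_var=1.0, noise_var=1e-4):
        self.ls, self.sf2, self.sn2 = lengthscale, signal_var, noise_var

    def fit(self, XU, dX):
        self.XU = np.asarray(XU, dtype=float)
        K = rbf(self.XU, self.XU, self.ls, self.sf2) + self.sn2 * np.eye(len(self.XU))
        self.L = np.linalg.cholesky(K)
        self.alpha = np.linalg.solve(self.L.T, np.linalg.solve(self.L, np.asarray(dX, float)))
        return self

    def predict(self, XU_star):
        """Posterior mean and (latent) variance of dx at new (x, u) inputs."""
        XU_star = np.asarray(XU_star, dtype=float)
        Ks = rbf(XU_star, self.XU, self.ls, self.sf2)
        v = np.linalg.solve(self.L, Ks.T)
        return Ks @ self.alpha, self.sf2 - (v**2).sum(axis=0)

def true_dx(x, u):                     # hypothetical system, used only to generate data
    return 0.1 * u - 0.05 * np.sin(x)

grid = np.linspace(-2, 2, 7)
XU = np.array([(x, u) for x in grid for u in grid])
gp = GPDynamics().fit(XU, true_dx(XU[:, 0], XU[:, 1]))
mean, var = gp.predict([[0.3, -0.5], [10.0, 10.0]])
print(mean, var)   # small variance inside the data, about signal_var far away
```

The predictive variance is what lets a model-based method account for model uncertainty: far from the collected data the model reports that it knows little, instead of extrapolating confidently.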

Demo: Standard Benchmark Problem

- Swing pendulum up and balance in inverted position
- Learn nonlinear control from scratch
- 4D state space, 300 control parameters
- 7 trials / 17.5 sec experience
- Control freq.: 10 Hz

PILCO: Overview

- Cost function given
- Policy: mapping from state to control
- Rollout: plan using current policy and GP model
- Policy parameter update via CG/BFGS

Controlling a Low-Cost Robotic Manipulator

- Low-cost system ($500 for robot arm and Kinect)
- Very noisy
- No sensor information about robot's joint configuration used
- Goal: Learn to stack tower of 5 blocks from scratch
- Kinect camera for tracking block in end-effector
- State: coordinates (3D) of block center (from Kinect camera)
- 4 controlled DoF
- 20 learning trials for stacking 5 blocks (5 seconds long each)
- Account for system noise, e.g.,
  - Robot arm
  - Image processing

Collision Avoidance

[Figure: x-distance to target vs. y-distance to target (in m)]

- Use valuable prior information about obstacles if available
- Incorporation into planning → penalize in cost function
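A sketch of how obstacle knowledge can enter the cost function: add a penalty around a known obstacle to a saturating distance-to-target cost, and evaluate the expected cost of an uncertain predicted state. The cost shapes, widths, weight, and obstacle position are all assumptions, and the expectation is estimated here by Monte Carlo sampling, whereas PILCO computes such expectations analytically.

```python
import numpy as np

def saturating_cost(x, target, width=0.1):
    """Cost in [0, 1): near 0 at the target, saturating at 1 far away (assumed form)."""
    d2 = np.sum((x - target) ** 2, axis=-1)
    return 1.0 - np.exp(-0.5 * d2 / width**2)

def collision_penalty(x, obstacle, width=0.05, weight=2.0):
    """Hypothetical Gaussian-shaped penalty that peaks at a known obstacle position."""
    d2 = np.sum((x - obstacle) ** 2, axis=-1)
    return weight * np.exp(-0.5 * d2 / width**2)

def expected_cost(mean, cov, target, obstacle, n_samples=20000, seed=0):
    """Monte Carlo estimate of E[cost(x)] for a Gaussian-distributed predicted state x."""
    rng = np.random.default_rng(seed)
    xs = rng.multivariate_normal(mean, cov, size=n_samples)
    return float(np.mean(saturating_cost(xs, target) + collision_penalty(xs, obstacle)))

target, obstacle = np.zeros(2), np.array([0.1, 0.0])   # (x, y) distances in m (assumed)
tight = 1e-4 * np.eye(2)
near = expected_cost(np.array([0.1, 0.0]), tight, target, obstacle)   # at the obstacle
far = expected_cost(np.array([-0.1, 0.0]), tight, target, obstacle)   # same distance, free side
print(near, far)
```

Because the penalty is averaged over the predicted state distribution, a policy whose predictions are uncertain near an obstacle already pays for that risk during planning.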

Collision Avoidance Results

[Figure: experimental setup]

[Figure: training runs (during learning) with collisions]

- Cautious learning and exploration (rather safe than risky-successful)
- Learning slightly slower, but with significantly fewer collisions during training
- Average collision reduction (during training): 32.5% → 0.5%
