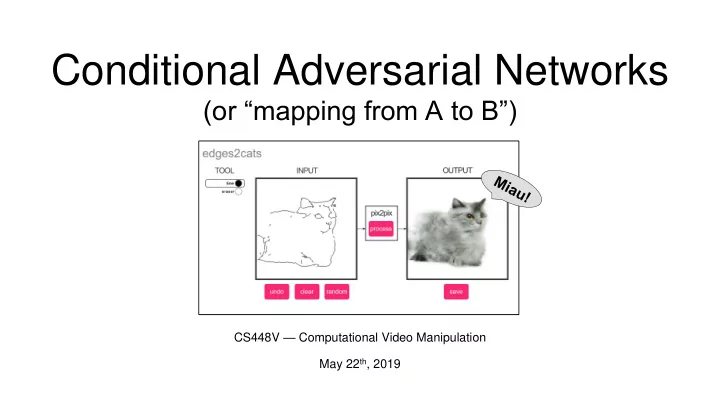

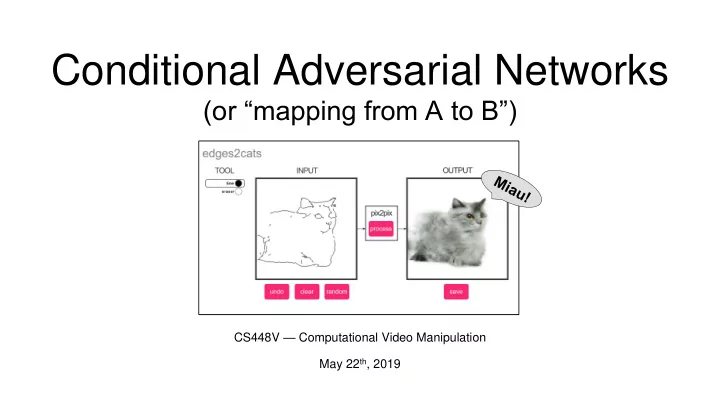

Conditional Adversarial Networks (or “mapping from A to B”)
CS448V: Computational Video Manipulation
May 22nd, 2019

Why?
- Cool! Trendy!
- Google Scholar: Pix2Pix, CycleGAN, … Hundreds of applications and follow-up works …

Enhancing Transitions

Single-Photo Facial Animation

Text-based Editing

Few-Shot Reenactment

Digital Humans

Overview
• Convolutional Neural Networks
• Generative Modeling
• Pix2Pix (“mapping from A to B”)

Convolutional Neural Network Components?
• 2D Convolution Layers (Conv2D)
• Subsampling Layers (MaxPool, …)
• Non-linearity Layers (ReLU, …)
• Normalization Layers (BatchNorm, …)
• Upsampling Layers (TransposedConv, …)
• …

Convolution: a 32x32x3 image (height 32, width 32, depth 3)

Convolution: 32x32x3 image, 5x5x3 filter. Convolve the filter with the image, i.e., “slide over the image spatially, computing dot products”.

Convolution: 32x32x3 image, 5x5x3 filter. Result: 1 number, the result of taking the dot product between the filter and a small 5x5x3 chunk of the image, i.e., a 5x5x3 = 75-dimensional dot product plus bias: $w^T x + b$

Convolution: convolve (slide) the 5x5x3 filter over all spatial locations of the 32x32x3 image. The result is a 28x28x1 activation map, since the filter fits at 32 − 5 + 1 = 28 positions along each spatial axis.
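To make the sliding dot product concrete, here is a minimal pure-Python sketch of one filter applied with stride 1 and no padding. The function name `conv2d_single_filter` and the random data are illustrative assumptions; like deep-learning libraries, it computes a cross-correlation (the kernel is not flipped).

```python
import random

def conv2d_single_filter(image, kernel, bias=0.0):
    """'Valid' (no padding, stride 1) convolution of one filter over an image.

    image:  nested lists indexed [row][col][channel], shape H x W x C
    kernel: nested lists indexed [row][col][channel], shape K x K x C
    Returns an (H - K + 1) x (W - K + 1) activation map.
    """
    H, W, C = len(image), len(image[0]), len(image[0][0])
    K = len(kernel)
    out = []
    for i in range(H - K + 1):
        row = []
        for j in range(W - K + 1):
            # K*K*C-dimensional dot product with the chunk at (i, j), plus bias
            s = bias
            for di in range(K):
                for dj in range(K):
                    for c in range(C):
                        s += kernel[di][dj][c] * image[i + di][j + dj][c]
            row.append(s)
        out.append(row)
    return out

random.seed(0)
image = [[[random.random() for _ in range(3)] for _ in range(32)] for _ in range(32)]
kernel = [[[random.random() for _ in range(3)] for _ in range(5)] for _ in range(5)]
act = conv2d_single_filter(image, kernel, bias=0.1)
print(len(act), len(act[0]))  # 28 28
```

Each output entry costs 5 · 5 · 3 = 75 multiply-adds, and there are 28 · 28 of them.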

Convolution: the 32x32x3 image yields a 28x28x1 activation map by convolving (sliding) over all spatial locations. Invariant to? Rotation? Translation? Scaling? Convolution is equivariant to translation (shifting the input shifts the activation map by the same amount); it is not invariant to rotation or scaling.
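Translation equivariance can be checked numerically. The sketch below uses a 1D "valid" cross-correlation for brevity (the 2D case behaves the same way); the signal and kernel values are arbitrary choices for illustration.

```python
def correlate1d(signal, kernel):
    """'Valid' 1D cross-correlation (what deep-learning libraries call convolution)."""
    k = len(kernel)
    return [sum(kernel[j] * signal[i + j] for j in range(k))
            for i in range(len(signal) - k + 1)]

signal = [0, 1, 3, 2, 0, 0, 5, 1, 0, 0]
kernel = [1, -2, 1]
shifted = [0] + signal[:-1]          # translate the input right by one sample

out = correlate1d(signal, kernel)
out_shifted = correlate1d(shifted, kernel)

# Equivariance: the output moves by the same one-sample shift (away from the border)
print(out_shifted[1:] == out[:-1])   # True
# ...but the output itself changes, so it is not translation-invariant
print(out_shifted == out)            # False
```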

Convolution: 32x32x3 image → activation map, convolving (sliding) over all spatial locations.

Convolution Layer: 32x32x3 image → convolution layer → activation tensor (one activation map per filter, stacked along the depth axis)

Convolutional Neural Network: 32x32x3 image → Convolution + ReLU (e.g., 6 filters of size 5x5x3) → 28x28x6 tensor → Convolution + ReLU (e.g., 10 filters of size 5x5x6) → 24x24x10 tensor → Convolution + ReLU → ...
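The tensor shapes in this stack follow from the standard output-size formula $(n + 2p - k)/s + 1$. A short sketch, assuming stride 1 and no padding as in the example (ReLU does not change shapes, so it is omitted), also counts the learnable parameters:

```python
def conv_output_size(n, k, stride=1, pad=0):
    """Spatial output size of a convolution along one axis."""
    return (n + 2 * pad - k) // stride + 1

def trace(h, w, c, layers):
    """layers: list of (num_filters, kernel_size); stride 1 and no padding assumed."""
    shapes = [(h, w, c)]
    params = 0
    for num_filters, k in layers:
        params += num_filters * (k * k * c + 1)  # weights plus one bias per filter
        h, w, c = conv_output_size(h, k), conv_output_size(w, k), num_filters
        shapes.append((h, w, c))
    return shapes, params

shapes, params = trace(32, 32, 3, [(6, 5), (10, 5)])
print(shapes)  # [(32, 32, 3), (28, 28, 6), (24, 24, 10)]
print(params)  # 6*(5*5*3+1) + 10*(5*5*6+1) = 456 + 1510 = 1966
```

Note that each filter's depth must equal the depth of its input tensor, which is why the second layer uses 5x5x6 filters.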

Convolutional Neural Networks [LeNet-5, LeCun et al. 1998]

Feature Hierarchy: learn the features from data instead of hand-engineering them (if enough data is available)!

U-Net skip connections: “Propagate low-level features directly, helps with details”
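The sketch below only does shape bookkeeping to show what a skip connection does: each decoder level concatenates the encoder features of the same resolution along the channel axis. The channel widths, the factor-2 down/upsampling, and the choice that each upsampling layer outputs as many channels as its skip are hypothetical, picked for illustration.

```python
def unet_shapes(size=256, widths=(64, 128, 256)):
    """Shape bookkeeping for a toy U-Net (hypothetical widths; square inputs).

    Encoder: each level halves the spatial size (e.g., a stride-2 conv).
    Decoder: each level doubles it and concatenates the encoder features
    of the same resolution along the channel axis (the skip connection).
    """
    skips = []
    h = size
    for w in widths:
        h //= 2
        skips.append((h, w))          # (spatial size, channels) kept for the decoder
    decoder = []
    for skip_h, skip_c in reversed(skips[:-1]):
        h *= 2                        # upsample (e.g., transposed conv)
        assert h == skip_h            # skip must match the upsampled resolution
        up_c = skip_c                 # assumption: upsampling layer outputs skip_c channels
        decoder.append((h, up_c + skip_c))  # concatenation adds channels
    h *= 2                            # final upsample back to the input resolution
    return skips, decoder, h

skips, decoder, out_size = unet_shapes()
print(skips)     # [(128, 64), (64, 128), (32, 256)]
print(decoder)   # [(64, 256), (128, 128)]
print(out_size)  # 256
```

Because the high-resolution encoder features are concatenated directly, fine details do not have to survive the 32x32 bottleneck.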

Overview
• Convolutional Neural Networks
• Generative Modeling
• Pix2Pix

Generative Modeling: given training data $\{\mathbf{x}_i\}_{i=1}^{N}$, we want to learn the density function $p(X)$ from data such that we can “sample from it”: new samples $\mathbf{x} \sim p(X)$, “more of the same!”
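As a toy illustration of "learn $p(X)$, then sample from it", the sketch below swaps in the simplest possible density model, a 1D Gaussian fitted by maximum likelihood; it is not a GAN. The hidden data distribution N(5, 2²) is an assumption made up for the example.

```python
import random
import statistics

random.seed(0)
# "Training data": N samples from a distribution unknown to the model (secretly N(5, 2^2))
data = [random.gauss(5.0, 2.0) for _ in range(10_000)]

# "Learn p(X)": maximum-likelihood fit of a Gaussian model
mu = statistics.fmean(data)
sigma = statistics.pstdev(data)  # the MLE uses the population (1/N) standard deviation

# "Sample from it": new samples x ~ p(X), i.e., "more of the same"
new_samples = [random.gauss(mu, sigma) for _ in range(5)]
print(round(mu, 2), round(sigma, 2))
```

For images, $p(X)$ is far too complex for a closed-form model like this, which is what motivates learned generators.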

Generative 2D Face Modeling: training data $\{\mathbf{x}_i\}_{i=1}^{N}$, new samples $\mathbf{x} \sim p(X)$. The world needs more celebrities … or not …?

3.5 Years of Progress on Faces

https://thispersondoesnotexist.com 2018

StyleGAN - Interpolation

Overview
• Convolutional Neural Networks
• Generative Modeling
• Pix2Pix (“mapping from A to B”)

Image-to-Image Translation

Image-to-Image Translation: train a neural network $G$ on paired data $(\mathbf{x}, \mathbf{y})$ by minimizing a loss $L$:

$$\arg\min_G \mathbb{E}_{\mathbf{x},\mathbf{y}}\left[L(G(\mathbf{x}), \mathbf{y})\right]$$

The loss answers “What should I do?”; the neural network answers “How should I do it?” [Zhang et al., ECCV 2016]
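A minimal sketch of this objective on scalar data: the expectation is replaced by an average over paired samples, $L$ is the squared error, and $G$ is a hypothetical one-parameter-pair "network" $G(x) = ax + b$ trained by gradient descent. The data (generated from $y = 2x + 1$), learning rate, and step count are illustrative assumptions.

```python
# Paired training data (x_i, y_i); the underlying mapping here is y = 2x + 1
pairs = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

def G(x, a, b):
    """A hypothetical one-layer 'network': G(x) = a*x + b."""
    return a * x + b

def empirical_loss(a, b):
    """Sample estimate of E_{x,y}[L(G(x), y)] with L = squared error."""
    return sum((G(x, a, b) - y) ** 2 for x, y in pairs) / len(pairs)

a, b, lr = 0.0, 0.0, 0.05
for _ in range(2000):
    # gradients of the mean squared error with respect to a and b
    grad_a = sum(2 * (G(x, a, b) - y) * x for x, y in pairs) / len(pairs)
    grad_b = sum(2 * (G(x, a, b) - y) for x, y in pairs) / len(pairs)
    a, b = a - lr * grad_a, b - lr * grad_b

print(round(a, 3), round(b, 3))  # 2.0 1.0
```

The network only decides *how*; *what* it learns is entirely dictated by the choice of $L$.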

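Be careful what you wish for! $L(\hat{\mathbf{y}}, \mathbf{y}) = \|\hat{\mathbf{y}} - \mathbf{y}\|_2^2$

Degradation to the mean! When an input has several plausible outputs, the prediction that minimizes the expected L2 loss is their average, which looks blurry.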
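A toy numeric check of the effect, assuming a single input whose paired outputs are either −1 or +1 with equal frequency (standing in for two equally valid "sharp" images). A grid search over constant predictions finds the L2 optimum:

```python
def l2_loss(pred, targets):
    """Mean squared error of one prediction against several targets."""
    return sum((pred - t) ** 2 for t in targets) / len(targets)

# The same input x appears with two equally plausible outputs: -1 and +1
targets = [-1.0, 1.0, -1.0, 1.0]

# Search over constant predictions for the L2-optimal output
candidates = [i / 100 for i in range(-150, 151)]
best = min(candidates, key=lambda p: l2_loss(p, targets))

print(best)                                            # 0.0, the mean: neither valid output
print(l2_loss(best, targets), l2_loss(1.0, targets))  # 1.0 2.0
```

A sharp, valid answer (+1) is penalized twice as much as the blurry average, so L2 training drifts toward the mean.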

Automate Design of the Loss? Deep learning got rid of handcrafted features. Can we also get rid of handcrafting the loss function? Universal loss function?

Discriminator as a Loss Function: a discriminator (a classifier) answers “Real or Fake?”

Conditional GAN

Conditional GAN: input $\mathbf{x}$ → generator (G) → output $G(\mathbf{x})$ → discriminator (D): real or fake? G tries to synthesize fake images that fool D; D tries to tell real from fake.

Conditional GAN (Discriminator): here D outputs the probability that its input is fake. D tries to identify the fakes: a generated output $G(\mathbf{x})$ has target “1” (e.g., D outputs 0.9: fake). D also tries to identify the real images: a ground-truth (GT) output $\mathbf{y}$ has target “0” (e.g., D outputs 0.1: real).

$$\arg\max_D \mathbb{E}_{\mathbf{x},\mathbf{y}}\left[\log D(G(\mathbf{x})) + \log\left(1 - D(\mathbf{y})\right)\right]$$

Conditional GAN (Generator): G tries to synthesize fake images that fool D, i.e., make D output “0” (real, e.g., 0.1) on $G(\mathbf{x})$.

$$\arg\min_G \mathbb{E}_{\mathbf{x},\mathbf{y}}\left[\log D(G(\mathbf{x})) + \log\left(1 - D(\mathbf{y})\right)\right]$$

Conditional GAN: G tries to synthesize fake images that fool the best D, which judges “Real or Fake?”.

$$\arg\min_G \max_D \mathbb{E}_{\mathbf{x},\mathbf{y}}\left[\log D(G(\mathbf{x})) + \log\left(1 - D(\mathbf{y})\right)\right]$$
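To see which direction each player pushes, this sketch evaluates the empirical minimax objective for given discriminator outputs, using this lecture's convention that D outputs the probability of *fake*. The D output values are made up for illustration; no networks are trained.

```python
import math

def gan_objective(d_on_fake, d_on_real):
    """Empirical E[log D(G(x)) + log(1 - D(y))], where D outputs P(input is FAKE).

    d_on_fake: D's outputs on generated images G(x)
    d_on_real: D's outputs on ground-truth images y (paired with the same x)
    """
    terms = [math.log(df) + math.log(1.0 - dr) for df, dr in zip(d_on_fake, d_on_real)]
    return sum(terms) / len(terms)

# A discriminator that spots the fakes (0.9) and the real images (0.1) ...
good_d = gan_objective([0.9, 0.9], [0.1, 0.1])
# ... versus a fooled discriminator that always outputs 0.5
fooled_d = gan_objective([0.5, 0.5], [0.5, 0.5])

# D maximizes the objective; G minimizes it by pushing D(G(x)) toward 0 ("real")
print(good_d > fooled_d)  # True
```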

Conditional GAN: from G’s perspective, D is a loss function. Rather than being hand-designed, it is learned jointly!

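Conditional Discriminator: D also sees the input $\mathbf{x}$, so it judges (input, output) pairs.

$$\arg\min_G \max_D \mathbb{E}_{\mathbf{x},\mathbf{y}}\left[\log D(\mathbf{x}, G(\mathbf{x})) + \log\left(1 - D(\mathbf{x}, \mathbf{y})\right)\right]$$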
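One common way to feed a pair $(\mathbf{x}, \mathbf{y})$ to a convolutional discriminator, and the one assumed here, is to stack input and output along the channel axis so D sees a single tensor. The sketch uses nested lists in [channel][row][col] order; the 3-channel, 4x4 sizes are hypothetical.

```python
def concat_channels(x, y):
    """Stack input x and output y along the channel axis ([C][H][W] nested lists)."""
    assert len(x[0]) == len(y[0]) and len(x[0][0]) == len(y[0][0]), "spatial sizes must match"
    return x + y  # list concatenation over the first (channel) axis

# Hypothetical 3-channel input and 3-channel output, 4x4 pixels each
x = [[[0.0] * 4 for _ in range(4)] for _ in range(3)]
y = [[[1.0] * 4 for _ in range(4)] for _ in range(3)]
d_input = concat_channels(x, y)
print(len(d_input), len(d_input[0]), len(d_input[0][0]))  # 6 4 4
```

With the input attached, a realistic-looking output that does not match $\mathbf{x}$ can still be flagged as fake.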

Patch Discriminator “Rather than penalizing if the output image looks fake, penalize if each overlapping patch in the output looks fake”

1x1 Pixel Discriminator

Image Discriminator

70x70 Patch Discriminator
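The "70x70" is the receptive field of one discriminator output unit. The sketch below computes it by walking backwards through the layers, assuming the layout of the widely used pix2pix reference implementation (five 4x4 convolutions with strides 2, 2, 2, 1, 1 and padding 1); treat that layout as an assumption rather than something these slides state.

```python
def receptive_field(layers):
    """Receptive field (in input pixels) of one output unit of a stack of convs.

    layers: list of (kernel_size, stride), ordered from input to output.
    Walking backwards: r_in = (r_out - 1) * stride + kernel_size.
    """
    r = 1
    for k, s in reversed(layers):
        r = (r - 1) * s + k
    return r

def output_size(n, layers, pad=1):
    """Spatial size of the output grid, assuming padding `pad` on every layer."""
    for k, s in layers:
        n = (n + 2 * pad - k) // s + 1
    return n

# Assumed PatchGAN layout: five 4x4 convs with strides 2, 2, 2, 1, 1
patch_d = [(4, 2), (4, 2), (4, 2), (4, 1), (4, 1)]
print(receptive_field(patch_d))   # 70
print(output_size(256, patch_d))  # 30, i.e., a 30x30 grid of real/fake scores
```

Each of the 30x30 scores judges one overlapping 70x70 patch; a stack of 1x1 convolutions would instead give the 1x1 pixel discriminator (receptive field 1).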

Conditional Discriminator: $\mathcal{L}_{cGAN}(G, D) = \mathbb{E}_{\mathbf{x},\mathbf{y}}\left[\log D(\mathbf{x}, G(\mathbf{x})) + \log\left(1 - D(\mathbf{x}, \mathbf{y})\right)\right]$

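Reconstruction Loss ($\ell_1$): $\mathcal{L}_{\ell_1}(G) = \mathbb{E}_{\mathbf{x},\mathbf{y}}\left[\|G(\mathbf{x}) - \mathbf{y}\|_1\right]$, for “stable training + fast convergence”. Full objective:

$$G^* = \arg\min_G \max_D \mathcal{L}_{cGAN}(G, D) + \lambda \mathcal{L}_{\ell_1}(G), \quad \lambda = 100$$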
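A sketch of how the two terms combine into the quantity G minimizes, keeping the convention that D outputs P(pair is fake). The D outputs and pixel values are hypothetical, and averaging the $\ell_1$ term over pixels (rather than summing) is an assumption that only rescales it.

```python
import math

LAMBDA = 100  # weight of the L1 term, as on the slide

def cgan_loss(d_on_fake_pairs, d_on_real_pairs):
    """Empirical L_cGAN(G, D) = E[log D(x, G(x)) + log(1 - D(x, y))],
    with D outputting the probability that the (x, output) pair is FAKE."""
    terms = [math.log(df) + math.log(1.0 - dr)
             for df, dr in zip(d_on_fake_pairs, d_on_real_pairs)]
    return sum(terms) / len(terms)

def l1_loss(pred, target):
    """Empirical L_l1(G) = E[||G(x) - y||_1], averaged over pixels (assumption)."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)

def generator_objective(d_on_fake_pairs, d_on_real_pairs, pred, target):
    """The quantity G minimizes: L_cGAN(G, D) + lambda * L_l1(G)."""
    return cgan_loss(d_on_fake_pairs, d_on_real_pairs) + LAMBDA * l1_loss(pred, target)

# Hypothetical numbers: D is undecided (0.5); G is off by 0.2 on one of two pixels
total = generator_objective([0.5], [0.5], pred=[0.2, 0.4], target=[0.0, 0.4])
print(round(total, 4))  # 8.6137
```

With $\lambda = 100$, even a small pixel error dominates the adversarial term, which keeps outputs close to the ground truth while D sharpens the details.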

Ablation Study

Results on the Test Split

Results for Hand Drawings?

Demo: Pix2Pix

Limitations
1. Paired data is required.
2. Temporally unstable if applied per-frame to a video sequence.
3. Does not generalize to 3D transformations.

CycleGAN

Cycle Consistency

CycleGAN

Recycle-GAN

Vid2Vid

Recommended

More Recommendations