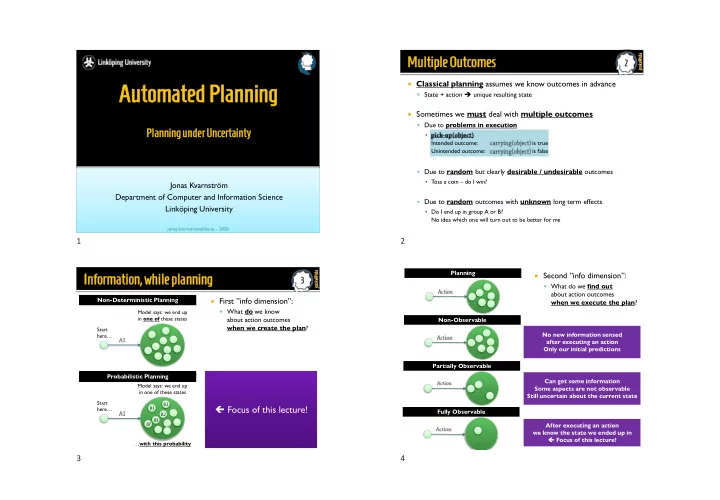

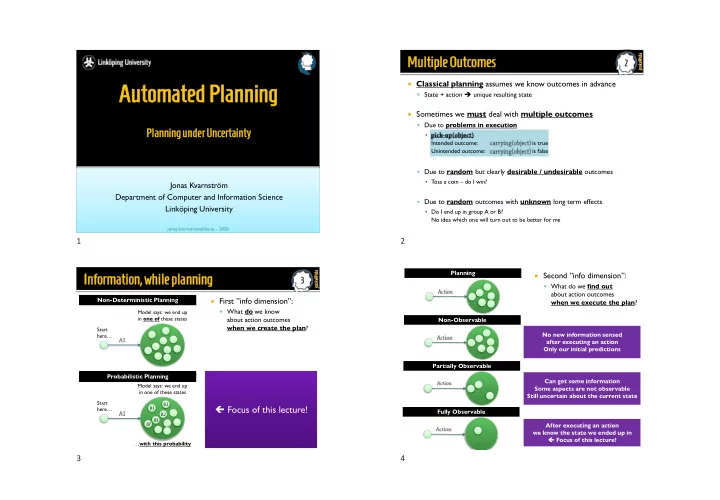

jonkv@ida jonkv@ida Multiple iple Outcome comes 2 2 Classical planning assumes we know outcomes in advance Auto tomat mated d Pla lanning ing ▪ State + action ➔ unique resulting state Sometimes we must deal with multiple outcomes ▪ Due to problems in execution Planni ning ngunder Uncert rtainty nty ▪ Intended outcome: is true Unintended outcome: is false ▪ Due to random but clearly desirable / undesirable outcomes ▪ Toss a coin – do I win? Jonas Kvarnström Department of Computer and Information Science ▪ Due to random outcomes with unknown long term effects Linköping University ▪ Do I end up in group A or B? No idea which one will turn out to be better for me jonas.kvarnstrom@liu.se – 2020 1 2 Planning jonkv@ida jonkv@ida Second ”info dimension”: Infor ormation, mation, while le planning ing 3 3 ▪ What do we find out about action outcomes First ”info dimension”: Non-Deterministic Planning when we execute the plan ? ▪ What do we know Model says: we end up in one of these states about action outcomes Non-Observable when we create the plan ? Start No new information sensed here… after executing an action Only our initial predictions Partially Observable Probabilistic Planning Can get some information Model says: we end up Some aspects are not observable in one of these states Still uncertain about the current state Start 0.1 Focus of this lecture! here… 0.1 Fully Observable 0.2 .03 .07 After executing an action we know the state we ended up in Focus of this lecture! … with this probability 3 4

jonkv@ida jonkv@ida State te Transi sition tion Syste tem 6 6 Classical planning: A state transition system Σ = (𝑇, 𝐵, 𝛿) Finite set of world states ▪ 𝑇 Finite set of actions ▪ 𝐵 × → State transition function , ▪ 𝛿 specifying all “edges” 5 6 jonkv@ida jonkv@ida jonkv@ida jonkv@ida Stoch chas astic tic Syste tem Stoch chas astic tic Syste tems ms (2) 7 7 8 8 Probabilistic planning uses a stochastic system Σ = (𝑇, 𝐵, 𝑄) Example with "desirable outcome" Finite set of world states ▪ Finite set of actions ▪ Arc indicates Action: drive-uphill Given that we are in s and execute a , outcomes of a ▪ Replaces single action the probability of ending up in s’ At location 6 At location 5 Planning Intermediate location Model says: 2% risk Model says: we end up of slipping, ending up in one of these states somewhere else Start 0.1 here… 0.1 0.2 .03 .07 … with this probability 7 8

jonkv@ida jonkv@ida jonkv@ida jonkv@ida Stoch chas astic tic Syste tems ms (3) Stoch chas astic tic Syste tems ms (4) 9 9 10 10 May have very unlikely outcomes… As always, can have many executable actions in a state 3 possible actions Probability = 1 (red, blue, green) The planner chooses (certain outcome) the action At location 6 to execute… Probability sum = 1 Suppose we choose At location 5 (three possible green. Intermediate outcomes of A2) location Nature chooses Arcs connect the outcome , so we edges must be prepared for belonging to all 4 green outcomes! Very unlikely outcome, the same but may still be action Probability sum = 1 Broken Directly searching important to consider, (four possible if it has great impact on the state space outcomes of A3) yields goal achievement! an AND/OR tree 9 10 jonkv@ida jonkv@ida Stoch chas astic tic Syste tem Exam ample ple 12 12 Example : A single robot wait s5 ▪ Moving between locations move(l2,l3 l3) ▪ For simplicity, wait s2 s3 states correspond wait move(l5,l4 directly to move(l3,l2 l2) locations move(l4,l3) move(l2,l1 move(l3,l4 l2) move(l1,l2 l4) ▪ l1) l4) ▪ move(l4,l1) ▪ wait s1 s4 ▪ ▪ move(l1,l4 l4) wait ▪ Some transitions are deterministic , some are stochastic ▪ Trying to move from to : You may end up at instead ( % risk) Important concepts, ▪ Trying to move from to : You may stay where you are instead ( % risk) before we define the planning problem itself! 11 12

jonkv@ida jonkv@ida jonkv@ida jonkv@ida Polic icies; ies; Example mple 1 Polic icy y Example mple 2 13 13 14 14 One type of formal plan structure: Policy 𝜌 ∶ 𝑇 → 𝐵 Example ▪ Defining, for each state , which action to execute whenever we are there ▪ ▪ Possible due to full observability ! wait s5 wait s5 move(l2,l3 l3) Example move(l2,l3 l3) wait wait s2 s3 wait s2 s3 wait move(l5,l4 ▪ move(l5,l4 move(l3,l2 l2) move(l3,l2 l2) move(l4,l3) l2) move(l2,l1 move(l3,l4 move(l1,l2 l4) move(l4,l3) move(l2,l1 move(l3,l4 l2) move(l1,l2 l4) l1) l4) l1) l4) move(l4,l1) move(l4,l1) wait s1 s4 wait s1 s4 move(l1,l4 l4) wait Start move(l1,l4 l4) wait Start Reaches or , waits there infinitely many times Always reaches state , waits there infinitely many times 13 14 jonkv@ida jonkv@ida jonkv@ida jonkv@ida Polic icy y Example mple 3 Polic icies ies and Histor tories ies 15 15 16 16 Example The outcome of sequentially executing a policy: ▪ A state sequence called a history ▪ ▪ Infinite, since policies have no termination criterion wait s5 move(l2,l3 l3) For each policy, there can be many potential histories wait s2 s3 wait move(l5,l4 ▪ Which one is the actual result? move(l3,l2 l2) Gradually discovered at execution time! move(l4,l3) move(l2,l1 move(l3,l4 l2) move(l1,l2 l4) l1) l4) move(l4,l1) wait s1 s4 move(l1,l4 l4) wait Start Reaches state with % probability ”in the limit” (the more steps, the greater the probability) 15 16

jonkv@ida jonkv@ida jonkv@ida jonkv@ida Histor tory y Example mple Probabili abilitie ties: Initia tial l State tes, s, Transi sitions tions 17 17 18 18 Example 1 Each policy has a probability distribution over histories/outcomes wait s5 ▪ With known fixed initial state 𝑡 0 : ▪ move(l2,l3 l3) wait 𝒕 𝟏 ,𝒕 𝟐 , 𝒕 𝟑 , 𝒕 𝟒 , … 𝜌 = ෑ s2 s3 𝑄 𝑄(𝑡 𝑗 , 𝜌 𝑡 𝑗 , 𝑡 𝑗+1 ) wait move(l5,l4 𝑗≥0 Probabilities for each required move(l3,l2 l2) move(l4,l3) l2) move(l2,l1 move(l3,l4 state transition move(l1,l2 l4) ▪ With unknown initial state: l1) l4) 𝑄(〈𝒕 𝟏 , 𝒕 𝟐 , 𝒕 𝟑 , 𝒕 𝟒 , … | 𝜌) = 𝑄 𝑡 0 ⋅ ෑ 𝑄(𝑡 𝑗 , 𝜌 𝑡 𝑗 , 𝑡 𝑗+1 ) move(l4,l1) wait s1 s4 𝑗≥0 wait move(l1,l4 l4) s5 wait Probability Start of starting in move(l2,l3) 3) wait s2 s3 wait this specific 𝑡 0 move(l5, Even if we only consider starting in : Two possible histories move(l3, 3,l2) move(l4,l3) 3) move(l1,l2) move(l2,l1) move(l3, 5,l4) – Reached , waits indefinitely ▪ 3,l4) – Reached , waits indefinitely move(l4,l1) wait s1 s4 How probable are these histories? move(l1,l4) wait Start 17 18 jonkv@ida jonkv@ida jonkv@ida jonkv@ida Histor tory y Example mple 1 Histor tory y Example mple 2 19 19 20 20 Example Example wait wait s5 s5 ▪ ▪ move(l2,l3 l3) move(l2,l3 l3) wait wait s2 s3 s2 s3 wait wait move(l5,l4 move(l5,l4 move(l3,l2 l2) move(l3,l2 l2) move(l4,l3) move(l4,l3) move(l2,l1 move(l3,l4 move(l2,l1 move(l3,l4 l2) l2) move(l1,l2 move(l1,l2 l4) l4) l1) l4) l1) l4) move(l4,l1) move(l4,l1) wait wait s1 s4 s1 s4 move(l1,l4 l4) move(l1,l4 l4) wait wait Start Start Two possible histories, if 𝑄 𝑡1 = 1: ▪ ▪ 19 20

jonkv@ida jonkv@ida Histor tory y Example mple 3 21 21 Example wait s5 ▪ move(l2,l3 l3) wait s2 s3 wait move(l5,l4 move(l3,l2 l2) move(l4,l3) l2) move(l2,l1 move(l3,l4 move(l1,l2 l4) l1) l4) move(l4,l1) wait s1 s4 move(l1,l4 l4) wait Start ▪ ∞ 21 22 jonkv@ida jonkv@ida Stoch chas astic tic Shorte test st Path h Proble lem 23 23 Closest to classical : Stochastic Shortest Path Problem ▪ Let = (𝑇, 𝐵, 𝑄) be a stochastic system ▪ Let 𝑑: 𝑇, 𝐵 → 𝑆 be a cost function ▪ Let 𝑡 0 ∈ 𝑇 be an initial state ▪ Let 𝑇 ⊆ 𝑇 be a set of goal states ▪ Then, find a policy that can be applied starting at and that reaches a state in Not covered here 23 24

Recommend

More recommend