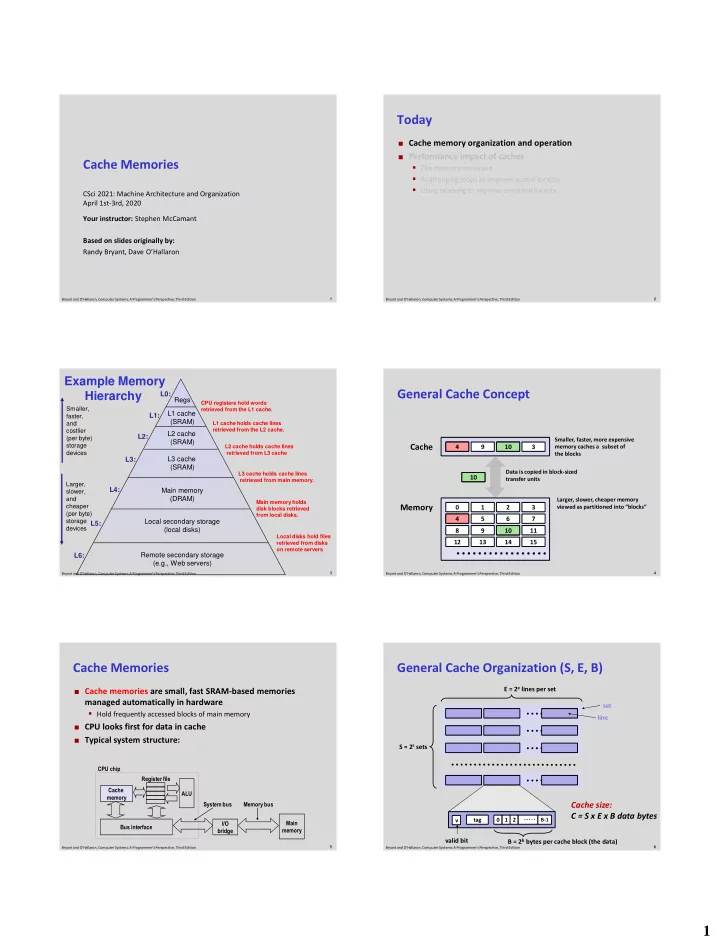

Cache Memories

CSci 2021: Machine Architecture and Organization, April 1st-3rd, 2020
Your instructor: Stephen McCamant
Based on slides originally by: Randy Bryant, Dave O’Hallaron
Bryant and O’Hallaron, Computer Systems: A Programmer’s Perspective, Third Edition

Today
• Cache memory organization and operation
• Performance impact of caches
  • The memory mountain
  • Rearranging loops to improve spatial locality
  • Using blocking to improve temporal locality

Example Memory Hierarchy
Storage devices toward the top are smaller, faster, and costlier (per byte). Storage devices toward the bottom are larger, slower, and cheaper (per byte).
• L0: Regs. CPU registers hold words retrieved from the L1 cache.
• L1: L1 cache (SRAM). The L1 cache holds cache lines retrieved from the L2 cache.
• L2: L2 cache (SRAM). The L2 cache holds cache lines retrieved from the L3 cache.
• L3: L3 cache (SRAM). The L3 cache holds cache lines retrieved from main memory.
• L4: Main memory (DRAM). Main memory holds disk blocks retrieved from local disks.
• L5: Local secondary storage (local disks). Local disks hold files retrieved from disks on remote servers.
• L6: Remote secondary storage (e.g., Web servers).

General Cache Concept
• Cache: smaller, faster, more expensive memory that caches a subset of the blocks.
• Data is copied in block-sized transfer units.
• Memory: larger, slower, cheaper memory viewed as partitioned into "blocks".
[Figure: memory divided into blocks 0-15. The cache holds a few of them (e.g., 8, 9, 14, 3), and blocks 4 and 10 are shown being copied between memory and the cache.]

Cache Memories
• Cache memories are small, fast SRAM-based memories managed automatically in hardware.
  • They hold frequently accessed blocks of main memory.
• The CPU looks first for data in the cache.
• Typical system structure:
[Figure: the CPU chip contains the register file, ALU, cache memory, and bus interface. The system bus connects the bus interface to the I/O bridge, and the memory bus connects the I/O bridge to main memory.]

General Cache Organization (S, E, B)
• $S = 2^s$ sets
• $E = 2^e$ lines per set
• $B = 2^b$ bytes per cache block (the data)
• Each line holds a valid bit v, a tag, and data bytes 0 … B-1.
• Cache size: $C = S \times E \times B$ data bytes
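A minimal C sketch of the (S, E, B) arithmetic, assuming the exponents s, e, and b are given. The names `cache_geometry`, `make_geometry`, and `cache_data_bytes` are hypothetical and chosen for illustration. The size it computes, C = S × E × B, counts data bytes only and leaves out the valid bits and tags.

```c
#include <stddef.h>

/* Hypothetical helper type: cache geometry derived from the exponents
   s, e, b, where S = 2^s sets, E = 2^e lines per set, B = 2^b bytes per block. */
typedef struct {
    size_t sets;          /* S */
    size_t lines_per_set; /* E */
    size_t block_bytes;   /* B */
} cache_geometry;

static cache_geometry make_geometry(unsigned s, unsigned e, unsigned b) {
    cache_geometry g;
    g.sets = (size_t)1 << s;
    g.lines_per_set = (size_t)1 << e;
    g.block_bytes = (size_t)1 << b;
    return g;
}

/* C = S x E x B: data bytes only (valid bits and tags are extra storage). */
static size_t cache_data_bytes(cache_geometry g) {
    return g.sets * g.lines_per_set * g.block_bytes;
}
```

For the direct-mapped simulation parameters used later (s = 2, e = 0, b = 1), `make_geometry(2, 0, 1)` gives S = 4, E = 1, B = 2. The result is an 8-byte cache.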

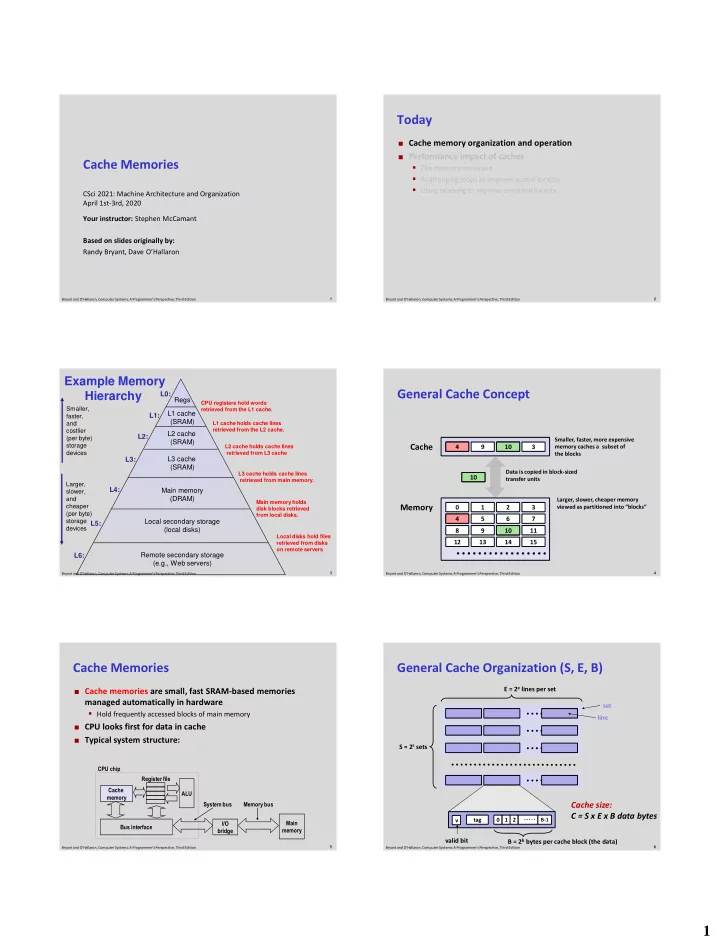

Cache Read
• Locate set
• Check if any line in set has matching tag
• Yes + line valid: hit
• Locate data starting at offset
Address of word: t bits (tag) | s bits (set index) | b bits (block offset)
[Figure: $S = 2^s$ sets, each with $E = 2^e$ lines of the form v | tag | bytes 0 … B-1. The set index finds the set, and the data begins at the block offset.]

Example: Direct Mapped Cache (E = 1)
Direct mapped: one line per set. Assume: cache block size 8 bytes.
Address of int: t bits (tag) | 0…01 (set index) | 100 (block offset)
• Find set: the set index bits 0…01 select one of the $S = 2^s$ sets (here, set 1).
• Valid? + tag match: assume yes = hit.
• Block offset 100: the int (4 bytes) is here, starting at byte 4 of the block.
• If the tag doesn't match: the old line is evicted and replaced.

Direct-Mapped Cache Simulation
t = 1, s = 2, b = 1: M = 16 bytes (4-bit addresses), B = 2 bytes/block, S = 4 sets, E = 1 block/set.
Address trace (reads, one byte per read):
• 0 [$0000_2$]: miss
• 1 [$0001_2$]: hit
• 7 [$0111_2$]: miss
• 8 [$1000_2$]: miss
• 0 [$0000_2$]: miss

| Set | v | Tag | Block |
|---|---|---|---|
| 0 | 1 | 0 | M[0-1] |
| 1 | 0 | ? | ? |
| 2 | 0 | ? | ? |
| 3 | 1 | 0 | M[6-7] |

The access to 8 overwrites set 0 with M[8-9] (tag 1). The final access to 0 then reloads M[0-1] (tag 0).

E-way Set Associative Cache (Here: E = 2)
E = 2: two lines per set. Assume: cache block size 8 bytes.
Address of short int: t bits (tag) | 0…01 (set index) | 100 (block offset)
• Find set (here, set 1).
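The cache-read steps above begin by slicing an address into tag, set index, and block offset. This is a minimal sketch that assumes 64-bit addresses and s + b < 64. The names `addr_fields` and `split_address` are hypothetical. With 8-byte blocks (b = 3), low-order bits ...01 100 give block offset 4. With a hypothetical s = 2, the set index is 1.

```c
#include <stdint.h>

/* Hypothetical result type: the three address fields used by a cache lookup. */
typedef struct {
    uint64_t tag;
    uint64_t set;
    uint64_t offset;
} addr_fields;

/* Split addr into fields for a cache with 2^s sets and 2^b-byte blocks.
   Assumes s + b < 64 so every shift is well defined. */
static addr_fields split_address(uint64_t addr, unsigned s, unsigned b) {
    addr_fields f;
    f.offset = addr & ((UINT64_C(1) << b) - 1);     /* low b bits */
    f.set = (addr >> b) & ((UINT64_C(1) << s) - 1); /* next s bits */
    f.tag = addr >> (s + b);                        /* remaining t bits */
    return f;
}
```

For example, `split_address(172, 2, 3)` splits binary 101 01 100 into tag 5, set 1, offset 4.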

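The direct-mapped simulation above can be replayed in code. This sketch hard-codes the slide's parameters (t = 1, s = 2, b = 1) and tracks only valid bits and tags, not the cached bytes. The names `dm_line`, `dm_cache`, and `dm_access` are hypothetical. Replaying the trace 0, 1, 7, 8, 0 should give miss, hit, miss, miss, miss. Addresses 0 and 8 both map to set 0 with different tags, so they keep evicting each other.

```c
#include <stdbool.h>

/* Parameters from the direct-mapped simulation: 4-bit addresses,
   s = 2 (S = 4 sets), b = 1 (B = 2 bytes), E = 1 line per set. */
#define DM_S_BITS 2
#define DM_B_BITS 1
#define DM_SETS (1u << DM_S_BITS)

typedef struct {
    bool valid;
    unsigned tag;
} dm_line;

typedef struct {
    dm_line sets[DM_SETS]; /* exactly one line per set */
} dm_cache;

/* Returns true on a hit. On a miss, the block is loaded into the set's
   only line, evicting whatever was there. Data bytes are not modeled. */
static bool dm_access(dm_cache *c, unsigned addr) {
    unsigned set = (addr >> DM_B_BITS) & (DM_SETS - 1);
    unsigned tag = addr >> (DM_S_BITS + DM_B_BITS);
    dm_line *line = &c->sets[set];

    if (line->valid && line->tag == tag)
        return true;

    line->valid = true;
    line->tag = tag;
    return false;
}
```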
• Compare both lines in the set: valid? + tag match: yes = hit.
• Block offset 100: the short int (2 bytes) is here, starting at byte 4 of the block.
• No match:
  • One line in the set is selected for eviction and replacement.
  • Replacement policies: random, least recently used (LRU), …

What about writes?
• Multiple copies of data exist: L1, L2, L3, main memory, disk.
• What to do on a write-hit?
  • Write-through (write immediately to memory)
  • Write-back (defer the write to memory until the line is replaced)
    • Needs a dirty bit (records whether the line differs from memory)
• What to do on a write-miss?
  • Write-allocate (load the block into the cache, then update the line in the cache)
    • Good if more writes to the location follow
  • No-write-allocate (write straight to memory; do not load into the cache)
• Typical combinations:
  • Write-through + No-write-allocate
  • Write-back + Write-allocate

2-Way Set Associative Cache Simulation
t = 2, s = 1, b = 1: M = 16 byte addresses, B = 2 bytes/block, S = 2 sets, E = 2 blocks/set.
Address trace (reads, one byte per read):
• 0 [$0000_2$]: miss
• 1 [$0001_2$]: hit
• 7 [$0111_2$]: miss
• 8 [$1000_2$]: miss
• 0 [$0000_2$]: hit

| Set | Line | v | Tag | Block |
|---|---|---|---|---|
| 0 | 0 | 1 | 00 | M[0-1] |
| 0 | 1 | 1 | 10 | M[8-9] |
| 1 | 0 | 1 | 01 | M[6-7] |
| 1 | 1 | 0 | ? | ? |

Intel Core i7 Cache Hierarchy
[Figure: processor package with Core 0 … Core 3. Each core has its own registers, L1 d-cache, L1 i-cache, and L2 unified cache. An L3 unified cache is shared by all cores and connects to main memory.]

| Cache | Size | Associativity | Access time |
|---|---|---|---|
| L1 i-cache and d-cache | 32 KB | 8-way | 4 cycles |
| L2 unified cache | 256 KB | 8-way | 10 cycles |
| L3 unified cache (shared by all cores) | 8 MB | 16-way | 40-75 cycles |

Block size: 64 bytes for all caches.

Cache Performance Metrics
• Miss Rate
  • Fraction of memory references not found in cache (misses / accesses) = 1 - hit rate
  • Typical numbers (in percentages):
    • 3-10% for L1
    • Can be quite small (e.g., < 1%) for L2, depending on size, etc.
• Hit Time
  • Time to deliver a line in the cache to the processor
    • Includes time to determine whether the line is in the cache
  • Typical numbers:
    • 4 clock cycles for L1
    • 10 clock cycles for L2
• Miss Penalty
  • Additional time required because of a miss
    • Typically 50-200 cycles for main memory (Trend: increasing!)
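A similar sketch replays the 2-way set associative simulation. It assumes LRU replacement, one of the policies listed above. In this particular trace no eviction happens, so the choice of policy does not change the outcome. The names `sa_line`, `sa_cache`, and `sa_access` are hypothetical. The trace 0, 1, 7, 8, 0 should give miss, hit, miss, miss, hit. Addresses 0 and 8 still share set 0, but now they fit in its two lines.

```c
#include <stdbool.h>

/* Parameters from the 2-way simulation: 4-bit addresses,
   s = 1 (S = 2 sets), b = 1 (B = 2 bytes), E = 2 lines per set. */
#define SA_S_BITS 1
#define SA_B_BITS 1
#define SA_SETS (1u << SA_S_BITS)
#define SA_WAYS 2

typedef struct {
    bool valid;
    unsigned tag;
    unsigned long last_use; /* timestamp of most recent access, for LRU */
} sa_line;

typedef struct {
    sa_line sets[SA_SETS][SA_WAYS];
    unsigned long ticks; /* incremented on every access */
} sa_cache;

/* Returns true on a hit. On a miss, fills an invalid line if one exists,
   otherwise evicts the least recently used line in the set. */
static bool sa_access(sa_cache *c, unsigned addr) {
    unsigned set = (addr >> SA_B_BITS) & (SA_SETS - 1);
    unsigned tag = addr >> (SA_S_BITS + SA_B_BITS);
    sa_line *lines = c->sets[set];
    c->ticks++;

    /* Compare the tag against every line in the set. */
    for (int i = 0; i < SA_WAYS; i++) {
        if (lines[i].valid && lines[i].tag == tag) {
            lines[i].last_use = c->ticks;
            return true;
        }
    }

    /* Miss: choose a victim line. */
    int victim = 0;
    for (int i = 0; i < SA_WAYS; i++) {
        if (!lines[i].valid) {
            victim = i;
            break;
        }
        if (lines[i].last_use < lines[victim].last_use)
            victim = i;
    }
    lines[victim].valid = true;
    lines[victim].tag = tag;
    lines[victim].last_use = c->ticks;
    return false;
}
```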

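This sketch makes the write policies above concrete by counting writes that reach main memory under the two typical pairings. It assumes a tiny direct-mapped cache (4 sets, 2-byte blocks) and models writes only. The names `wb_line`, `wb_cache`, `wb_write`, and `wb_flush` are hypothetical. The counts mix units: write-through sends each individual write to memory, while write-back sends a whole dirty block when the block is evicted or flushed.

```c
#include <stdbool.h>

/* Illustrative (assumed) geometry: 4 sets, 2-byte blocks, direct mapped. */
#define WB_S_BITS 2
#define WB_B_BITS 1
#define WB_SETS (1u << WB_S_BITS)

typedef struct {
    bool valid;
    bool dirty; /* line differs from memory (write-back only) */
    unsigned tag;
} wb_line;

typedef struct {
    wb_line sets[WB_SETS];
    bool write_back;     /* true: write-back + write-allocate;
                            false: write-through + no-write-allocate */
    unsigned mem_writes; /* write operations that reach main memory */
} wb_cache;

static void wb_write(wb_cache *c, unsigned addr) {
    unsigned set = (addr >> WB_B_BITS) & (WB_SETS - 1);
    unsigned tag = addr >> (WB_S_BITS + WB_B_BITS);
    wb_line *line = &c->sets[set];

    if (!c->write_back) {
        /* Write-through: every write goes to memory immediately.
           No-write-allocate: a miss does not load the block.
           (On a hit the cached copy would also be updated; data is not modeled.) */
        c->mem_writes++;
        return;
    }

    if (!(line->valid && line->tag == tag)) {
        /* Write-allocate on a miss: write back the old line only if it is
           dirty, then load the new block into this line. */
        if (line->valid && line->dirty)
            c->mem_writes++;
        line->valid = true;
        line->tag = tag;
    }
    /* Write-back: update only the cached copy and mark it dirty. */
    line->dirty = true;
}

/* Write back every remaining dirty line, e.g., before comparing totals. */
static void wb_flush(wb_cache *c) {
    for (unsigned i = 0; i < WB_SETS; i++) {
        if (c->sets[i].valid && c->sets[i].dirty) {
            c->mem_writes++;
            c->sets[i].dirty = false;
        }
    }
}
```

Under these assumptions, ten writes to address 0 cost ten memory writes under write-through. Under write-back they cost a single block write-back once the line is flushed.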

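Rearranging $C = S \times E \times B$ gives the number of sets for each Core i7 cache level listed above. With 64-byte blocks, b = 6 at every level. The L1 caches have 32 KB / (8 × 64 B) = 64 sets (s = 6). L2 has 512 sets (s = 9), and the 8 MB L3 has 8192 sets (s = 13). A small sketch with hypothetical helper names `num_sets` and `log2_exact`, assuming every size is a power of two:

```c
#include <stddef.h>

/* S = C / (E x B), rearranged from C = S x E x B. */
static size_t num_sets(size_t cache_bytes, size_t ways, size_t block_bytes) {
    return cache_bytes / (ways * block_bytes);
}

/* Number of index or offset bits: log2 of an exact power of two. */
static unsigned log2_exact(size_t x) {
    unsigned bits = 0;
    while (x > 1) {
        x >>= 1;
        bits++;
    }
    return bits;
}
```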
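The three performance metrics are often combined into a back-of-the-envelope estimate: average access time = hit time + miss rate × miss penalty. This one-level model is an added assumption, not taken from the slides, and it ignores overlapping misses and multi-level effects. The function names are hypothetical. Take a 4-cycle L1 hit time and an assumed 100-cycle miss penalty. A 3% miss rate then gives 4 + 0.03 × 100 = 7 cycles, while a 1% miss rate gives 5 cycles.

```c
/* Miss rate = misses / accesses = 1 - hit rate (0 if there were no accesses). */
static double miss_rate(unsigned long misses, unsigned long accesses) {
    return accesses ? (double)misses / (double)accesses : 0.0;
}

/* Simple one-level estimate, in cycles:
   average access time = hit time + miss rate x miss penalty. */
static double avg_access_cycles(double hit_time, double rate, double miss_penalty) {
    return hit_time + rate * miss_penalty;
}
```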