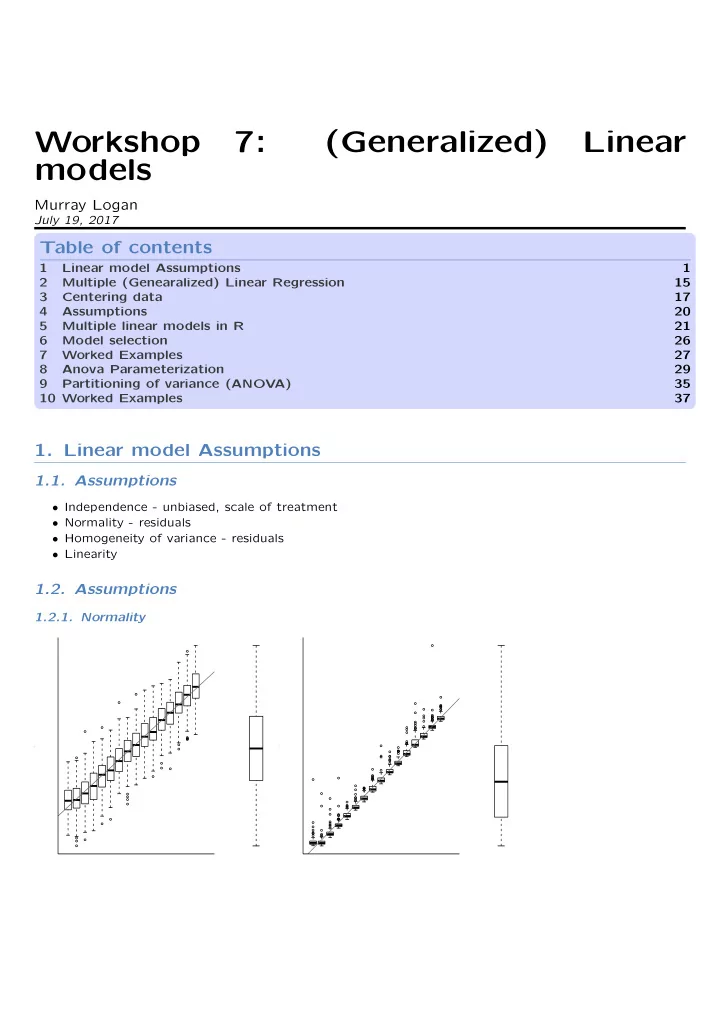

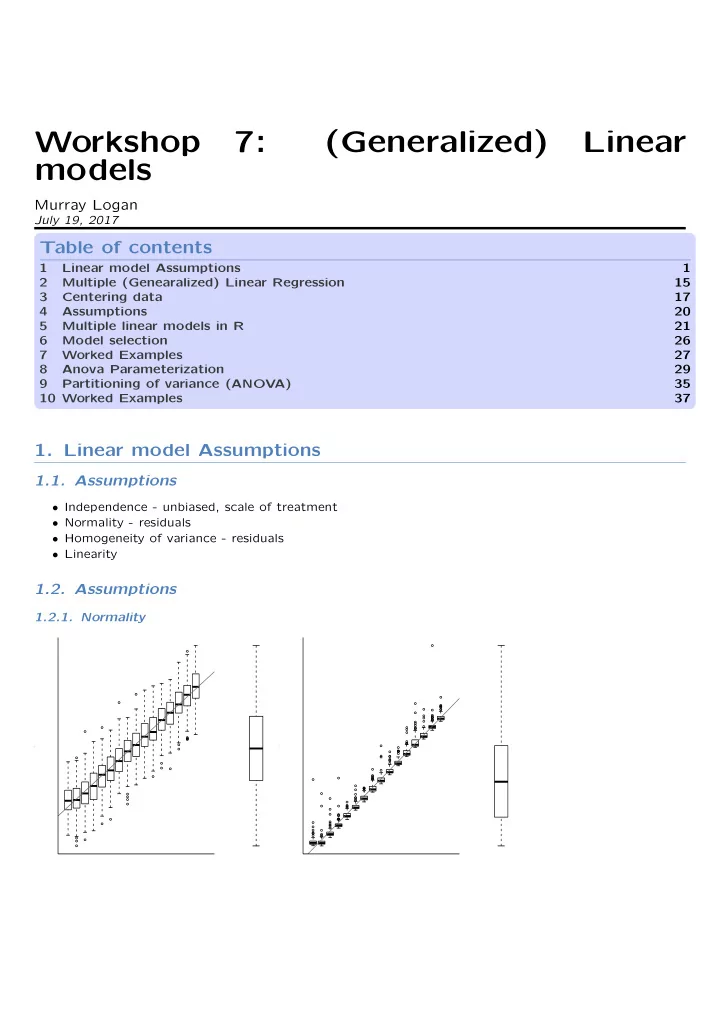

-1- Workshop 7: (Generalized) Linear models Murray Logan July 19, 2017 Table of contents 1 Linear model Assumptions 1 2 Multiple (Genearalized) Linear Regression 15 3 Centering data 17 4 Assumptions 20 5 Multiple linear models in R 21 6 Model selection 26 7 Worked Examples 27 8 Anova Parameterization 29 9 Partitioning of variance (ANOVA) 35 10 Worked Examples 37 1. Linear model Assumptions 1.1. Assumptions • Independence - unbiased, scale of treatment • Normality - residuals • Homogeneity of variance - residuals • Linearity 1.2. Assumptions 1.2.1. Normality ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● y y ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ●

● 2.5 ● ● ● ● ● ● -2- 2.0 ● ● ● ● 1.5 ● ● ● 1.0 ● ● ● ● 0.5 ● 0.0 0 5 10 15 20 25 30 1.3. Assumptions 1.3.1. Homogeneity of variance ● ● ● ● ● ● Residuals ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● res Y ● ● ● ● y y ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● X x Predicted x ● ● ● ● ● Residuals ● ● ● ● ● ● ● ● ● ● ● ● res Y y ● ● y ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● X x Predicted x ● 2.5 ● 1.4. Assumptions ● 1.4.1. Linearity ● ● ● Trendline ● ● ● 2.0 60 ● ● 2.5 ● ● ● ● ● ● ● ● ● 50 ● 2.0 ● 40 ● ● ● ● ● ● ● 1.5 ● ● ● ● 30 ● 1.5 ● ● ● 1.0 ● ● 20 ● ● ● ● ● ● ● ● 0.5 10 ● ● ● ● ● ● ● 0 0.0 ● 0 5 10 15 20 25 30 0 5 10 15 20 25 30 1.0 1.5. Assumptions ● ● 1.5.1. Linearity ● Loess (lowess) smoother 0.5 ● 0.0 0 5 10 15 20 25 30

-3- ● 60 ● ● ● ● 50 40 ● ● ● ● 30 ● ● ● 20 ● ● ● ● 10 ● ● ● ● 0 0 5 10 15 20 25 30 ● ● 2.5 ● ● ● ● ● ● 2.0 ● ● ● ● 1.5 ● ● ● ● 1.0 ● ● ● 0.5 ● 0.0 0 5 10 15 20 25 30 1.6. Assumptions 1.6.1. Linearity Spline smoother

-4- ● ● 60 ● ● 2.5 ● ● ● ● ● ● ● ● 50 ● 2.0 ● 40 ● ● ● ● ● ● 1.5 ● ● 30 ● ● ● ● 1.0 ● ● 20 ● ● ● ● ● ● ● 0.5 10 ● ● ● ● ● 0 0.0 0 5 10 15 20 25 30 0 5 10 15 20 25 30 1.7. Assumptions y i = β 0 + β 1 × x i + ε i ϵ i ∼ N (0, σ 2 ) 1.8. Assumptions y i = β 0 + β 1 × x i + ε i ϵ i ∼ N (0, σ 2 ) 1.9. The linear predictor y = β 0 + β 1 x Y X 3 0 2.5 1 6 2 5.5 3 9 4 8.6 5 12 6 β 0 × 1 β 1 × 0 3.0 = + β 0 × 1 β 1 × 1 2.5 = + β 0 × 1 β 1 × 2 6.0 = + 5.5 = β 0 × 1 + β 1 × 3 9.0 = β 0 × 1 + β 1 × 4 8.6 = β 0 × 1 + β 1 × 5 12.0 = β 0 × 1 + β 1 × 6

-5- 3.0 1 0 2.5 1 1 6.0 1 2 ( β 0 ) 5.5 = 1 3 × β 1 9.0 1 4 � �� � 8.6 1 5 Parameter vector 12.0 1 6 � �� � � �� � Response values Model matrix 1.10. Linear models in R > lm(formula, data= DATAFRAME) Model R formula Description y i = β 0 + β 1 x i y~1+x Full model y~x y i = β 0 Null model y~1 y i = β 1 Through origin y~-1+x > lm(Y~X, data= DATA) Call: lm(formula = Y ~ X, data = DATA) Coefficients: (Intercept) X 2.136 1.507 1.11. Linear models in R > Xmat = model.matrix(~X, data=DATA) > Xmat (Intercept) X 1 1 0 2 1 1 3 1 2 4 1 3 5 1 4 6 1 5 7 1 6 attr(,"assign") [1] 0 1 > lm.fit(Xmat,DATA$Y) $coefficients (Intercept) X 2.135714 1.507143

-6- $residuals [1] 0.8642857 -1.1428571 0.8500000 -1.1571429 0.8357143 -1.0714286 0.8214286 $effects (Intercept) X -17.6131444 7.9750504 0.6507186 -1.5697499 0.2097817 -1.9106868 -0.2311552 $rank [1] 2 $fitted.values [1] 2.135714 3.642857 5.150000 6.657143 8.164286 9.671429 11.178571 $assign [1] 0 1 $qr $qr (Intercept) X 1 -2.6457513 -7.93725393 2 0.3779645 5.29150262 3 0.3779645 0.03347335 4 0.3779645 -0.15550888 5 0.3779645 -0.34449112 6 0.3779645 -0.53347335 7 0.3779645 -0.72245559 attr(,"assign") [1] 0 1 $qraux [1] 1.377964 1.222456 $pivot [1] 1 2 $tol [1] 1e-07 $rank [1] 2 attr(,"class") [1] "qr" $df.residual [1] 5 1.12. Example 1.12.1. Linear Model N (0, σ 2 ) y i = β 0 + β 1 x i > DATA.lm<-lm(Y~X, data=DATA)

-7- 1.12.2. Generalized Linear Model N (0, σ 2 ) g ( y i ) = β 0 + β 1 x i > DATA.glm<-glm(Y~X, data=DATA, family='gaussian') 1.13. Model diagnostics 1.13.1. Residuals 30 25 20 ● ● y 15 ● 10 ● ● ● 5 ● ● 0 0 1 2 3 4 5 6 x 1.14. Model diagnostics

-8- 1.14.1. Residuals 30 25 20 ● ● y 15 ● 10 ● ● ● 5 ● ● 0 0 1 2 3 4 5 6 x 1.15. Model diagnostics 1.15.1. Leverage 30 ● ● 25 20 y 15 10 ● ● ● ● 5 ● ● 0 0 5 10 15 20 x

-9- 1.16. Model diagnostics 1.16.1. Cook’s D 50 40 ● ● 30 y 20 10 ● ● ● ● ● ● 0 0 5 10 15 20 x 1.17. Example 1.17.1. Model evaluation Extractor Description residuals() Extracts residuals from model 15 ● 10 ● ● ● y 5 ● ● ● 0 0 1 2 3 4 5 6 x > residuals(DATA.lm) 1 2 3 4 5 6 7 0.8642857 -1.1428571 0.8500000 -1.1571429 0.8357143 -1.0714286 0.8214286 1.18. Example

-10- 1.18.1. Model evaluation Extractor Description residuals() Extracts residuals from model fitted() Extracts the predicted values 15 ● ● 10 ● ● ● ● ● y ● 5 ● ● ● ● ● ● 0 0 1 2 3 4 5 6 x > fitted(DATA.lm) 1 2 3 4 5 6 7 2.135714 3.642857 5.150000 6.657143 8.164286 9.671429 11.178571 1.19. Example 1.19.1. Model evaluation Extractor Description Extracts residuals from model residuals() fitted() Extracts the predicted values plot() Series of diagnostic plots > plot(DATA.lm)

-11- Residuals vs Fitted Normal Q−Q 1.0 Standardized residuals 1.0 ● ● ● ● ● ● ● ● Residuals 0.0 0.0 −1.0 −1.0 ● ● ● 6 2 4 4 6 ● ● ● 2 2 4 6 8 10 −1.0 0.0 0.5 1.0 Fitted values Theoretical Quantiles Scale−Location Residuals vs Leverage Standardized residuals 2 ● Standardized residuals ● 4 6 ● 1.0 ● 1 7 ● 0.5 ● ● ● ● ● ● 0.8 0.0 0.4 −1.0 0.5 ● ● Cook's distance ● 2 0.0 2 4 6 8 10 0.0 0.1 0.2 0.3 0.4 Fitted values Leverage 1.20. Example 1.20.1. Model evaluation Extractor Description residuals() Residuals Predicted values fitted() Diagnostic plots plot() Leverage (hat) and Cook’s D influence.measures() 1.21. Example 1.21.1. Model evaluation > influence.measures(DATA.lm) Influence measures of lm(formula = Y ~ X, data = DATA) : dfb.1_ dfb.X dffit cov.r cook.d hat inf 1 0.9603 -7.99e-01 0.960 1.82 0.4553 0.464 2 -0.7650 5.52e-01 -0.780 1.15 0.2756 0.286 3 0.3165 -1.63e-01 0.365 1.43 0.0720 0.179 4 -0.2513 -7.39e-17 -0.453 1.07 0.0981 0.143 5 0.0443 1.60e-01 0.357 1.45 0.0696 0.179 6 0.1402 -5.06e-01 -0.715 1.26 0.2422 0.286 7 -0.3466 7.50e-01 0.901 1.91 0.4113 0.464 1.22. Example 1.22.1. Model evaluation

-12- Extractor Description Residuals residuals() Predicted values fitted() Diagnostic plots plot() Leverage, Cook’s D influence.measures() Summarizes important output from model summary() 1.23. Example 1.23.1. Model evaluation > summary(DATA.lm) Call: lm(formula = Y ~ X, data = DATA) Residuals: 1 2 3 4 5 6 7 0.8643 -1.1429 0.8500 -1.1571 0.8357 -1.0714 0.8214 Coefficients: Estimate Std. Error t value Pr(>|t|) (Intercept) 2.1357 0.7850 2.721 0.041737 * X 1.5071 0.2177 6.923 0.000965 *** --- Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 Residual standard error: 1.152 on 5 degrees of freedom Multiple R-squared: 0.9055, Adjusted R-squared: 0.8866 F-statistic: 47.92 on 1 and 5 DF, p-value: 0.0009648 1.24. Example 1.24.1. Model evaluation Extractor Description Residuals residuals() Predicted values fitted() Diagnostic plots plot() Leverage, Cook’s D influence.measures() Model output summary() Confidence intervals of parameters confint() 1.25. Example

Recommend

More recommend