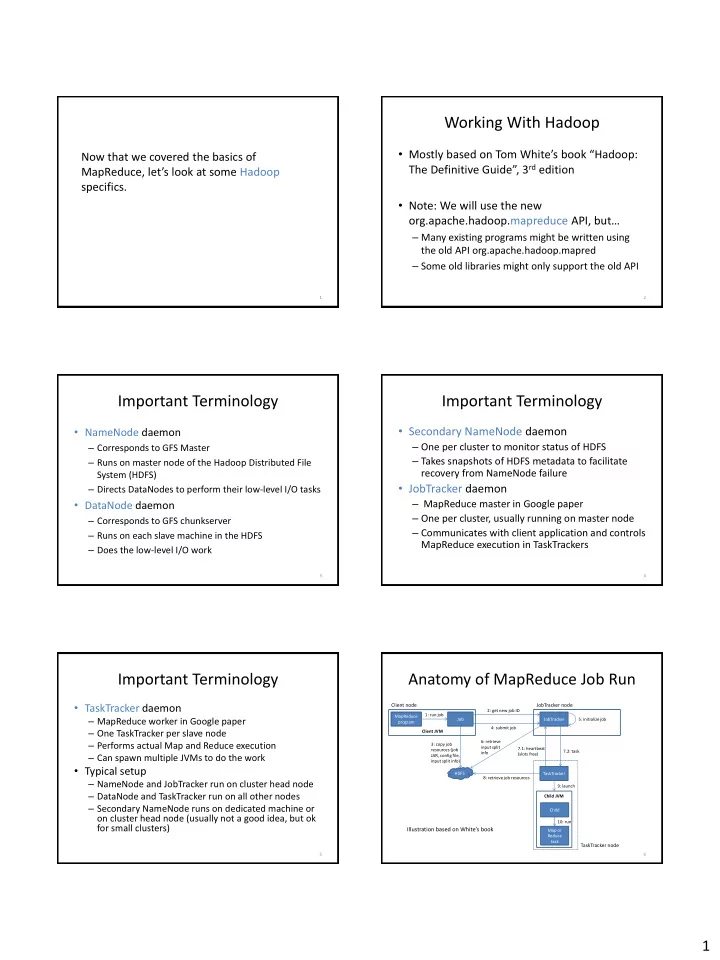

Working With Hadoop • Mostly based on Tom White’s book “ Hadoop: Now that we covered the basics of The Definitive Guide”, 3 rd edition MapReduce , let’s look at some Hadoop specifics. • Note: We will use the new org.apache.hadoop.mapreduce API, but… – Many existing programs might be written using the old API org.apache.hadoop.mapred – Some old libraries might only support the old API 1 2 Important Terminology Important Terminology • Secondary NameNode daemon • NameNode daemon – One per cluster to monitor status of HDFS – Corresponds to GFS Master – Takes snapshots of HDFS metadata to facilitate – Runs on master node of the Hadoop Distributed File recovery from NameNode failure System (HDFS) • JobTracker daemon – Directs DataNodes to perform their low-level I/O tasks • DataNode daemon – MapReduce master in Google paper – One per cluster, usually running on master node – Corresponds to GFS chunkserver – Communicates with client application and controls – Runs on each slave machine in the HDFS MapReduce execution in TaskTrackers – Does the low-level I/O work 3 4 Important Terminology Anatomy of MapReduce Job Run • TaskTracker daemon Client node JobTracker node 2: get new job ID 1: run job MapReduce – MapReduce worker in Google paper Job JobTracker 5: initialize job program 4: submit job – One TaskTracker per slave node Client JVM – Performs actual Map and Reduce execution 6: retrieve 3: copy job input split 7.1: heartbeat resources (job 7.2: task – Can spawn multiple JVMs to do the work info (slots free) JAR, config file, input split info) • Typical setup HDFS TaskTracker 8: retrieve job resources – NameNode and JobTracker run on cluster head node 9: launch – DataNode and TaskTracker run on all other nodes Child JVM – Secondary NameNode runs on dedicated machine or Child on cluster head node (usually not a good idea, but ok 10: run for small clusters) Illustration based on White’s book Map or Reduce task TaskTracker node 5 6 1

Job Submission Job Initialization • Client submits MapReduce job through Job.submit() • JobTracker puts ready job into internal queue call • Job scheduler picks job from queue – waitForCompletion() submits job and polls JobTracker about progress every sec, outputs to console if changed – Initializes it by creating job object • Job submission process – Creates list of tasks – Get new job ID from JobTracker • One map task for each input split – Determine input splits for job • Number of reduce tasks determined by – Copy job resources (job JAR file, configuration file, mapred.reduce.tasks property in Job, which is set by computed input splits) to HDFS into directory named after setNumReduceTasks() the job ID – Informs JobTracker that job is ready for execution • Tasks need to be assigned to worker nodes 7 8 Task Assignment Task Execution • TaskTrackers send heartbeat to JobTracker • TaskTracker copies job JAR and other – Indicate if ready to run new tasks configuration data (e.g., distributed cache) – Number of “slots” for tasks depends on number of from HDFS to local disk cores and memory size • Creates local working directory • JobTracker replies with new task • Creates TaskRunner instance – Chooses task from first job in priority-queue • Chooses map tasks before reduce tasks • TaskRunner launches new JVM (or reuses one • Chooses map task whose input split location is closest to from another task) to execute the JAR machine running the TaskTracker instance – Ideal case: data-local task – Could also use other scheduling policy 9 10 Monitoring Job Progress Handling Failures: Task • Error reported to TaskTracker and logged • Tasks report progress to TaskTracker • Hanging task detected through timeout • TaskTracker includes task progress in • JobTracker will automatically re-schedule heartbeat message to JobTracker failed tasks • JobTracker computes global status of job – Tries up to mapred.map.max.attempts many times progress (similar for reduce) • JobClient polls JobTracker regularly for status – Job is aborted when task failure rate exceeds mapred.max.map.failures.percent (similar for • Visible on console and Web UI reduce) 11 12 2

Handling Failures: TaskTracker and Moving Data From Mappers to JobTracker Reducers • Shuffle and sort phase = synchronization barrier • TaskTracker failure detected by JobTracker between map and reduce phase from missing heartbeat messages • Often one of the most expensive parts of a – JobTracker re-schedules map tasks and not MapReduce execution completed reduce tasks from that TaskTracker • Mappers need to separate output intended for • Hadoop cannot deal with JobTracker failure different reducers – Could use Google’s proposed JobTracker take-over • Reducers need to collect their data from all idea, using ZooKeeper to make sure there is at mappers and group it by key most one JobTracker • Keys at each reducer are processed in order 13 14 Shuffle and Sort Overview NCDC Weather Data Example • Raw data has lines like these (year, temperature in Reduce task starts copying data from map task as soon as it completes. Reduce cannot start working on the data bold ) until all mappers have finished and their data has arrived. – 006701199099999 1950 051507004+68750+023550FM- Map task Reduce task Merge happens in Spill files on Spill files merged memory if data fits, 12+038299999V0203301N00671220001CN9999999N9 +00 disk: partitioned Spilled to a into single output otherwise also on disk by reduce task, 00 1+99999999999 new disk each partition file file when sorted by key – 004301199099999 1950 051512004+68750+023550FM- almost full merge R Fetch over HTTP e 12+038299999V0203201N00671220001CN9999999N9 +00 M Buffer in d a merge 22 1+99999999999 memory u p c • Goal: find max temperature for each year merge e Input – Map: emit (year, temp) for each year Output split – Reduce: compute max over temp from (year, (temp, temp,…)) list There are tuning parameters To other Reduces to control the performance From other Maps of this crucial phase. Illustration based on White’s book 15 16 Map Map() Method • Hadoop’s Mapper class • Input: input key type, input value type (and a – Org.apache.hadoop.mapreduce.Mapper Context) • Type parameters: input key type, input value type, – Line of text from NCDC file output key type, and output value type – Converted to Java String type, then parsed to get – Input key: line’s offset in file (irrelevant) – Input value: line from NCDC file year and temperature – Output key: year • Output: written using Context – Output value: temperature – Uses output key and value types • Data types are optimized for network serialization • Only write (year, temp) pair if the temperature – Found in org.apache.hadoop.io package • Work is done by the map() method is present and quality indicator reading is OK 17 18 3

Recommend

More recommend