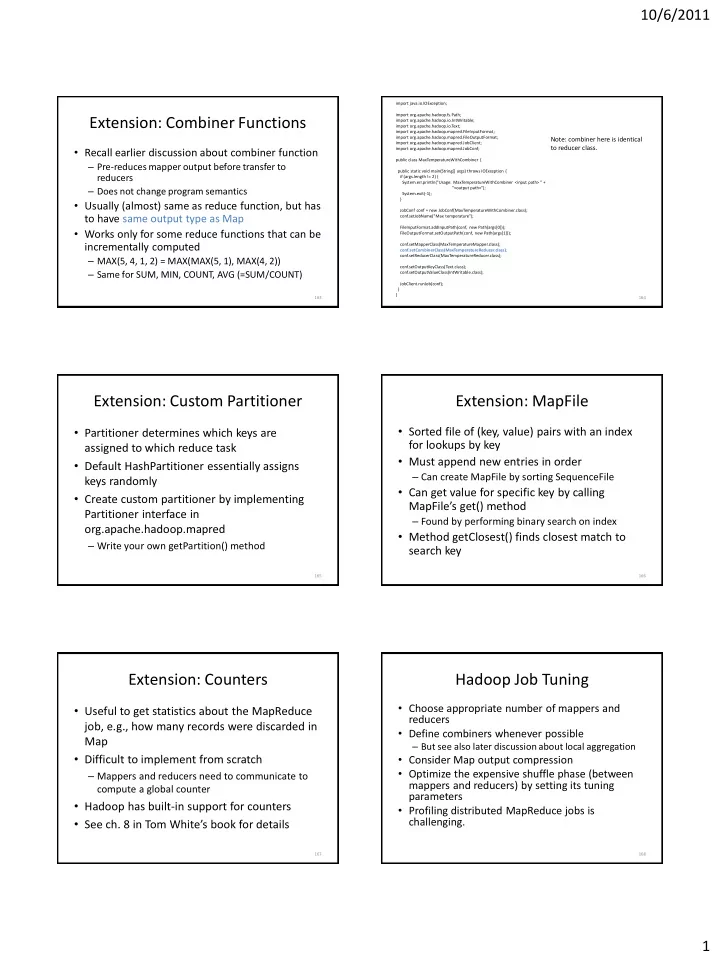

10/6/2011

Extension: Combiner Functions
• Recall earlier discussion about combiner function
  – Pre-reduces mapper output before transfer to reducers
  – Does not change program semantics
• Usually (almost) same as reduce function, but has to have same output type as Map
• Works only for some reduce functions that can be incrementally computed
  – MAX(5, 4, 1, 2) = MAX(MAX(5, 1), MAX(4, 2))
  – Same for SUM, MIN, COUNT, AVG (=SUM/COUNT)

Note: combiner here is identical to reducer class.

    import java.io.IOException;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapred.FileInputFormat;
    import org.apache.hadoop.mapred.FileOutputFormat;
    import org.apache.hadoop.mapred.JobClient;
    import org.apache.hadoop.mapred.JobConf;

    public class MaxTemperatureWithCombiner {
      public static void main(String[] args) throws IOException {
        if (args.length != 2) {
          System.err.println("Usage: MaxTemperatureWithCombiner <input path> " +
              "<output path>");
          System.exit(-1);
        }
        JobConf conf = new JobConf(MaxTemperatureWithCombiner.class);
        conf.setJobName("Max temperature");
        FileInputFormat.addInputPath(conf, new Path(args[0]));
        FileOutputFormat.setOutputPath(conf, new Path(args[1]));
        conf.setMapperClass(MaxTemperatureMapper.class);
        conf.setCombinerClass(MaxTemperatureReducer.class);
        conf.setReducerClass(MaxTemperatureReducer.class);
        conf.setOutputKeyClass(Text.class);
        conf.setOutputValueClass(IntWritable.class);
        JobClient.runJob(conf);
      }
    }

Extension: Custom Partitioner
• Partitioner determines which keys are assigned to which reduce task
• Default HashPartitioner essentially assigns keys randomly
• Create custom partitioner by implementing Partitioner interface in org.apache.hadoop.mapred
  – Write your own getPartition() method

Extension: MapFile
• Sorted file of (key, value) pairs with an index for lookups by key
• Must append new entries in order
  – Can create MapFile by sorting SequenceFile
• Can get value for specific key by calling MapFile’s get() method
  – Found by performing binary search on index
• Method getClosest() finds closest match to search key

Extension: Counters
• Useful to get statistics about the MapReduce job, e.g., how many records were discarded in Map
• Difficult to implement from scratch
  – Mappers and reducers need to communicate to compute a global counter
• Hadoop has built-in support for counters
• See ch. 8 in Tom White’s book for details

Hadoop Job Tuning
• Choose appropriate number of mappers and reducers
• Define combiners whenever possible
  – But see also later discussion about local aggregation
• Consider Map output compression
• Optimize the expensive shuffle phase (between mappers and reducers) by setting its tuning parameters
• Profiling distributed MapReduce jobs is challenging.
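Returning to the combiner slide above, the claim that MAX and AVG (=SUM/COUNT) can be incrementally computed can be checked with a small plain-Java sketch. It uses no Hadoop classes; the class name `CombinerSketch` and its helper methods are hypothetical and exist only for this illustration. The two input lists stand in for the outputs of two mappers.

```java
import java.util.Arrays;
import java.util.List;

/** Plain-Java illustration (no Hadoop classes) of pre-reducing MAX and AVG. */
final class CombinerSketch {

    /** Reduce function for MAX; it can be reused unchanged as the combiner. */
    static int max(List<Integer> values) {
        int best = Integer.MIN_VALUE;
        for (int v : values) {
            best = Math.max(best, v);
        }
        return best;
    }

    /** Combiner step for AVG: collapse raw values into a {sum, count} pair. */
    static long[] partialSumCount(List<Integer> values) {
        long sum = 0;
        for (int v : values) {
            sum += v;
        }
        return new long[] {sum, values.size()};
    }

    /** Reduce step for AVG: merge the partial pairs, then divide once at the end. */
    static double averageFromPartials(List<long[]> partials) {
        long sum = 0;
        long count = 0;
        for (long[] p : partials) {
            sum += p[0];
            count += p[1];
        }
        return (double) sum / count;
    }

    public static void main(String[] args) {
        // Pretend these are the values emitted by two different mappers for one key.
        List<Integer> mapper1 = Arrays.asList(5, 1);
        List<Integer> mapper2 = Arrays.asList(4, 2);

        int combinedMax = max(Arrays.asList(max(mapper1), max(mapper2)));
        System.out.println("MAX via combiners: " + combinedMax);

        double avg = averageFromPartials(
                Arrays.asList(partialSumCount(mapper1), partialSumCount(mapper2)));
        System.out.println("AVG via (sum, count) partials: " + avg);
    }
}
```

The AVG case is why the combiner is only "almost" the same as the reducer: averaging per-mapper averages would be wrong for uneven splits. For (5, 1, 3) and (4), the average of the averages is 3.5, while the true average is 3.25, so the combiner has to forward sums and counts instead.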

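For the custom partitioner slide, the logic that a getPartition() method would contain can be sketched without Hadoop on the classpath. This is a minimal sketch under stated assumptions: `PartitionSketch`, `hashPartition`, and `stationPartition` are hypothetical names, the key layout "stationId-date" is invented for the example, and the hash rule reflects how the default HashPartitioner is commonly described (non-negative hash code modulo the number of reduce tasks).

```java
/** Plain-Java sketch of partitioning rules; does not implement Hadoop's Partitioner. */
final class PartitionSketch {

    /** Hash rule: clear the sign bit, then take the remainder by the number of reducers. */
    static int hashPartition(String key, int numReduceTasks) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    /**
     * Hypothetical custom rule for keys shaped like "stationId-date": partition on the
     * station part only, so every record of a station goes to the same reduce task.
     */
    static int stationPartition(String key, int numReduceTasks) {
        int dash = key.indexOf('-');
        String station = dash < 0 ? key : key.substring(0, dash);
        return (station.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    public static void main(String[] args) {
        int reducers = 4;
        String[] keys = {"011990-19500101", "011990-19501231", "012650-19500101"};
        for (String key : keys) {
            System.out.println(key
                    + " hash=" + hashPartition(key, reducers)
                    + " station=" + stationPartition(key, reducers));
        }
    }
}
```

In a real job the body of `stationPartition` would live inside getPartition() of a class implementing the Partitioner interface mentioned on the slide.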
Hadoop and Other Programming Languages
• Hadoop Streaming API to write map and reduce functions in languages other than Java
  – Any language that can read from standard input and write to standard output
• Hadoop Pipes API for using C++
  – Uses sockets to communicate with Hadoop’s task trackers

Multiple MapReduce Steps
• Example: find average max temp for every day of the year and every weather station
  – Find max temp for each combination of station and day/month/year
  – Compute average for each combination of station and day/month
• Can be done in two MapReduce jobs
  – Could also combine it into single job, which would be faster

Running a MapReduce Workflow
• Linear chain of jobs
  – To run job2 after job1, create JobConf objects conf1 and conf2 in main function
  – Call JobClient.runJob(conf1); JobClient.runJob(conf2);
  – Catch exceptions to re-start failed jobs in pipeline
• More complex workflows
  – Use JobControl from org.apache.hadoop.mapred.jobcontrol
  – We will see soon how to use Pig for this

MapReduce Coding Summary
• Decompose problem into appropriate workflow of MapReduce jobs
• For each job, implement the following
  – Job configuration
  – Map function
  – Reduce function
  – Combiner function (optional)
  – Partition function (optional)
• Might have to create custom data types as well
  – WritableComparable for keys
  – Writable for values

Let’s see how we can create complex MapReduce workflows by programming in a high-level language.

The Pig System
• Christopher Olston, Benjamin Reed, Utkarsh Srivastava, Ravi Kumar, Andrew Tomkins: Pig Latin: a not-so-foreign language for data processing. SIGMOD Conference 2008: 1099-1110
• Several slides courtesy Chris Olston and Utkarsh Srivastava
• Open source project under the Apache Hadoop umbrella
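The two-job example from "Multiple MapReduce Steps" can be mimicked in memory to see how the output of the first job feeds the second. The sketch below is plain Java, not Hadoop code: `TwoStepSketch`, its method names, and the record layout {station, month-day, year, temperature} are all assumptions made for illustration. In an actual pipeline each method would correspond to one job, run in sequence with JobClient.runJob(conf1); JobClient.runJob(conf2);.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** In-memory imitation of a two-job pipeline: max per date, then average of maxima. */
final class TwoStepSketch {

    /** Job 1: key = "station,month-day,year", value = max temperature for that key. */
    static Map<String, Integer> maxPerStationAndDate(List<String[]> readings) {
        Map<String, Integer> max = new TreeMap<>();
        for (String[] r : readings) {
            String key = r[0] + "," + r[1] + "," + r[2];
            max.merge(key, Integer.parseInt(r[3]), Integer::max);
        }
        return max;
    }

    /** Job 2: drop the year from the key and average the per-year maxima. */
    static Map<String, Double> averageOfMaxima(Map<String, Integer> job1Output) {
        Map<String, long[]> sumCount = new TreeMap<>();
        for (Map.Entry<String, Integer> e : job1Output.entrySet()) {
            String key = e.getKey().substring(0, e.getKey().lastIndexOf(','));
            long[] sc = sumCount.computeIfAbsent(key, k -> new long[2]);
            sc[0] += e.getValue(); // running sum of maxima
            sc[1]++;               // number of years seen
        }
        Map<String, Double> avg = new TreeMap<>();
        for (Map.Entry<String, long[]> e : sumCount.entrySet()) {
            avg.put(e.getKey(), (double) e.getValue()[0] / e.getValue()[1]);
        }
        return avg;
    }

    public static void main(String[] args) {
        // Made-up readings: {station, month-day, year, temperature}.
        List<String[]> readings = Arrays.asList(
                new String[] {"S1", "01-01", "1950", "10"},
                new String[] {"S1", "01-01", "1950", "14"},
                new String[] {"S1", "01-01", "1951", "20"},
                new String[] {"S1", "01-01", "1951", "18"},
                new String[] {"S2", "01-01", "1950", "5"});
        Map<String, Integer> job1 = maxPerStationAndDate(readings);
        System.out.println(averageOfMaxima(job1));
    }
}
```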

Overview
• Design goal: find sweet spot between declarative style of SQL and low-level procedural style of MapReduce
• Programmer creates Pig Latin program, using high-level operators
• Pig Latin program is compiled to MapReduce program to run on Hadoop

Why Not SQL or Plain MapReduce?
• SQL difficult to use and debug for many programmers
• Programmer might not trust automatic optimizer and prefers to hard-code best query plan
• Plain MapReduce lacks convenience of readily available, reusable data manipulation operators like selection, projection, join, sort
• Program semantics hidden in “opaque” Java code
  – More difficult to optimize and maintain

Example Data Analysis Task
Find the top 10 most visited pages in each category

Visits

| User | Url | Time |
|------|-----|------|
| Amy | cnn.com | 8:00 |
| Amy | bbc.com | 10:00 |
| Amy | flickr.com | 10:05 |
| Fred | cnn.com | 12:00 |

Url Info

| Url | Category | PageRank |
|-----|----------|----------|
| cnn.com | News | 0.9 |
| bbc.com | News | 0.8 |
| flickr.com | Photos | 0.7 |
| espn.com | Sports | 0.9 |

Data Flow
• Load Visits
  – Group by url
  – Foreach url generate count
• Load Url Info
• Join on url
• Group by category
• Foreach category generate top10 urls

In Pig Latin

    visits = load '/data/visits' as (user, url, time);
    gVisits = group visits by url;
    visitCounts = foreach gVisits generate url, count(visits);
    urlInfo = load '/data/urlInfo' as (url, category, pRank);
    visitCounts = join visitCounts by url, urlInfo by url;
    gCategories = group visitCounts by category;
    topUrls = foreach gCategories generate top(visitCounts,10);
    store topUrls into '/data/topUrls';

Pig Latin Notes
• No need to import data into database
  – Pig Latin works directly with files
• Schemas are optional and can be assigned dynamically
  – Load '/data/visits' as (user, url, time);
• Can call user-defined functions in every construct like Load, Store, Group, Filter, Foreach
  – Foreach gCategories generate top(visitCounts,10);
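To make the data flow of the example task concrete (group by url, count, join on url, group by category, take the top urls), here is an in-memory Java sketch of the same steps. It is not what Pig generates; `TopUrlsSketch` and `topUrlsPerCategory` are hypothetical names, ties are broken alphabetically by url as an arbitrary choice, and the input is the small sample from the Visits and Url Info tables.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** In-memory walk through the example data flow: count, join, group, top-n. */
final class TopUrlsSketch {

    static Map<String, List<String>> topUrlsPerCategory(
            List<String[]> visits, Map<String, String> urlToCategory, int n) {
        // Group by url; foreach url generate count.
        Map<String, Integer> visitCounts = new TreeMap<>();
        for (String[] visit : visits) {
            visitCounts.merge(visit[1], 1, Integer::sum);
        }
        // Join on url (inner join), then group by category.
        Map<String, List<Map.Entry<String, Integer>>> byCategory = new TreeMap<>();
        for (Map.Entry<String, Integer> e : visitCounts.entrySet()) {
            String category = urlToCategory.get(e.getKey());
            if (category == null) {
                continue; // url without category info drops out of the join
            }
            byCategory.computeIfAbsent(category,
                    k -> new ArrayList<Map.Entry<String, Integer>>()).add(e);
        }
        // Foreach category generate the n most visited urls.
        Map<String, List<String>> top = new TreeMap<>();
        for (Map.Entry<String, List<Map.Entry<String, Integer>>> e : byCategory.entrySet()) {
            List<Map.Entry<String, Integer>> entries = e.getValue();
            entries.sort((a, b) -> {
                int byCount = Integer.compare(b.getValue(), a.getValue());
                return byCount != 0 ? byCount : a.getKey().compareTo(b.getKey());
            });
            List<String> urls = new ArrayList<>();
            for (int i = 0; i < Math.min(n, entries.size()); i++) {
                urls.add(entries.get(i).getKey());
            }
            top.put(e.getKey(), urls);
        }
        return top;
    }

    public static void main(String[] args) {
        List<String[]> visits = Arrays.asList(
                new String[] {"Amy", "cnn.com", "8:00"},
                new String[] {"Amy", "bbc.com", "10:00"},
                new String[] {"Amy", "flickr.com", "10:05"},
                new String[] {"Fred", "cnn.com", "12:00"});
        Map<String, String> urlToCategory = new HashMap<>();
        urlToCategory.put("cnn.com", "News");
        urlToCategory.put("bbc.com", "News");
        urlToCategory.put("flickr.com", "Photos");
        urlToCategory.put("espn.com", "Sports");
        System.out.println(topUrlsPerCategory(visits, urlToCategory, 10));
    }
}
```

Comparing this with the eight-line Pig Latin program for the same task gives a feel for how much plumbing the high-level operators hide.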

Pig Latin Data Model
• Fully-nestable data model with:
  – Atomic values, tuples, bags (lists), and maps
• More natural to programmers than flat tuples
  – Can flatten nested structures using FLATTEN
• Avoids expensive joins, but more complex to process

[Figure: nested data example with the values yahoo, finance, email, news]

Pig Latin Operators: LOAD
• Reads data from file and optionally assigns schema to each record
• Can use custom deserializer

    queries = LOAD 'query_log.txt' USING myLoad()
              AS (userId, queryString, timestamp);

Pig Latin Operators: FOREACH
• Applies processing to each record of a data set
• No dependence between the processing of different records
  – Allows efficient parallel implementation
• GENERATE creates output records for a given input record

    expanded_queries = FOREACH queries GENERATE userId, expandQuery(queryString);

Pig Latin Operators: FILTER
• Remove records that do not pass filter condition
• Can use user-defined function in filter condition

    real_queries = FILTER queries BY userId neq 'bot';

Pig Latin Operators: COGROUP
• Group together records from one or more data sets

results

| queryString | url | rank |
|-------------|-----|------|
| Lakers | nba.com | 1 |
| Lakers | espn.com | 2 |
| Kings | nhl.com | 1 |
| Kings | nba.com | 2 |

revenue

| queryString | adSlot | amount |
|-------------|--------|--------|
| Lakers | top | 50 |
| Lakers | side | 20 |
| Kings | top | 30 |
| Kings | side | 10 |

    COGROUP results BY queryString, revenue BY queryString

Output, one record per queryString with one bag per input data set:

    (Lakers, {(Lakers, nba.com, 1), (Lakers, espn.com, 2)}, {(Lakers, top, 50), (Lakers, side, 20)})
    (Kings, {(Kings, nhl.com, 1), (Kings, nba.com, 2)}, {(Kings, top, 30), (Kings, side, 10)})

Pig Latin Operators: GROUP
• Special case of COGROUP, to group single data set by selected fields
• Similar to GROUP BY in SQL, but does not need to apply aggregate function to records in each group

    grouped_revenue = GROUP revenue BY queryString;
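The COGROUP slide's behavior, one output record per key holding a separate bag for each input, can be imitated in plain Java. This is only a sketch of the semantics, not of Pig's implementation: `CogroupSketch` and `cogroup` are hypothetical names, rows are string arrays whose first field is the grouping key, and nested lists stand in for bags.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** In-memory imitation of COGROUP on the first field of two inputs. */
final class CogroupSketch {

    /** For each key: element 0 holds the matching left rows, element 1 the right rows. */
    static Map<String, List<List<String[]>>> cogroup(List<String[]> left, List<String[]> right) {
        Map<String, List<List<String[]>>> out = new TreeMap<>();
        addAll(out, left, 0);
        addAll(out, right, 1);
        return out;
    }

    private static void addAll(Map<String, List<List<String[]>>> out,
                               List<String[]> rows, int side) {
        for (String[] row : rows) {
            List<List<String[]>> bags = out.get(row[0]);
            if (bags == null) {
                // First time this key is seen: create one empty bag per input.
                bags = new ArrayList<List<String[]>>();
                bags.add(new ArrayList<String[]>());
                bags.add(new ArrayList<String[]>());
                out.put(row[0], bags);
            }
            bags.get(side).add(row);
        }
    }

    public static void main(String[] args) {
        List<String[]> results = Arrays.asList(
                new String[] {"Lakers", "nba.com", "1"},
                new String[] {"Lakers", "espn.com", "2"},
                new String[] {"Kings", "nhl.com", "1"},
                new String[] {"Kings", "nba.com", "2"});
        List<String[]> revenue = Arrays.asList(
                new String[] {"Lakers", "top", "50"},
                new String[] {"Lakers", "side", "20"},
                new String[] {"Kings", "top", "30"},
                new String[] {"Kings", "side", "10"});
        for (Map.Entry<String, List<List<String[]>>> e : cogroup(results, revenue).entrySet()) {
            System.out.println(e.getKey()
                    + ": results rows=" + e.getValue().get(0).size()
                    + ", revenue rows=" + e.getValue().get(1).size());
        }
    }
}
```

Calling `cogroup` with an empty second list gives the single-input case, which is how the GROUP slide describes GROUP: a special case of COGROUP.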
