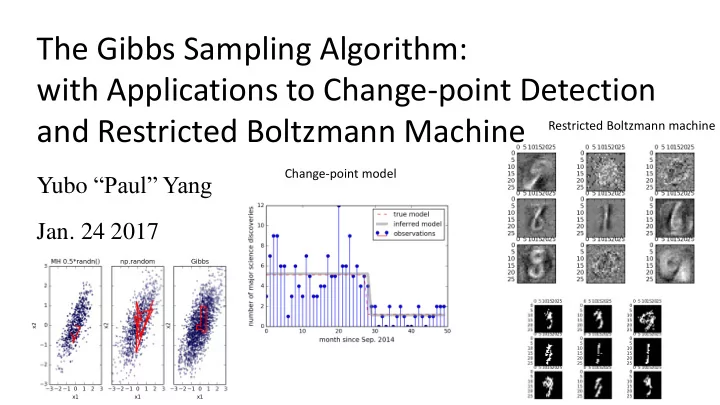

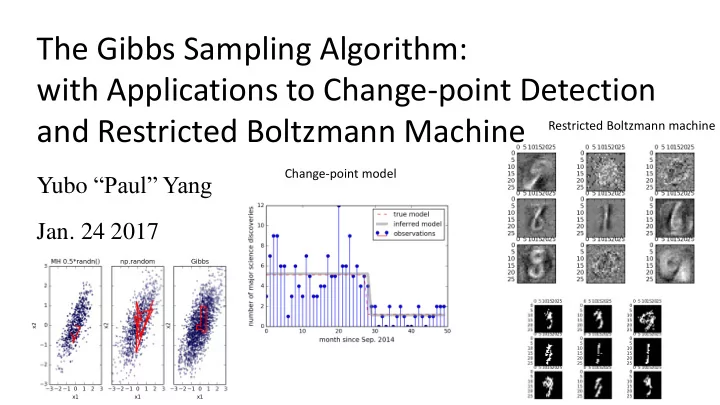

The Gibbs Sampling Algorithm: with Applications to Change-point Detection and Restricted Boltzmann Machine

Yubo “Paul” Yang, Jan. 24 2017

[Figure panels: Restricted Boltzmann machine; Change-point model]

Introduction: History

Stuart Geman @ Brown
• 1971 B.S. in Physics from UMich
• 1973 MS in Neurophysiology from Dartmouth
• 1977 Ph.D in Applied Mathematics from MIT

Donald Geman @ Johns Hopkins
• 1965 B.A. in English Literature from UIUC
• 1970 Ph.D in Mathematics from Northwestern

• 1984 Gibbs Sampling (IEEE Trans. Pattern Anal. Mach. Intell, 6, 721-741, 1984.)
• 1986 Markov Random Field Image Models (PICM. Ed. A.M. Gleason, AMS, Providence)
• 1997 Decision Trees and Random Forest (Neural Computation., 9, 1545-1588, 1997) with Y. Amit

Gibbs Sampling: One variable at a time

[Figure: Basic Gibbs sampling from bivariate Normal]

Basic version:
• One variable at a time
• Special case of Metropolis-Hastings (MH), i.e. acceptance = 1

Block version:
• Sample all independent variables simultaneously

Collapsed version:
• Trace over (marginalize) some variables, i.e. ignore them

Samplers within Gibbs:
• E.g. sample some variables with MH

Basic Example: Sample from Bivariate Normal Distribution

Example inspired by: MCMC: The Gibbs Sampler, The Clever Machine, https://theclevermachine.wordpress.com/2012/11/05/mcmc-the-gibbs-sampler/

Q0/ How to sample $x$ from the standard normal distribution $\mathcal{N}(\mu = 0, \sigma = 1)$?

A0/ np.random.randn() samples from

$$P(x) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left[-\frac{(x-\mu)^2}{2\sigma^2}\right].$$

The bivariate normal distribution is the generalization of the normal distribution to two variables:

$$P(x_1, x_2) = \mathcal{N}(\mu_1, \mu_2, \Sigma) = \frac{1}{2\pi\sigma_1\sigma_2\sqrt{1-\rho^2}} \exp\left[-\frac{z}{2(1-\rho^2)}\right],$$

where

$$z = \frac{(x_1-\mu_1)^2}{\sigma_1^2} + \frac{(x_2-\mu_2)^2}{\sigma_2^2} - \frac{2\rho(x_1-\mu_1)(x_2-\mu_2)}{\sigma_1\sigma_2} \quad\text{and}\quad \Sigma = \begin{pmatrix} \sigma_1^2 & \rho\sigma_1\sigma_2 \\ \rho\sigma_1\sigma_2 & \sigma_2^2 \end{pmatrix}.$$

For simplicity, let $\mu_1 = \mu_2 = 0$ and $\sigma_1 = \sigma_2 = 1$, then:

$$\ln P(x_1, x_2) = -\frac{x_1^2 - 2\rho x_1 x_2 + x_2^2}{2(1-\rho^2)} + \text{const.}$$

Q/ How to sample $x_1, x_2$ from $P(x_1, x_2)$?

Basic Example: Sample from Bivariate Normal Distribution

The joint probability distribution of $x_1, x_2$ has log:

$$\ln P(x_1, x_2) = -\frac{x_1^2 - 2\rho x_1 x_2 + x_2^2}{2(1-\rho^2)} + \text{const.}$$

Q/ How to sample $x_1, x_2$ from $P(x_1, x_2)$?

A/ Gibbs sampling.
• Fix x2, sample x1 from $P(x_1|x_2)$
• Fix x1, sample x2 from $P(x_2|x_1)$
• Rinse and repeat

The full conditional probability distribution of $x_1$ has log:

$$\ln P(x_1|x_2) = -\frac{x_1^2 - 2\rho x_1 x_2}{2(1-\rho^2)} + \text{const.} = -\frac{(x_1-\rho x_2)^2}{2(1-\rho^2)} + \text{const.} \;\Rightarrow\; P(x_1|x_2) = \mathcal{N}\left(\mu = \rho x_2,\ \sigma = \sqrt{1-\rho^2}\right)$$

`new_x1 = np.sqrt(1-rho*rho) * np.random.randn() + rho*x2`
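The alternating updates can be written out as a short loop. This is a minimal sketch, assuming zero means and unit variances as on the slide; the function name `gibbs_bivariate_normal`, the starting point (0, 0), the seed, and the sample count are illustrative choices, not taken from the slides.

```python
import numpy as np

def gibbs_bivariate_normal(rho, n_samples, x1=0.0, x2=0.0, seed=0):
    """Gibbs-sample a zero-mean, unit-variance bivariate normal with correlation rho."""
    rng = np.random.default_rng(seed)
    cond_std = np.sqrt(1.0 - rho * rho)  # std of each full conditional
    samples = np.empty((n_samples, 2))
    for t in range(n_samples):
        # Fix x2, draw x1 from P(x1 | x2) = N(mean = rho * x2, std = sqrt(1 - rho^2))
        x1 = cond_std * rng.standard_normal() + rho * x2
        # Fix x1, draw x2 from P(x2 | x1) = N(mean = rho * x1, std = sqrt(1 - rho^2))
        x2 = cond_std * rng.standard_normal() + rho * x1
        samples[t] = (x1, x2)
    return samples

samples = gibbs_bivariate_normal(rho=0.8, n_samples=20000)
# The empirical correlation of the chain should be close to rho.
print(np.corrcoef(samples.T)[0, 1])
```

Every draw is accepted, which is the sense in which Gibbs sampling is a Metropolis-Hastings scheme with acceptance equal to 1.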

Basic Example: Sample from Bivariate Normal Distribution

Fixing $x_2$ shifts the mean of $x_1$ and changes its variance.

[Figure: $\rho = 0.8$]

Basic Example: Sample from Bivariate Normal Distribution

Samples from the Gibbs sampler are more strongly autocorrelated than those from numpy's built-in multivariate_normal sampler, but much less so than those from naïve Metropolis (reversible moves, $A = \min\left(1, \frac{P(\mathbf{x}')}{P(\mathbf{x})}\right)$).

Both Gibbs and Metropolis still fail when the correlation $\rho$ between the variables is too high.
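For comparison, a naïve Metropolis sampler for the same target might look like the sketch below. The uniform box proposal, the step size of 1.0, the seed, and the helper names `log_p`, `metropolis`, and `lag1_autocorr` are assumptions made for illustration; the slides only specify reversible moves and the acceptance rule. The lag-1 autocorrelation is one simple way to quantify how correlated successive samples are.

```python
import numpy as np

def log_p(x, rho):
    """Unnormalized log density of the standard bivariate normal with correlation rho."""
    return -(x[0] ** 2 - 2 * rho * x[0] * x[1] + x[1] ** 2) / (2 * (1 - rho ** 2))

def metropolis(rho, n_samples, step=1.0, seed=0):
    """Naive Metropolis with a symmetric (reversible) uniform proposal."""
    rng = np.random.default_rng(seed)
    x = np.zeros(2)
    samples = np.empty((n_samples, 2))
    n_accept = 0
    for t in range(n_samples):
        proposal = x + step * rng.uniform(-1.0, 1.0, size=2)
        log_ratio = log_p(proposal, rho) - log_p(x, rho)
        # Accept with probability A = min(1, P(x') / P(x))
        if rng.uniform() < np.exp(min(0.0, log_ratio)):
            x = proposal
            n_accept += 1
        samples[t] = x  # a rejected move repeats the current point
    return samples, n_accept / n_samples

def lag1_autocorr(series):
    """Lag-1 autocorrelation of a 1D chain."""
    centered = series - series.mean()
    return np.dot(centered[:-1], centered[1:]) / np.dot(centered, centered)

mh_samples, acc_rate = metropolis(rho=0.8, n_samples=20000)
print(acc_rate, lag1_autocorr(mh_samples[:, 0]))
```

Running the same `lag1_autocorr` on a Gibbs chain for the same target gives a like-for-like comparison of the two samplers.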

Model Example: Train a Change-point Model with Bayesian Inference

Example inspired by: Ilker Yildirim’s notes on Gibbs sampling, http://www.mit.edu/~ilkery/papers/GibbsSampling.pdf

Bayesian Inference: Improve ‘guess’ model with data.

The question that a change-point model answers: when did a change occur to the distribution of a random variable?

How to estimate the change point $n$ from observations?

Model Example: Train a Change-point Model with Bayesian Inference

• Change-point model: a particular probability distribution of observables and model parameters (Gamma prior, Poisson likelihood):

$$P(x_0, x_1, \ldots, x_{N-1}, \lambda_1, \lambda_2, n) = \prod_{i=0}^{n-1} \mathrm{Poisson}(x_i; \lambda_1) \prod_{i=n}^{N-1} \mathrm{Poisson}(x_i; \lambda_2)\, \mathrm{Gamma}(\lambda_1; a=2, b=1)\, \mathrm{Gamma}(\lambda_2; a=2, b=1)\, \mathrm{Uniform}(n; N)$$

where

$$\mathrm{Poisson}(x; \lambda) = \frac{e^{-\lambda}\lambda^{x}}{x!}, \qquad \mathrm{Gamma}(\lambda; a, b) = \frac{1}{\Gamma(a)}\, b^{a} \lambda^{a-1} \exp(-b\lambda), \qquad \mathrm{Uniform}(n; N) = 1/N.$$

[Figure labels: $n$, $\lambda_1$, $\lambda_2$]

• Without observation, model parameters come from the prior distribution (the guess):

$$P(\lambda_1, \lambda_2, n) = \mathrm{Gamma}(\lambda_1; a=2, b=1)\, \mathrm{Gamma}(\lambda_2; a=2, b=1)\, \mathrm{Uniform}(n; N)$$

• After observations, model parameters should be sampled from the posterior distribution:

$$P(\lambda_1, \lambda_2, n \mid x_0, x_1, \ldots, x_{N-1})$$

Q/ How to sample from the joint posterior distribution of $\lambda_1, \lambda_2, n$?

Q/ What is the full conditional probability of $\lambda_1$?
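To make the model concrete, the sketch below draws synthetic counts from a change-point process and evaluates the log of the joint density for a given parameter setting. The function names `simulate_change_point` and `log_joint`, and the values N = 50, n = 26, and rates 2 and 8, are hypothetical choices for illustration; only the Gamma(a = 2, b = 1) and uniform priors come from the slides.

```python
import math
import numpy as np

def simulate_change_point(N, n, lam1, lam2, seed=0):
    """Draw N Poisson counts whose rate switches from lam1 to lam2 at index n."""
    rng = np.random.default_rng(seed)
    return np.concatenate([rng.poisson(lam1, size=n), rng.poisson(lam2, size=N - n)])

def log_gamma_pdf(lam, a, b):
    """Log of Gamma(lam; a, b) with shape a and rate b."""
    return a * math.log(b) - math.lgamma(a) + (a - 1) * math.log(lam) - b * lam

def log_joint(x, lam1, lam2, n, a=2.0, b=1.0):
    """Log of the joint density P(x, lam1, lam2, n) of the change-point model."""
    N = len(x)
    log_fact = sum(math.lgamma(xi + 1) for xi in x)  # sum of ln(x_i!)
    log_lik = (x[:n].sum() * math.log(lam1) - n * lam1
               + x[n:].sum() * math.log(lam2) - (N - n) * lam2
               - log_fact)
    log_prior = log_gamma_pdf(lam1, a, b) + log_gamma_pdf(lam2, a, b) - math.log(N)
    return log_lik + log_prior

x = simulate_change_point(N=50, n=26, lam1=2.0, lam2=8.0)
# The true change point should score higher than a badly misplaced one.
print(log_joint(x, 2.0, 8.0, 26), log_joint(x, 2.0, 8.0, 10))
```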

Model Example: Train a Change-point Model with Bayesian Inference

Gibbs sampling requires full conditionals:

$$\ln P(\lambda_1 \mid \lambda_2, n, \mathbf{x}) = \ln \mathrm{Gamma}\left(\lambda_1;\ a + \sum_{i=0}^{n-1} x_i,\ b + n\right)$$

$$\ln P(\lambda_2 \mid \lambda_1, n, \mathbf{x}) = \ln \mathrm{Gamma}\left(\lambda_2;\ a + \sum_{i=n}^{N-1} x_i,\ b + N - n\right)$$

$$\ln P(n \mid \lambda_1, \lambda_2, \mathbf{x}) = \mathrm{mess}(n, \lambda_1, \lambda_2, \mathbf{x})$$

Q/ How to sample this mess?!

A/ In general: Metropolis within Gibbs. In this case: brute-force $P(n \mid \lambda_1, \lambda_2, \mathbf{x})$, $\forall n = 0, \ldots, N-1$, because N is rather small.
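Putting the three full conditionals together gives the sketch below. It assumes that, up to a constant independent of the change point, the log-conditional of the change point is (sum of counts before it) × ln(rate 1) − (number of points before it) × rate 1 + (sum of counts from it onward) × ln(rate 2) − (number of remaining points) × rate 2, which follows from the Poisson likelihood with the flat prior. The function name `gibbs_change_point`, the iteration count, the burn-in length, and the synthetic data are illustrative assumptions, not the author's implementation.

```python
import numpy as np

def gibbs_change_point(x, n_iter=2000, a=2.0, b=1.0, seed=0):
    """Gibbs sampler for (lam1, lam2, n) in the Poisson change-point model."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x)
    N = len(x)
    cumsum = np.concatenate([[0], np.cumsum(x)])  # cumsum[k] = sum of x[:k]
    total = cumsum[-1]
    candidates = np.arange(N)  # every allowed change point n = 0, ..., N-1
    n = N // 2                 # arbitrary starting change point
    trace = np.empty((n_iter, 3))
    for t in range(n_iter):
        # lam1 | n, x ~ Gamma(shape = a + sum_{i<n} x_i, rate = b + n)
        lam1 = rng.gamma(a + cumsum[n], 1.0 / (b + n))
        # lam2 | n, x ~ Gamma(shape = a + sum_{i>=n} x_i, rate = b + N - n)
        lam2 = rng.gamma(a + total - cumsum[n], 1.0 / (b + N - n))
        # n | lam1, lam2, x: brute-force the unnormalized log-probability of every n
        log_p = (cumsum[:N] * np.log(lam1) - candidates * lam1
                 + (total - cumsum[:N]) * np.log(lam2) - (N - candidates) * lam2)
        p = np.exp(log_p - log_p.max())  # subtract the max for numerical stability
        n = rng.choice(candidates, p=p / p.sum())
        trace[t] = (lam1, lam2, n)
    return trace

# Synthetic data: 26 counts at rate 2 followed by 24 counts at rate 8 (hypothetical values).
data_rng = np.random.default_rng(1)
x = np.concatenate([data_rng.poisson(2.0, 26), data_rng.poisson(8.0, 24)])
trace = gibbs_change_point(x)
burn = 500  # discard early iterations as burn-in
print(trace[burn:, :2].mean(axis=0), np.median(trace[burn:, 2]))
```

Note that numpy's `gamma` takes a scale parameter, so the rate from the full conditional is passed as its reciprocal.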

Model Example: Train a Change-point Model with Bayesian Inference

[Figure: Model sampled from Metropolis sampler]

[Figure: $\lambda_1$ samples from Gibbs and naïve Metropolis]
