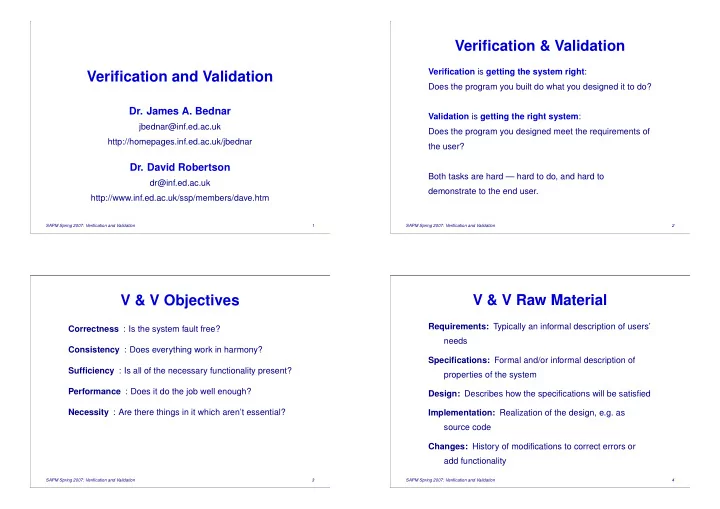

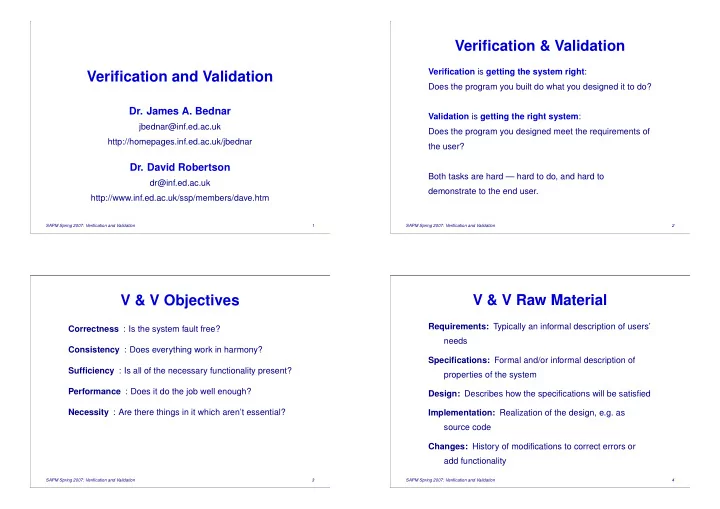

Verification and Validation

Dr. James A. Bednar
jbednar@inf.ed.ac.uk
http://homepages.inf.ed.ac.uk/jbednar

Dr. David Robertson
dr@inf.ed.ac.uk
http://www.inf.ed.ac.uk/ssp/members/dave.htm

SAPM Spring 2007: Verification and Validation

Verification & Validation

Verification is getting the system right: Does the program you built do what you designed it to do?

Validation is getting the right system: Does the program you designed meet the requirements of the user?

Both tasks are hard: hard to do, and hard to demonstrate to the end user.

V & V Objectives

Correctness: Is the system fault free?
Consistency: Does everything work in harmony?
Sufficiency: Is all of the necessary functionality present?
Performance: Does it do the job well enough?
Necessity: Are there things in it which aren't essential?

V & V Raw Material

Requirements: Typically an informal description of users' needs
Specifications: Formal and/or informal description of properties of the system
Design: Describes how the specifications will be satisfied
Implementation: Realization of the design, e.g. as source code
Changes: History of modifications to correct errors or add functionality

V & V Approaches

Proof of correctness: Formally demonstrate match between program, specifications, and requirements
Testing: Verify a finite list of test cases
Technical reviews: Structured group review meetings
Simulation and prototyping: Testing the design
Requirements tracing: Relating software/design structures (e.g. modules, use cases) back to requirements

Gold Standard: Formal Proofs

The idea of formally proving correctness is appealing:
• Represent problem domain and user requirements in logic, e.g. first-order predicate calculus
• Represent program specifications in logic, and prove that any program meeting these specifications will satisfy the requirements
• Define formal semantics for all the primitives in your programming language
• Prove that for all inputs your program will meet the specifications

Problems With Formal Proofs

Unfortunately, complete formal proofs of large systems are rare and extremely difficult because:
• Converting informal user requirements to formal logic can be as error prone as converting to a program
• Specifications for realistic programs quickly become too complicated for humans or computers to construct proofs
• Developing a logic-based semantics for all languages and components involved in a large system is daunting
• It is difficult to get end users to validate and approve logic-based representations

Practical Formal Methods

"Lightweight" applications of formal methods that do not depend on proving complete program correctness can still be useful (Robertson & Agusti 1999).

E.g. one can formalize some of the trickier parts of the domain and requirements, to clarify what is required before coding.

Also, it can be easier to prove that two programs are equivalent than to prove that either matches a specification. This can help show that a heavily optimized function matches a simpler but slower equivalent.
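As a rough illustration of comparing an optimized function against a simpler equivalent, the sketch below checks two bit-counting routines against each other on every 16-bit input. This is an exhaustive comparison over a small finite domain, not a formal proof, and all names (`popcount_simple`, `popcount_fast`, `equivalent_on_all_inputs`) are hypothetical choices made for this example rather than anything from the slides.

```cpp
#include <cstdint>

// Reference version: inspect each of the 16 bits in turn.
int popcount_simple(std::uint16_t x) {
    int count = 0;
    for (int bit = 0; bit < 16; ++bit) {
        if ((x >> bit) & 1u) ++count;
    }
    return count;
}

// Optimized version: clear the lowest set bit until none remain,
// so the loop runs once per set bit rather than once per bit position.
int popcount_fast(std::uint16_t x) {
    int count = 0;
    std::uint32_t v = x;
    while (v != 0) {
        v &= v - 1;  // removes the lowest set bit
        ++count;
    }
    return count;
}

// Compare the two versions on every possible 16-bit input.
// Returns true only if they agree everywhere.
bool equivalent_on_all_inputs() {
    for (std::uint32_t v = 0; v <= 0xFFFFu; ++v) {
        std::uint16_t x = static_cast<std::uint16_t>(v);
        if (popcount_simple(x) != popcount_fast(x)) return false;
    }
    return true;
}
```

The check is only feasible because the input domain is tiny; for larger domains the same comparison would have to be replaced by sampling or by a genuine equivalence proof.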

Cleanroom Software Methodology

Example: Cleanroom is a mostly formal SW development methodology for avoiding defects by developing in an "ultra-clean" atmosphere (Linger 1994). It is based on:
• Formal specification
• Incremental development (perhaps partitioned by modules)
• Structured programming and stepwise refinement (so both structural elements and design choices are constrained)
• Static verification (e.g. using proof of correctness)
• Statistical testing of integrated system, but no unit testing

Testing

Large, complicated (e.g. GUI-based) systems usually use testing for most V & V, as opposed to formal deduction.

Testing is not straightforward either, because it is usually impractical to test a program on all possible inputs and on all possible execution paths (which are both potentially infinite).

How do we choose which finite and feasibly small set of inputs to test? Consider black-box and clear-box (aka white-box) approaches.

Black-Box Testing (1)

In black-box testing, tests are derived from the program specification, viewing the system as a black box:

[Figure: input test data, including inputs triggering anomalies, is fed to the system; the output test results include outputs signalling defects]

• Guess what's inside the box
• Form equivalence partitions over input space

Black-Box Testing (2)

Equivalence partitioning relies on the assumption that we can separate inputs into sets that will produce similar system behaviour.

Then methodically choose test cases from each partition. One method is to choose cases from midpoint (typical) and boundary (atypical) of each partition.

The choice of inputs should not depend on understanding the algorithm inside the box, but on understanding the properties of the input space.
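The midpoint-and-boundary method can be sketched as follows. Everything here is an assumption made for illustration: the `Partition` struct, the `classify` function standing in for the system under test, and the score ranges (including the arbitrary outer bounds of -1000 and 1000) are hypothetical and do not come from the slides.

```cpp
#include <string>
#include <vector>

// One equivalence partition: a closed integer range whose inputs are
// all expected to produce the same output.
struct Partition {
    int lo;
    int hi;
    std::string expected;
};

// Pick the two boundary (atypical) values and the midpoint (typical) value.
std::vector<int> representatives(const Partition& p) {
    return {p.lo, p.lo + (p.hi - p.lo) / 2, p.hi};
}

// Hypothetical system under test: classifies an exam score.
std::string classify(int score) {
    if (score < 0 || score > 100) return "invalid";
    return score >= 50 ? "pass" : "fail";
}

// Hypothetical partitions, derived from the specification of classify
// and not from its implementation.
std::vector<Partition> score_partitions() {
    return {{-1000, -1, "invalid"},
            {0, 49, "fail"},
            {50, 100, "pass"},
            {101, 1000, "invalid"}};
}

// Run every representative of every partition and count mismatches.
int count_failures(const std::vector<Partition>& partitions) {
    int failures = 0;
    for (const Partition& p : partitions) {
        for (int input : representatives(p)) {
            if (classify(input) != p.expected) ++failures;
        }
    }
    return failures;
}
```

Twelve test inputs stand in for the whole input space here; whether that is adequate depends entirely on whether the partitions really do group inputs with similar behaviour.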

Black-Box Example

For example, suppose we are testing a search algorithm which uses a lookup key to find an element in a (non-empty) array.

One partition of the test cases for this example is between inputs which output a found element and those for which there is no element in the array.

Both of the partitions would then be tested.

Clear-Box Testing (1)

Analyse internal structure of code to derive test data.

Example: Binary search routine (Sommerville 2004)

    // elem is assumed to be a type supporting == and <, e.g. int.
    // T must be non-empty and sorted in ascending order.
    void Binary_search (elem key, elem* T, int size, bool &found, int &L)
    {
        int bott = 0;
        int top = size - 1;
        int mid = 0;
        L = (top + bott) / 2;
        found = (T[L] == key);
        while (bott <= top && !found) {
            mid = (top + bott) / 2;   // midpoint of the remaining range
            if ( T[mid] == key ) {
                found = true;
                L = mid;
            }
            else if ( T[mid] < key )
                bott = mid + 1;       // key can only be in the upper half
            else
                top = mid - 1;        // key can only be in the lower half
        }
    }

Clear-Box Testing (2)

Think of program in terms of flow graphs.

[Figure: flow-graph shapes for if-then-else, while-loop, and case-split]

Clear-Box Testing (3)

Now draw a flow graph for the program:

[Figure: flow graph of the binary search routine with nodes 1 to 13 and branch labels "while bott <= top loop", "if not found then", "if T[mid] == key then", and "if T[mid] < key then"]
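To make the two black-box partitions (key found, key not found) concrete, here is a minimal sketch built around a self-contained variant of the binary search routine. It assumes `elem` is `int`, computes the midpoint as `(top + bott) / 2`, and narrows the range on both sides; the wrapper `search_index` is a hypothetical helper added only to make the checks easy to write.

```cpp
#include <vector>

using elem = int;  // assumption: the slides leave elem unspecified

// Binary search over a non-empty array sorted in ascending order.
// On success, found is true and L holds the index of key.
void Binary_search(elem key, const elem* T, int size, bool& found, int& L) {
    int bott = 0;
    int top = size - 1;
    L = (top + bott) / 2;
    found = (T[L] == key);
    while (bott <= top && !found) {
        int mid = (top + bott) / 2;
        if (T[mid] == key) {
            found = true;
            L = mid;
        } else if (T[mid] < key) {
            bott = mid + 1;  // discard the lower half
        } else {
            top = mid - 1;   // discard the upper half
        }
    }
}

// Hypothetical helper: index of key in sorted, or -1 if it is absent.
int search_index(const std::vector<elem>& sorted, elem key) {
    bool found = false;
    int L = 0;
    Binary_search(key, sorted.data(), static_cast<int>(sorted.size()), found, L);
    return found ? L : -1;
}
```

Test inputs for the "found" partition would then include the first, middle, and last elements (boundary and midpoint cases), while the "not found" partition would include keys below, between, and above the stored values, plus a single-element array for each partition.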

Clear-Box Testing (4)

The paths through this flow graph are:
• 1,2,3,4,12,13
• 1,2,3,5,6,11,2,12,13
• 1,2,3,5,7,8,10,11,2,12,13
• 1,2,3,5,7,9,10,11,2,12,13

If we follow all these paths we know:
• Every statement in the routine has been executed at least once
• Every branch has been exercised for a true/false condition

These tests complement black-box testing.

Testing Levels

Unit testing: Conformance of module to specification
Integration testing: Checking that modules work together
System testing: Concentrates on system rather than component capabilities
Regression testing: Re-doing previous tests to confirm that changes haven't undermined functionality

Unit Testing

It is extremely useful to develop unit tests while developing components (or even before), and to preserve them permanently. Unit tests:
• Help specify what the component should do
• Are faster and simpler for debugging than whole systems
• Preserve test code you would be writing anyway, for obscure cases, bug fixes, etc.
• Help show if a change to the component has broken it
• Provide an example of using the component outside of the system for which it has been developed

Integration Testing

Integration testing focuses on errors in interfaces between components, e.g.:

Import/export type/range errors: some of these can be detected by compilers or static checkers
Import/export representation errors: e.g. an "elapsed time" variable exported in milliseconds and imported as seconds
Timing errors: in real-time systems where producer and consumer of data work at different speeds
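One way the "elapsed time" representation error might be turned into something a compiler can detect is to carry the unit in the type. The sketch below uses the standard `std::chrono` duration types; the producer and consumer functions (`exported_elapsed_time`, `seconds_remaining`, `remaining_after_export`) and their values are hypothetical and exist only for this example.

```cpp
#include <chrono>

// Hypothetical producer component: exports elapsed time in milliseconds.
std::chrono::milliseconds exported_elapsed_time() {
    return std::chrono::milliseconds(2500);
}

// Hypothetical consumer component: works in whole seconds.
long long seconds_remaining(std::chrono::seconds elapsed,
                            std::chrono::seconds budget) {
    return (budget - elapsed).count();
}

// Glue code at the interface between the two components.
long long remaining_after_export() {
    using namespace std::chrono;
    // Passing exported_elapsed_time() directly would not compile, because
    // milliseconds do not convert implicitly to seconds (the conversion
    // would lose precision). The unit change has to be written explicitly:
    seconds elapsed = duration_cast<seconds>(exported_elapsed_time());
    return seconds_remaining(elapsed, seconds(10));
}
```

With plain `int` parameters the same mismatch would compile silently and could only be caught by an integration test; here the explicit `duration_cast` marks where the truncation to whole seconds happens.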
