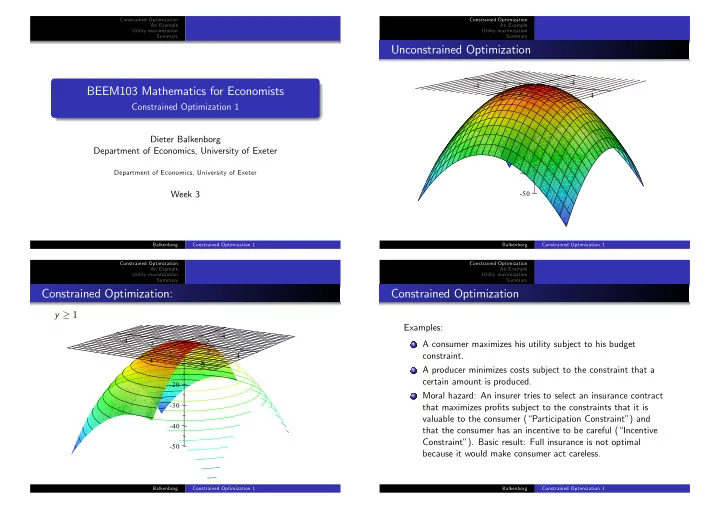

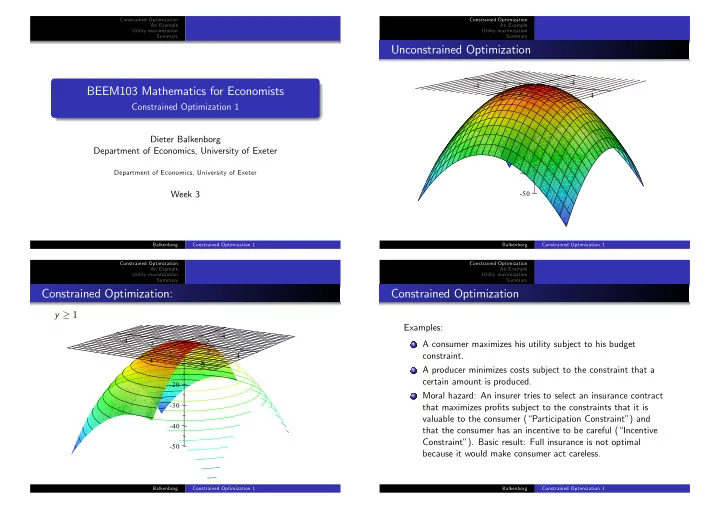

**Constrained Optimization 1**

BEEM103 Mathematics for Economists. Dieter Balkenborg, Department of Economics, University of Exeter. Week 3.

[Figure: Unconstrained Optimization. Surface plot of $z$ over the $(x, y)$-plane.]

[Figure: Constrained Optimization with the constraint $y \ge 1$. Surface plot of $z$ over the $(x, y)$-plane.]

**Constrained Optimization**

Examples:

1. A consumer maximizes his utility subject to his budget constraint.
2. A producer minimizes costs subject to the constraint that a certain amount is produced.
3. Moral hazard: An insurer tries to select an insurance contract that maximizes profits subject to the constraints that it is valuable to the consumer ("Participation Constraint") and that the consumer has an incentive to be careful ("Incentive Constraint"). Basic result: Full insurance is not optimal because it would make the consumer act carelessly.

**Constrained Optimization Problem**

Objective: Find the (absolute) maximum of the function
$$z = f(x, y)$$
subject to the inequality constraints
$$g_1(x, y) \ge 0, \quad g_2(x, y) \ge 0, \quad \dots, \quad g_K(x, y) \ge 0.$$
Thus we look for a pair $(x^*, y^*)$ satisfying the constraints such that, for all other pairs $(x, y)$ satisfying the constraints,
$$f(x^*, y^*) \ge f(x, y).$$
$f(x, y)$ is called the "objective function", and I call $g_1(x, y), \dots, g_K(x, y)$ the "constraining functions" of the problem.

[Figure: The constraints carve out a region of the plane. The curves $g_1(x,y)=0$, $g_2(x,y)=0$ and $g_5(x,y)=0$ separate the sides where each $g_k$ is positive or negative; the region where all constraints are satisfied is marked.]

**Example 1: Consumer Optimization**

A consumer wants to maximize his utility
$$u(x, y) = (x + 1)(y + 1)$$
subject to his budget constraint
$$b - p_x x - p_y y \ge 0$$
and the non-negativity constraints $x \ge 0$, $y \ge 0$.

[Figure: Example 1 in the $(x, y)$-plane, both axes from 0 to 3.]

**Example 2: Cost Minimization**

A producer with production function $Q(K, L) = K^{1/6} L^{1/2}$ in a perfectly competitive market wants to minimize costs subject to producing at least $Q_0$ units:

Maximize $-(rK + wL)$ subject to $Q(K, L) - Q_0 \ge 0$, $K \ge 0$, $L \ge 0$.
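To make the definition of a constrained maximum concrete, here is a crude brute-force sketch for Example 1: it scans a grid of points, discards those violating any constraint $g_k(x, y) \ge 0$, and keeps the feasible point with the highest utility. The income and prices ($b = 4$, $p_x = 1$, $p_y = 2$), the grid step, the tolerance and the function names are hypothetical choices for illustration; a grid search only approximates the maximum and is not the method developed in these notes.

```python
def utility(x, y):
    # Objective function of Example 1: u(x, y) = (x + 1)(y + 1)
    return (x + 1) * (y + 1)

def constraints(x, y, b, px, py):
    # Constraining functions g_k(x, y); a point is feasible if all are >= 0
    return [b - px * x - py * y, x, y]

def grid_search_max(b=4.0, px=1.0, py=2.0, step=0.05, tol=1e-9):
    """Scan a grid and return the feasible point with the highest utility.

    b, px, py and step are hypothetical illustration values.
    """
    best_point, best_value = None, float("-inf")
    n_x = int(b / px / step) + 1  # x can be at most b / px
    n_y = int(b / py / step) + 1  # y can be at most b / py
    for i in range(n_x + 1):
        for j in range(n_y + 1):
            x, y = i * step, j * step
            # tol absorbs floating-point rounding on the budget line
            if all(g >= -tol for g in constraints(x, y, b, px, py)):
                value = utility(x, y)
                if value > best_value:
                    best_point, best_value = (x, y), value
    return best_point, best_value

point, value = grid_search_max()
print(point, value)
```

For these hypothetical numbers the scan lands on $(x, y) = (2.5, 0.75)$ with utility $6.125$: the budget constraint binds there, while the two non-negativity constraints do not.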

**Example 3: Shortest Route**

A swimmer who is currently at the coordinates $(a, b)$ wants to swim along the shortest route to the square island with corner points $(-1, -1)$, $(1, -1)$, $(-1, 1)$, $(1, 1)$. Instead of minimizing the distance, we can maximize the negative of the square of the distance,
$$-(x-a)^2 - (y-b)^2,$$
which has the same maximizer, since squaring is increasing for non-negative numbers. The constraints are
$$x + 1 \ge 0, \qquad 1 - x \ge 0, \qquad y + 1 \ge 0, \qquad 1 - y \ge 0,$$
so $(x, y)$ must be a point in the square carved out by the solutions to the four inequalities.

[Figure: The Island. The square bounded by the lines $x+1=0$, $1-x=0$, $y+1=0$ and $1-y=0$; axes $x$ and $y$ from $-3$ to $3$.]

**The Swimmer**

[Figure: The Swimmer. The island together with the swimmer's position $(a, b)$.]

**Binding constraints**

A constraint is binding at the optimum if it holds with equality at the optimum. In the picture of the swimmer only one of the four constraints is binding. All non-binding constraints can be ignored: if they are left out, the optimum does not change.
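For this particular island the optimum can also be found directly: the point of an axis-aligned square closest to $(a, b)$ is obtained by clamping each coordinate into $[-1, 1]$. The sketch below uses that shortcut (a standard fact about the closest point in a box, not the Lagrangian method) and then lists which of the four constraints hold with equality, i.e. which are binding. The function names and the swimmer position $(2, 0.5)$ are hypothetical.

```python
def closest_point_on_island(a, b):
    """Closest point of the square [-1, 1] x [-1, 1] to the swimmer at (a, b)."""
    def clamp(t):
        # Move t into the interval [-1, 1]
        return max(-1.0, min(1.0, t))
    return clamp(a), clamp(b)

def binding_constraints(x, y):
    # The four constraining functions of Example 3, all required to be >= 0
    g = {"x+1": x + 1, "1-x": 1 - x, "y+1": y + 1, "1-y": 1 - y}
    # Binding means equality; exact comparison is safe here because
    # clamping returns exactly -1.0 or 1.0 on the boundary
    return [name for name, value in g.items() if value == 0]

point = closest_point_on_island(2.0, 0.5)
print(point, binding_constraints(*point))
```

With the swimmer at $(2, 0.5)$, east of the island, the closest point is $(1, 0.5)$ and only the constraint $1 - x \ge 0$ binds: an instance of the situation above in which just one of the four constraints is binding.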

**The Lagrangian Approach**

The Lagrangian approach transforms a constrained optimization problem into

1. an unconstrained optimization problem and
2. a pricing problem.

The new function to be optimized is called the Lagrangian:
$$L(x, y) = f(x, y) + \lambda_1 g_1(x, y) + \lambda_2 g_2(x, y) + \dots + \lambda_K g_K(x, y).$$
For each constraint a shadow price, called a Lagrange multiplier, is introduced. In the new unconstrained optimization problem a constraint can be violated, but only at a cost. The pricing problem is to find shadow prices for the constraints such that the solutions to the new and the original optimization problem are identical.

**Theorem**

Suppose we are given numbers $\lambda_1, \lambda_2, \dots, \lambda_K$ and a pair of numbers $(x^*, y^*)$ such that

1. $\lambda_1, \lambda_2, \dots, \lambda_K \ge 0$, i.e., the Lagrange multipliers are non-negative;
2. $(x^*, y^*)$ satisfies all the constraints, i.e., $g_k(x^*, y^*) \ge 0$ for all $1 \le k \le K$;
3. $(x^*, y^*)$ is an unconstrained maximum of the Lagrangian $L(x, y)$;
4. the complementarity conditions $\lambda_k g_k(x^*, y^*) = 0$ are satisfied for $1 \le k \le K$, i.e., either the $k$-th Lagrange multiplier is zero or the $k$-th constraint binds.

Then $(x^*, y^*)$ is a maximum for the constrained maximization problem.

*Proof.* Because of the complementarity conditions, $L(x^*, y^*) = f(x^*, y^*)$. By condition 3, $L(x, y) \le L(x^*, y^*)$ for any $(x, y)$. If $(x, y)$ satisfies all constraints, then $\lambda_k g_k(x, y) \ge 0$ for each constraint, since the $\lambda_k$ are non-negative. Hence $f(x, y) \le L(x, y)$, and therefore
$$f(x, y) \le L(x, y) \le L(x^*, y^*) = f(x^*, y^*)$$
for any point $(x, y)$ satisfying the constraints.
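The four conditions of the theorem can be checked mechanically for a given candidate. The sketch below does this for the swimmer problem of Example 3 with the hypothetical position $(a, b) = (2, 0.5)$, the candidate $(x^*, y^*) = (1, 0.5)$ and multipliers $\lambda = (0, 2, 0, 0)$; the value $\lambda_2 = 2$ is the one that makes $\partial L / \partial x = -2(x - a) + \lambda_1 - \lambda_2$ vanish at $x = 1$. Condition 3 is only tested on a finite grid, so this is a numerical sanity check rather than a proof; the helper names, grid and tolerance are assumptions.

```python
def f(x, y, a=2.0, b=0.5):
    # Objective of Example 3: negative squared distance to (a, b)
    return -(x - a) ** 2 - (y - b) ** 2

def g(x, y):
    # Constraining functions g_1, ..., g_4 of Example 3
    return [x + 1, 1 - x, y + 1, 1 - y]

def lagrangian(x, y, lam):
    # L(x, y) = f(x, y) + sum_k lambda_k g_k(x, y)
    return f(x, y) + sum(l * gk for l, gk in zip(lam, g(x, y)))

def check_theorem(x_star, y_star, lam, tol=1e-12):
    """Return (cond1, cond2, cond3, cond4) for a candidate point and multipliers."""
    cond1 = all(l >= 0 for l in lam)                     # multipliers non-negative
    cond2 = all(gk >= -tol for gk in g(x_star, y_star))  # candidate is feasible
    # Condition 3, checked only on a grid over [-3, 3] x [-3, 3]
    grid = [i / 10 for i in range(-30, 31)]
    best = lagrangian(x_star, y_star, lam)
    cond3 = all(lagrangian(x, y, lam) <= best + tol for x in grid for y in grid)
    # Complementarity: lambda_k * g_k(x*, y*) = 0 for every k
    cond4 = all(abs(l * gk) <= tol for l, gk in zip(lam, g(x_star, y_star)))
    return cond1, cond2, cond3, cond4

print(check_theorem(1.0, 0.5, (0.0, 2.0, 0.0, 0.0)))
```

All four conditions hold for this candidate. By contrast, the feasible point $(0.5, 0.5)$ with all multipliers zero fails condition 3, since the Lagrangian is then just $f$, which is larger at $(2, 0.5)$.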

**The Method**

1. Make an informed guess about which constraints are binding at the optimum. (Suppose there are $k^*$ such constraints.)
2. Set the Lagrange multipliers for all other constraints to zero, i.e., ignore these constraints.
3. Solve the two first-order conditions $\partial L / \partial x = 0$ and $\partial L / \partial y = 0$ together with the conditions that the $k^*$ constraints are binding. (Notice that we have $2 + k^*$ unknowns and $2 + k^*$ equations; the unknowns are $x$, $y$ and the $k^*$ Lagrange multipliers.)
4. Check whether the solution is indeed an unconstrained maximum of the Lagrangian. (This may be difficult.)
5. Check that the Lagrange multipliers are all non-negative and that the solution $(x^*, y^*)$ satisfies all constraints.
6. If 4. or 5. is violated, start again at 1. with a new guess.

**The Swimmer's Problem**

The Lagrangian is
$$L(x, y) = -(x-a)^2 - (y-b)^2 + \lambda_1 (x + 1) + \lambda_2 (1 - x) + \lambda_3 (y + 1) + \lambda_4 (1 - y).$$
FOC:
$$\frac{\partial L}{\partial x} = -2(x - a) + \lambda_1 - \lambda_2 = 0,$$
$$\frac{\partial L}{\partial y} = -2(y - b) + \lambda_3 - \lambda_4 = 0.$$

**Case 1**

Suppose $-1 < x^* < 1$ and $-1 < y^* < 1$, so none of the four constraints is "binding". In this case the optimum has to be a stationary point of the objective function. This gives the conditions
$$\frac{\partial f}{\partial x} = -2(x - a) = 0, \qquad \frac{\partial f}{\partial y} = -2(y - b) = 0.$$
Unique solution: $(x^*, y^*) = (a, b)$. For this to be the optimum we must have $-1 \le a \le 1$ and $-1 \le b \le 1$.

[Figure: Case 1. The island bounded by the lines $x+1=0$, $1-x=0$, $y+1=0$ and $1-y=0$; axes $x$ and $y$ from $-3$ to $3$.]
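The six steps of the method can be sketched for the swimmer's problem as a loop over guesses. Because the problem separates into an $x$-part and a $y$-part, a guess amounts to choosing, for each coordinate, whether the lower bound binds, the upper bound binds, or neither (both cannot bind at once); the first-order conditions then pin down the coordinate and its multipliers. Step 4 needs no numerical check here: the Lagrangian is $-(x-a)^2 - (y-b)^2$ plus terms linear in $x$ and $y$, hence concave, so any stationary point is an unconstrained maximum. The function names and the order in which guesses are tried are hypothetical choices.

```python
from itertools import product

def solve_coordinate(c, guess):
    """Solve -2(t - c) + lam_low - lam_up = 0 under a guess about binding bounds.

    Returns (t, lam_low, lam_up), where lam_low prices t + 1 >= 0
    and lam_up prices 1 - t >= 0.
    """
    if guess == "lower":  # t + 1 = 0 binds; multiplier of 1 - t >= 0 set to zero
        t = -1.0
        return t, 2 * (t - c), 0.0
    if guess == "upper":  # 1 - t = 0 binds; multiplier of t + 1 >= 0 set to zero
        t = 1.0
        return t, 0.0, -2 * (t - c)
    # No binding bound (Case 1 for this coordinate): stationary point t = c
    return float(c), 0.0, 0.0

def solve_swimmer(a, b):
    """Guess-and-check (steps 1-6) for max -(x-a)^2 - (y-b)^2 on [-1, 1]^2."""
    # Step 1: three possible guesses per coordinate
    for gx, gy in product(["lower", "upper", "none"], repeat=2):
        # Steps 2-3: zero multipliers for non-binding constraints, solve the FOC
        x, lam1, lam2 = solve_coordinate(a, gx)
        y, lam3, lam4 = solve_coordinate(b, gy)
        lams = (lam1, lam2, lam3, lam4)
        # Step 4 holds automatically: the Lagrangian is concave in (x, y)
        # Step 5: multipliers non-negative and all constraints satisfied
        if min(lams) >= 0 and -1 <= x <= 1 and -1 <= y <= 1:
            return (x, y), lams, (gx, gy)
        # Step 6: otherwise try the next guess
    raise ValueError("no guess passed steps 4 and 5")

print(solve_swimmer(2, 0.5))
```

For a swimmer at $(2, 0.5)$ this returns the point $(1, 0.5)$ with multipliers $(0, 2, 0, 0)$. For a swimmer at $(0.3, -0.2)$, already on the island, it ends in Case 1: the point $(a, b)$ itself with all multipliers zero.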
