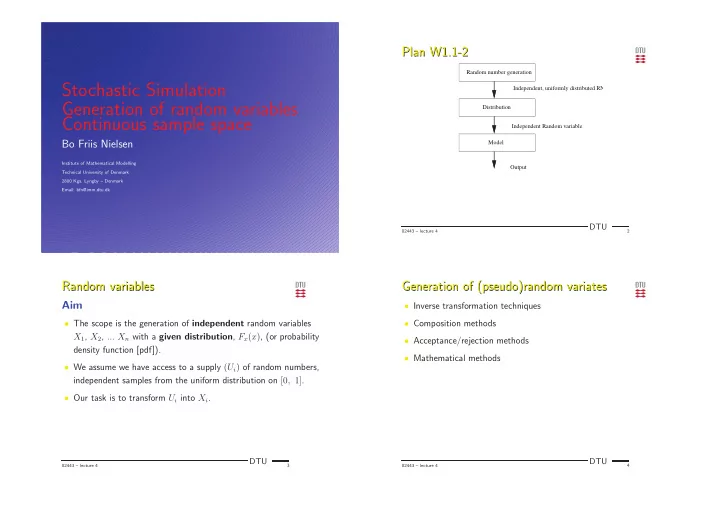

Plan W1.1-2 Plan W1.1-2 Random number generation Stochastic Simulation Independent, uniformly distributed RN Generation of random variables Distribution Continuous sample space Independent Random variable Bo Friis Nielsen Model Institute of Mathematical Modelling Output Technical University of Denmark 2800 Kgs. Lyngby – Denmark Email: bfn@imm.dtu.dk DTU 02443 – lecture 4 2 Random variables Generation of (pseudo)random variates Random variables Generation of (pseudo)random variates Aim • Inverse transformation techniques • The scope is the generation of independent random variables • Composition methods X 1 , X 2 , ... X n with a given distribution , F x ( x ) , (or probability • Acceptance/rejection methods density function [pdf]). • Mathematical methods • We assume we have access to a supply ( U i ) of random numbers, independent samples from the uniform distribution on [0 , 1] . • Our task is to transform U i into X i . DTU DTU 02443 – lecture 4 3 02443 – lecture 4 4

Uniform distribution I Uniform distribution I Inverse transformation Inverse transformation Our norm distribution or building brick, U (0 , 1) The cumulative distribution function (CDF) � � f ( x ) = 1 F ( x ) = x for 0 ≤ x ≤ 1 F ( x ) = P X ≤ x E x = 1 1 V x = 2 12 1 F(x) = P(X<=x) F(x) 0 1 x x x DTU 02443 – lecture 4 5 DTU 02443 – lecture 4 6 The random variable F ( X ) From U to X The random variable F ( X ) From U to X * U = F(X) * U = F(X) U * F(X) = U F(X) −1 X = F (U) X X DTU DTU 02443 – lecture 4 7 02443 – lecture 4 8

Inversion method Inversion method Inversion method Inversion method Assuming C 1 functions (also for g and g − 1 ) and let: The random variable U = F ( X ) � � U=F(X) X = g ( Y ) Y : F Y ( y ) = P Y ≤ y then � � � � � � Y ≤ g − 1 ( x ) = F y ( g − 1 ( x )) F x ( x ) = P X ≤ x = P g ( Y ) ≤ x = P If Y = U then F u ( u ) = u , and F x ( x ) = g − 1 ( x ) . X If � � U = F ( X ) F u ( u ) = P U ≤ u X = F − 1 ( U ) � � � � X ≤ F − 1 ( u ) = F ( F − 1 ( u )) = u F u ( u ) = P F ( X ) ≤ u = P I.e. F ( X ) is uniformly distributed. then X will have the cdf F ( x ) . DTU DTU 02443 – lecture 4 9 02443 – lecture 4 10 Exponential distribution Exponential distribution Uniform distribution II Uniform distribution II The time between events in a Poisson process is exponential distribu- ted. (Arrival time) Now, focus on U ( a, b ) . E X = 1 F − 1 ( u ) = − 1 1 F ( x ) = 1 − exp ( − λx ) λlog (1 − u ) f ( x ) = a ≤ x ≤ b λ b − a So (both 1 − U and U is uniform distributed) F ( x ) = x − a F − 1 ( u ) = a + ( b − a ) u b − a X = − log ( U ) ∈ Ex ( λ ) X = a + ( b − a ) U ∈ U ( a, b ) λ Exponential distribution 1 10 0.9 9 0.8 8 7 0.7 0.6 6 0.5 5 0.4 4 0.3 3 0.2 2 0.1 1 0 0 0 0.5 1 1.5 2 2.5 3 3.5 4 4.5 5 0 0.5 1 1.5 2 2.5 3 3.5 4 4.5 5 DTU DTU 02443 – lecture 4 11 02443 – lecture 4 12

Pareto Pareto Gaussian Gaussian Is often used in connection to description of income (over a certain X a result of many ( ∞ ) independent sources (Central limit level). theorem) � β � k X ∈ N ( µ, σ 2 ) � � U − 1 X ∈ Pa ( k, β ) F ( x ) = 1 − x ≥ β X = β k x G = Φ − 1 ( U ) G ∈ N (0 , 1) X = µ + σG Gaussian distribution 1 k k ( k − 1) 2 ( k − 2) β 2 0.9 E X = V X = k > 1 , 2 k − 1 β 0.8 0.7 0.6 Pareto distribution, Par(1,1) 1 0.5 Pareto with X ≥ 0 0.9 0.4 0.8 0.3 0.7 0.6 � − k 0.2 � 1 + x 0.5 � � U − 1 k − 1 0.1 0.4 F ( x ) = 1 − X = β 0.3 0 β −3 −2 −1 0 1 2 3 0.2 0.1 0 1 2 3 4 5 6 7 8 9 10 DTU DTU 02443 – lecture 4 13 02443 – lecture 4 14 Mathematical Method Mathematical Method Generation of cos and sin Generation of cos and sin • By means of transformation and other techniques we can obtain stochastic variable with a certain distribution. Sine and cosine can be calculated by the following The Box-Muller method A transformation from polar acceptance/rejection algorith m: � ( θ = 2 πU 2 , r = − 2 ln ( U 1 ) ) into Cartesian 1. Generate V 1 , V 2 ∈ U (0 , 1) coordinates ( X = G 1 and Y = G 2 ). 2. Generate R 2 = V 2 1 + V 2 2 cos (2 πU 2 ) G 1 3. If R 2 > 1 goto 1. = � − 2 ln ( U 1 ) G 1 , G 2 ∈ N 0 , 1 G 2 sin (2 πU 2 ) 4. cos (2 πU 2 ) = V 1 R , sin (2 πU 2 ) = V 2 R Central limit theorem n U i − n � G = eg. n = 6 2 i =1 DTU DTU 02443 – lecture 4 15 02443 – lecture 4 16

LN ( α, β 2 ) LN ( α, β 2 ) General and mulitvariate normal distribution General and mulitvariate normal distribution Logarithmic Gaussian, LN ( α, β 2 ) • Generate n independent values from an N (0 , 1) Y ∈ LN ( α, β 2 ) ln ( Y ) ∈ N α, β 2 distribution, Z i inN (0 , 1) . • X i = µ i + � i j =1 c ij Z j • Where c ij are the elements in the Cholesky factorisation of Y = exp ( X ) X = α + β G G ∈ N (0 , 1) Σ , Σ = CC ′ DTU DTU 02443 – lecture 4 17 02443 – lecture 4 18 Composition methods - hyperexponential Composition methods - Erlang distribution Composition methods - hyperexponential Composition methods - Erlang distribution distribution distribution • The Erlang distribution is a special case of the Gamma m m distribution with integer valued shape parameter p i e − λ i x = � � 1 − e − λ i x � � F ( x ) = 1 − p i • An Erlang distributed random variable can be interpreted as a i =1 i =1 Formally we can express sum of independent exponential variables • We can generate an Erlang- n distributed random variate by Z = X I where I ∈ { 1 , 2 , . . . , m } with P { I = i } = p i and X I ∈ exp ( λ I ) adding n exponential random variates. 1. Choose I ∈ { 1 , 2 , . . . , m } with probabilities p i ’s E ( Z ) = n V ( Z ) = n Z ∈ Erl n ( λ ) 2. Z = − 1 λ λ 2 λ i log ( U ) n n − 1 λ log ( U i ) = − 1 � � λ log (Π n Z = X i = i =1 U i ) i =1 i =1 DTU DTU 02443 – lecture 4 19 02443 – lecture 4 20

Composition methods II Composition methods II Acceptance/rejection Acceptance/rejection Generalization: Problem: we would like to generate X from pdf f , but it is much � f ( x ) = f ( x | y ) f ( y ) dy faster to generate Y with pdf g . NB. X and Y have the same sample space. If X | Y : f ( x | y ) Y : f ( y ) f ( y ) (eg. X | µ ∈ N ( µ, σ 2 ) ) Y is typical a parameter g ( y ) ≤ c for all y and some c Generate: • Step 1. Generate Y having density g . • Generate Y from f ( y ) . • Step 2. Generate a random number U • Generate X from f ( x | y ) where Y is used. • If U ≤ f ( Y ) cg ( Y ) set X = Y .Otherwise return to step 1. g ( y ) dy f ( y ) cg ( y ) = f ( y ) dy c DTU DTU 02443 – lecture 4 21 02443 – lecture 4 22 Exercise 3 Exercise 3 Statistics Toolbox Version 4.0 (R13) 20-Jun-2002 • Generate simulated values from the following distributions . ⋄ Exponential distribution . . ⋄ Normal distribution Random Number Generators. ⋄ Pareto distribution, with β = 1 and experiment with different betarnd - Beta random numbers. values of k values: k = 2.05, k = 2.5, k = 3 og k = 4. binornd - Binomial random numbers. • Verify the results by comparing histograms with analytical chi2rnd - Chi square random numbers. results. exprnd - Exponential random numbers. frnd - F random numbers. • For the Pareto distribution compare mean value and variance, gamrnd - Gamma random numbers. with analytical results, which can be calculated as geornd - Geometric random numbers. k − 1 (for k > 1 ) and V ar { X } = β 2 k k E { X } = β ( k − 1) 2 ( k − 2) (for hygernd - Hypergeometric random numbers. k > 2 ) iwishrnd - Inverse Wishart random matrix. lognrnd - Lognormal random numbers. DTU 02443 – lecture 4 23

Exercise 3 continued Exercise 3 continued • For the normal distribution generate 100 95% confidence intervals for the mean and variance based on 10 observations. Discuss the results. DTU 02443 – lecture 4 25

Recommend

More recommend