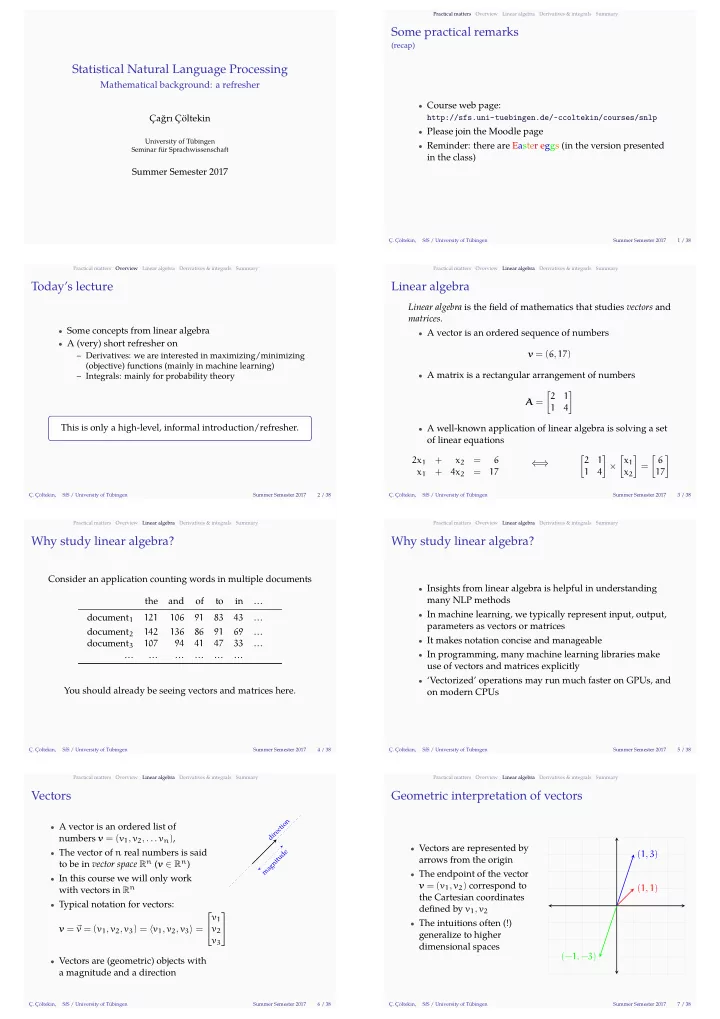

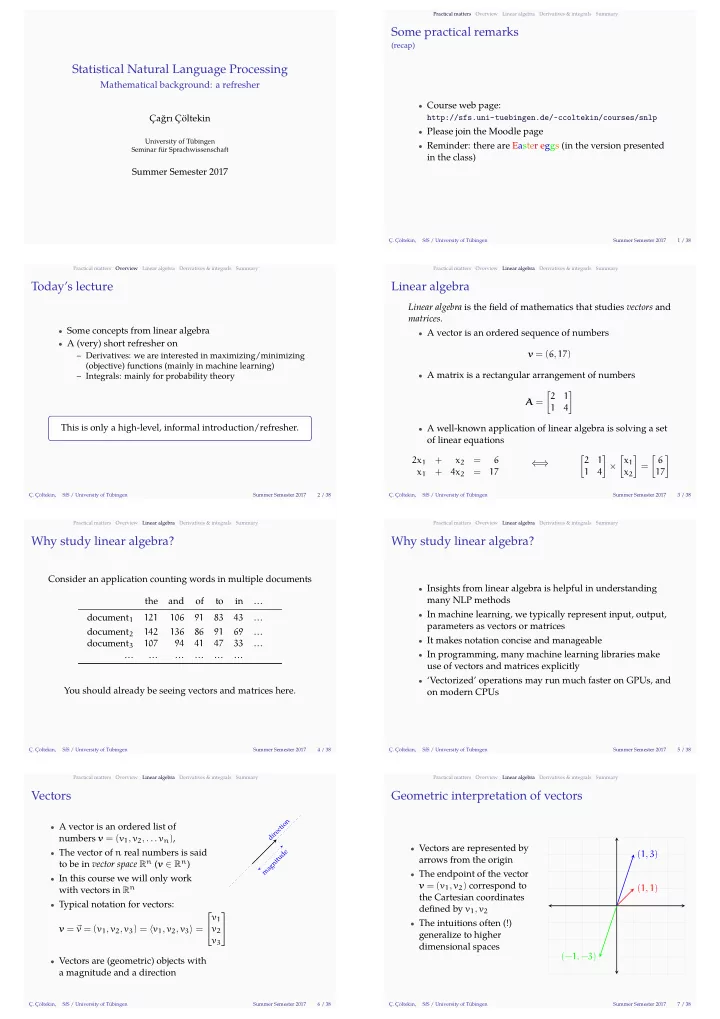

Statistical Natural Language Processing
Mathematical background: a refresher

Çağrı Çöltekin
Seminar für Sprachwissenschaft
University of Tübingen
Summer Semester 2017

Some practical remarks (recap)

- Course web page: http://sfs.uni-tuebingen.de/~ccoltekin/courses/snlp
- Please join the Moodle page
- Reminder: there are Easter eggs (in the version presented in the class)

Today's lecture

- Some concepts from linear algebra
- A (very) short refresher on
  - Derivatives: we are interested in maximizing/minimizing (objective) functions (mainly in machine learning)
  - Integrals: mainly for probability theory

This is only a high-level, informal introduction/refresher.

Linear algebra

Linear algebra is the field of mathematics that studies vectors and matrices.

- A vector is an ordered sequence of numbers: $v = (6, 17)$
- A matrix is a rectangular arrangement of numbers: $A = \begin{bmatrix} 2 & 1 \\ 1 & 4 \end{bmatrix}$
- A well-known application of linear algebra is solving a set of linear equations:

$$
\begin{aligned} 2x_1 + x_2 &= 6 \\ x_1 + 4x_2 &= 17 \end{aligned}
\quad\Longleftrightarrow\quad
\begin{bmatrix} 2 & 1 \\ 1 & 4 \end{bmatrix} \times \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = \begin{bmatrix} 6 \\ 17 \end{bmatrix}
$$
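As a minimal sketch of what solving a set of linear equations involves, the pure-Python function below applies Gaussian elimination (with partial pivoting) to the system $2x_1 + x_2 = 6$, $x_1 + 4x_2 = 17$. The name `solve` is illustrative, and exact `Fraction` arithmetic is an assumption made so that this small example gives exact answers; in practice a library routine such as NumPy's `numpy.linalg.solve` would normally be used.

```python
from fractions import Fraction


def solve(A, b):
    """Solve the square system A x = b by Gaussian elimination.

    A is a list of rows and b the list of right-hand-side values.
    Fraction arithmetic keeps small integer examples exact.
    """
    n = len(A)
    # Augmented matrix [A | b] with exact rational entries.
    M = [[Fraction(a) for a in row] + [Fraction(rhs)] for row, rhs in zip(A, b)]
    for col in range(n):
        # Partial pivoting: use the row with the largest entry in this column.
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if M[pivot][col] == 0:
            raise ValueError("matrix is singular")
        M[col], M[pivot] = M[pivot], M[col]
        # Eliminate this column from every row below the pivot row.
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    # Back substitution, from the last row upwards.
    x = [Fraction(0)] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


A = [[2, 1],
     [1, 4]]
b = [6, 17]
x = solve(A, b)
print(x)  # [Fraction(1, 1), Fraction(4, 1)], i.e. x1 = 1, x2 = 4
```

Substituting back confirms the result: $2 \cdot 1 + 4 = 6$ and $1 + 4 \cdot 4 = 17$.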
Why study linear algebra?

Consider an application counting words in multiple documents:

|            | the | and | of | to | in |
|------------|----:|----:|---:|---:|---:|
| document 1 | 121 | 106 | 91 | 83 | 43 |
| document 2 | 142 | 136 | 86 | 91 | 69 |
| document 3 | 107 |  94 | 41 | 47 | 33 |

You should already be seeing vectors and matrices here.

Why study linear algebra? (continued)

- Insights from linear algebra are helpful in understanding many NLP methods
- In machine learning, we typically represent input, output, and parameters as vectors or matrices
- It makes notation concise and manageable
- In programming, many machine learning libraries make use of vectors and matrices explicitly
- 'Vectorized' operations may run much faster on GPUs, and on modern CPUs

Vectors

- A vector is an ordered list of numbers: $v = (v_1, v_2, \ldots, v_n)$
- The vector of $n$ real numbers is said to be in vector space $\mathbb{R}^n$ ($v \in \mathbb{R}^n$)
- Typical notation for vectors: $v = \vec{v} = (v_1, v_2, v_3) = \langle v_1, v_2, v_3 \rangle = \begin{bmatrix} v_1 \\ v_2 \\ v_3 \end{bmatrix}$
- In this course we will only work with vectors in $\mathbb{R}^n$

Geometric interpretation of vectors

- Vectors are (geometric) objects with a magnitude and a direction
- Vectors are represented by arrows from the origin
- The endpoint of the vector $v = (v_1, v_2)$ corresponds to the Cartesian coordinates defined by $v_1, v_2$
- The intuitions often (!) generalize to higher dimensional spaces

[Figure: the vectors (1, 3), (1, 1) and (−1, −3) drawn as arrows from the origin, with magnitude and direction labelled]
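Putting these slides together, the word-count table can be read as a 3 × 5 matrix: each row is a document vector in $\mathbb{R}^5$, and each column is a word vector in $\mathbb{R}^3$. The sketch below stores it with plain Python lists only to stay dependency-free; the variable names are illustrative.

```python
# Word counts from the table: one row per document, one column per word.
words = ["the", "and", "of", "to", "in"]
counts = [
    [121, 106, 91, 83, 43],  # document 1
    [142, 136, 86, 91, 69],  # document 2
    [107, 94, 41, 47, 33],   # document 3
]

# Each row is a document vector in R^5.
doc1 = counts[0]

# Each column is a word vector in R^3: the word's count in every document.
# zip(*counts) transposes the list of rows into columns.
columns = {w: list(col) for w, col in zip(words, zip(*counts))}

n_docs, n_words = len(counts), len(counts[0])
print(n_docs, "x", n_words)  # 3 x 5
print(doc1)                  # [121, 106, 91, 83, 43]
print(columns["the"])        # [121, 142, 107]
```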

Vector norms

- The norm of a vector is an indication of its size (magnitude)
- The norm of a vector is the distance from its tail to its tip
- Norms are related to distance measures
- Vector norms are particularly important for understanding some machine learning techniques

L2 norm

- The Euclidean norm, or L2 (or $L_2$) norm, is the most commonly used norm
- For $v = (v_1, v_2)$: $\|v\|_2 = \sqrt{v_1^2 + v_2^2}$
- Example: $\|(3, 3)\|_2 = \sqrt{3^2 + 3^2} = \sqrt{18} \approx 4.24$
- The L2 norm is often written without a subscript: $\|v\|$

[Figure: the vector (3, 3) in the x-y plane]

L1 norm

- Another norm we will often encounter is the L1 norm
- For $v = (v_1, v_2)$: $\|v\|_1 = |v_1| + |v_2|$
- Example: $\|(3, 3)\|_1 = |3| + |3| = 6$
- The L1 norm is related to the Manhattan distance

[Figure: the vector (3, 3) in the x-y plane, illustrating the Manhattan distance]

Lp norm

In general, the $L_p$ norm is defined as

$$\|v\|_p = \left( \sum_{i=1}^{n} |v_i|^p \right)^{1/p}$$

We will only work with the L1 and L2 norms, but $L_0$ and $L_\infty$ are also common.
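The norm definitions can be checked numerically. The plain-Python sketch below implements the general $L_p$ norm, with `math.inf` standing in for $L_\infty$ (the largest absolute element). The names `lp_norm` and `distance` are illustrative, not from a particular library. It also shows how a norm induces a distance: the distance between two vectors is the norm of their difference, so $p = 1$ gives the Manhattan distance.

```python
import math


def lp_norm(v, p=2):
    """L_p norm: (sum_i |v_i|^p)^(1/p); p = math.inf gives max_i |v_i|."""
    if p == math.inf:
        return max(abs(x) for x in v)
    if p < 1:
        raise ValueError("the L_p formula gives a norm only for p >= 1")
    return sum(abs(x) ** p for x in v) ** (1 / p)


def distance(u, w, p=2):
    """Distance induced by the L_p norm: the norm of the difference u - w."""
    return lp_norm([a - b for a, b in zip(u, w)], p)


v = (3, 3)
print(lp_norm(v, 1))         # 6.0
print(lp_norm(v, 2))         # approximately 4.2426, i.e. sqrt(18)
print(lp_norm(v, math.inf))  # 3
print(distance((1, 2), (2, 1), p=1))  # 2.0: Manhattan distance
```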
Vector addition and subtraction

For vectors $v = (v_1, v_2)$ and $w = (w_1, w_2)$:

- $v + w = (v_1 + w_1, v_2 + w_2)$, e.g. $(1, 2) + (2, 1) = (3, 3)$
- $v - w = v + (-w)$, e.g. $(1, 2) - (2, 1) = (-1, 1)$

[Figure: v, w, v + w and v − w drawn as arrows]

Multiplying a vector with a scalar

- For a vector $v = (v_1, v_2)$ and a scalar $a$: $av = (av_1, av_2)$
- Multiplying with a scalar 'scales' the vector

[Figure: v = (1, 2) together with 2v and −0.5v]

Dot product

- For vectors $w = (w_1, w_2)$ and $v = (v_1, v_2)$: $wv = w_1 v_1 + w_2 v_2$, or equivalently $wv = \|w\|\,\|v\| \cos\alpha$, where $\alpha$ is the angle between the vectors
- The dot product of two orthogonal vectors is 0
- $ww = \|w\|^2$
- The dot product may be used as a similarity measure between two vectors

[Figure: vectors v and w at angle α, with the projection ‖v‖ cos α of v onto w]

Cosine similarity

- The cosine of the angle between two vectors, $\cos\alpha = \dfrac{vw}{\|v\|\,\|w\|}$, is often used as another similarity metric, called cosine similarity
- The cosine similarity is related to the dot product, but ignores the magnitudes of the vectors
- For unit vectors (vectors of length 1), cosine similarity is equal to the dot product
- The cosine similarity is bounded in the range $[-1, +1]$
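The vector operations on these slides translate directly into a few lines of code. The following is a minimal, dependency-free Python sketch; the function names are illustrative, and NumPy offers equivalents such as `numpy.dot` and `numpy.linalg.norm`. It reproduces the worked examples and compares documents 1 and 2 of the word-count example using the dot product and cosine similarity.

```python
import math


def _same_length(v, w):
    # Element-wise operations are only defined for vectors of equal dimension.
    if len(v) != len(w):
        raise ValueError("vectors must have the same number of elements")


def add(v, w):
    """v + w = (v1 + w1, v2 + w2, ...)"""
    _same_length(v, w)
    return tuple(a + b for a, b in zip(v, w))


def scale(a, v):
    """a v = (a v1, a v2, ...)"""
    return tuple(a * x for x in v)


def sub(v, w):
    """v - w = v + (-w)"""
    return add(v, scale(-1, w))


def dot(v, w):
    """v w = v1 w1 + v2 w2 + ..."""
    _same_length(v, w)
    return sum(a * b for a, b in zip(v, w))


def norm(v):
    """L2 norm, using the identity v v = ||v||^2."""
    return math.sqrt(dot(v, v))


def cosine_similarity(v, w):
    """cos(alpha) = v w / (||v|| ||w||); raises ZeroDivisionError for a zero vector."""
    return dot(v, w) / (norm(v) * norm(w))


print(add((1, 2), (2, 1)))  # (3, 3)
print(sub((1, 2), (2, 1)))  # (-1, 1)
print(dot((1, 0), (0, 1)))  # 0: orthogonal vectors

# Documents 1 and 2 from the word-count table (the, and, of, to, in).
doc1 = (121, 106, 91, 83, 43)
doc2 = (142, 136, 86, 91, 69)
print(dot(doc1, doc2))                    # 49944
print(cosine_similarity(doc1, doc2))      # close to 1: similar word distributions
print(cosine_similarity((1, 2), (2, 4)))  # approximately 1.0: same direction, different length
```

Because `cosine_similarity` divides by both norms, scaling either vector leaves the result unchanged, which is exactly the sense in which it ignores magnitude.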
