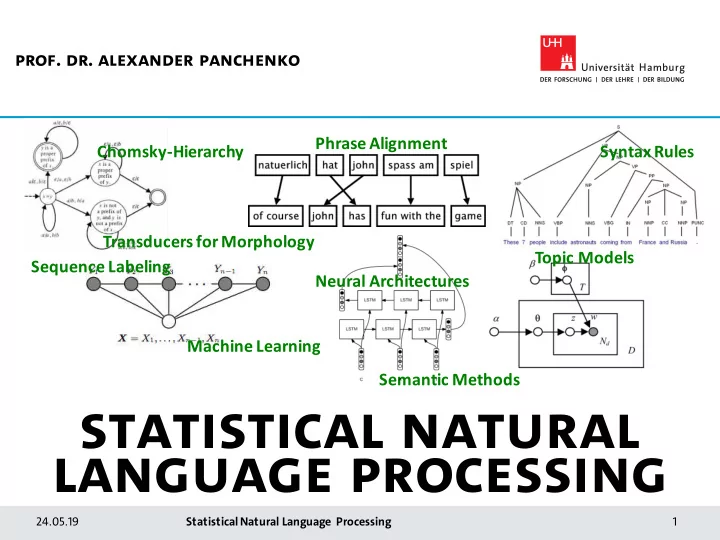

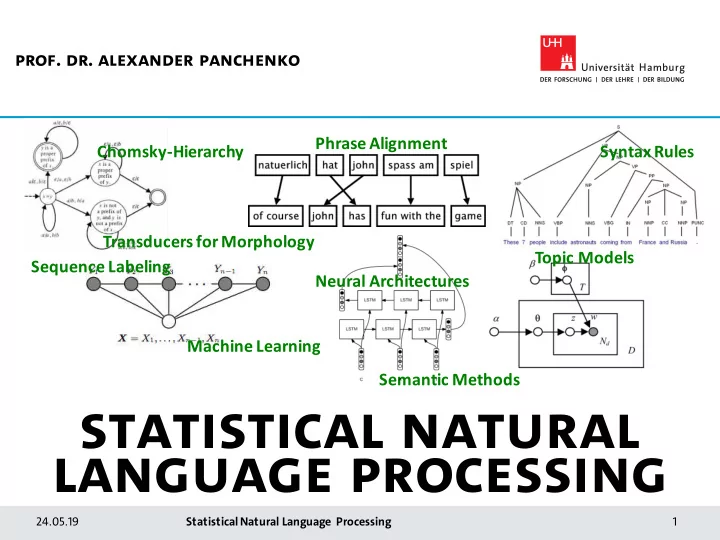

Statistical Natural Language Processing

Prof. Dr. Alexander Panchenko

[Figure: word cloud of course topics: Phrase Alignment, Chomsky Hierarchy, Syntax Rules, Transducers for Morphology, Topic Models, Sequence Labeling, Neural Architectures, Machine Learning, Semantic Methods]

24.05.19 Statistical Natural Language Processing 1

The course is based on the NLP course of the University of Hamburg:
§ Eugen Ruppert, M.Sc., author of seminars
§ Prof. Dr. Chris Biemann, author of the lectures
§ Dr. Seid Muhie Yimam, author of seminars

NLP Instructors: Meet Our Teaching Team
§ Prof. Alexander Panchenko (Skoltech): lectures, seminars; A.Panchenko@skoltech.ru
§ Olga Kozlova, MSc (MTS AI): seminars, homework; evezhier@gmail.com
§ Dr. Artem Shelmanov (Skoltech): seminars, lecture; A.Shelmanov@skoltech.ru
§ Prof. Ekaterina Artemova (HSE): seminars, lecture?; echernyak@hse.ru

About myself: a decade of fun (and) R&D in NLP
• 2002-2008: Bauman Moscow State Technical University, Engineer in Information Systems, Moscow
• 2008: Xerox Research Centre Europe, Research Intern, France
• 2009-2013: Université catholique de Louvain, PhD in Computational Linguistics, Belgium
• 2013-2015: Startup in SNA, Research Engineer in NLP, Moscow
• 2015-2017: TU Darmstadt, Postdoc in NLP, Germany
• 2017-2019: University of Hamburg, Postdoc in NLP, Germany
• 2019-now: Skoltech, Assistant Professor in NLP, Moscow
https://scholar.google.com/citations?user=BYba9hcAAAAJ

Language Technology
Natural language, unlike formal languages and programming languages, is:
• naturally grown
• constantly changing
• without well-defined semantics
• subject to many layers of interpretation
• dependent on context for its meaning
Here: natural languages and the technologies for coping with them

Classic NLP?

Classic NLP?
[Figure: timeline from classic NLP to neural NLP, with the stages "1.6.7 Statistical Machine Learning, Graphical Models: 2008-2012" and "1.6.8 The Rise of Neural Models in NLP: 2013-…"]

Why Language Is Hard
[Figure: concept layer vs. lexical layer, illustrating synonymous and polysemous words]
§ He sat on the river bank and counted his dough.
§ She went to the bank and took out some money.
Here "bank" is polysemous (river bank vs. financial institution), while "dough" and "money" are synonymous.

Overview of This Course
Statistical Methods of Language Technology
§ focus on methods rather than applications
§ variety of techniques, with a focus on statistical methods
§ efficiency vs. effectiveness
Statistical Methods of Language Technology
§ core methods used in NL systems
§ adaptations of generally known algorithms to language data
§ evaluation of techniques
Lecture: theory, concepts, algorithms
Practice class: hands-on, writing small programs, using available software

Textbooks
§ Jurafsky, D. and Martin, J. H. (2009): Speech and Language Processing. An Introduction to Natural Language Processing, Computational Linguistics and Speech Recognition. Second Edition. Pearson: New Jersey
§ The third edition, freely available at https://web.stanford.edu/~jurafsky/slp3/, is recommended (cf. the chapter correspondence table between the 2nd and 3rd editions)
§ Manning, C. D. and Schütze, H. (1999): Foundations of Statistical Natural Language Processing. MIT Press: Cambridge, Massachusetts
Literature for specialized topics will be given in place.
http://panchenko.me/slides/cnlp/

Learning Goals
§ understand statistical methods for language processing in detail
§ develop a feeling for language technology applications and learn to avoid pitfalls
§ be able to plan the technology requirements of a language technology project
§ analyze and evaluate the use of NLP in applications
§ see the beauty of language technology and be ready to write your thesis in language technology

Network of the Day
§ Student project, online since 2014
§ www.tagesnetzwerk.de

Comparative Argumentative Machine
§ MA project; paper at the SIGIR CHIIR’19 conference in the UK
§ http://ltdemos.informatik.uni-hamburg.de/cam/
§ https://arxiv.org/abs/1901.05041

Knowledge-Free Interpretable Word Sense Disambiguation
§ MA project; paper at the EMNLP’17 conference in Denmark
§ http://ltbev.informatik.uni-hamburg.de/wsd/
§ https://aclweb.org/anthology/papers/D/D17/D17-2016/

Practice Class Information
§ In the practice classes you will work on weekly assignments, which will give you practical experience in NLP
§ The assignments will be graded on a binary scale (“ok”/“not ok”)
§ You need 50% of the points to pass the course
§ Depending on the nature of the topic, assignments will be
  § theoretical, i.e. paper-and-pencil
  § practical, i.e. writing a program and applying it to data
  § hands-on, i.e. applying a third-party program to data

Organisational Information
§ The lecture slides, handouts, readings, etc. can all be found on the Canvas platform: https://skoltech.instructure.com/courses/1948
§ We use the chat there for discussion and Q&A
§ Quick feedback form: https://forms.gle/nAq6NjFGWvhp85Ji7

Final Exam
§ How: written exam, 2 hours
§ When: last week of May
§ Content: lecture, exercises, reading

Time Slots for Classes
[Weekly timetable, Tuesday to Thursday, 11:00-19:00]
§ Lecture: 16:00 – 19:00
§ Practice class: 16:00 – 19:00

Time Slots for Classes
[Weekly timetable, Tuesday to Thursday, 11:00-19:00]
§ Lecture: 16:00 – 19:00
§ Practice class: 16:00 – 19:00
§ Reading the JM (Jurafsky and Martin) book

Topics of This Class
§ Formal Languages and Automata
§ Computational Morphology
§ Sequence Tagging
§ Topic Modelling
§ Statistical Machine Translation
§ Graph-Based Methods
§ Distributional Semantics
§ Word Senses and their Disambiguation

FORMAL LANGUAGES AND AUTOMATA
CHOMSKY HIERARCHY OF FORMAL LANGUAGES
• Jurafsky, D. and Martin, J. H. (2009): Speech and Language Processing. An Introduction to Natural Language Processing, Computational Linguistics and Speech Recognition. Second Edition. Pearson: New Jersey. Chapters 2 and 16
• Chomsky, Noam (1959). "On certain formal properties of grammars". Information and Control 2 (2): 137–167
• Refresher on the theory of computation (Turing machines, etc.) as video lectures: https://www.youtube.com/playlist?list=PLBlnK6fEyqRgp46KUv4ZY69yXmpwKOIev

Recap: Formal Languages and Automata
• Automata theory and the theory of formal languages are part of theoretical computer science
• Their concepts originate in theoretical linguistics: Noam Chomsky is the originator of the Chomsky hierarchy of formal languages
Why talk about it?
• The complexity of a subsystem of natural language (e.g. morphology or syntax) determines how powerful the machinery for processing it automatically must be
• Fundamental results from theoretical computer science have direct implications for implementing language technology applications

DEFINITIONS
• A LANGUAGE is a set of sentences, each of finite length and constructed from a finite alphabet of symbols
• A GRAMMAR can be regarded as a device that enumerates the sentences of a language
• A grammar of language L can be regarded as a function whose range is exactly L

Formal Grammar
A formal grammar is a 4-tuple $G = (\Phi, \Sigma, R, S)$ where
• $\Phi$ is a finite set of non-terminals
• $\Sigma$ is a finite set of terminals, disjoint from $\Phi$
• $R$ is a finite set of production rules of the form $\alpha \to \beta$ with $\alpha, \beta \in (\Phi \cup \Sigma)^*$, $\alpha \neq \varepsilon$ and $\alpha \notin \Sigma^*$ (i.e. $\alpha$ contains at least one non-terminal)
• $S \in \Phi$ is the start symbol
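One way to make the definition concrete is a small Python data structure that stores the four components and rejects rules that violate the constraints on α. This is a sketch under stated assumptions, not code from the course: the class `Grammar` and its field names are hypothetical, and sentential forms are represented as tuples of symbol names, with the empty tuple standing for ε.

```python
from dataclasses import dataclass

# A rule α → β is a pair of tuples of symbols; the empty tuple () stands for ε.
Rule = tuple[tuple[str, ...], tuple[str, ...]]


@dataclass(frozen=True)
class Grammar:
    """Hypothetical container for a formal grammar G = (Φ, Σ, R, S)."""

    nonterminals: frozenset[str]  # Φ
    terminals: frozenset[str]     # Σ
    rules: tuple[Rule, ...]       # R, each entry (α, β) meaning α → β
    start: str                    # S

    def __post_init__(self) -> None:
        # Σ must be disjoint from Φ.
        if self.nonterminals & self.terminals:
            raise ValueError("Φ and Σ must be disjoint")
        # S must be an element of Φ.
        if self.start not in self.nonterminals:
            raise ValueError("S must be an element of Φ")
        vocabulary = self.nonterminals | self.terminals
        for alpha, beta in self.rules:
            # α and β must be words over Φ ∪ Σ.
            if not (set(alpha) | set(beta)) <= vocabulary:
                raise ValueError(f"rule {alpha} -> {beta} uses unknown symbols")
            # α ≠ ε
            if len(alpha) == 0:
                raise ValueError("left-hand side α must not be ε")
            # α ∉ Σ*: at least one symbol of α is a non-terminal.
            if all(symbol in self.terminals for symbol in alpha):
                raise ValueError("left-hand side α must contain a non-terminal")


# Example (hypothetical): a grammar for { a^n b^n | n >= 1 } with rules S → aSb | ab.
anbn = Grammar(
    nonterminals=frozenset({"S"}),
    terminals=frozenset({"a", "b"}),
    rules=((("S",), ("a", "S", "b")), (("S",), ("a", "b"))),
    start="S",
)
```

Checking the constraints at construction time means every `Grammar` object that exists is a valid formal grammar in the sense of the definition; a rule such as a → S is rejected because its left-hand side lies in Σ*.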

Derivation, Formal Language, Automaton
Let $G = (\Phi, \Sigma, R, S)$ be a formal grammar and let $u, v \in (\Phi \cup \Sigma)^*$.
1. $v$ is directly derivable from $u$, noted $u \Rightarrow v$, if $u = awb$ and $v = azb$ for some $a, b \in (\Phi \cup \Sigma)^*$, and $w \to z$ is a production rule in $R$.
2. $v$ is derivable from $u$, noted $u \Rightarrow^* v$, if there are words $w_0, \dots, w_k$ ($k \geq 0$) such that $w_0 = u$, $w_k = v$ and $w_{n-1} \Rightarrow w_n$ for all $0 < n \leq k$.
Let $G = (\Phi, \Sigma, R, S)$ be a formal grammar. Then $L(G) = \{ w \in \Sigma^* \mid S \Rightarrow^* w \}$ is the formal language generated by $G$.
An automaton is a device that decides whether a given sentence belongs to a formal language.
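The two derivation relations can be sketched in Python, assuming that every symbol is a single character so that sentential forms are plain strings. The function names `direct_derivations` and `generate` are hypothetical. `direct_derivations(u, rules)` returns every v with u ⇒ v by locating each occurrence of a left-hand side w in u = awb and replacing it by z. `generate` follows chains S ⇒ w₁ ⇒ … breadth-first and collects the words in Σ*, i.e. the elements of L(G), up to a length bound.

```python
from collections import deque


def direct_derivations(u: str, rules: list[tuple[str, str]]) -> set[str]:
    """All v with u ⇒ v: for each rule w → z and each split u = a w b, yield a z b."""
    results = set()
    for w, z in rules:
        pos = u.find(w)
        while pos != -1:
            results.add(u[:pos] + z + u[pos + len(w):])
            pos = u.find(w, pos + 1)  # try the next occurrence of w
    return results


def generate(rules: list[tuple[str, str]], start: str,
             nonterminals: set[str], max_len: int) -> set[str]:
    """Words w in Σ* with S ⇒* w and len(w) <= max_len, found by breadth-first search.

    Sentential forms longer than max_len are discarded. This is only safe for
    non-contracting grammars (|α| <= |β| for every rule), where a form can never
    become shorter again.
    """
    language = set()
    seen = {start}
    queue = deque([start])
    while queue:
        u = queue.popleft()
        if not any(symbol in nonterminals for symbol in u):
            language.add(u)  # u contains only terminals, so u is in L(G)
            continue
        for v in direct_derivations(u, rules):
            if len(v) <= max_len and v not in seen:
                seen.add(v)
                queue.append(v)
    return language


rules = [("S", "aSb"), ("S", "ab")]  # S → aSb | ab
print(sorted(generate(rules, "S", {"S"}, 6), key=len))
```

For the grammar S → aSb | ab and a bound of 6 symbols, the search finds exactly ab, aabb and aaabbb; the form aaaSbbb is pruned because it already exceeds the bound. The `seen` set guarantees termination, since there are only finitely many strings of bounded length over a finite alphabet.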

Generation and Acceptance
A grammar generates a language; the language is accepted by an automaton.
The complexity of the generating grammar influences the complexity of the accepting automaton.
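To illustrate this link, the hypothetical Python functions below accept the languages of two small grammars. The regular grammar S → aS | b generates a*b, which a deterministic finite automaton with two states accepts. The grammar S → aSb | ab generates { a^n b^n | n ≥ 1 }, which no finite automaton accepts, so the recognizer uses a stack, as a pushdown automaton would. Both are sketches for illustration only, not constructions from the lecture.

```python
def accepts_a_star_b(word: str) -> bool:
    """Finite automaton for { a^n b | n >= 0 }, generated by S → aS | b."""
    # Transition table of a deterministic finite automaton; a missing entry means reject.
    delta = {("q0", "a"): "q0", ("q0", "b"): "q1"}
    state = "q0"
    for symbol in word:
        if (state, symbol) not in delta:
            return False
        state = delta[(state, symbol)]
    return state == "q1"  # q1 is the only accepting state


def accepts_anbn(word: str) -> bool:
    """Stack-based recognizer for { a^n b^n | n >= 1 }, generated by S → aSb | ab.

    A fixed, finite set of states cannot count arbitrarily many a's, so this
    recognizer keeps unbounded memory in the form of a stack.
    """
    stack = []
    i = 0
    while i < len(word) and word[i] == "a":
        stack.append("a")  # push one marker per a
        i += 1
    while i < len(word) and word[i] == "b" and stack:
        stack.pop()  # pop one marker per b
        i += 1
    # Accept iff the whole word was read, every a was matched, and the word is non-empty.
    return i == len(word) and not stack and len(word) > 0


for w in ["aab", "aabb", "abab"]:
    print(w, accepts_a_star_b(w), accepts_anbn(w))
```

The first function needs only its current state, however long the input is, while the memory of the second grows with the number of a's read. This difference is the one the Chomsky hierarchy captures between regular and context-free languages.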
