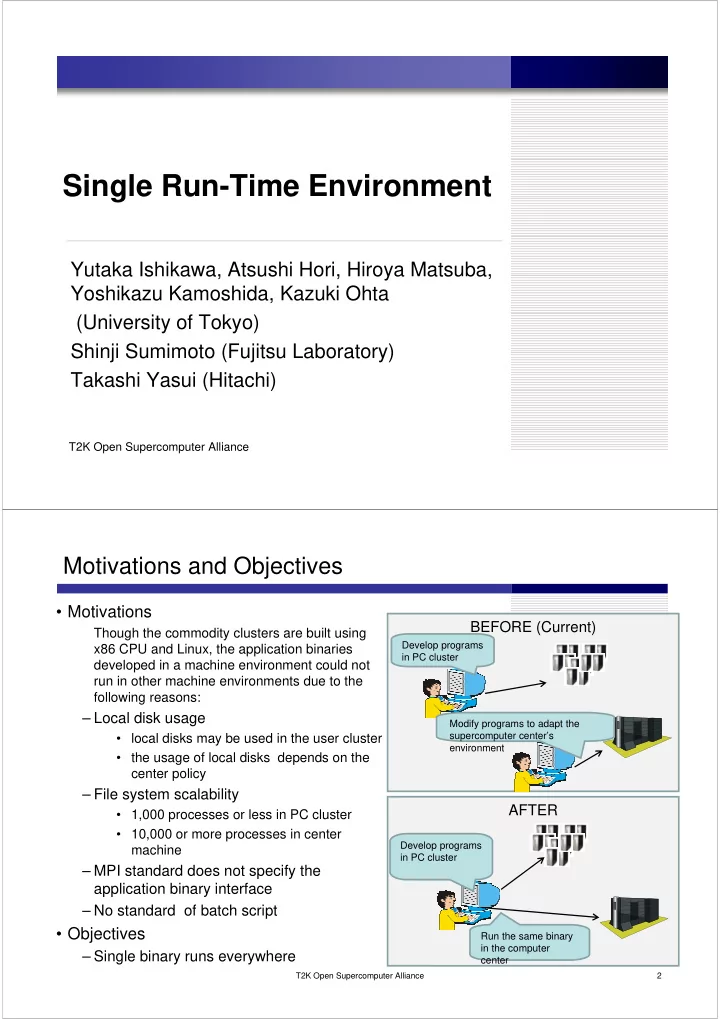

Single Run-Time Environment

Yutaka Ishikawa, Atsushi Hori, Hiroya Matsuba, Yoshikazu Kamoshida, Kazuki Ohta (University of Tokyo)
Shinji Sumimoto (Fujitsu Laboratory)
Takashi Yasui (Hitachi)
T2K Open Supercomputer Alliance

Motivations and Objectives
• Motivations
  Although commodity clusters are built from x86 CPUs and Linux, an application binary developed in one machine environment cannot run in other machine environments, for the following reasons:
  – Local disk usage
    • Local disks may be used in the user's cluster environment
    • The usage of local disks depends on the center's policy
  – File system scalability
    • 1,000 processes or fewer in a PC cluster
    • 10,000 or more processes in a center machine
  – The MPI standard does not specify an application binary interface
  – There is no standard for batch scripts
• Objectives
  – A single binary runs everywhere
[Figure: BEFORE (current): develop programs in a PC cluster, then modify them to adapt to the supercomputer center's environment. AFTER: develop programs in a PC cluster, then run the same binary in the computer center.]

Ongoing Research
• File system
  – pdCache [Kazuki Ohta]
    • File cache system
  – CatWalk [Atsushi Hori]
    • Transparent file staging system
  – STG [Hiroya Matsuba]
    • Portable high-performance file staging system
  – File Access Tracer [Takashi Yasui]
    • Understanding application I/O behavior
• MPI-Adapter [Shinji Sumimoto]
  – A binary compiled under one MPI implementation may run under other MPI implementations

File System Issue: Seek
• Many cores and file accesses
  – Assume that each process runs on its own core
    • e.g., 4 processes run on 1 node with 4 cores
  – Each process requests sequential access to a file on the file server
• Server side
  – I/O requests arrive in random order
  – Too many seeks
[Figure: processes on the compute nodes each issue sequential block requests for files F1–F4; in the file server's queue these requests arrive interleaved (e.g., F1 0, F2 2, F1 1, …).]
This is a traditional I/O issue, but here the queues exist in both the network and the disk I/O.

File System Issue: Meta Data Handling
• Metadata server
[Figure: compute nodes with many CPUs, I/O server nodes with disks, and a metadata server.]

pdCache
• Cache servers
  – May be located on compute nodes or on independent nodes
  – Cache file data and metadata
  – Reduce I/O requests
    • Disk seeks
    • Disk I/O requests
• Metadata access
  – Handles client requests fairly
• Portability
  – Independent of the file system
  – Independent of the cluster network
• Related work
  – ZOID: I/O Forwarding Infrastructure for Petascale Architectures [Iskra, PPoPP08]
  – Scalable I/O Performance through I/O Delegate and Caching System [Nisar, SC08]
[Figure: client processes (App) send I/O requests to cache servers, which access the disks through parallel file systems.]

pdCache: Software Stack
• ADIO: Abstract Device Interface for I/O [Thakur96]
  – Designed in ROMIO, an MPI-IO implementation
  – Supports most parallel file systems
• BMI: Buffered Message Interface [Carns05]
  – Designed in the PVFS2 file system
  – Supports most cluster networks
• A remote procedure call mechanism is implemented
  – To handle application requests
  – To communicate with CacheServers
[Figure: each App uses a client library that sends MPI-IO application requests over BMI (IB, MX, …) to CacheServers; each CacheServer uses BMI and ADIO (PVFS, Lustre, …) to perform file system operations on PVFS servers and disks, with cache coherence maintained among the CacheServers.]

pdCache: Evaluation
• Coming soon ☺

CatWalk: An Overview
• Transparent file staging
  – Users do not have to issue file staging commands; the CatWalk middleware takes care of it
    • At file open, CatWalk copies the file from the file server to the local disk if the file does not exist on the local disk
    • At file close, CatWalk copies the file from the local disk back to the file server
• CatWalk consists of
  – a user library
  – a client process
  – a server process
• Assumed environment
  – TCP/IP connection between the file server and the cluster
    • Requires some coordination of network traffic
  – High network bandwidth is not required
  – Administrator privileges are not required to install CatWalk

CatWalk: Stage In
1. The open system call is intercepted.
2. A CatWalk client sends a stage-in request to the CatWalk server.
3. The CatWalk server receives the request and enqueues it in the stage-in queue.
4. While the stage-in queue is not empty, the CatWalk server
   – dequeues a request from the stage-in queue, and
   – sends the requested file to a cluster node along the ring topology.
   When a stage-in file arrives, a CatWalk client
   – sends the file to the next cluster node along the ring topology,
5. writes the file to the local disk, and
6. notifies the user process.

CatWalk: Stage Out
1. The create system call is intercepted.
2. A CatWalk client enqueues the stage-out request in its stage-out queue.
3. When a CatWalk client receives the signal from the user process as the process exits, it sends this event to the CatWalk server.
4. When the CatWalk server has received the exit events from all clients, it sends the stageOut token to a cluster node.
4. On receiving the stageOut token, a CatWalk client repeats the following until its stage-out queue is empty, each time dequeuing a request from the stage-out queue:
5. Reads the file.
6. Sends the file to the server.
   It then sends the stageOut token to the next node in the ring topology.
7. When a stage-out file arrives, the CatWalk server stores the file in the file system.

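CatWalk: User Library Implementation
• Hooking the open system call
  – Using the LD_PRELOAD feature of Linux
    • The dynamic library specified by the LD_PRELOAD environment variable is loaded before the other shared libraries, so its open() takes precedence over the one in libc
[Figure: a.out calls fd = open("foo", …); the call goes to libcatwalk.so, which issues the original system call in libc.so; if the open fails, the file is staged in (copied to the local disk) and the original system call is issued again.]

    /* libcatwalk.so: replaces open() when loaded via LD_PRELOAD */
    #define _GNU_SOURCE                      /* for RTLD_NEXT */
    #include <dlfcn.h>
    #include <errno.h>
    #include <fcntl.h>
    #include <stdarg.h>
    #define FAIL (-1)                        /* assumed failure code of catwalk_stage_in */
    int catwalk_stage_in(const char *path);  /* copies the file to the local disk */
    int open(const char *path, int flags, ...) {
        static int (*open_orig)(const char *, int, ...);
        mode_t mode = 0;
        if (flags & O_CREAT) {               /* a mode argument is passed only with O_CREAT */
            va_list ap;
            va_start(ap, flags); mode = (mode_t)va_arg(ap, int); va_end(ap);
        }
        if (open_orig == NULL)               /* libc's open (lookup not shown on the slide; assumed) */
            open_orig = (int (*)(const char *, int, ...))dlsym(RTLD_NEXT, "open");
        int ret = open_orig(path, flags, mode);          /* issue the original system call */
        if (ret < 0 && errno == ENOENT && catwalk_stage_in(path) != FAIL)
            ret = open_orig(path, flags, mode);          /* retry on the staged-in local copy */
        return ret;                          /* still -1 if stage-in failed */
    }

CatWalk: Evaluation
• T2K Open Supercomputer
  – 17 nodes: one file server and 16 compute nodes
  – Network: 1 Gbps Ethernet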

CatWalk: Evaluation
[Figure: stage-in and stage-out results, NFS vs. CatWalk]
• Stage in (server → compute nodes)
  – 100 MB/s
  – Scalable
  – Limited by network bandwidth
• Stage out (compute nodes → server)
  – NFS
  – 20 MB/s
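The stage-in rate can be related to the link speed with a short calculation, taking 1 Gbps as 10^9 bit/s and 1 MB as 10^6 bytes (decimal units are an assumption; the slides do not state which units were used):

```latex
\begin{aligned}
1\ \text{Gbit/s} &= \frac{10^{9}\ \text{bit/s}}{8\ \text{bit/byte}} = 125 \times 10^{6}\ \text{byte/s} = 125\ \text{MB/s} \\
\frac{100\ \text{MB/s}}{125\ \text{MB/s}} &= 0.8
\end{aligned}
```

Under these assumptions stage-in runs at about 80% of the raw Ethernet rate, consistent with the note that it is limited by network bandwidth; Ethernet, IP, and TCP header overhead accounts for part of the remaining gap.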

