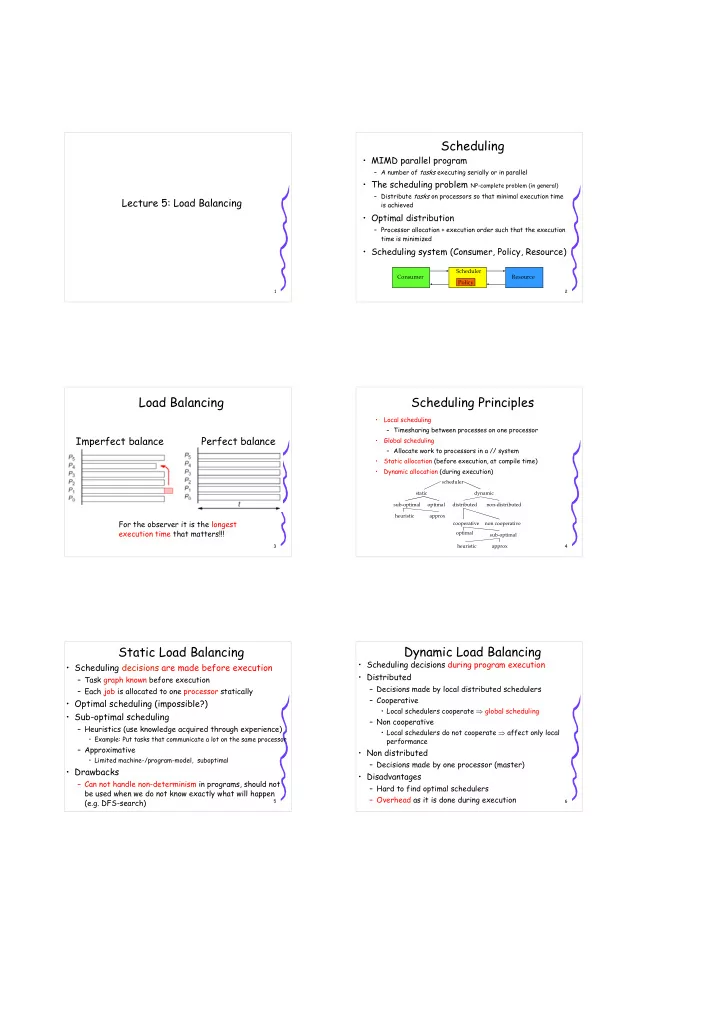

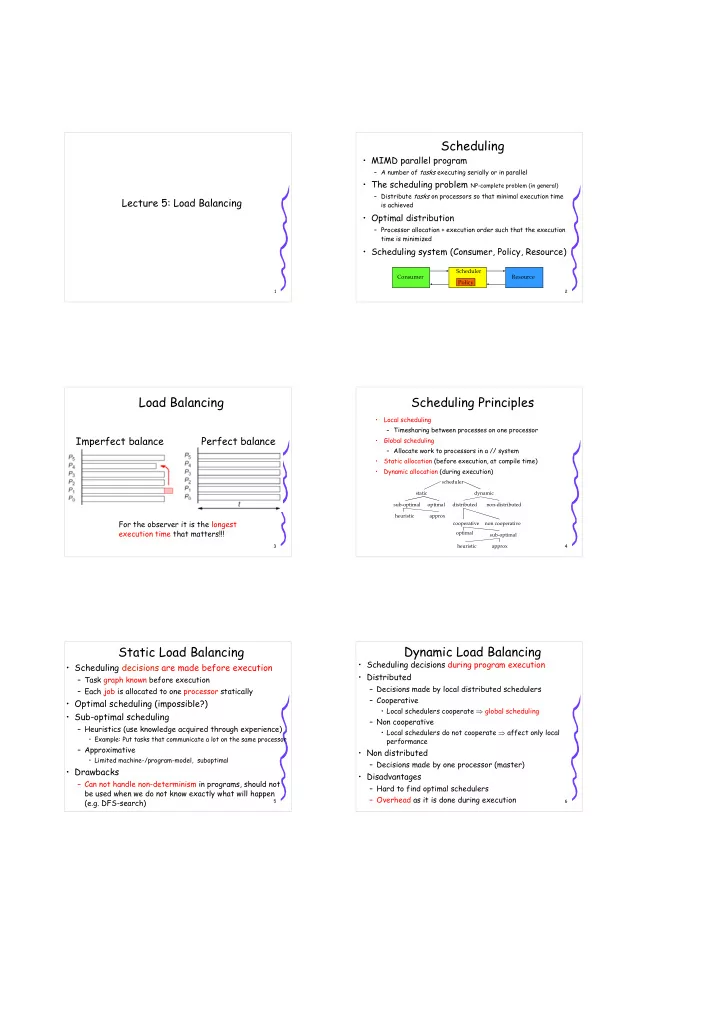

Scheduling • MIMD parallel program – A number of tasks executing serially or in parallel • The scheduling problem NP-complete problem (in general) – Distribute tasks on processors so that minimal execution time Lecture 5: Load Balancing is achieved • Optimal distribution – Processor allocation + execution order such that the execution time is minimized • Scheduling system (Consumer, Policy, Resource) Scheduler Consumer Resource Policy 1 2 Load Balancing Scheduling Principles • Local scheduling – Timesharing between processes on one processor Imperfect balance Perfect balance • Global scheduling – Allocate work to processors in a // system • Static allocation (before execution, at compile time) • Dynamic allocation (during execution) scheduler static dynamic sub-optimal optimal distributed non-distributed heuristic approx For the observer it is the longest cooperative non cooperative execution time that matters!!! optimal sub-optimal 3 heuristic approx 4 Dynamic Load Balancing Static Load Balancing • Scheduling decisions during program execution • Scheduling decisions are made before execution • Distributed – Task graph known before execution – Decisions made by local distributed schedulers – Each job is allocated to one processor statically – Cooperative • Optimal scheduling (impossible?) • Local schedulers cooperate ⇒ global scheduling • Sub-optimal scheduling – Non cooperative – Heuristics (use knowledge acquired through experience) • Local schedulers do not cooperate ⇒ affect only local • Example: Put tasks that communicate a lot on the same processor performance – Approximative • Non distributed • Limited machine-/program-model, suboptimal – Decisions made by one processor (master) • Drawbacks • Disadvantages – Can not handle non-determinism in programs, should not – Hard to find optimal schedulers be used when we do not know exactly what will happen – Overhead as it is done during execution (e.g. DFS-search) 5 6

Other kinds of scheduling Static Scheduling ● Single application / multiple application system • Graph Theory Approach ● Only one application at the time, minimize execution time – (for programs without loops and jumps) for that application – DAG (directed acyclic graph) = task graph ● Several parallel applications (compare to batch-queues), – Start-node (no parents), exit-node (no children) minimize the average execution time for all applications • Machine Model ● Adaptive / non adaptive scheduling – Processors P = {P 1 , ..., P m } T 1 , A 1, D 14 ● Changes behavior depending on feedback from the system – Edge matrix (mxm), comm-cost P i,j 1 ● Is not affected by feedback – Processor performance S i [instructions per second] 5 1 • Parallel Program Model ● Preemptive / non-preemptive scheduling 1 2 ● Allows a process to be interrupted if it is allowed to – Tasks T = {T 1 , ..., T n } 2 3 4 10 5 8 – The execution order is given by the arrows resume later on 2 non-preemptive preemptive 3 – Communication matrix (nxn), no. elem. D i,j 2 2 ● Does not allow a process to 3 1 2 5 – Number of instructions A i 1 5 be interrupted 3 7 8 Optimal Scheduling Algorithms Construction of schedules • The scheduling problem is NP complete for the general case. Exceptions: • Schedule: mapping that allocates one or more – HLF (Highest Level First), CP (Critical Path), LP (Longest disjunct time interval to each task so that Path) which in most cases gives optimal scheduling – Exactly one processor gets each interval • List scheduling: priority list with nodes and allocate the nodes one by one to the processes. Choose the node with highest – The sum of the intervals equals the priority and allocate that to the first available process. Repeat execution time for the task until the list is empty. – Different intervals on the same processor – It varies between algorithms how to compute priority • Tree structured task graph. Simplification: do not overlap – All tasks have the same execution time – The order between tasks is maintained – All processors have the same performance – Some processor is always allocated a job • Arbitrary task graph on two processors. Simplification: – All tasks have the same execution time 9 10 List Scheduling Scheduling of a tree structured task graph • Remember • Level – Each task is allocated a priority & is placed in a list sorted by priority – maximum number of nodes from x to a – When a processor is free, allocate the task with the highest priority terminal node • If two tasks have the same priority, take one randomly • Optimal algorithm (HLF) • Different choice of priority gives different kinds of scheduling – Determine the level of each node = priority – Level gives closest to optimal priority order (HLF) – When a processor is available, schedule the Task #Pr Level ready task with the highest priority 1 1 Number of reasons 1 1 0 3 1 I'm not ready • HLF can fail 2 3 2 1 2 1 2 – You can always construct an example that fails 1 1 3 1 2 – Works for most algorithms 4 4 2 1 1 11 12

Parallelism vs Communication Delay Scheduling Heuristics • Scheduling must be based on both • The complexity increases if the model allows – Communication delay – Tasks with different execution times – The time when a processor is ready to work – Different speed of the communication links • Trade-off between maximizing the parallelism & – Communication conflicts minimizing the communication (max-min problem) – Loops and jumps P1 P2 P1 P2 – Limited networks 1 1 1 Dx • Find suboptimal solutions 2 Dx 2 Dx 3 2 3 – Find, with the help of a heuristic, solutions 3 3 that most of the time are close to optimal Dx > T2 Dx < T2 13 14 Example, Trade-off The Granularity Problem // vs Communication Time • Find the best clustering of tasks in the task graph (minimize execution time) P1 P2 P1 P2 P1 • Coarse Grain 1 1 1 D3 1 D2 D3 D3 – Less parallelism 2 2 3 3 2 2 3 • Fine Grain Dx Dy 3 Dx Dy 4 4 4 – More parallelism 4 – More scheduling time D3 < T2, assign T3 to P2 Time = T1 + D3 + T3 + Dy + T4 , or – More communication conflicts Time = T1 + T2 + Dx + T4 If min(Dx, Dy) > T3 assign T3 to P1 15 16 Dynamic Load Balancing Redundant Computing • Local scheduling • Sometimes you may eliminate communication delays Example: Threads, Processes, I/O by duplicating work • Global scheduling Example: Some simulations P1 P2 P1 P2 – Pool of tasks / distributed pool of tasks 1 1 1 1 1 1 1 • receiver-initiated or sender-initiated 2 3 2 2 3 – Queue line structure 1 1 5 3 1 1 1 4 3 5 4 5 1 1 4 17 18

Pool of Tasks Work Transfer - Distributed Centralized • Centralized • The receiver takes the initiative. ”Pull” • Decentralized – One process asks another process for work • Distributed – The process asks when it is out of work, or has too little to do. • How to choose processor – Works well, even when the system load is high to communicate with? Distributed – Can be expensive to approximate system loads 19 20 Decentralized Work Transfer - Distributed Work Transfer - Decentralized • Example of process choices • The sender takes the initiative. ”Push” – Load – One process sends work to another • (hard) process – Round robin – The process asks (or just sends) when it • Must make sure that the has too many tasks, or high load processes do not “get in phase”, i.e. they all ask the same process – Works well when the system load is low – Randomly (random polling) – Hard to know when to send • Good generator necessary?? 21 22 Queue Line Structure Tree Based Queue • Each process sends to one of two processes • Have two processes per node – generalization of the previous technique • One worker process that – computes – asks the queue for work • Another that – asks (to the left) for new tasks if the queue is nearly empty – receives new tasks from the left neighbor – receives requests from the right neighbor and from the worker process and answers these requests 23 24

Recommend

More recommend