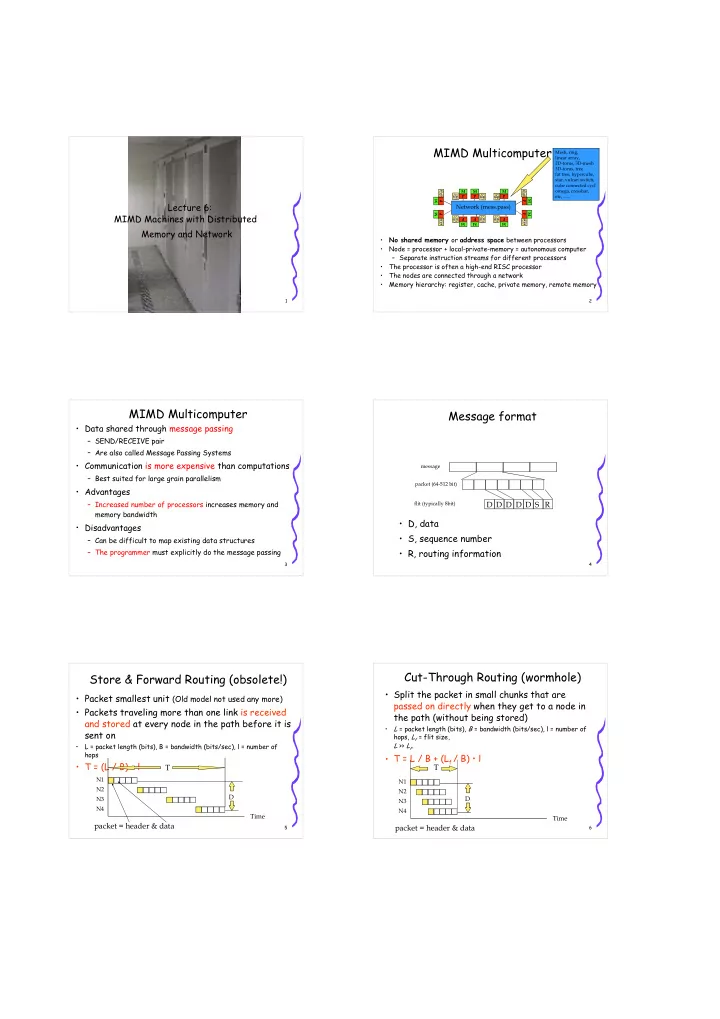

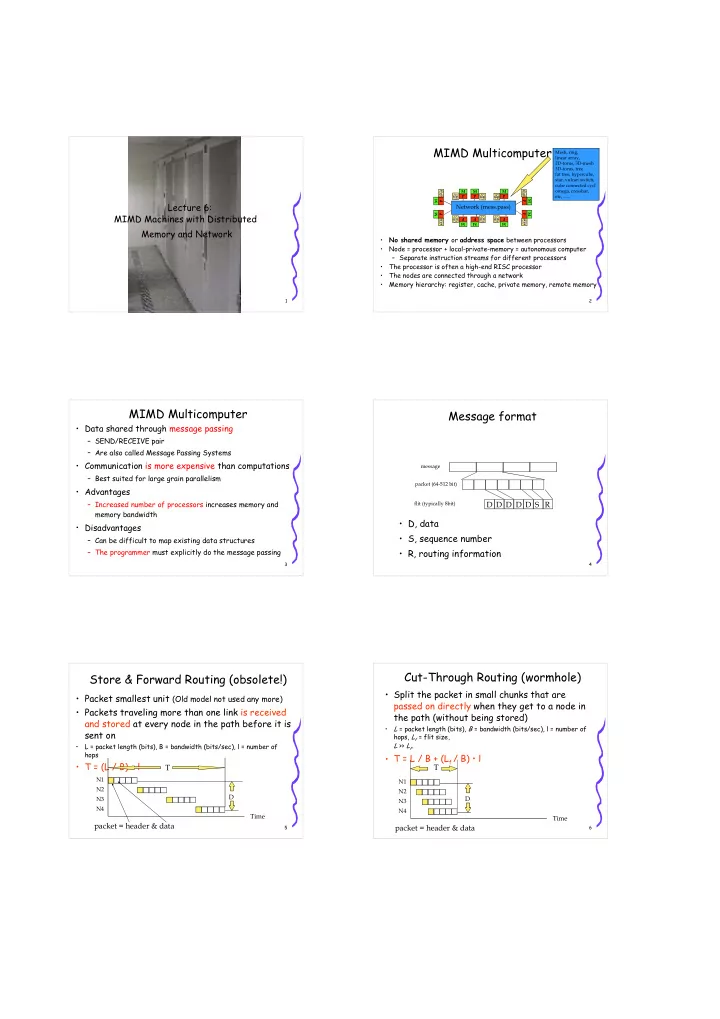

MIMD Multicomputer Mesh, ring, linear array, 2D-torus, 3D-mesh 3D-torus, tree fat tree, hypercube, star, vulcan switch, cube connected cycl’ omega, crossbar, M M M P P P etc, ...... M M Lecture 6: P P Network (mess.pass) MIMD Machines with Distributed M P P M P P P M M M Memory and Network • No shared memory or address space between processors • Node = processor + local-private-memory = autonomous computer – Separate instruction streams for different processors • The processor is often a high-end RISC processor • The nodes are connected through a network • Memory hierarchy: register, cache, private memory, remote memory 1 2 MIMD Multicomputer Message format • Data shared through message passing – SEND/RECEIVE pair – Are also called Message Passing Systems • Communication is more expensive than computations message – Best suited for large grain parallelism packet (64-512 bit) • Advantages – Increased number of processors increases memory and flit (typically 8bit) D D D D D S R memory bandwidth • D, data • Disadvantages • S, sequence number – Can be difficult to map existing data structures – The programmer must explicitly do the message passing • R, routing information 3 4 Cut-Through Routing (wormhole) Store & Forward Routing (obsolete!) • Split the packet in small chunks that are • Packet smallest unit (Old model not used any more) passed on directly when they get to a node in • Packets traveling more than one link is received the path (without being stored) and stored at every node in the path before it is • L = packet length (bits), B = bandwidth (bits/sec), l = number of sent on hops, L f = flit size, L = packet length (bits), B = bandwidth (bits/sec), l = number of L >> L f . • hops • T = L / B + (L f / B) • l • T = (L / B) • l T T N1 N1 N2 N2 D N3 D N3 N4 N4 Time Time packet = header & data packet = header & data 5 6

Message Passing Programming Example: Message Passing on the IBM SP • Formulate programs in terms of sending and • The message is split in a number of packets receiving messages – the packet is split in a number of flits – one flit = 1 byte • Programs are portable between different – the packets varies in length up to 255 flits machines with small changes (e.g., IBM SP, – flit 1 = packet length, flit 2 - flit r = routing info, the rest is data cluster, network of work stations) – each switch step: checks the 1st route-flit, determines the “out- port”, removes the flit (when the packet has reached the destination it no longer contains any route-flits) • To be able to exchange messages the nodes • Buffered Wormhole routing have to know – a flit is moved to the “out-port” as soon as it has arrived on the “in- port” • each others identity – “the right” “out-port” is determined by the second flit in the • the size of the message packet • content type of the message – if links are busy, flits are stored in a for all “in-ports” shared 7 8 buffer (central queue) Execution of programs Programming DMM systems 0 2 (SPMD-Single Program Multiple Data) 1 program myfirst 0 1 2 me = myid() npr = numprocs() 0 2 1 x = rand() program myfirst program myfirst program myfirst write(x,me) me = 1 me = 2 me = 0 to = me+1 mod npr npr = 3 npr = 3 npr = 3 x = 0.65 x = 0.1 from = me+npr-1 mod npr x = 0.25 write(0.65, 1) write(0.1, 2) write(0.25, 0) send(x, to) to = 1 to = 2 to = 0 receive(y,from) from = 0 from = 1 from = 2 write(y,me) send(0.25, 1) send(0.65, 2) send(0.1, 0) receive(y, 0) receive(y, 1) receive(y, 2) end 1. The same program is loaded on all nodes write(0.25, 1) write(0.65, 2) write(0.1, 0) 2. Every node starts to execute its own copy of the program end end end 3. Data is exchanged by send and receive pairs 4. Synchronizing implicit by communication explicit by barriers 9 10 Communication Network • Minimize communication overhead The topology of a network can be either static • Communication cost for ”point-to-point” Store-and-forward: t c = ( α + β L) * #hops or dynamic Cut-through: t c = α + β L • Statical Networks ♦ α is the startup cost (”node latency”) and β is the cost per unit (usally bytes) per link – Point-to-point, direct connections ♦ α >> β ⇒ − α dominates for small messages – Does not change during program execution − β dominates for large messages • Dynamical Networks Collective operations: broadcast, gather, scatter etc. • Group messages whenever possible – Switches • Use more than one copy of the data? – Dynamically configured to fit the needs of • Repeat identical computations/share the result? the program • Overlapping computations/communication? 11 12

Network Parameters Static Connected Networks • Linear array • Network size ( N ): number of nodes D – N nodes, N-1 links • Node degree ( d ) – Inner nodes: node degree d = 2, outer nodes: node degree d = 1 – number of links/channels on each node – diameter D = N-1 – bisection width b = 1 – one-way channels in/out degree, • Ring, node degree d = 2 (node degree = in + out degree) – N nodes, N links – small node degree good , cheap and modular – diameter D = N (for bidirectional ring N/2) – bisection width b = 2 • Network diameter ( D ) • Ring, node degree d = 3 , Chorodal ring of degree 3 – max(shortest path between two arbitrary nodes) – Increase number of links, higher node degree and lower – measured in number of links traversed (hops) diameter • Ring, node degree d = N-1 : Fully coupled network – small diameter is good – diameter D = 1 • Bisection width ( b ) • Star (tree with two levels) – degree d = N-1 , diameter D = 2 – smallest number of edges between two halves of the • Fat tree network 13 14 – more (fatter) channels closer to the root Static Connected Networks HPC2N Super Cluster – Mesh & Torus seth.hpc2n.umu.se • Mesh, k-dimensional with • 240 processors i 120 dual nodes N = n k nodes has • Nodes connected in a 4 x 5 x 6 mesh network with “wrap arounds” – node degree d = 2k for the inner nodes • Built by HPC2N (and CS) – diameter D = k(n-1) • 12 racks – Note! not symmetric; edge nodes • Was build during April-May 2002 • Available for users 2002-06 has degree 2 or 3 (for k=2) • 83% usage (24h/day – 7 days/week) since • Torus 2002-08 • Total peak performance 800 Gflops/s – nxn mesh with wraparounds in row & column dimensions • Top500 2002-06: – symmetric – 94 th fastest in the world • d = 4 – fastest in Sweden • D = 2n/2 = n • Financing: 5 MSEK from the Kempe 15 16 Foundations Seth - Pallas MPI Benchmark SCI Network • Wulfkit3 SCI network by Dolphin IS, Norway • The nodes are connected in a 4 x 5 x 6 mesh network with “wrap arounds” • 667 Mbytes/s peak bandwidth => peak 1.43E-9 sec/byte • 1.46 µ sec node latency • ScaMPI message passing library (MPI) from Scali AS • Software for system surveillance and system management 230 Mbytes/s max bandwidth 3.7 µ sec minimum latency (Multi(16) is pairwise ping-pong between 8 pairs of processors) 17 18

Recommend

More recommend