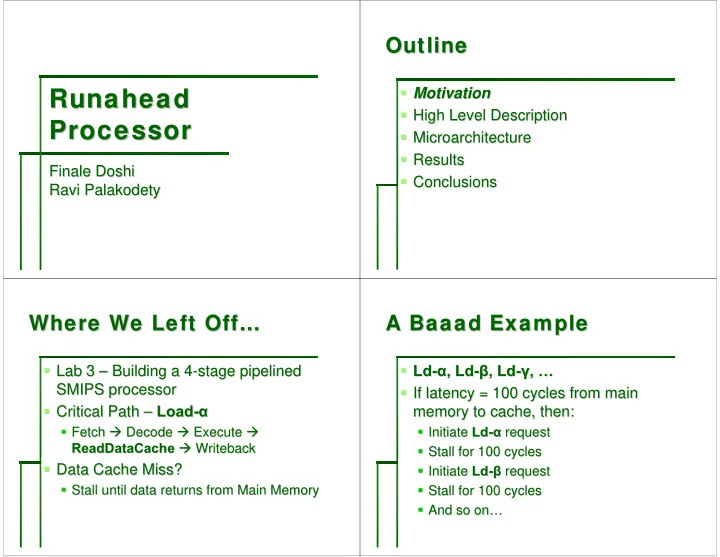

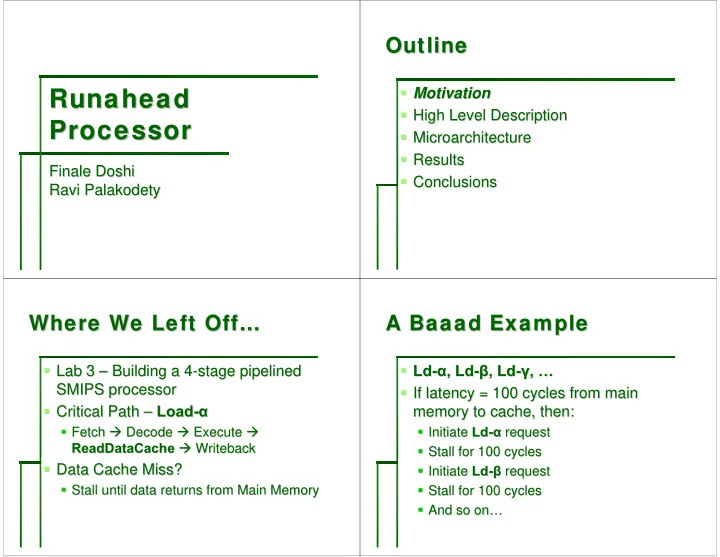

Runahead Processor

Finale Doshi, Ravi Palakodety

Outline

- Motivation
- High Level Description
- Microarchitecture
- Results
- Conclusions

Where We Left Off…

- Lab 3: Building a 4-stage pipelined SMIPS processor
- Critical Path: Load
  - Fetch → Decode → Execute → ReadDataCache → Writeback
- Data Cache Miss?
  - Stall until data returns from Main Memory

A Baaad Example

- Ld-α, Ld-β, Ld-γ, …
- If latency = 100 cycles from main memory to cache, then:
  - Initiate Ld-α request
  - Stall for 100 cycles
  - Initiate Ld-β request
  - Stall for 100 cycles
  - And so on…
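The cost in the example above can be made concrete with a little arithmetic. The sketch below is an idealized model, not a measurement from the lab processor: it assumes every load misses, the loads are independent, and main memory can service overlapping requests. The function names and the `issue_gap` parameter are hypothetical.

```python
def serialized_stall_cycles(num_loads: int, miss_latency: int) -> int:
    """Stall cycles when each missing load blocks the pipeline until its data returns."""
    return num_loads * miss_latency


def overlapped_stall_cycles(num_loads: int, miss_latency: int, issue_gap: int = 1) -> int:
    """Idealized stall cycles when the misses are issued back to back and overlap.

    The last request is issued (num_loads - 1) * issue_gap cycles after the
    first one and returns miss_latency cycles after it is issued.
    """
    if num_loads == 0:
        return 0
    return miss_latency + (num_loads - 1) * issue_gap


# Ld-α, Ld-β, Ld-γ with a 100-cycle miss latency
print(serialized_stall_cycles(3, 100))   # stall, request, stall, request, ...
print(overlapped_stall_cycles(3, 100))   # all three requests in flight together
```

Under these assumptions the gap between the two numbers grows linearly with the number of loads, which is the opportunity runahead tries to exploit.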

Key Insight

- “Runahead” to see whether there are memory accesses in the near future
- With an instruction sequence Ld-α, Ld-β:
  - Initiate memory request for Ld-α
  - Continue execution
  - Initiate memory request for Ld-β

DataCache Miss Occurs…

- Backup the register file
- Keep running instructions
- Use INV as the result of any ops that:
  - Are DataCache misses
  - Depend on calculations involving DataCache misses

Data Returns..

- Cache is updated from MainMem:
  - Restore the register file
  - Rerun the original “offending” instruction
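The miss and return steps above can be sketched as a small software model. This is an illustration under stated assumptions, not the lab's hardware design: the instruction encoding, the `runahead_episode` name, and the dictionary-based register file and cache are all hypothetical, and the episode simply runs to the end of the program instead of ending when the data returns.

```python
INV = object()  # marker for a value that depends on a pending DataCache miss


def runahead_episode(program, miss_pc, regs, cache):
    """Run ahead from the offending load at miss_pc and return the memory
    requests initiated along the way. regs is restored before returning.

    Hypothetical encoding: ("ld", dst, base_reg, offset) or ("add", dst, src1, src2).
    """
    backup = dict(regs)                      # back up the register file
    requests = []
    for op in program[miss_pc:]:
        if op[0] == "ld":
            _, dst, base, offset = op
            if regs[base] is INV:            # address depends on INV: no request
                regs[dst] = INV
                continue
            addr = regs[base] + offset
            if addr in cache:
                regs[dst] = cache[addr]      # hit: use the cached value
            else:
                requests.append(addr)        # miss: initiate a memory request
                regs[dst] = INV              # result of a DataCache miss is INV
        elif op[0] == "add":
            _, dst, a, b = op
            if regs[a] is INV or regs[b] is INV:
                regs[dst] = INV              # INV propagates through dependents
            else:
                regs[dst] = regs[a] + regs[b]
    regs.clear()
    regs.update(backup)                      # restore the register file
    return requests


program = [
    ("ld", "r1", "r0", 0),     # Ld-α: the offending miss
    ("ld", "r2", "r0", 4),     # Ld-β: independent, so it can be requested early
    ("add", "r3", "r1", "r2"),
    ("ld", "r4", "r3", 0),     # address is INV, so no request is made
]
regs = {"r0": 100, "r1": 0, "r2": 0, "r3": 0, "r4": 0}
print(runahead_episode(program, 0, regs, cache={}))
```

After the episode the caller would rerun the offending instruction at `miss_pc` with the restored registers; by then the requested lines are already on their way to the cache.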

Follow the Rules

- Do NOT:
  - Update the DataCache while in Runahead mode
  - Initiate Memory Requests that depend on INV addresses
  - Branch when predicate depends on INV data
  - Initiate Memory Requests that cause collisions in DataCache

Processor Side

[Figure]

Cache Side

[Figure]

Execution - Enter Runahead

[Figure]

Execution - In Runahead

[Figure]

Execution - Exit Runahead

[Figure]

Design Explorations

- Store Cache Optimization
- Decisions when to exit runahead

Store Cache

- Ld-α, St-β, Ld-β
- Rather than return Ld-β as INV, return the value that was just stored.
- Use 4-entry table, as in Branch Predictor

When to Exit Runahead?

- When the “offending” miss returns? OR
- When all memory requests that are currently in-flight are processed?

Key Parameters

- Vary Latency of Main Memory
  - As the latency increases, the impact of runahead becomes more significant
  - At small latencies, the penalty for entering/exiting runahead can reduce performance
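The store cache idea described above (forwarding a value stored during runahead to a later load of the same address) can be sketched as a tiny lookup table. Only the 4-entry size comes from the slides; the class name, the `MISS` sentinel, and the oldest-first eviction policy are assumptions made for illustration.

```python
from collections import OrderedDict

MISS = object()  # sentinel: address not present in the store cache


class StoreCache:
    """Small table of runahead stores, so the DataCache itself is never updated."""

    def __init__(self, entries: int = 4):
        self.entries = entries
        self.table = OrderedDict()

    def store(self, addr, value):
        if addr in self.table:
            del self.table[addr]               # refresh an existing entry
        elif len(self.table) >= self.entries:
            self.table.popitem(last=False)     # assumed policy: evict the oldest entry
        self.table[addr] = value

    def load(self, addr):
        return self.table.get(addr, MISS)


# Ld-α, St-β, Ld-β: the second load sees the value that was just stored
sc = StoreCache()
sc.store(0xB0, 42)        # St-β during runahead
print(sc.load(0xB0))      # Ld-β returns the stored value instead of INV
```

A load that gets `MISS` back would fall through to the normal DataCache lookup and follow the usual runahead rules.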

Key Parameters

- Vary Size of FIFOs
  - As the FIFOs get larger, the processor is able to run further ahead and generate more parallel memory requests.
  - As the FIFOs get larger, the penalty for exiting runahead becomes more severe.

Testing Strategy

- Latencies of 1, 20, and 100 cycles
- FIFOs of length 2, 5, 8, 15
- Standard benchmarks; focus on vvadd

We’ll focus on length 15 FIFOs here since they allowed for the most extensive runahead.

Results

[Figures]

Results

[Figure]

Conclusions

- Runahead is good.
- Runahead is a cheap and simple way to improve IPS.
- The enter/exit runahead penalty is small enough that the IPS is always comparable to the Lab 3 processor.
- The control structure is (fairly) straightforward, with most improvements done on the cache side.

Extensions

- Aggressive Branch Prediction
  - Don’t stall when branch predicate is INV
- Save valid runahead computations
- Aggressive Prefetching
  - Predict addresses for Ld, St, when the given address is INV.
