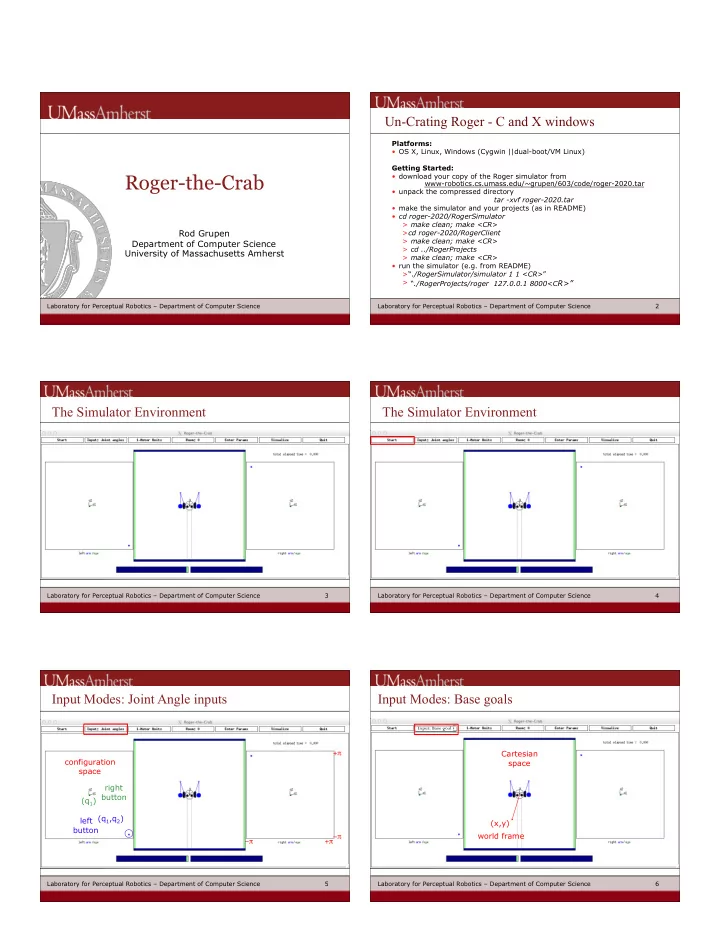

Un-Crating Roger - C and X Windows

Rod Grupen
Department of Computer Science
University of Massachusetts Amherst
Laboratory for Perceptual Robotics – Department of Computer Science

Platforms:
• OS X, Linux, Windows (Cygwin || dual-boot/VM Linux)

Getting Started: Roger-the-Crab
• download your copy of the Roger simulator from www-robotics.cs.umass.edu/~grupen/603/code/roger-2020.tar
• unpack the archive: tar -xvf roger-2020.tar
• make the simulator and your projects (as in README):
  > cd roger-2020/RogerSimulator
  > make clean; make <CR>
  > cd ../RogerClient
  > make clean; make <CR>
  > cd ../RogerProjects
  > make clean; make <CR>
• run the simulator from the roger-2020 directory (e.g. from README):
  > ./RogerSimulator/simulator 1 1 <CR>
  > ./RogerProjects/roger 127.0.0.1 8000 <CR>

The Simulator Environment
[figures: screenshots of the simulator environment]

Input Modes: Joint Angle inputs
• input: joint angles in configuration space, (q1) and (q1, q2), each joint spanning −π to +π
• goals are selected with the left and right mouse buttons

Input Modes: Base goals
• input: base goal (x, y) in Cartesian space, world frame
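The joint-angle input mode plots each joint over the range −π to +π. Code that compares a clicked joint angle with a measured one usually needs both angles in that same range. A minimal sketch, assuming radians and finite angles of modest size; `wrap_angle` and `WRAP_PI` are hypothetical names, not part of the Roger distribution:

```c
#include <assert.h>

#define WRAP_PI 3.14159265358979323846 /* hypothetical constant name */

/* Wrap an angle (radians) into [-pi, +pi).
 * Assumes a finite angle within a few turns of zero; the loops
 * subtract or add whole turns until the result lands in range. */
double wrap_angle(double q)
{
    while (q >= WRAP_PI)
        q -= 2.0 * WRAP_PI;
    while (q < -WRAP_PI)
        q += 2.0 * WRAP_PI;
    return q;
}
```

For example, an angle of 3π/2 comes back as −π/2, which is where it would appear on the −π to +π axes of the configuration-space panel.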

Input Modes: Arm goals
• input: arm goals (x, y) in Cartesian space, world frame
• one mouse button per arm (left/right)

Input Modes: Introducing an Object (Ball)
• input: ball position (x, y) in Cartesian space, world frame

Input Modes: Map Editor
• input: map editor for placing obstacles and goals

Control Modes
• project-specific control

Environmental Maps
• different rooms

Command line I/O
• accurate setpoints, gains, etc.
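The map editor places obstacles and goals on a grid, and each Map in include/control.h stores NBINS × NBINS arrays. Turning a world-frame (x, y) into a grid cell is then a matter of scaling. The sketch below assumes a square world from −5 m to +5 m, NBINS = 64, a row = y-bin / column = x-bin convention, and an obstacle label of 1. All of these, along with `DemoMap`, `world_to_bin`, `bin_to_world`, and `mark_obstacle`, are hypothetical; check the real values in the Roger headers.

```c
#include <assert.h>

/* Hypothetical values for illustration only. */
#define NBINS 64
#define WORLD_MIN (-5.0) /* meters, assumed */
#define WORLD_MAX (5.0)  /* meters, assumed */
#define DEMO_OBSTACLE 1  /* assumed cell label */

/* Hypothetical stand-in for the occupancy part of Map. */
typedef struct {
    int occupancy_map[NBINS][NBINS];
} DemoMap;

/* World coordinate -> bin index in [0, NBINS-1], or -1 if off the map. */
int world_to_bin(double v)
{
    double bin_size = (WORLD_MAX - WORLD_MIN) / NBINS;
    if (v < WORLD_MIN || v >= WORLD_MAX)
        return -1;
    int b = (int)((v - WORLD_MIN) / bin_size);
    if (b >= NBINS) /* guard against rounding just below WORLD_MAX */
        b = NBINS - 1;
    return b;
}

/* Bin index -> world coordinate of the bin center. */
double bin_to_world(int bin)
{
    double bin_size = (WORLD_MAX - WORLD_MIN) / NBINS;
    assert(bin >= 0 && bin < NBINS);
    return WORLD_MIN + (bin + 0.5) * bin_size;
}

/* Mark the cell containing world point (x, y) as an obstacle
 * (assumed convention: row = y bin, column = x bin).
 * Returns 0 on success, -1 if the point is off the map. */
int mark_obstacle(DemoMap *m, double x, double y)
{
    int col = world_to_bin(x);
    int row = world_to_bin(y);
    if (row < 0 || col < 0)
        return -1;
    m->occupancy_map[row][col] = DEMO_OBSTACLE;
    return 0;
}
```

Converting back with bin_to_world yields the cell center, which is a natural point to draw a marker or to seed a potential-map computation.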

Project Specific Visualization
• project/user-defined tools: location uncertainty, path plans, potential maps

Terminating
[figure]

Roger-the-Crab - Kinematic Definition
[figure: kinematic definition of Roger-the-Crab]

Afferents (./include/roger.h)
• eyes: θ[2], θ̇[2], images[2][128][3]
• arms: θ1[2], θ̇1[2], θ2[2], θ̇2[2]
• tactile (force) sensors: f[2] ∈ ℝ²
• mobile base: position (x, ẋ, y, ẏ), orientation (θ, θ̇)
• bump (force) sensor: f ∈ ℝ²

Efferents
• eye torques: τ[2]
• arm torques: τ1[2], τ2[2]
• mobile base: wheel torques τ[2]

Robot Interface: Project #1, #2
[figure: applications exchange data with the simulator through MotorUnits.c]
• sensor inputs: eye joint angles/velocities; images (always on); arm joint angles/velocities; tactile (force) sensors; base position (x, y), orientation (θ); bump (force) sensor
• motor outputs: eye motor torques; arm motor torques; wheel torques

Control Interface - include/control.h

```c
/* Constants (NEYES, NPIXELS, NPRIMARY_COLORS, NARMS, NARM_JOINTS,
 * NWHEELS, NBINS) are defined in the Roger headers (not shown). */

typedef struct _map {
  int occupancy_map[NBINS][NBINS];
  double potential_map[NBINS][NBINS];
  int color_map[NBINS][NBINS];
} Map;

typedef struct Robot_interface {
  // SENSORS
  double eye_theta[NEYES];
  double eye_theta_dot[NEYES];
  int image[NEYES][NPIXELS][NPRIMARY_COLORS]; /* rgb */
  double arm_theta[NARMS][NARM_JOINTS];
  double arm_theta_dot[NARMS][NARM_JOINTS];
  double ext_force[NARMS][2]; /* (fx,fy) force on arm endpoint */
  double base_position[3];    /* x,y,theta */
  double base_velocity[3];
  // MOTORS
  double eye_torque[NEYES];
  double arm_torque[NARMS][NARM_JOINTS];
  double wheel_torque[NWHEELS];
  // TELEOPERATOR
  int button_event;
  double button_reference[2];
  // CONTROL MODE
  int control_mode;
  int input_mode;
  Map world_map, arm_map[NARMS];
  // REFERENCE VALUE
  double base_setpoint[3]; /* desired world frame base position (x,y,theta) */
  double arm_setpoint[NARMS][NARM_JOINTS]; /* desired arm joint angles */
  double eyes_setpoint[NEYES]; /* desired eye pan angle */
} Robot;
```

Hierarchical Control
[figure: hierarchical control]

Cumulative Project Work
1. motor units
2. Cartesian goals
3. oculomotor behavior
4. visual reconstruction - triangulation
5. "hunting" - integrated behavior
6. …

options:
1. path planning
2. learning
3. Pong
4. …

MotorUnits.c
[figure: block diagram. A higher-level reference (+) and the current state (−) are combined at a summing junction Σ; control_roger() calls control_base(), control_arms(), and control_eyes(), which send control torques to the simulator; the simulator returns sensory state inputs.]
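The MotorUnits.c diagram subtracts the current state from a higher-level reference at Σ and turns the error into torques. A proportional-derivative (PD) law is one common way to do that; whether MotorUnits.c uses exactly this form is an assumption. The sketch uses a trimmed stand-in for the Robot struct (`DemoRobot`), hypothetical gains, and a hypothetical function name `demo_control_arms`:

```c
#include <assert.h>

/* Hypothetical sizes and gains for illustration only. */
#define DEMO_NARMS 2
#define DEMO_NARM_JOINTS 2
#define KP_ARM 100.0 /* assumed proportional gain */
#define KD_ARM 10.0  /* assumed derivative gain */

/* Trimmed stand-in for Robot: only the fields this sketch touches. */
typedef struct {
    double arm_theta[DEMO_NARMS][DEMO_NARM_JOINTS];
    double arm_theta_dot[DEMO_NARMS][DEMO_NARM_JOINTS];
    double arm_setpoint[DEMO_NARMS][DEMO_NARM_JOINTS];
    double arm_torque[DEMO_NARMS][DEMO_NARM_JOINTS];
} DemoRobot;

/* PD motor unit for every arm joint: error = reference - current state
 * (the summing junction), torque = KP * error - KD * joint velocity. */
void demo_control_arms(DemoRobot *r)
{
    for (int i = 0; i < DEMO_NARMS; ++i) {
        for (int j = 0; j < DEMO_NARM_JOINTS; ++j) {
            double err = r->arm_setpoint[i][j] - r->arm_theta[i][j];
            r->arm_torque[i][j] = KP_ARM * err - KD_ARM * r->arm_theta_dot[i][j];
        }
    }
}
```

The same pattern extends to eye_torque with eyes_setpoint; in practice the joint error may also need wrapping into [−π, π) before the gains are applied.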
