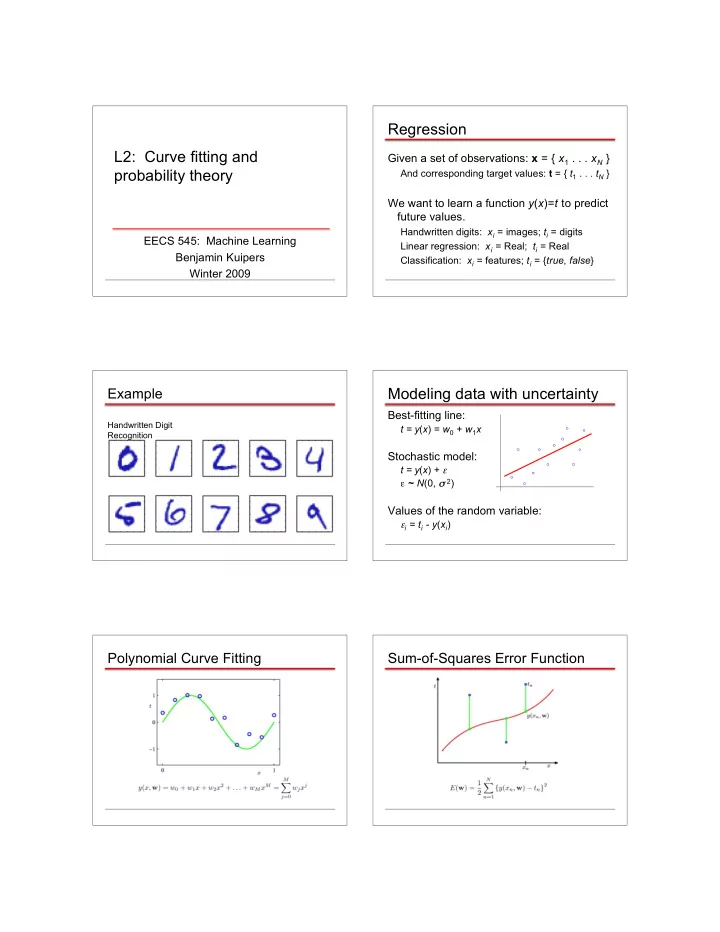

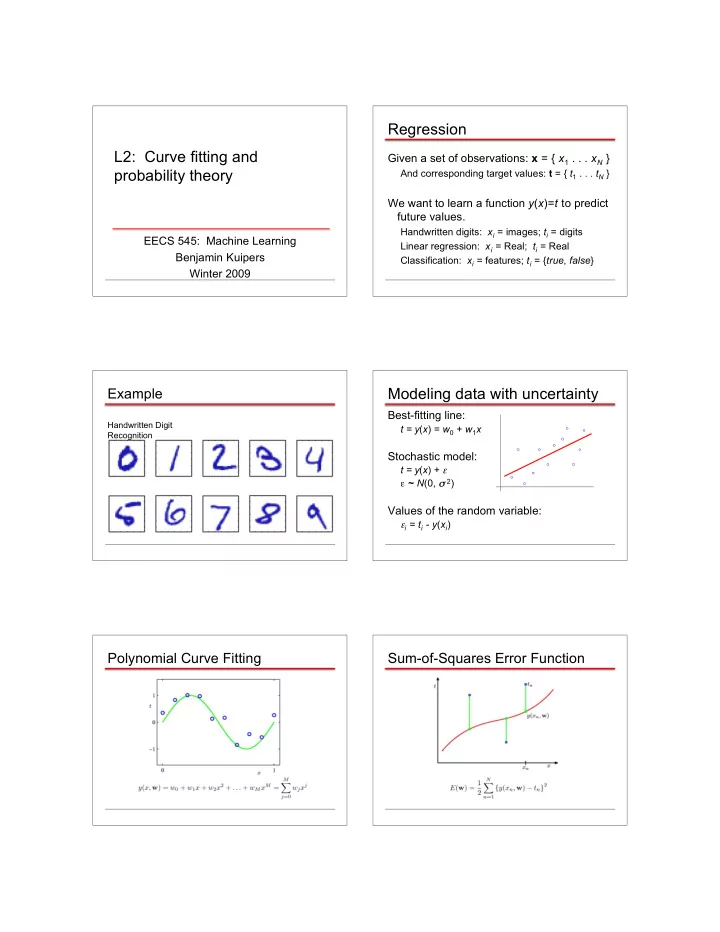

L2: Curve fitting and probability theory. EECS 545: Machine Learning. Benjamin Kuipers. Winter 2009.

Regression: Given a set of observations $x = \{x_1, \ldots, x_N\}$ and corresponding target values $t = \{t_1, \ldots, t_N\}$, we want to learn a function $y(x) = t$ to predict future values.

- Handwritten digits: $x_i$ = images; $t_i$ = digits
- Linear regression: $x_i$ = real; $t_i$ = real
- Classification: $x_i$ = features; $t_i$ = {true, false}

Example: Handwritten Digit Recognition

Modeling data with uncertainty. Best-fitting line: $t = y(x) = w_0 + w_1 x$. Stochastic model: $t = y(x) + \varepsilon$, with $\varepsilon \sim N(0, \sigma^2)$. Values of the random variable: $\varepsilon_i = t_i - y(x_i)$.

Polynomial Curve Fitting

Sum-of-Squares Error Function
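
The best-fitting line and the stochastic model above can be sketched in a few lines of plain Python. This is a minimal illustration, not the lecture's own code: the synthetic data (true line $1 + 2x$, noise $\sigma = 0.1$), the helper names `fit_line` and `sum_of_squares_error`, and the conventional factor of 1/2 in the error are all assumptions made for the example.

```python
import random

def fit_line(xs, ts):
    """Closed-form least-squares estimates of w0, w1 for t = w0 + w1 * x."""
    n = len(xs)
    x_mean = sum(xs) / n
    t_mean = sum(ts) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxt = sum((x - x_mean) * (t - t_mean) for x, t in zip(xs, ts))
    w1 = sxt / sxx
    w0 = t_mean - w1 * x_mean
    return w0, w1

def sum_of_squares_error(xs, ts, w0, w1):
    """Half the sum of squared residuals eps_i = t_i - y(x_i)."""
    return 0.5 * sum((t - (w0 + w1 * x)) ** 2 for x, t in zip(xs, ts))

# Synthetic data (assumed for illustration): t = 1 + 2x + eps, eps ~ N(0, 0.1^2)
rng = random.Random(0)
xs = [i / 19 for i in range(20)]
ts = [1.0 + 2.0 * x + rng.gauss(0.0, 0.1) for x in xs]

w0, w1 = fit_line(xs, ts)
residuals = [t - (w0 + w1 * x) for x, t in zip(xs, ts)]  # the eps_i values
print(w0, w1, sum_of_squares_error(xs, ts, w0, w1))
```

The residuals play the role of the $\varepsilon_i$: with an intercept in the model they sum to zero, and the fitted line never has a larger sum-of-squares error than the line that generated the data.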

[Figures: 0th, 1st, 3rd, and 9th order polynomial fits]

Over-fitting

Polynomial Coefficients

Root-Mean-Square (RMS) Error
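
The over-fitting behaviour of the polynomial orders listed above can be reproduced with a short NumPy sketch. Everything specific here is an assumption for illustration: the noisy-sine data, the sample sizes, the noise level, and the helper names `fit_polynomial` and `rms_error`. The RMS error is computed as the square root of the mean squared residual.

```python
import numpy as np

def fit_polynomial(x, t, order):
    """Least-squares coefficients w_0..w_M for y(x, w) = sum_j w_j * x**j."""
    design = np.vander(x, order + 1, increasing=True)  # columns x^0 ... x^M
    w, *_ = np.linalg.lstsq(design, t, rcond=None)
    return w

def rms_error(x, t, w):
    """Root-mean-square error: sqrt of the mean squared residual."""
    y = np.vander(x, len(w), increasing=True) @ w
    return float(np.sqrt(np.mean((y - t) ** 2)))

# Synthetic data (assumed): noisy samples of sin(2*pi*x)
rng = np.random.default_rng(0)
x_train = np.linspace(0.0, 1.0, 10)
t_train = np.sin(2 * np.pi * x_train) + rng.normal(0.0, 0.3, x_train.size)
x_test = np.linspace(0.0, 1.0, 100)
t_test = np.sin(2 * np.pi * x_test) + rng.normal(0.0, 0.3, x_test.size)

train_rms, test_rms = {}, {}
for order in (0, 1, 3, 9):
    w = fit_polynomial(x_train, t_train, order)
    train_rms[order] = rms_error(x_train, t_train, w)
    test_rms[order] = rms_error(x_test, t_test, w)
    # Largest |coefficient| tends to grow sharply as the order rises
    print(order, train_rms[order], test_rms[order], np.abs(w).max())
```

With 10 training points, the 9th order polynomial has enough freedom to pass through every point, so its training error is essentially zero; the printed test error and coefficient magnitudes are what reveal the over-fitting.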

Data Set Size: 9th Order Polynomial [two figures, one per data set size]

Regularization: Penalize large coefficient values.

Regularization: [two figures, one setting vs. another]
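
Penalizing large coefficient values can be sketched as ridge-style least squares, where a term $\frac{\lambda}{2}\|w\|^2$ is added to the sum-of-squares error. This is a hedged illustration: the symbol $\lambda$, the two values tried, the synthetic data, and the function name `fit_ridge` are assumptions, not taken from the slides.

```python
import numpy as np

def fit_ridge(x, t, order, lam):
    """Minimize 0.5 * sum (y - t)^2 + 0.5 * lam * ||w||^2 for a polynomial y."""
    design = np.vander(x, order + 1, increasing=True)
    gram = design.T @ design + lam * np.eye(order + 1)
    # Setting the gradient to zero gives (A^T A + lam * I) w = A^T t
    return np.linalg.solve(gram, design.T @ t)

# Synthetic data (assumed): noisy samples of sin(2*pi*x)
rng = np.random.default_rng(1)
x = np.linspace(0.0, 1.0, 10)
t = np.sin(2 * np.pi * x) + rng.normal(0.0, 0.3, x.size)

w_small = fit_ridge(x, t, 9, np.exp(-18.0))  # weak penalty
w_large = fit_ridge(x, t, 9, 1.0)            # strong penalty
print(np.linalg.norm(w_small), np.linalg.norm(w_large))
```

A stronger penalty always yields a coefficient vector with a smaller norm, which is the mechanism that tames the wild oscillations of a high-order fit.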

Polynomial Coefficients

Where do we want to go? We want to know our level of certainty. To do that, we need probability theory.

Probability Theory: Apples and Oranges

Probability Theory: Marginal Probability, Joint Probability, Conditional Probability

The Rules of Probability: Sum Rule, Product Rule
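
The sum and product rules can be checked on a small two-box fruit example. The slides' numbers are not preserved in this transcript, so the probabilities below are assumed for illustration (a red box with 2 apples and 6 oranges, a blue box with 3 apples and 1 orange, and the red box chosen 40% of the time). The sum rule is taken as $p(X) = \sum_Y p(X, Y)$ and the product rule as $p(X, Y) = p(Y \mid X)\,p(X)$.

```python
from fractions import Fraction as F

# Assumed numbers for illustration only
p_box = {"red": F(4, 10), "blue": F(6, 10)}
p_fruit_given_box = {
    "red": {"apple": F(2, 8), "orange": F(6, 8)},
    "blue": {"apple": F(3, 4), "orange": F(1, 4)},
}
fruits = ("apple", "orange")

# Product rule: p(fruit, box) = p(fruit | box) * p(box)
joint = {(f, b): p_fruit_given_box[b][f] * p_box[b]
         for b in p_box for f in fruits}

# Sum rule: p(fruit) = sum over boxes of p(fruit, box)
p_fruit = {f: sum(joint[(f, b)] for b in p_box) for f in fruits}

print(p_fruit)
```

Exact fractions are used so that the marginals can be compared against hand calculation without rounding noise.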

Bayes’ Theorem: posterior ∝ likelihood × prior

Probability Densities

Transformed Densities

Expectations: Conditional Expectation (discrete); Approximate Expectation (discrete and continuous)

Variances and Covariances

But what are probabilities? This is a deep philosophical question!

- Frequentists: Probabilities are frequencies of outcomes, over repeated experiments.
- Bayesians: Probabilities are expressions of degrees of belief.

There’s only one consistent set of axioms. But the two interpretations lead to very different ways to reason with probabilities.
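
The relation posterior ∝ likelihood × prior, together with a discrete expectation and variance, can be sketched on a toy problem. The setup is an assumption for illustration: degrees of belief over a coarse grid of candidate heads-probabilities for a coin, updated after a made-up record of 7 heads and 3 tails.

```python
# Candidate values of the coin's heads-probability (assumed grid)
thetas = [i / 10 for i in range(11)]
prior = [1 / len(thetas)] * len(thetas)  # uniform degrees of belief

heads, tails = 7, 3  # made-up observations
likelihood = [th ** heads * (1 - th) ** tails for th in thetas]

# posterior is proportional to likelihood * prior; dividing by the sum normalizes
unnormalized = [lik * pr for lik, pr in zip(likelihood, prior)]
evidence = sum(unnormalized)
posterior = [u / evidence for u in unnormalized]

# Discrete expectation and variance of theta under the posterior
mean = sum(p * th for p, th in zip(posterior, thetas))
var = sum(p * (th - mean) ** 2 for p, th in zip(posterior, thetas))
print(mean, var)
```

Here the probabilities are read in the Bayesian sense: the posterior expresses how belief about the coin shifts after seeing data, rather than a frequency over repeated experiments.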

Bayes’ Theorem: posterior ∝ likelihood × prior

The Gaussian Distribution

Gaussian Mean and Variance

The Multivariate Gaussian

Gaussian Parameter Estimation: likelihood function

Maximum (Log) Likelihood
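
Maximum (log) likelihood estimation for a univariate Gaussian can be sketched as follows. The sample (mean 2.0, standard deviation 1.5, 1000 draws) and the function names are assumptions for illustration. The maximum likelihood estimates are the sample mean and the sample variance with divisor $N$.

```python
import math
import random

def gaussian_log_likelihood(xs, mu, var):
    """ln p(x | mu, var) for i.i.d. Gaussian observations."""
    n = len(xs)
    return (-0.5 / var * sum((x - mu) ** 2 for x in xs)
            - 0.5 * n * math.log(var)
            - 0.5 * n * math.log(2 * math.pi))

def gaussian_ml(xs):
    """Maximum likelihood mean and variance (variance divides by N, not N - 1)."""
    n = len(xs)
    mu = sum(xs) / n
    var = sum((x - mu) ** 2 for x in xs) / n
    return mu, var

# Synthetic sample (assumed): mean 2.0, standard deviation 1.5
rng = random.Random(0)
xs = [rng.gauss(2.0, 1.5) for _ in range(1000)]

mu_ml, var_ml = gaussian_ml(xs)
print(mu_ml, var_ml, gaussian_log_likelihood(xs, mu_ml, var_ml))
```

Moving either parameter away from its estimate lowers the log likelihood, which is a quick numerical check that the closed-form estimates sit at the maximum.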

Curve Fitting Re-visited

Maximum Likelihood: Determine $w_{ML}$ by minimizing the sum-of-squares error, $E(w)$.

Predictive Distribution

MAP: A Step towards Bayes. Specify a prior distribution $p(w \mid \alpha)$ over the weight vector $w$: Gaussian with mean $0$ and covariance $\alpha^{-1} I$. Now compute posterior ∝ likelihood × prior.

MAP: A Step towards Bayes. Determine $w_{MAP}$ by minimizing the regularized sum-of-squares error, $\tilde{E}(w)$.

Where have we gotten, so far?

- Least-squares curve fitting is equivalent to maximum likelihood parameter values, assuming a Gaussian noise distribution.
- Regularization is equivalent to maximum posterior parameter values, assuming a Gaussian prior on the parameters.
- Fully Bayesian curve fitting introduces new ideas (wait for Section 3.3).
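
The equivalence between MAP estimation and regularized least squares can be checked numerically. With Gaussian noise of variance $\sigma^2$ and a zero-mean Gaussian prior with covariance $\alpha^{-1} I$, the negative log posterior is, up to a constant, $\frac{1}{2\sigma^2}\sum_n (y(x_n, w) - t_n)^2 + \frac{\alpha}{2} w^\top w$, so the minimizer matches a regularized fit with $\lambda = \alpha \sigma^2$. The sketch below assumes a cubic polynomial model, synthetic data, and illustrative values of $\sigma^2$ and $\alpha$; the function names are hypothetical.

```python
import numpy as np

def neg_log_posterior(w, phi, t, sigma2, alpha):
    """-ln[likelihood * prior], dropping terms that do not depend on w."""
    residual = phi @ w - t
    return 0.5 / sigma2 * (residual @ residual) + 0.5 * alpha * (w @ w)

def fit_map(phi, t, sigma2, alpha):
    """MAP weights: regularized least squares with lam = alpha * sigma2."""
    lam = alpha * sigma2
    return np.linalg.solve(phi.T @ phi + lam * np.eye(phi.shape[1]), phi.T @ t)

# Synthetic data and hyperparameters (assumed for illustration)
rng = np.random.default_rng(2)
sigma2, alpha = 0.09, 5e-3
x = np.linspace(0.0, 1.0, 10)
t = np.sin(2 * np.pi * x) + rng.normal(0.0, np.sqrt(sigma2), x.size)
phi = np.vander(x, 4, increasing=True)  # cubic polynomial features

w_map = fit_map(phi, t, sigma2, alpha)
w_ml, *_ = np.linalg.lstsq(phi, t, rcond=None)  # maximum likelihood weights

# Gradient of the negative log posterior should vanish at w_map
grad = phi.T @ (phi @ w_map - t) / sigma2 + alpha * w_map
print(w_ml, w_map, np.abs(grad).max())
```

The prior pulls the MAP weights toward zero relative to the maximum likelihood weights; as $\alpha$ shrinks toward zero the two solutions coincide.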

Bayesian Curve Fitting

Bayesian Predictive Distribution

Next: The Curse of Dimensionality; Decision Theory; Information Theory
