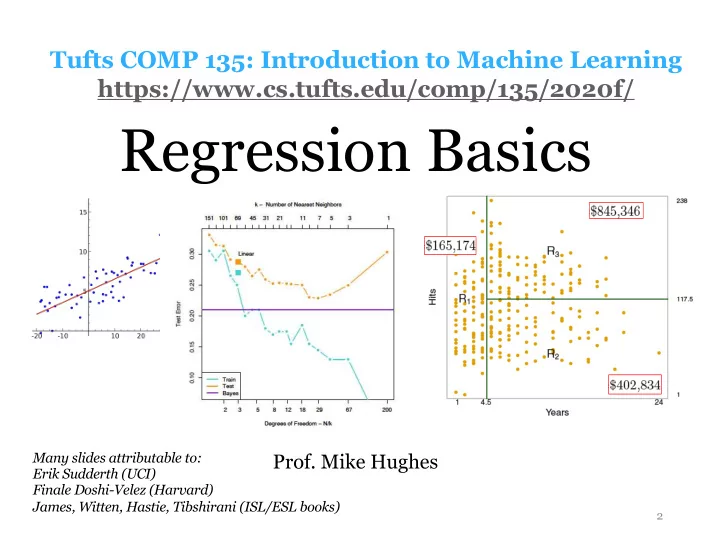

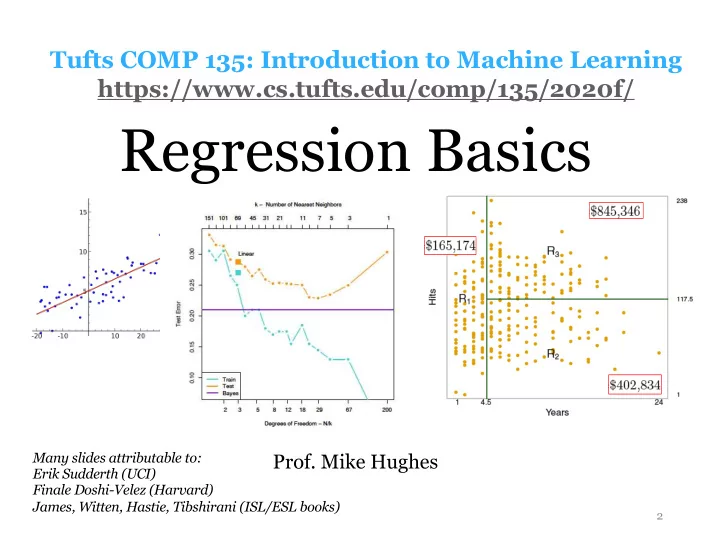

Tufts COMP 135: Introduction to Machine Learning
https://www.cs.tufts.edu/comp/135/2020f/

Regression Basics

Prof. Mike Hughes

Many slides attributable to: Erik Sudderth (UCI), Finale Doshi-Velez (Harvard), James, Witten, Hastie, Tibshirani (ISL/ESL books)

Objectives for Today (day 02)
• Understand 3 steps of a regression task
  • Training
  • Prediction
  • Evaluation
• Metrics: Mean Squared Error vs Mean Absolute Error
• Try two methods (focus: prediction and evaluation)
  • Linear Regression
  • K-Nearest Neighbors

Mike Hughes - Tufts COMP 135 - Spring 2019

What will we learn?
[Figure: overview diagram. Supervised Learning: Training on data, label pairs $\{x_n, y_n\}_{n=1}^N$, then Prediction, then Evaluation with a performance measure for the task. Shown alongside Unsupervised Learning and Reinforcement Learning.]

Task: Regression
y is a numeric variable, e.g. sales in dollars
[Figure: regression as a supervised learning task (vs. unsupervised and reinforcement learning); plot of y versus x]

Regression Example: RideShares
[Figure: regression highlighted under supervised learning (vs. unsupervised and reinforcement learning)]

Regression Example: RideShare

Regression Example: RideShare

Regression: Prediction Step
Goal: Predict response y well given features x
• Input: “features” (also “covariates”, “predictors”, “attributes”)
  $x_i \triangleq [x_{i1}, x_{i2}, \ldots, x_{if}, \ldots, x_{iF}]$
  Entries can be real-valued, or other numeric types (e.g. integer, binary)
• Output: “responses” (also “labels”)
  $\hat{y}(x_i) \in \mathbb{R}$
  Scalar value like 3.1 or -133.7

Regression: Prediction Step

```python
>>> # Given: pretrained regression object model
>>> # Given: 2D array of features x_NF
>>> x_NF.shape
(N, F)
>>> yhat_N1 = model.predict(x_NF)
>>> yhat_N1.shape
(N, 1)
```

Regression: Training Step
Goal: Given a labeled dataset, learn a function that can perform prediction well
• Input: Pairs of features and labels/responses $\{x_n, y_n\}_{n=1}^N$
• Output: $\hat{y}(\cdot): \mathbb{R}^F \rightarrow \mathbb{R}$

Regression: Training Step

```python
>>> # Given: 2D array of features x_NF
>>> # Given: 2D array of responses/labels y_N1 (a single column)
>>> y_N1.shape
(N, 1)
>>> x_NF.shape
(N, F)
>>> model = RegressionModel()  # placeholder for any regressor class
>>> model.fit(x_NF, y_N1)
```
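A minimal runnable sketch of all three steps (training, prediction, evaluation) end to end. It assumes scikit-learn's `LinearRegression` as a stand-in for the generic `RegressionModel` above; the toy data are made up and generated exactly from y = 2*x1 + x2 + 1, so the fitted model should reproduce them almost perfectly.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Made-up toy dataset: N=5 examples, F=2 features each
x_NF = np.array([
    [1.0, 0.0],
    [2.0, 1.0],
    [3.0, 0.0],
    [4.0, 1.0],
    [5.0, 0.0],
])
# Responses stored as a single column, matching the y_N1 naming convention
y_N1 = np.array([[3.0], [6.0], [7.0], [10.0], [11.0]])

# Training step: learn weights and bias from labeled pairs
model = LinearRegression()
model.fit(x_NF, y_N1)

# Prediction step: one prediction per row of x_NF (2D y in, 2D predictions out)
yhat_N1 = model.predict(x_NF)
print(x_NF.shape, yhat_N1.shape)  # (5, 2) (5, 1)

# Evaluation step: mean squared error over the N examples
mse = np.mean((y_N1 - yhat_N1) ** 2)
print(mse)  # close to zero, since the data are exactly linear
```

Passing `y` as a single column is why the predictions come back with shape (N, 1); passing a 1D array of shape (N,) instead would give 1D predictions.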

Regression: Evaluation Step
Goal: Assess quality of predictions
• Input: Pairs of predicted and “true” responses $\{\hat{y}(x_n), y_n\}_{n=1}^N$
• Output: Scalar measure of error/quality
  • Measuring Error: lower is better
  • Measuring Quality: higher is better

Visualizing errors

Regression: Evaluation Metrics
• mean squared error: $\frac{1}{N} \sum_{n=1}^N (y_n - \hat{y}_n)^2$
• mean absolute error: $\frac{1}{N} \sum_{n=1}^N |y_n - \hat{y}_n|$
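The two formulas translate directly into NumPy. This is a minimal sketch; the function names and the example numbers are made up for illustration.

```python
import numpy as np

def mean_squared_error(y_N, yhat_N):
    """Average of squared differences between true and predicted responses."""
    y_N = np.asarray(y_N, dtype=float)
    yhat_N = np.asarray(yhat_N, dtype=float)
    return np.mean((y_N - yhat_N) ** 2)

def mean_absolute_error(y_N, yhat_N):
    """Average of absolute differences between true and predicted responses."""
    y_N = np.asarray(y_N, dtype=float)
    yhat_N = np.asarray(yhat_N, dtype=float)
    return np.mean(np.abs(y_N - yhat_N))

y_N = [3.0, -0.5, 2.0, 7.0]
yhat_N = [2.5, 0.0, 2.0, 8.0]
# Per-example errors are 0.5, -0.5, 0.0, -1.0
print(mean_squared_error(y_N, yhat_N))   # 0.375
print(mean_absolute_error(y_N, yhat_N))  # 0.5
```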

Regression: Evaluation Metrics
https://scikit-learn.org/stable/modules/model_evaluation.html
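The scikit-learn page linked above documents ready-made versions of these metrics in `sklearn.metrics`. The sketch below uses `mean_squared_error` and `mean_absolute_error` on made-up numbers chosen so both prediction sets have the same total absolute error: MAE scores them identically, while MSE, because it squares each error, penalizes the single large miss four times as much.

```python
import numpy as np
from sklearn.metrics import mean_absolute_error, mean_squared_error

y_N = np.array([1.0, 2.0, 3.0, 4.0])
# Two made-up prediction sets, each with total absolute error 4.0
yhat_spread_N = np.array([2.0, 3.0, 4.0, 5.0])   # four errors of size 1
yhat_outlier_N = np.array([1.0, 2.0, 3.0, 8.0])  # one error of size 4

print(mean_absolute_error(y_N, yhat_spread_N), mean_absolute_error(y_N, yhat_outlier_N))  # 1.0 1.0
print(mean_squared_error(y_N, yhat_spread_N), mean_squared_error(y_N, yhat_outlier_N))    # 1.0 4.0
```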

Linear Regression
Parameters:
• weight vector $w = [w_1, w_2, \ldots, w_f, \ldots, w_F]$
• bias scalar $b$
Prediction: $\hat{y}(x_i) \triangleq \sum_{f=1}^F w_f x_{if} + b$
Training: find weights and bias that minimize error
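The prediction rule is a weighted sum of features plus the bias, which for a whole dataset becomes one matrix-vector product. A minimal NumPy sketch; `predict_linear` and the parameter values are hypothetical.

```python
import numpy as np

def predict_linear(x_NF, w_F, b):
    """Return yhat_n = sum_f w_f * x_nf + b for every row n of x_NF."""
    x_NF = np.asarray(x_NF, dtype=float)
    w_F = np.asarray(w_F, dtype=float)
    # (N, F) @ (F,) gives one weighted sum per row; b is added to each
    return x_NF @ w_F + b

# Hypothetical parameters and inputs (F = 2)
w_F = np.array([2.0, -1.0])
b = 0.5
x_NF = np.array([[1.0, 3.0],
                 [0.0, 0.0],
                 [2.0, 1.0]])
print(predict_linear(x_NF, w_F, b))  # values -0.5, 0.5, 3.5
```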

Linear Regression predictions as a function of one feature are linear

Linear Regression: Training
Goal: Find the weight coefficients w and intercept/bias b that minimize the mean squared error on the N training examples
Optimization problem: “Least Squares”
$$\min_{w, b} \sum_{n=1}^N \left( y_n - \hat{y}(x_n, w, b) \right)^2$$

Linear Regression: Training
Optimization problem: “Least Squares”
$$\min_{w, b} \sum_{n=1}^N \left( y_n - \hat{y}(x_n, w, b) \right)^2$$
An exact solution for optimal values of w, b exists!
Formula with many features ($F \geq 1$):
$$\tilde{X} = \begin{bmatrix} x_{11} & \ldots & x_{1F} & 1 \\ x_{21} & \ldots & x_{2F} & 1 \\ \vdots & & \vdots & \vdots \\ x_{N1} & \ldots & x_{NF} & 1 \end{bmatrix}$$
$$[w_1 \ \ldots \ w_F \ \ b]^T = (\tilde{X}^T \tilde{X})^{-1} \tilde{X}^T y$$
(assuming $\tilde{X}^T \tilde{X}$ is invertible)
We will cover and derive this in the next class
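A NumPy sketch of the closed-form formula. It builds $\tilde{X}$ by appending a column of ones, then solves the linear system $(\tilde{X}^T \tilde{X})\theta = \tilde{X}^T y$ with `np.linalg.solve` rather than forming the inverse explicitly; this gives the same answer when $\tilde{X}^T \tilde{X}$ is invertible and is generally the numerically safer choice. The function name `fit_least_squares` and the data are made up for illustration.

```python
import numpy as np

def fit_least_squares(x_NF, y_N):
    """Return (w_F, b) minimizing sum_n (y_n - (w . x_n + b))^2."""
    x_NF = np.asarray(x_NF, dtype=float)
    y_N = np.asarray(y_N, dtype=float)
    N = x_NF.shape[0]
    # X-tilde: append a column of ones so the bias is learned like a weight
    xtilde_NG = np.hstack([x_NF, np.ones((N, 1))])
    # Solve (X~^T X~) theta = X~^T y; theta = [w_1, ..., w_F, b]
    theta_G = np.linalg.solve(xtilde_NG.T @ xtilde_NG, xtilde_NG.T @ y_N)
    return theta_G[:-1], theta_G[-1]

# Made-up data generated exactly from y = 3*x1 - 2*x2 + 1
x_NF = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [2.0, 3.0]])
y_N = 3.0 * x_NF[:, 0] - 2.0 * x_NF[:, 1] + 1.0

w_F, b = fit_least_squares(x_NF, y_N)
print(w_F, b)  # recovers w close to [3, -2] and b close to 1
```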

Nearest Neighbor Regression
Parameters: none
Prediction:
- find “nearest” training vector to given input x
- predict y value of this neighbor
Training: none needed (use training data as lookup table)
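A minimal sketch of 1-nearest-neighbor prediction. The slide leaves “nearest” open; this sketch assumes Euclidean distance. “Training” is just keeping the arrays around, and prediction copies the label of the closest stored row. `predict_1nn` and the data are hypothetical.

```python
import numpy as np

def predict_1nn(x_train_NF, y_train_N, x_query_QF):
    """For each query row, return the label of the closest training row (Euclidean)."""
    x_train_NF = np.asarray(x_train_NF, dtype=float)
    y_train_N = np.asarray(y_train_N, dtype=float)
    x_query_QF = np.asarray(x_query_QF, dtype=float)
    # dist_QN[q, n] = Euclidean distance between query q and training row n
    diff_QNF = x_query_QF[:, np.newaxis, :] - x_train_NF[np.newaxis, :, :]
    dist_QN = np.sqrt(np.sum(diff_QNF ** 2, axis=2))
    # Index of the closest training row per query (first one wins on ties)
    nearest_Q = np.argmin(dist_QN, axis=1)
    return y_train_N[nearest_Q]

x_train_NF = np.array([[0.0], [1.0], [4.0]])
y_train_N = np.array([10.0, 20.0, 30.0])
yhat_Q = predict_1nn(x_train_NF, y_train_N, [[0.2], [2.9], [5.0]])
print(yhat_Q)  # values 10, 30, 30
```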

K nearest neighbor regression
Parameters: K: number of neighbors
Prediction:
- find K “nearest” training vectors to input x
- predict average y of this neighborhood
Training: none needed (use training data as lookup table)
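Extending the lookup to K neighbors means sorting distances and averaging the K closest labels. This sketch again assumes Euclidean distance and uniform averaging, and cross-checks against scikit-learn's `KNeighborsRegressor`, whose defaults (uniform weights, Minkowski distance with p=2) match that choice. `predict_knn` and the data are hypothetical; the data avoid distance ties, where neighbor order could differ between implementations.

```python
import numpy as np
from sklearn.neighbors import KNeighborsRegressor

def predict_knn(x_train_NF, y_train_N, x_query_QF, K):
    """Average the labels of the K training rows closest (Euclidean) to each query."""
    x_train_NF = np.asarray(x_train_NF, dtype=float)
    y_train_N = np.asarray(y_train_N, dtype=float)
    x_query_QF = np.asarray(x_query_QF, dtype=float)
    # dist_QN[q, n] = Euclidean distance between query q and training row n
    diff_QNF = x_query_QF[:, np.newaxis, :] - x_train_NF[np.newaxis, :, :]
    dist_QN = np.sqrt(np.sum(diff_QNF ** 2, axis=2))
    # Rank training rows by distance for each query and keep the first K
    nbr_QK = np.argsort(dist_QN, axis=1, kind="stable")[:, :K]
    # Mean label within each neighborhood
    return y_train_N[nbr_QK].mean(axis=1)

x_train_NF = np.array([[0.0], [1.0], [2.0], [10.0]])
y_train_N = np.array([0.0, 1.0, 2.0, 10.0])
x_query_QF = np.array([[1.2], [9.0]])

yhat_Q = predict_knn(x_train_NF, y_train_N, x_query_QF, K=2)  # values 1.5 and 6.0

# Cross-check against scikit-learn with the same K
sk_model = KNeighborsRegressor(n_neighbors=2).fit(x_train_NF, y_train_N)
print(yhat_Q, sk_model.predict(x_query_QF))
```

Setting `K=1` reduces this to the single-nearest-neighbor rule; larger K averages over more points and smooths the piecewise constant prediction.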

Nearest Neighbor predictions as a function of one feature are piecewise constant

Distance metrics
• Euclidean: $\mathrm{dist}(x, x') = \sqrt{\sum_{f=1}^F (x_f - x'_f)^2}$
• Manhattan: $\mathrm{dist}(x, x') = \sum_{f=1}^F |x_f - x'_f|$
• Many others are possible
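Both metrics in NumPy, plus a small made-up example showing that the choice of metric can change which training point counts as the nearest neighbor. Function names and points are hypothetical.

```python
import numpy as np

def euclidean_dist(x_F, xp_F):
    """Square root of the sum of squared per-feature differences."""
    diff_F = np.asarray(x_F, dtype=float) - np.asarray(xp_F, dtype=float)
    return np.sqrt(np.sum(diff_F ** 2))

def manhattan_dist(x_F, xp_F):
    """Sum of absolute per-feature differences."""
    diff_F = np.asarray(x_F, dtype=float) - np.asarray(xp_F, dtype=float)
    return np.sum(np.abs(diff_F))

x_F = [0.0, 0.0]
print(euclidean_dist(x_F, [3.0, 4.0]), manhattan_dist(x_F, [3.0, 4.0]))  # 5.0 7.0

# Two candidate neighbors: which one is "nearest" depends on the metric
a_F, b_F = [3.0, 3.0], [0.0, 5.0]
print(euclidean_dist(x_F, a_F) < euclidean_dist(x_F, b_F))  # True: a is nearer
print(manhattan_dist(x_F, a_F) < manhattan_dist(x_F, b_F))  # False: b is nearer
```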

Error vs Model Complexity
Credit: Fig 2.4 ESL textbook

Summary of Methods

| Method | Function class flexibility | Knobs to tune | How to interpret? |
|---|---|---|---|
| Linear Regression | Linear | Penalize weights (more next week) | Inspect weights |
| K Nearest Neighbors Regression | Piecewise constant | Number of neighbors; distance metric | Inspect neighbors |

Objectives for Today (day 02)
• Understand 3 steps of a regression task
  • Training
  • Prediction
  • Evaluation
    • Mean Squared Error
    • Mean Absolute Error
• Try two methods (focus: prediction and evaluation)
  • Linear Regression
  • K-Nearest Neighbors
• Chosen performance metric should be integrated at training
• Mean squared error is “easy”, but not always the right thing to do

Breakout! Lab for day02
