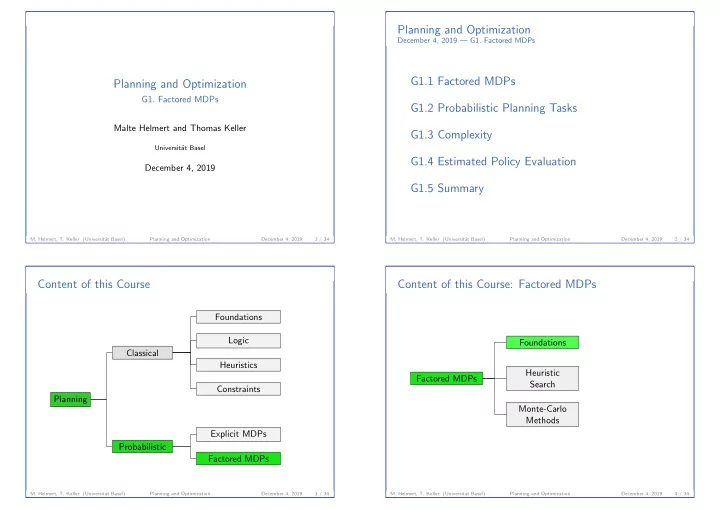

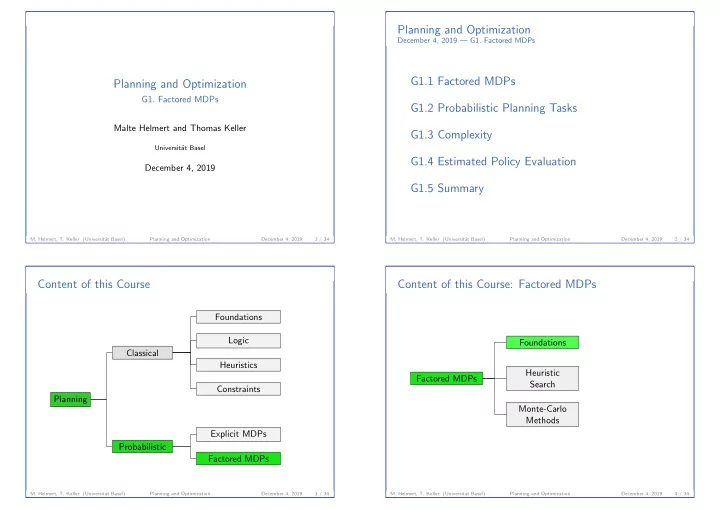

Planning and Optimization
G1. Factored MDPs

Malte Helmert and Thomas Keller
Universität Basel
December 4, 2019

G1. Factored MDPs

- G1.1 Factored MDPs
- G1.2 Probabilistic Planning Tasks
- G1.3 Complexity
- G1.4 Estimated Policy Evaluation
- G1.5 Summary

[Figure: Content of this Course. Overview diagram of the course topics (Foundations, Logic, Heuristic Search, Constraints, Heuristics, Classical Planning, and Probabilistic Planning with Explicit MDPs, Factored MDPs and Monte-Carlo Methods), shown a second time with Factored MDPs highlighted.]

G1.1 Factored MDPs

Factored MDPs

We would like to specify MDPs and SSPs with large state spaces.

In classical planning, we introduced planning tasks to represent large transition systems compactly:

- represent aspects of the world in terms of state variables
- states are valuations of the state variables
- $n$ binary state variables induce $2^n$ states (in general, $\prod_{v \in V} |\mathrm{dom}(v)|$ states), so this is exponentially more compact than an "explicit" representation

Finite-Domain State Variables

Definition (Finite-Domain State Variable). A finite-domain state variable is a symbol $v$ with an associated domain $\mathrm{dom}(v)$, which is a finite non-empty set of values.

Let $V$ be a finite set of finite-domain state variables.

A state $s$ over $V$ is an assignment $s : V \to \bigcup_{v \in V} \mathrm{dom}(v)$ such that $s(v) \in \mathrm{dom}(v)$ for all $v \in V$.

A formula over $V$ is a propositional logic formula whose atomic propositions are of the form $v = d$ where $v \in V$ and $d \in \mathrm{dom}(v)$.

For simplicity, we only consider finite-domain state variables here.

Syntax of Operators

Definition (SSP and MDP Operators). An SSP operator $o$ over state variables $V$ is an object with three properties:

- a precondition $\mathit{pre}(o)$, a logical formula over $V$
- an effect $\mathit{eff}(o)$ over $V$, defined below
- a cost $\mathit{cost}(o) \in \mathbb{R}^+_0$

An MDP operator $o$ over state variables $V$ is an object with three properties:

- a precondition $\mathit{pre}(o)$, a logical formula over $V$
- an effect $\mathit{eff}(o)$ over $V$, defined below
- a reward $\mathit{reward}(o)$ over $V$, defined below

Whenever we just say operator (without SSP or MDP), both kinds of operators are allowed.
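As a minimal sketch of these definitions (not code from the course), the following Python encodes a hypothetical variable set $V$ as a dict from variable names to domains, enumerates all states over $V$, and decides $s \models \chi$ for formulas built from atoms $v = d$. The variable names, domains and the nested-tuple formula encoding are assumptions chosen for illustration.

```python
from itertools import product
from math import prod

# Hypothetical variables V with their finite, non-empty domains dom(v).
V = {
    "robot": ("left", "middle", "right"),
    "holding": ("yes", "no"),
    "door": ("open", "closed"),
}

def all_states(variables):
    """Yield every state s over V, i.e. every assignment with s(v) in dom(v)."""
    names = sorted(variables)
    for values in product(*(variables[v] for v in names)):
        yield dict(zip(names, values))

def holds(state, formula):
    """Decide s |= formula for formulas encoded as nested tuples:
    ("atom", v, d) for v = d, ("not", f), ("and", f1, ...), ("or", f1, ...)."""
    kind = formula[0]
    if kind == "atom":
        _, v, d = formula
        return state[v] == d
    if kind == "not":
        return not holds(state, formula[1])
    if kind == "and":
        return all(holds(state, f) for f in formula[1:])
    if kind == "or":
        return any(holds(state, f) for f in formula[1:])
    raise ValueError(f"unknown formula type: {kind}")

states = list(all_states(V))
print(len(states), prod(len(dom) for dom in V.values()))  # 12 12

# Hypothetical precondition: robot = middle and not door = closed
pre = ("and", ("atom", "robot", "middle"), ("not", ("atom", "door", "closed")))
print(sum(holds(s, pre) for s in states))  # 2
```

With domain sizes 3, 2 and 2 there are $3 \cdot 2 \cdot 2 = 12$ states, which is why the state count is the product of the domain sizes rather than $2^n$ once domains are not binary.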

Syntax of Effects

Definition (Effect). Effects over state variables $V$ are inductively defined as follows:

- If $v \in V$ is a finite-domain state variable and $d \in \mathrm{dom}(v)$, then $v := d$ is an effect (atomic effect).
- If $e_1, \dots, e_n$ are effects, then $(e_1 \land \dots \land e_n)$ is an effect (conjunctive effect). The special case with $n = 0$ is the empty effect $\top$.
- If $e_1, \dots, e_n$ are effects and $p_1, \dots, p_n \in [0, 1]$ such that $\sum_{i=1}^{n} p_i = 1$, then $(p_1 : e_1 \mid \dots \mid p_n : e_n)$ is an effect (probabilistic effect).

Note: To simplify definitions, conditional effects are omitted.

Effects: Intuition

- Atomic effects can be understood as assignments that update the value of a state variable.
- A conjunctive effect $e = (e_1 \land \dots \land e_n)$ means that all subeffects $e_1, \dots, e_n$ take place simultaneously.
- A probabilistic effect $e = (p_1 : e_1 \mid \dots \mid p_n : e_n)$ means that exactly one subeffect $e_i \in \{e_1, \dots, e_n\}$ takes place, with probability $p_i$.

Semantics of Effects

Definition. The effect set $[e]$ of an effect $e$ is a set of pairs $\langle p, w \rangle$, where $p$ is a probability $0 < p \le 1$ and $w$ is a partial assignment. The effect set $[e]$ is obtained recursively as

$$
\begin{aligned}
[v := d] &= \{\langle 1.0, \{v \mapsto d\} \rangle\}, \\
[e \land e'] &= \biguplus_{\langle p, w \rangle \in [e]} \; \biguplus_{\langle p', w' \rangle \in [e']} \{\langle p \cdot p', w \cup w' \rangle\}, \\
[p_1 : e_1 \mid \dots \mid p_n : e_n] &= \biguplus_{i=1}^{n} \{\langle p_i \cdot p, w \rangle \mid \langle p, w \rangle \in [e_i]\},
\end{aligned}
$$

where $\biguplus$ is like $\bigcup$ but merges $\langle p, w' \rangle$ and $\langle p', w' \rangle$ to $\langle p + p', w' \rangle$.

Semantics of Operators

Definition (Applicable, Outcomes). Let $V$ be a set of finite-domain state variables. Let $s$ be a state over $V$, and let $o$ be an operator over $V$.

Operator $o$ is applicable in $s$ if $s \models \mathit{pre}(o)$.

The outcomes of applying an operator $o$ in $s$, written $s[\![o]\!]$, are

$$
s[\![o]\!] = \biguplus_{\langle p, w \rangle \in [\mathit{eff}(o)]} \{\langle p, s'_w \rangle\}
$$

with $s'_w(v) = d$ if $(v \mapsto d) \in w$ and $s'_w(v) = s(v)$ otherwise, where $\biguplus$ is like $\bigcup$ but merges $\langle p, s' \rangle$ and $\langle p', s' \rangle$ to $\langle p + p', s' \rangle$.
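The recursive effect sets and the merging union can be sketched in Python as follows. This is an illustrative sketch, assuming a hypothetical encoding of effects as nested tuples (`("assign", v, d)`, `("and", e1, ..., en)`, `("prob", (p1, e1), ..., (pn, en))`) and of partial assignments as frozensets of `(v, d)` pairs; `Fraction` keeps probabilities exact so that merged pairs compare cleanly. The $n$-ary conjunction is folded from the binary rule, and treating the empty effect $\top$ as $\{\langle 1, \emptyset \rangle\}$ is an assumption consistent with that rule.

```python
from fractions import Fraction

def merge_union(pairs):
    """Merging union: pairs <p, x> and <p', x> with equal x become <p + p', x>.
    Returns a dict x -> probability; zero-probability pairs are dropped (0 < p)."""
    merged = {}
    for p, x in pairs:
        merged[x] = merged.get(x, Fraction(0)) + p
    return {x: p for x, p in merged.items() if p > 0}

def effect_set(e):
    """[e] as a dict mapping partial assignments (frozensets of (v, d)) to probabilities."""
    kind = e[0]
    if kind == "assign":  # [v := d] = {<1, {v -> d}>}
        _, v, d = e
        return {frozenset({(v, d)}): Fraction(1)}
    if kind == "and":  # fold the binary rule; ("and",) is the empty effect
        result = {frozenset(): Fraction(1)}
        for sub in e[1:]:
            sub_set = effect_set(sub)
            # w | w2 assumes sub-effects never assign conflicting values to a variable
            result = merge_union(
                (p * q, w | w2) for w, p in result.items() for w2, q in sub_set.items()
            )
        return result
    if kind == "prob":  # [p1: e1 | ... | pn: en]
        return merge_union(
            (Fraction(pi) * p, w) for pi, ei in e[1:] for w, p in effect_set(ei).items()
        )
    raise ValueError(f"unknown effect type: {kind}")

def outcomes(state, eff):
    """s[o] for an applicable operator with effect eff: overwrite s with each w,
    then merge pairs that lead to the same successor state s'_w."""
    return merge_union(
        (p, frozenset({**state, **dict(w)}.items())) for w, p in effect_set(eff).items()
    )

s = {"robot": "left", "door": "closed"}
move = ("prob", (Fraction(4, 5), ("assign", "robot", "middle")), (Fraction(1, 5), ("and",)))
toggle = ("prob", (Fraction(1, 2), ("assign", "door", "open")),
          (Fraction(1, 2), ("assign", "door", "closed")))
res = outcomes(s, ("and", move, toggle))  # four successors: 2/5, 2/5, 1/10, 1/10

# Both branches of this hypothetical effect lead back to s, so they merge into one outcome.
flaky = ("prob", (Fraction(1, 2), ("assign", "door", "closed")), (Fraction(1, 2), ("and",)))
merged = outcomes(s, flaky)
```

The second example shows why $\biguplus$ is needed for outcomes: two different partial assignments can produce the same successor state, and their probabilities must be added.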

Rewards

Definition (Reward). A reward over state variables $V$ is inductively defined as follows:

- $c \in \mathbb{R}$ is a reward.
- If $\chi$ is a propositional formula over $V$, then $[\chi]$ is a reward.
- If $r$ and $r'$ are rewards, then $r + r'$, $r - r'$, $r \cdot r'$ and $\frac{r}{r'}$ are rewards.

Applying an MDP operator $o$ in $s$ induces the reward $\mathit{reward}(o)(s)$, i.e., the value of the arithmetic function $\mathit{reward}(o)$ where all occurrences of $v \in V$ are replaced with $s(v)$.

G1.2 Probabilistic Planning Tasks

Probabilistic Planning Tasks

Definition (SSP and MDP Planning Task). An SSP planning task is a 4-tuple $\Pi = \langle V, I, O, \gamma \rangle$ where

- $V$ is a finite set of finite-domain state variables,
- $I$ is a valuation over $V$ called the initial state,
- $O$ is a finite set of SSP operators over $V$, and
- $\gamma$ is a formula over $V$ called the goal.

An MDP planning task is a 4-tuple $\Pi = \langle V, I, O, d \rangle$ where

- $V$ is a finite set of finite-domain state variables,
- $I$ is a valuation over $V$ called the initial state,
- $O$ is a finite set of MDP operators over $V$, and
- $d \in (0, 1)$ is the discount factor.

A probabilistic planning task is an SSP or MDP planning task.

Mapping SSP Planning Tasks to SSPs

Definition (SSP Induced by an SSP Planning Task). The SSP planning task $\Pi = \langle V, I, O, \gamma \rangle$ induces the SSP $\mathcal{T} = \langle S, L, c, T, s_0, S_\star \rangle$, where

- $S$ is the set of all states over $V$,
- $L$ is the set of operators $O$,
- $c(o) = \mathit{cost}(o)$ for all $o \in O$,
- $T(s, o, s') = p$ if $o$ is applicable in $s$ and $\langle p, s' \rangle \in s[\![o]\!]$, and $T(s, o, s') = 0$ otherwise,
- $s_0 = I$, and
- $S_\star = \{s \in S \mid s \models \gamma\}$.
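The inductive reward definition can be read as an arithmetic expression tree that is evaluated in a state. The sketch below is hypothetical in its encoding (nested tuples) and its example reward, and it assumes that a formula $\chi$ is given as a Python predicate on states and that $[\chi]$ evaluates to $1$ if $s \models \chi$ and to $0$ otherwise (the usual Iverson-bracket reading).

```python
import operator

# Binary arithmetic operators allowed in rewards: r + r', r - r', r * r', r / r'.
ARITH = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}

def eval_reward(r, state):
    """Evaluate reward(o)(s) for a reward encoded as a nested tuple."""
    kind = r[0]
    if kind == "const":  # c in R
        return r[1]
    if kind == "ind":  # [chi], assumed to be 1 if s |= chi and 0 otherwise
        return 1 if r[1](state) else 0
    if kind in ARITH:  # note: "/" raises ZeroDivisionError if r' evaluates to 0
        return ARITH[kind](eval_reward(r[1], state), eval_reward(r[2], state))
    raise ValueError(f"unknown reward type: {kind}")

def delivered(s):
    """Hypothetical formula chi: door = open and holding = yes."""
    return s["door"] == "open" and s["holding"] == "yes"

# Hypothetical reward: 10 * [door = open and holding = yes] - 1
reward = ("-", ("*", ("const", 10), ("ind", delivered)), ("const", 1))

print(eval_reward(reward, {"door": "open", "holding": "yes"}))    # 9
print(eval_reward(reward, {"door": "closed", "holding": "yes"}))  # -1
```

Because state variables enter a reward only through formulas $[\chi]$, the same expression yields a different number in every state, which is what $\mathit{reward}(o)(s)$ denotes.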

Mapping MDP Planning Tasks to MDPs

Definition (MDP Induced by an MDP Planning Task). The MDP planning task $\Pi = \langle V, I, O, d \rangle$ induces the MDP $\mathcal{T} = \langle S, L, R, T, s_0, \gamma \rangle$, where

- $S$ is the set of all states over $V$,
- $L$ is the set of operators $O$,
- $R(s, o) = \mathit{reward}(o)(s)$ for all $o \in O$ and $s \in S$,
- $T(s, o, s') = p$ if $o$ is applicable in $s$ and $\langle p, s' \rangle \in s[\![o]\!]$, and $T(s, o, s') = 0$ otherwise,
- $s_0 = I$, and
- $\gamma = d$.

G1.3 Complexity

Complexity of Probabilistic Planning

Definition (Policy Existence). Policy existence (PolicyEx) is the following decision problem:

- Given: SSP planning task $\Pi$
- Question: Is there a proper policy for $\Pi$?

Membership in EXP

Theorem. PolicyEx $\in$ EXP.

Proof. The number of states in an SSP planning task is exponential in the number of variables. The induced SSP can be solved in time polynomial in $|S| \cdot |L|$ via linear programming and hence in time exponential in the input size.
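The proof relies on linear programming. As a different, swapped-in technique: whether a proper policy exists depends only on which successors have nonzero probability, so it can also be decided by the standard graph-based fixed-point computation for almost-sure reachability, again in time polynomial in the size of the explicit SSP and hence exponential in the size of the factored task. The minimal sketch below reads a proper policy as one that reaches a goal state from $s_0$ with probability 1, and assumes the induced SSP is given explicitly as a dict `succ` mapping each state to one successor set $\{s' \mid T(s, o, s') > 0\}$ per applicable operator $o$; all state names are hypothetical.

```python
def proper_policy_exists(states, succ, goals, s0):
    """Decide whether some policy reaches a goal from s0 with probability 1.

    succ[s] lists, for each operator applicable in s, the set of states s'
    with T(s, o, s') > 0. Goal states are never discarded.
    """
    alive = set(states)  # candidates from which the goal is almost surely reachable
    while True:
        # Inner least fixed point: states in `alive` that can reach a goal
        # using only operators whose successors all stay inside `alive`.
        reach = set(goals) & alive
        changed = True
        while changed:
            changed = False
            for s in alive - reach:
                if any(nxt <= alive and nxt & reach for nxt in succ.get(s, [])):
                    reach.add(s)
                    changed = True
        if reach == alive:  # outer greatest fixed point reached
            return s0 in alive
        alive = reach  # discard states that may be forced out of `alive`

states = {"s0", "s1", "dead", "goal"}
# "dead" has no applicable operator; s0 has a risky and a safe operator.
succ = {"s0": [{"s1", "dead"}, {"s1"}], "s1": [{"goal", "s0"}]}
print(proper_policy_exists(states, succ, {"goal"}, "s0"))  # True

succ_risky = {"s0": [{"s1", "dead"}], "s1": [{"goal", "s0"}]}
print(proper_policy_exists(states, succ_risky, {"goal"}, "s0"))  # False
```

In the first example the policy that always picks the safe operator in `s0` loops between `s0` and `s1` but reaches `goal` with probability 1; in the second, every policy risks entering `dead`.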
