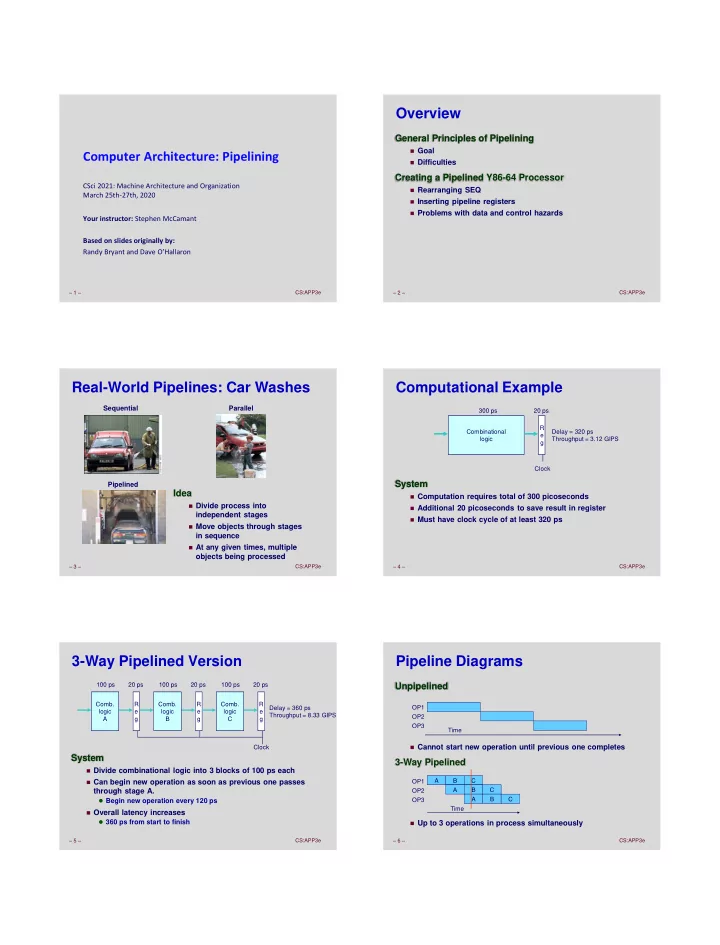

Computer Architecture: Pipelining

CSci 2021: Machine Architecture and Organization, March 25th-27th, 2020. Your instructor: Stephen McCamant. Based on slides originally by Randy Bryant and Dave O’Hallaron (CS:APP3e).

Overview

- General Principles of Pipelining
  - Goal
  - Difficulties
- Creating a Pipelined Y86-64 Processor
  - Rearranging SEQ
  - Inserting pipeline registers
  - Problems with data and control hazards

Real-World Pipelines: Car Washes

[Figure: three car-wash layouts labeled Sequential, Parallel, and Pipelined.]

Idea:

- Divide process into independent stages
- Move objects through stages in sequence
- At any given time, multiple objects are being processed

Computational Example

[Figure: 300 ps of combinational logic followed by a 20 ps register driven by the clock. Delay = 320 ps, Throughput = 3.12 GIPS.]

System:

- Computation requires a total of 300 picoseconds
- Additional 20 picoseconds to save the result in the register
- Must have a clock cycle of at least 320 ps

3-Way Pipelined Version

[Figure: three blocks of combinational logic (A, B, C) of 100 ps each, each followed by a 20 ps register driven by the clock. Delay = 360 ps, Throughput = 8.33 GIPS.]

System:

- Divide combinational logic into 3 blocks of 100 ps each
- Can begin a new operation as soon as the previous one passes through stage A
  - Begin a new operation every 120 ps
- Overall latency increases
  - 360 ps from start to finish

Pipeline Diagrams

Unpipelined:

[Figure: OP1, OP2, and OP3 drawn one after another along the time axis.]

- Cannot start a new operation until the previous one completes

3-Way Pipelined:

[Figure: OP1, OP2, and OP3 each passing through stages A, B, C, with each operation starting one stage later than the previous one.]

- Up to 3 operations in process simultaneously
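The delay and throughput figures on the two slides above can be reproduced with a few lines of arithmetic. The following is a minimal sketch, not part of the original slides; the function name `pipeline_timing` and its parameters are hypothetical, and it assumes one operation completes per clock cycle once the pipeline is full.

```python
def pipeline_timing(stage_delays_ps, register_delay_ps=20):
    """Return (clock_ps, latency_ps, throughput_gips) for a simple pipeline.

    stage_delays_ps: combinational delay of each stage, in picoseconds.
    register_delay_ps: time to load a pipeline register (20 ps on the slides).
    """
    # The clock must be long enough for the slowest stage plus its register.
    clock_ps = max(stage_delays_ps) + register_delay_ps
    # Every operation spends one full clock cycle in each stage.
    latency_ps = clock_ps * len(stage_delays_ps)
    # One operation finishes per cycle; 1 op per ps would be 1000 GIPS.
    throughput_gips = 1000.0 / clock_ps
    return clock_ps, latency_ps, throughput_gips


for name, stages in [("unpipelined", [300]), ("3-way pipelined", [100, 100, 100])]:
    clock, latency, gips = pipeline_timing(stages)
    print(f"{name}: clock {clock} ps, latency {latency} ps, {gips:.3f} GIPS")
```

Pipelining raises throughput because the clock period shrinks, while latency grows because each operation now pays the register delay three times instead of once.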

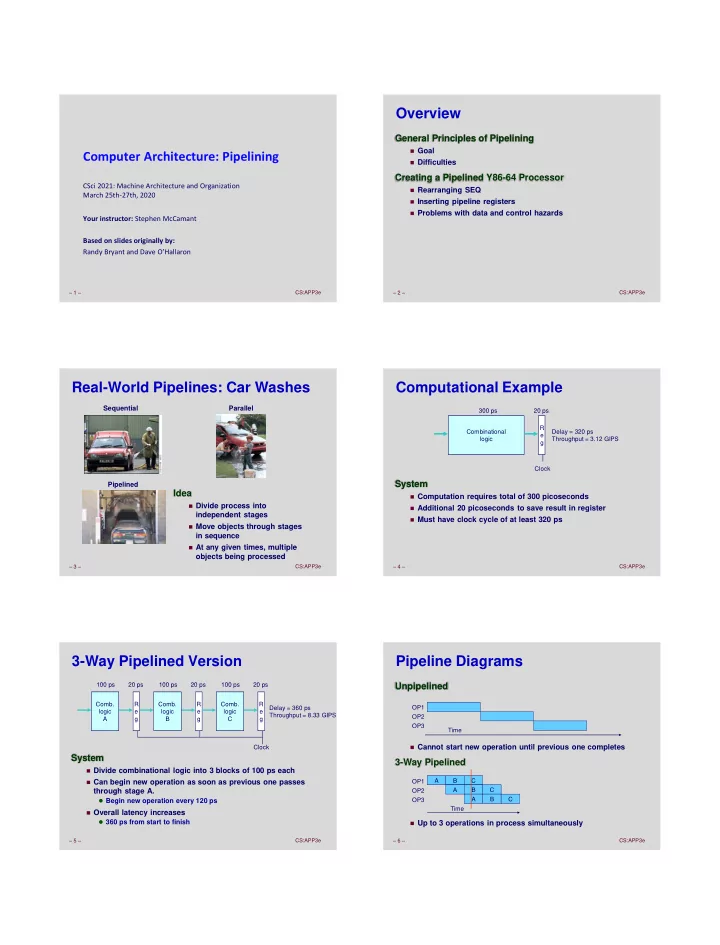

Operating a Pipeline

[Figure: clock waveform and snapshots of the three-stage pipeline at times 239, 241, 300, and 359 ps, with OP1, OP2, and OP3 passing through stages A, B, and C along a time axis marked 0, 120, 240, 360, 480, 640.]

Limitations: Nonuniform Delays

[Figure: three stages A, B, and C with combinational delays of 50 ps, 150 ps, and 100 ps, each followed by a 20 ps register. Delay = 510 ps, Throughput = 5.88 GIPS.]

- Throughput is limited by the slowest stage
- Other stages sit idle for much of the time
- Challenging to partition the system into balanced stages

Limitations: Register Overhead

[Figure: six stages of 50 ps combinational logic, each followed by a 20 ps register. Delay = 420 ps, Throughput = 14.29 GIPS.]

- As we try to deepen the pipeline, the overhead of loading registers becomes more significant
- Percentage of clock cycle spent loading the register:
  - 1-stage pipeline: 6.25%
  - 3-stage pipeline: 16.67%
  - 6-stage pipeline: 28.57%
- High speeds of modern processor designs are obtained through very deep pipelining

Data Dependencies

[Figure: a single block of combinational logic whose register output feeds back into its input; OP1, OP2, and OP3 run one after another along the time axis.]

System:

- Each operation depends on the result from the preceding one

Data Hazards

[Figure: the 3-way pipelined version (stages A, B, C) with the output fed back to the input; OP1 to OP4 overlap in time.]

- Result does not feed back around in time for the next operation
- Pipelining has changed the behavior of the system

Data Dependencies in Processors

1. `irmovq $50, %rax`
2. `addq %rax, %rbx`
3. `mrmovq 100(%rbx), %rdx`

- Result from one instruction is used as an operand for another
  - Read-after-write (RAW) dependency
- Very common in actual programs
- Must make sure our pipeline handles these properly
  - Get correct results
  - Minimize performance impact
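The two limitation slides above come down to simple ratios. As a rough sketch (the helper names `register_overhead` and `idle_time_ps` are hypothetical, and the first assumes the 300 ps of logic splits evenly across stages), the percentages and the idle time of the unbalanced pipeline can be checked like this:

```python
def register_overhead(total_logic_ps, stages, register_delay_ps=20):
    """Fraction of each clock cycle spent loading the pipeline register.

    Assumes the combinational logic is divided evenly among the stages.
    """
    clock_ps = total_logic_ps / stages + register_delay_ps
    return register_delay_ps / clock_ps


def idle_time_ps(stage_delays_ps):
    """Time each stage waits per cycle because the slowest stage sets the clock."""
    slowest = max(stage_delays_ps)
    return [slowest - delay for delay in stage_delays_ps]


# Register overhead for the 1-, 3-, and 6-stage designs from the slides.
for stages in (1, 3, 6):
    print(f"{stages}-stage pipeline: {register_overhead(300, stages):.2%}")

# Nonuniform delays: stages of 50, 150, and 100 ps.
print("idle time per stage (ps):", idle_time_ps([50, 150, 100]))
```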
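To make the read-after-write dependencies in the three-instruction example concrete, here is a small illustrative sketch. The tuple encoding of each instruction (text, registers read, registers written) is an assumption made for this example and is not how a real Y86-64 tool represents instructions; condition codes are ignored.

```python
# Each entry: (instruction text, registers read, registers written).
# The read/write sets are filled in by hand for this example.
program = [
    ("irmovq $50, %rax", set(), {"%rax"}),
    ("addq %rax, %rbx", {"%rax", "%rbx"}, {"%rbx"}),
    ("mrmovq 100(%rbx), %rdx", {"%rbx"}, {"%rdx"}),
]


def raw_dependencies(instrs):
    """Return (writer, reader, register) triples using 1-based instruction numbers."""
    deps = []
    for j, (_, reads, _) in enumerate(instrs):
        for reg in sorted(reads):
            # Walk backwards to find the most recent earlier writer of reg.
            for i in range(j - 1, -1, -1):
                if reg in instrs[i][2]:
                    deps.append((i + 1, j + 1, reg))
                    break
    return deps


for writer, reader, reg in raw_dependencies(program):
    print(f"instruction {reader} reads {reg} written by instruction {writer}")
```

In this example each instruction depends on the one immediately before it, which is the hardest case for a pipeline because the result is needed before the earlier instruction has written it back.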

Exercise Break: Instruction Stages

The following operations are listed out of order:

- valA ← R[%rsp]
- valM ← M₈[valA]
- PC ← valM
- valB ← R[%rsp]
- valE ← valB + 8
- valP ← PC + 1
- icode:ifun ← M₁[PC]
- R[%rsp] ← valE

What instruction is this? Answer: `ret`

SEQ Hardware

[Figure: SEQ hardware with stages labeled Fetch, Decode, Execute, Memory, Write-back, and PC update.]

- Stages occur in sequence
- One operation in process at a time

SEQ+ Hardware

- Still sequential implementation
- Reorder PC stage to put it at the beginning

PC Stage:

- Task is to select the PC for the current instruction
- Based on results computed by the previous instruction

Processor State:

- PC is no longer stored in a register
- But the PC can be determined from other stored information

Adding Pipeline Registers

[Figure: hardware diagram with the PC, Fetch, Decode, Execute, Memory, and Write back stages, showing the instruction memory, PC increment, register file, ALU, CC, and data memory blocks and the signals icode, ifun, rA, rB, valC, valP, srcA, srcB, dstA, dstB, valA, valB, aluA, aluB, Cnd, valE, valM, Addr, Data, and newPC.]

Pipeline Stages

- Fetch
  - Select current PC
  - Read instruction
  - Compute incremented PC
- Decode
  - Read program registers
- Execute
  - Operate ALU
- Memory
  - Read or write data memory
- Write Back
  - Update register file

PIPE- Hardware

- Pipeline registers hold intermediate values from instruction execution

Forward (Upward) Paths:

- Values passed from one stage to the next
- Cannot jump past stages
  - e.g., valC passes through decode
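As a worked answer to the exercise break above, the scrambled operations can be put back into SEQ stage order and run on a toy machine state. This is only a sketch: the function name `execute_ret` is hypothetical, and memory is modeled as a Python dict with one whole value per address rather than as individual bytes.

```python
def execute_ret(pc, regs, mem):
    """Run the stage computations for ret once and return the new PC."""
    # Fetch: icode:ifun <- M1[PC], valP <- PC + 1
    icode, ifun = mem[pc] >> 4, mem[pc] & 0xF
    assert icode == 0x9, "this sketch only handles ret (icode 9)"
    valP = pc + 1  # computed in fetch but not used by ret

    # Decode: valA <- R[%rsp], valB <- R[%rsp]
    valA = regs["%rsp"]
    valB = regs["%rsp"]

    # Execute: valE <- valB + 8
    valE = valB + 8

    # Memory: valM <- M8[valA] (the return address on top of the stack)
    valM = mem[valA]

    # Write-back: R[%rsp] <- valE
    regs["%rsp"] = valE

    # PC update: PC <- valM
    return valM


# Toy state: a ret instruction (byte 0x90) at address 0x100,
# with return address 0x13 stored at the top of the stack.
regs = {"%rsp": 0x200}
mem = {0x100: 0x90, 0x200: 0x13}
new_pc = execute_ret(0x100, regs, mem)
print(hex(new_pc), hex(regs["%rsp"]))
```

Reading the stack pointer into both valA and valB lets the memory stage use the old stack pointer as the address while the ALU computes the incremented one.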

Signal Naming Conventions

- S_Field
  - Value of Field held in stage S pipeline register
- s_Field
  - Value of Field computed in stage S

Feedback Paths

- Predicted PC
  - Guess value of next PC
- Branch information
  - Jump taken/not-taken
  - Fall-through or target address
- Return point
  - Read from memory
- Register updates
  - To register file write ports

Predicting the PC

- Start fetch of new instruction after the current one has completed the fetch stage
  - Not enough time to reliably determine the next instruction
- Guess which instruction will follow
  - Recover if the prediction was incorrect

Our Prediction Strategy

- Instructions that Don’t Transfer Control
  - Predict next PC to be valP
  - Always reliable
- Call and Unconditional Jumps
  - Predict next PC to be valC (destination)
  - Always reliable
- Conditional Jumps
  - Predict next PC to be valC (destination)
  - Only correct if branch is taken
  - Typically right 60% of the time
- Return Instruction
  - Don’t try to predict

Recovering from PC Misprediction

- Mispredicted Jump
  - Will see branch condition flag once the instruction reaches the memory stage
  - Can get fall-through PC from valA (value M_valA)
- Return Instruction
  - Will get return PC when `ret` reaches the write-back stage (W_valM)

Pipeline Demonstration

File: demo-basic.ys

| Instruction | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| `irmovq $1,%rax` #I1 | F | D | E | M | W | | | | |
| `irmovq $2,%rcx` #I2 | | F | D | E | M | W | | | |
| `irmovq $3,%rdx` #I3 | | | F | D | E | M | W | | |
| `irmovq $4,%rbx` #I4 | | | | F | D | E | M | W | |
| `halt` #I5 | | | | | F | D | E | M | W |

Cycle 5: I1 is in W, I2 in M, I3 in E, I4 in D, and I5 in F.
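The prediction strategy and the recovery rules above can be read as two small selection functions. The following is a minimal sketch under stated assumptions: the function names `predict_pc` and `select_pc` are hypothetical, instruction codes are plain strings rather than the processor's icode constants, and `None` stands for "no prediction" in the `ret` case.

```python
def predict_pc(icode, valC, valP):
    """Guess the next PC during fetch, following the strategy on the slides."""
    if icode in ("jXX", "call"):
        return valC  # jumps (predicted taken) and calls go to the destination
    if icode == "ret":
        return None  # don't try to predict a return
    return valP  # everything else falls through to the next instruction


def select_pc(pred_pc, M_icode=None, M_Cnd=True, M_valA=None,
              W_icode=None, W_valM=None):
    """Choose the PC for the current fetch, correcting earlier mispredictions."""
    if M_icode == "jXX" and not M_Cnd:
        return M_valA  # mispredicted jump: use the fall-through PC
    if W_icode == "ret":
        return W_valM  # return address becomes available in write-back
    return pred_pc  # otherwise trust the prediction


# A conditional jump to 0x40 whose fall-through address is 0x19.
guess = predict_pc("jXX", valC=0x40, valP=0x19)
print(hex(guess))
# Later the jump reaches the memory stage and turns out not to be taken.
print(hex(select_pc(guess, M_icode="jXX", M_Cnd=False, M_valA=0x19)))
```

The upper-case `M_` and `W_` prefixes follow the signal naming convention above: they are values held in the memory-stage and write-back-stage pipeline registers.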
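The pipeline demonstration above follows a regular pattern when there are no hazards: each instruction enters fetch one cycle after its predecessor and advances one stage per cycle. A short sketch of that pattern (the helper `stage_in_cycle` is hypothetical and ignores stalls and bubbles):

```python
STAGES = "FDEMW"  # Fetch, Decode, Execute, Memory, Write back


def stage_in_cycle(instr_index, cycle):
    """Stage letter occupied by an instruction in a given cycle, or None.

    instr_index is 0-based (I1 is index 0); cycle is 1-based.
    Assumes one instruction is fetched per cycle with no stalls.
    """
    position = cycle - 1 - instr_index
    if 0 <= position < len(STAGES):
        return STAGES[position]
    return None


labels = ["I1", "I2", "I3", "I4", "I5"]
total_cycles = len(labels) + len(STAGES) - 1

# Print one row per instruction, one column per cycle.
for index, label in enumerate(labels):
    row = [stage_in_cycle(index, c) or "." for c in range(1, total_cycles + 1)]
    print(label, " ".join(row))

# Snapshot of which instruction occupies each stage in cycle 5.
snapshot = {stage_in_cycle(i, 5): label for i, label in enumerate(labels)}
print(snapshot)
```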
