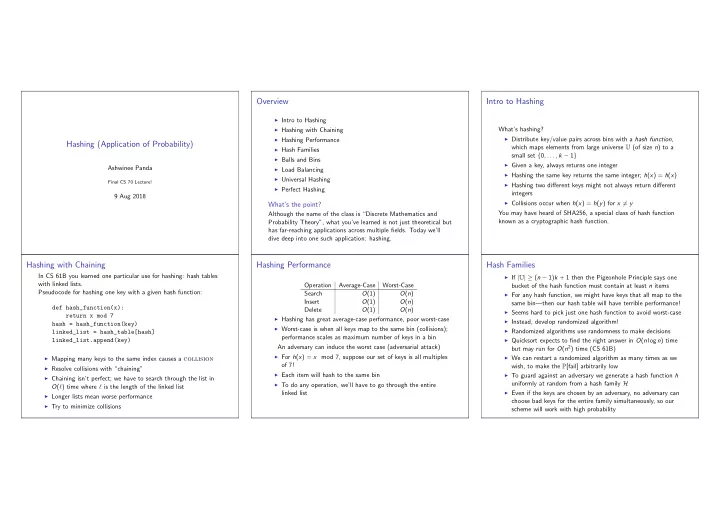

Hashing (Application of Probability)

Ashwinee Panda

Final CS 70 Lecture!

9 Aug 2018

What’s the point?

Although the name of the class is “Discrete Mathematics and Probability Theory”, what you’ve learned is not just theoretical but has far-reaching applications across multiple fields. Today we’ll dive deep into one such application: hashing.

Overview

◮ Intro to Hashing
◮ Hashing with Chaining
◮ Hashing Performance
◮ Hash Families
◮ Balls and Bins
◮ Load Balancing
◮ Universal Hashing
◮ Perfect Hashing

Intro to Hashing

What’s hashing?

◮ Distribute key/value pairs across bins with a hash function, which maps elements from a large universe $U$ (of size $n$) to a small set $\{0, \ldots, k-1\}$
◮ Given a key, always returns one integer
◮ Hashing the same key returns the same integer; $h(x) = h(x)$
◮ Hashing two different keys might not always return different integers
◮ Collisions occur when $h(x) = h(y)$ for $x \neq y$

You may have heard of SHA256, a special class of hash function known as a cryptographic hash function.

Hashing with Chaining

In CS 61B you learned one particular use for hashing: hash tables with linked lists.

Pseudocode for hashing one key with a given hash function:

    def hash_function(x):
        return x % 7                    # bin index in {0, ..., 6}

    index = hash_function(key)          # which bin the key belongs to
    linked_list = hash_table[index]     # the chain stored in that bin
    linked_list.append(key)             # chaining: add the key to the chain

◮ Mapping many keys to the same index causes a collision
◮ Resolve collisions with “chaining”
◮ Chaining isn’t perfect; we have to search through the list in $O(\ell)$ time, where $\ell$ is the length of the linked list
◮ Longer lists mean worse performance
◮ Try to minimize collisions

Hashing Performance

| Operation | Average-Case | Worst-Case |
|-----------|--------------|------------|
| Search    | $O(1)$       | $O(n)$     |
| Insert    | $O(1)$       | $O(n)$     |
| Delete    | $O(1)$       | $O(n)$     |

◮ Hashing has great average-case performance, poor worst-case
◮ Worst-case is when all keys map to the same bin (collisions); performance scales as the maximum number of keys in a bin

An adversary can induce the worst case (adversarial attack)

◮ For $h(x) = x \bmod 7$, suppose our set of keys is all multiples of 7!
◮ Each item will hash to the same bin
◮ To do any operation, we’ll have to go through the entire linked list

Hash Families

◮ If $|U| \geq (n-1)k + 1$ then the Pigeonhole Principle says one bucket of the hash function must contain at least $n$ items
◮ For any hash function, we might have keys that all map to the same bin; then our hash table will have terrible performance!
◮ Seems hard to pick just one hash function to avoid worst-case
◮ Instead, develop a randomized algorithm!
◮ Randomized algorithms use randomness to make decisions
◮ Quicksort expects to find the right answer in $O(n \log n)$ time but may run for $O(n^2)$ time (CS 61B)
◮ We can restart a randomized algorithm as many times as we wish, to make $P[\text{fail}]$ arbitrarily low
◮ To guard against an adversary we generate a hash function $h$ uniformly at random from a hash family $\mathcal{H}$
◮ Even if the keys are chosen by an adversary, no adversary can choose bad keys for the entire family simultaneously, so our scheme will work with high probability
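The chaining scheme and the multiples-of-7 attack described in these slides can be tried out with a minimal sketch. The class `ChainedHashTable`, the helper `build`, and the use of Python lists in place of linked lists are illustrative assumptions, not part of the lecture.

```python
def hash_function(x, k=7):
    """Toy hash from the slides: h(x) = x mod k, with k = 7 bins by default."""
    return x % k


class ChainedHashTable:
    """Hypothetical hash table with chaining; each bin holds a list as its chain."""

    def __init__(self, k=7):
        self.k = k
        self.bins = [[] for _ in range(k)]

    def insert(self, key):
        chain = self.bins[hash_function(key, self.k)]
        if key not in chain:      # scanning the chain costs O(len(chain))
            chain.append(key)

    def search(self, key):
        # Only the chain in the key's own bin has to be scanned.
        return key in self.bins[hash_function(key, self.k)]

    def max_chain_length(self):
        return max(len(chain) for chain in self.bins)


def build(keys, k=7):
    """Insert every key into a fresh table and return the table."""
    table = ChainedHashTable(k)
    for key in keys:
        table.insert(key)
    return table


spread_out = build(range(70))            # keys 0..69 land 10 per bin
adversarial = build(range(0, 490, 7))    # 70 multiples of 7 all land in bin 0

print(spread_out.max_chain_length())     # 10
print(adversarial.max_chain_length())    # 70
```

With the same number of keys, the adversarial table degenerates into one chain of length 70, so every search in it is a linear scan.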

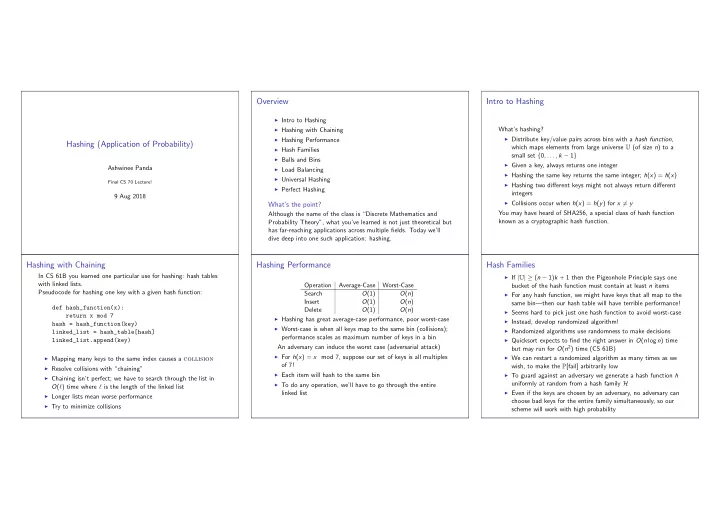

Balls and Bins

◮ If we want to be really random, we’d see hashing as just balls and bins
◮ Specifically, suppose that the random variables $h(x)$ as $x$ ranges over $U$ are independent
◮ Balls will be the keys to be stored
◮ Bins will be the $k$ locations in the hash table
◮ The hash function maps each key to a uniformly random location
◮ Each key (ball) chooses a bin uniformly and independently
◮ How likely can collisions be? The probability that two balls both fall into a given bin is $\frac{1}{k^2}$, so the probability that they collide in some bin is $k \cdot \frac{1}{k^2} = \frac{1}{k}$
◮ Birthday Paradox: 23 balls and 365 bins $\implies$ 50% chance of collision!
◮ $n \gtrsim \sqrt{k} \implies$ roughly $\frac{1}{2}$ chance of collision (the threshold is about $1.18\sqrt{k}$)

Balls and Bins

$X_i$ is the indicator random variable which turns on if the $i$-th ball falls into bin 1, and $X$ is the number of balls that fall into bin 1

◮ $E[X_i] = P[X_i = 1] = \frac{1}{k}$
◮ $E[X] = \frac{n}{k}$

$E_i$ is the indicator variable that bin $i$ is empty

◮ Using the complement of $X_i$ we find $P[E_i] = \left(1 - \frac{1}{k}\right)^n$

$E$ is the number of empty locations

◮ $E[E] = k\left(1 - \frac{1}{k}\right)^n$
◮ $k = n \implies E[E] = n\left(1 - \frac{1}{n}\right)^n \approx \frac{n}{e}$ and $E[X] = \frac{n}{n} = 1$
◮ How can we expect 1 item per location (very intuitive with $n$ balls and $n$ bins) and also expect more than a third of locations to be empty?

$C$ is the number of collisions: balls that land in an already occupied bin, which is the $n$ balls minus the $k - E$ nonempty bins

◮ $E[C] = n - k + E[E] = n - k + k\left(1 - \frac{1}{k}\right)^n$

Load Balancing

◮ Distributed computing: evenly distribute a workload
◮ $m$ identical jobs, $n$ identical processors (may not be identical but that won’t actually matter)
◮ Ideally we should distribute these perfectly evenly so each processor gets $\frac{m}{n}$ jobs
◮ Centralized systems are capable of this, but centralized systems require a server to exert a degree of control that is often impractical
◮ This is actually similar to balls and bins!
◮ Let’s continue using our random algorithm of hashing
◮ Let’s try to derive an upper bound for the maximum length, assuming $m = n$

Load Balancing

$H_{i,t}$ is the event that $t$ keys hash to bin $i$

◮ $P[H_{i,t}] = \binom{n}{t}\left(\frac{1}{n}\right)^t\left(1 - \frac{1}{n}\right)^{n-t}$
◮ Approximation: $\binom{n}{t} \leq \frac{n^n}{t^t (n-t)^{n-t}}$ by Stirling’s formula
◮ Approximation: $\forall x > 0,\ \left(1 + \frac{1}{x}\right)^x \leq e$ by the limit
◮ Because $\left(1 - \frac{1}{n}\right)^{n-t} \leq 1$ and $\left(\frac{1}{n}\right)^t = \frac{1}{n^t}$ we can simplify
◮ $\binom{n}{t}\left(\frac{1}{n}\right)^t\left(1 - \frac{1}{n}\right)^{n-t} \leq \frac{n^n}{t^t (n-t)^{n-t}\, n^t} = \frac{n^{n-t}}{t^t (n-t)^{n-t}} = \frac{1}{t^t}\left(1 + \frac{t}{n-t}\right)^{n-t} = \frac{1}{t^t}\left(\left(1 + \frac{t}{n-t}\right)^{\frac{n-t}{t}}\right)^t \leq \frac{e^t}{t^t}$

$M_t$: event that max list length hashing $n$ items to $n$ bins is $t$

$M_{i,t}$: event that max list length is $t$, and this list is in bin $i$

◮ $P[M_t] = P\left[\bigcup_{i=1}^{n} M_{i,t}\right] \leq \sum_{i=1}^{n} P[M_{i,t}] \leq \sum_{i=1}^{n} P[H_{i,t}]$
◮ Identically distributed loads means $\sum_{i=1}^{n} P[H_{i,t}] = n\,P[H_{i,t}]$

The probability that the max list length is $t$ is at most $n\left(\frac{e}{t}\right)^t$

Load Balancing

Expected max load is $\sum_{t=1}^{n} t\,P[M_t]$ where $P[M_t] \leq n\left(\frac{e}{t}\right)^t$

◮ Split sum into two parts and bound each part separately.
◮ $\beta = \left\lceil \frac{5 \ln n}{\ln \ln n} \right\rceil$. How did we get this? Take a look at Note 15.
◮ $\sum_{t=1}^{n} t\,P[M_t] \leq \sum_{t=1}^{\beta} t\,P[M_t] + \sum_{t=\beta}^{n} t\,P[M_t]$

Sum over smaller values:

◮ Replace $t$ with the upper bound of $\beta$
◮ $\sum_{t=1}^{\beta} t\,P[M_t] \leq \sum_{t=1}^{\beta} \beta\,P[M_t] = \beta \sum_{t=1}^{\beta} P[M_t] \leq \beta$, since the events $M_t$ are disjoint and their probabilities sum to at most 1

Sum over larger values:

◮ Use our expression for $P[H_{i,t}]$ and see that $P[M_t] \leq \frac{1}{n^2}$ for $t \geq \beta$.
◮ Since this bound decreases as $t$ grows, and $t \leq n$:
◮ $\sum_{t=\beta}^{n} t\,P[M_t] \leq \sum_{t=\beta}^{n} n \cdot \frac{1}{n^2} \leq \sum_{t=\beta}^{n} \frac{1}{n} \leq 1$
◮ Expected max load is $O(\beta) = O\left(\frac{\ln n}{\ln \ln n}\right)$

Universal Hashing

What we’ve been working with so far is “$k$-wise independent” hashing or fully independent hashing.

◮ For any number of balls $k$, the probability that they all fall into the same given bin of $n$ bins is $\frac{1}{n^k}$
◮ Very strong requirement!
◮ Fully independent hash functions require a large number of bits to store

Do we compromise, and make our worst case worse so we can have more space?

◮ Often you do have to sacrifice time for space, vice-versa
◮ But not this time! Let’s inspect our worst-case
◮ Collisions only care about two balls colliding

We don’t need “$k$-wise independence”; we only need “2-wise independence”
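The balls-and-bins quantities from these slides can be checked numerically. The sketch below evaluates the birthday collision probability exactly, compares $E[E]/n$ with $1/e$, and estimates the average max load by simulation. The function names, the choice $n = 1000$, the 20 trials, and the fixed seed are all illustrative assumptions.

```python
import math
import random


def birthday_collision_probability(n, k):
    """Exact P[at least two of n balls share a bin] for k equally likely bins."""
    p_no_collision = 1.0
    for i in range(n):
        p_no_collision *= (k - i) / k   # ball i must avoid the i occupied bins
    return 1.0 - p_no_collision


def expected_empty_bins(n, k):
    """E[E] = k (1 - 1/k)^n from the slides."""
    return k * (1 - 1 / k) ** n


def expected_collisions(n, k):
    """E[C] = n - k + E[E] from the slides."""
    return n - k + expected_empty_bins(n, k)


def simulate_max_load(n, trials, rng):
    """Average max load over `trials` runs of throwing n balls into n bins."""
    total = 0
    for _ in range(trials):
        loads = [0] * n
        for _ in range(n):
            loads[rng.randrange(n)] += 1
        total += max(loads)
    return total / trials


print(birthday_collision_probability(23, 365))     # just above 0.5

n = 1000
print(expected_empty_bins(n, n) / n, 1 / math.e)   # the two values are close
print(expected_collisions(n, n))                   # equals E[E] when k = n

beta = math.ceil(5 * math.log(n) / math.log(math.log(n)))
average_max = simulate_max_load(n, 20, random.Random(0))
print(average_max, beta)                           # observed average vs. beta
```

The simulated average sits well below $\beta$, which is consistent with $\beta$ being an upper bound that comes with a generous constant.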
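The slides do not name a concrete hash family, so the following is only a sketch under an assumption: it uses the standard family $h_{a,b}(x) = ((ax + b) \bmod p) \bmod k$ with $p$ prime, which guarantees that any two distinct keys collide with probability at most $\frac{1}{k}$. The constants `P` and `K` and all function names are hypothetical choices for illustration. The check enumerates the whole family on the multiples-of-7 keys that broke $h(x) = x \bmod 7$.

```python
import random
from fractions import Fraction
from itertools import combinations

P = 101   # assumed prime, larger than every key used below
K = 7     # number of bins


def make_hash(a, b, p=P, k=K):
    """Return h_{a,b}(x) = ((a*x + b) mod p) mod k."""
    def h(x):
        return ((a * x + b) % p) % k
    return h


def random_hash(rng, p=P, k=K):
    """Draw h uniformly from the family: a in {1..p-1}, b in {0..p-1}."""
    return make_hash(rng.randrange(1, p), rng.randrange(0, p), p, k)


def collision_probability(x, y, p=P, k=K):
    """Exact P[h(x) == h(y)] over a uniformly random h from the family."""
    hits = 0
    for a in range(1, p):
        for b in range(p):
            h = make_hash(a, b, p, k)
            if h(x) == h(y):
                hits += 1
    return Fraction(hits, (p - 1) * p)


# Adversarial keys for h(x) = x mod 7: all multiples of 7 below P.
keys = list(range(0, P, 7))

worst = max(collision_probability(x, y) for x, y in combinations(keys, 2))
print(worst, Fraction(1, K))          # worst pairwise collision rate vs. 1/k

h = random_hash(random.Random(0))
print([h(x) for x in keys])           # bins chosen by one random member
```

Because the bound holds for every pair of keys, an adversary who must pick keys before $h$ is drawn cannot force all of them into one bin except with small probability.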
