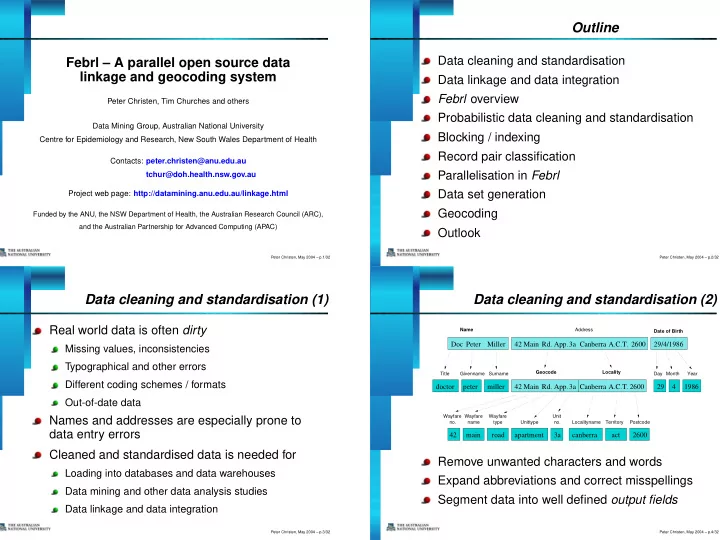

Febrl – A parallel open source data linkage and geocoding system

Peter Christen, Tim Churches and others

Data Mining Group, Australian National University

Centre for Epidemiology and Research, New South Wales Department of Health

Contacts: peter.christen@anu.edu.au, tchur@doh.health.nsw.gov.au

Project web page: http://datamining.anu.edu.au/linkage.html

Funded by the ANU, the NSW Department of Health, the Australian Research Council (ARC), and the Australian Partnership for Advanced Computing (APAC)

Peter Christen, May 2004 – p.1/32

Outline

- Data cleaning and standardisation
- Data linkage and data integration
- Febrl overview
- Probabilistic data cleaning and standardisation
- Blocking / indexing
- Record pair classification
- Parallelisation in Febrl
- Data set generation
- Geocoding
- Outlook

Data cleaning and standardisation (1)

- Real world data is often dirty:
  - Missing values, inconsistencies
  - Typographical and other errors
  - Different coding schemes / formats
  - Out-of-date data
- Names and addresses are especially prone to data entry errors
- Cleaned and standardised data is needed for:
  - Loading into databases and data warehouses
  - Data mining and other data analysis studies
  - Data linkage and data integration

Data cleaning and standardisation (2)

Example input record:

| Name | Address | Date of Birth |
|---|---|---|
| Doc Peter Miller | 42 Main Rd. App. 3a Canberra A.C.T. 2600 | 29/4/1986 |

Standardised name and date fields:

| Title | Givenname | Surname | Day | Month | Year |
|---|---|---|---|---|---|
| doctor | peter | miller | 29 | 4 | 1986 |

Standardised address fields (the original figure also carries the labels "Geocode" and "Locality"):

| Wayfare no. | Wayfare name | Wayfare type | Unit type | Unit no. | Locality name | Territory | Postcode |
|---|---|---|---|---|---|---|---|
| 42 | main | road | apartment | 3a | canberra | act | 2600 |

- Remove unwanted characters and words
- Expand abbreviations and correct misspellings
- Segment data into well-defined output fields
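The date part of the example above (29/4/1986 split into Day, Month and Year) can be sketched in a few lines of Python. This is a minimal sketch, not Febrl's own date handling: the list of accepted formats (`DATE_FORMATS`) and the function name `standardise_date` are hypothetical.

```python
from datetime import datetime

# Hypothetical list of accepted input formats; real data sets may use others.
# The order matters: an ambiguous date such as 4/5/1986 is read day-first
# because "%d/%m/%Y" is tried before any month-first format.
DATE_FORMATS = ["%d/%m/%Y", "%d.%m.%Y", "%Y-%m-%d"]


def standardise_date(raw):
    """Segment a raw date string into Day, Month and Year output fields.

    Returns None if the string matches none of the known formats.
    """
    for fmt in DATE_FORMATS:
        try:
            parsed = datetime.strptime(raw.strip(), fmt)
        except ValueError:
            continue  # this format does not fit, try the next one
        return {"Day": parsed.day, "Month": parsed.month, "Year": parsed.year}
    return None


print(standardise_date("29/4/1986"))  # {'Day': 29, 'Month': 4, 'Year': 1986}
```

Trying several formats in a fixed order is one simple way to cope with "different coding schemes / formats"; strings that fit none of them are returned as `None` so they can be flagged rather than silently guessed.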

Data linkage and data integration

- The task of linking together records representing the same entity from one or more data sources
- If no unique identifier is available, probabilistic linkage techniques have to be applied
- Applications of data linkage:
  - Remove duplicates in a data set (internal linkage)
  - Merge new records into a larger master data set
  - Create customer or patient oriented statistics
  - Compile data for longitudinal studies
- Data cleaning and standardisation are important first steps for successful data linkage

Data linkage techniques

- Deterministic or exact linkage
  - A unique identifier is needed, which is of high quality (precise, robust, stable over time, highly available)
  - For example, Medicare number (?)
- Probabilistic linkage (Fellegi & Sunter, 1969)
  - Apply linkage using available (personal) information
  - Examples: names, addresses, dates of birth
- Other techniques (rule-based, fuzzy approach, information retrieval)

Febrl – Freely extensible biomedical record linkage

- An experimental platform for new and improved linkage algorithms
- Modules for data cleaning and standardisation, data linkage, deduplication and geocoding
- Open source: https://sourceforge.net/projects/febrl/
- Implemented in Python: http://www.python.org
  - Easy and rapid prototype software development
  - Object-oriented and cross-platform (Unix, Win, Mac)
  - Can handle large data sets stably and efficiently
  - Many external modules, easy to extend

Probabilistic data cleaning and standardisation

Three-step approach:

1. Cleaning
   - Based on look-up tables and correction lists
   - Remove unwanted characters and words
   - Correct various misspellings and abbreviations
2. Tagging
   - Split input into a list of words, numbers and separators
   - Assign one or more tags to each element of this list (using look-up tables and some hard-coded rules)
3. Segmenting
   - Use either rules or a hidden Markov model (HMM) to assign list elements to output fields
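The probabilistic linkage approach of Fellegi & Sunter (1969) listed under the linkage techniques above scores a record pair by summing per-field weights: a field that agrees adds log2(m/u), a field that disagrees adds log2((1-m)/(1-u)), where m is the probability of agreement among true matches and u among non-matches. Two thresholds then split pairs into matches, possible matches and non-matches. The sketch below assumes made-up m/u values and thresholds (`FIELD_PROBS`, `lower`, `upper` are hypothetical); in practice these have to be estimated from the data, and this is not Febrl's classifier.

```python
import math

# Hypothetical per-field probabilities (illustrative values only):
#   m = P(field values agree | the two records are a true match)
#   u = P(field values agree | the two records are not a match)
FIELD_PROBS = {
    "givenname": (0.95, 0.01),
    "surname": (0.95, 0.005),
    "postcode": (0.90, 0.02),
}


def match_weight(rec_a, rec_b, field_probs=FIELD_PROBS):
    """Sum of Fellegi-Sunter log2 agreement/disagreement weights."""
    total = 0.0
    for field, (m, u) in field_probs.items():
        a, b = rec_a.get(field), rec_b.get(field)
        if not a or not b:
            continue  # missing value: contributes no evidence (weight 0)
        if a == b:
            total += math.log2(m / u)              # agreement weight
        else:
            total += math.log2((1 - m) / (1 - u))  # disagreement weight
    return total


def classify(weight, lower=0.0, upper=10.0):
    """Split pairs into three classes using two hypothetical thresholds."""
    if weight >= upper:
        return "match"
    if weight <= lower:
        return "non-match"
    return "possible match"


a = {"givenname": "peter", "surname": "miller", "postcode": "2600"}
b = {"givenname": "peter", "surname": "miller", "postcode": "2602"}
w = match_weight(a, b)
print(round(w, 2), classify(w))
```

Rare values make agreement more informative (a small u gives a large agreement weight), which is why the surname field above outweighs the postcode disagreement. Exact string equality is used here for simplicity; approximate string comparison is a common refinement.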

Cleaning

- Assume the input component is one string (either name or address; dates are processed differently)
- Convert all letters into lower case
- Use correction lists which contain pairs of original:replacement strings
- An empty replacement string results in removing the original string
- Correction lists are stored in text files and can be modified by the user
- Different correction lists for names and addresses

Tagging

- Cleaned strings are split at whitespace boundaries into lists of words, numbers, characters, etc.
- Using look-up tables and some hard-coded rules, each element is tagged with one or more tags
- Example:
  - Uncleaned input string: "Doc. peter Paul MILLER"
  - Cleaned string: "dr peter paul miller"
  - Word and tag lists:
    `['dr', 'peter', 'paul', 'miller']`
    `['TI', 'GM/SN', 'GM', 'SN']`

Segmenting

- Using the tag list, assign elements in the word list to the appropriate output fields
- Rules-based approach (e.g. AutoStan)
  - Example: "if an element has tag 'TI' then assign the corresponding word to the 'Title' output field"
  - Hard to develop and maintain rules
  - Different sets of rules needed for different data sets
- Hidden Markov model (HMM) approach
  - A machine learning technique (supervised learning)
  - Training data is needed to build HMMs

Hidden Markov model (HMM)

[Figure: example name HMM with states Start, Title, Givenname, Middlename, Surname and End; edges are labelled with transition probabilities]

- An HMM is a probabilistic finite state machine
  - Made of a set of states and transition probabilities between these states
  - In each state an observation symbol is emitted with a certain probability distribution
  - In our approach, the observation symbols are tags and the states correspond to the output fields
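The cleaning and tagging steps above can be sketched as follows, reproducing the "Doc. peter Paul MILLER" example. This is a minimal sketch under stated assumptions: the correction list `NAME_CORRECTIONS` and look-up table `NAME_TAGS` are tiny hypothetical stand-ins for Febrl's user-editable files, and tagging unknown words as `UN` is an assumption (`UN` does appear as an observation symbol in the HMM slides).

```python
import re

# Hypothetical correction list of (original, replacement) pairs; the slides
# say such lists live in user-editable text files. An empty replacement
# removes the original string.
NAME_CORRECTIONS = [
    ("doc.", "dr"),
    ("doctor", "dr"),
    ("known as", ""),
]

# Hypothetical look-up table: word -> list of possible tags.
NAME_TAGS = {
    "dr": ["TI"],
    "peter": ["GM", "SN"],
    "paul": ["GM"],
    "miller": ["SN"],
}


def clean(raw, corrections=NAME_CORRECTIONS):
    """Lower-case the string and apply the correction list."""
    s = raw.lower()
    for orig, repl in corrections:
        # Replace only whole, whitespace-delimited occurrences of `orig`
        # (multi-word originals such as "known as" are allowed).
        pattern = r"(?<!\S)" + re.escape(orig) + r"(?!\S)"
        s = re.sub(pattern, lambda _match, r=repl: r, s)
    return " ".join(s.split())  # collapse spaces left by removed strings


def tag(cleaned, table=NAME_TAGS):
    """Split at whitespace and tag each word; unknown words get 'UN'."""
    words = cleaned.split()
    tags = ["/".join(table.get(w, ["UN"])) for w in words]
    return words, tags


words, tags = tag(clean("Doc. peter Paul MILLER"))
print(words)  # ['dr', 'peter', 'paul', 'miller']
print(tags)   # ['TI', 'GM/SN', 'GM', 'SN']
```

A word with several possible tags keeps them all (joined with `/`, as in `GM/SN`); resolving that ambiguity is left to the segmentation step.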

HMM data segmentation

[Figure: the example name HMM (states Start, Title, Givenname, Middlename, Surname, End) with its transition probabilities]

- For an observation sequence we are interested in the most likely path through a given HMM (in our case an observation sequence is a tag list)
- The Viterbi algorithm is used for this task (a dynamic programming approach)
- Smoothing is applied to account for unseen data (assign small probabilities for unseen observation symbols)

HMM probability matrices

[Figure: the example name HMM with its transition probabilities]

Observation probabilities (rows: observation symbols; columns: states; each state's column sums to 100%):

| Observation | Start | Title | Givenname | Middlename | Surname | End |
|---|---|---|---|---|---|---|
| TI | – | 96% | 1% | 1% | 1% | – |
| GM | – | 1% | 35% | 33% | 15% | – |
| GF | – | 1% | 35% | 27% | 14% | – |
| SN | – | 1% | 9% | 14% | 45% | – |
| UN | – | 1% | 20% | 25% | 25% | – |

HMM segmentation example

[Figure: the example name HMM with its transition probabilities]

- Input word and tag list:
  `['dr', 'peter', 'paul', 'miller']`
  `['TI', 'GM/SN', 'GM', 'SN']`
- Two example paths through the HMM:
  1. Start -> Title (TI) -> Givenname (GM) -> Middlename (GM) -> Surname (SN) -> End
  2. Start -> Title (TI) -> Surname (SN) -> Givenname (GM) -> Surname (SN) -> End

HMM training (1)

- Both transition and observation probabilities need to be trained using training data (maximum likelihood estimates (MLE) are derived by accumulating frequency counts for transitions and observations)
- Training data consists of records, each being a sequence of tag:hmm_state pairs
- Example (2 training records):
  - `# '42 / 131 miller place manly 2095 new_south_wales'`
    `NU:unnu,SL:sla,NU:wfnu,UN:wfna1,WT:wfty,LN:loc1,PC:pc,TR:ter1`
  - `# '2 richard street lewisham 2049 new_south_wales'`
    `NU:wfnu,UN:wfna1,WT:wfty,LN:loc1,PC:pc,TR:ter1`
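The training and segmentation steps above can be put together in one small sketch: maximum likelihood estimates are obtained by counting transitions and observations in tag:hmm_state records, and the Viterbi algorithm then finds the most likely state path for a tag list. Several things here are assumptions, not Febrl's implementation: the five name training records in `TRAINING` are hypothetical, the fixed floor `UNSEEN` is a crude stand-in for proper smoothing, and a multi-tag such as `GM/SN` is handled by trying every tag combination and keeping the most probable path (this matches the two example paths for "dr peter paul miller").

```python
from collections import Counter, defaultdict
from itertools import product

# Hypothetical name-HMM training records in the tag:hmm_state format;
# the state names follow the output fields (not real Febrl data).
TRAINING = [
    "TI:Title,GM:Givenname,SN:Surname",
    "GM:Givenname,GM:Middlename,SN:Surname",
    "TI:Title,GM:Givenname,GM:Middlename,SN:Surname",
    "GM:Givenname,SN:Surname",
    "SN:Surname,GM:Givenname",
]
UNSEEN = 1e-4  # assumed floor probability for unseen observation symbols


def normalise(counts):
    """Turn nested frequency counts into maximum likelihood estimates."""
    probs = {}
    for key, row in counts.items():
        total = sum(row.values())
        probs[key] = {k: c / total for k, c in row.items()}
    return probs


def train(records):
    """Accumulate transition and observation counts, then normalise them."""
    trans, emit = defaultdict(Counter), defaultdict(Counter)
    for line in records:
        prev = "Start"
        for pair in line.split(","):
            obs, state = pair.split(":")
            trans[prev][state] += 1
            emit[state][obs] += 1
            prev = state
        trans[prev]["End"] += 1
    return normalise(trans), normalise(emit)


def viterbi(obs_seq, trans_p, emit_p):
    """Return (probability, state path) of the most likely path for obs_seq."""
    states = list(emit_p)  # emitting states; Start and End emit nothing

    def transition(a, b):
        return trans_p.get(a, {}).get(b, 0.0)

    def emission(state, obs):
        return emit_p[state].get(obs, UNSEEN)

    # best[s] = (probability, path) of the best partial path ending in s
    best = {s: (transition("Start", s) * emission(s, obs_seq[0]), [s])
            for s in states}
    for obs in obs_seq[1:]:
        best = {
            s: max(((best[r][0] * transition(r, s) * emission(s, obs),
                     best[r][1] + [s]) for r in states),
                   key=lambda cand: cand[0])
            for s in states
        }
    return max(((p * transition(s, "End"), path)
                for s, (p, path) in best.items()), key=lambda cand: cand[0])


def segment(words, tag_strings, trans_p, emit_p):
    """Expand multi-tags such as 'GM/SN' and keep the most likely path."""
    best_p, best_path = 0.0, None
    for combo in product(*(t.split("/") for t in tag_strings)):
        p, path = viterbi(list(combo), trans_p, emit_p)
        if p > best_p:
            best_p, best_path = p, path
    return list(zip(words, best_path)) if best_path else []


trans_p, emit_p = train(TRAINING)
print(segment(["dr", "peter", "paul", "miller"],
              ["TI", "GM/SN", "GM", "SN"], trans_p, emit_p))
# [('dr', 'Title'), ('peter', 'Givenname'), ('paul', 'Middlename'), ('miller', 'Surname')]
```

With these training records the transition Title -> Surname never occurs, so reading "peter" as a surname (the second example path) can only survive through an unseen emission and loses to the first path. The sketch multiplies plain probabilities, which is fine for short tag lists; for long sequences, summing log-probabilities avoids numerical underflow.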
