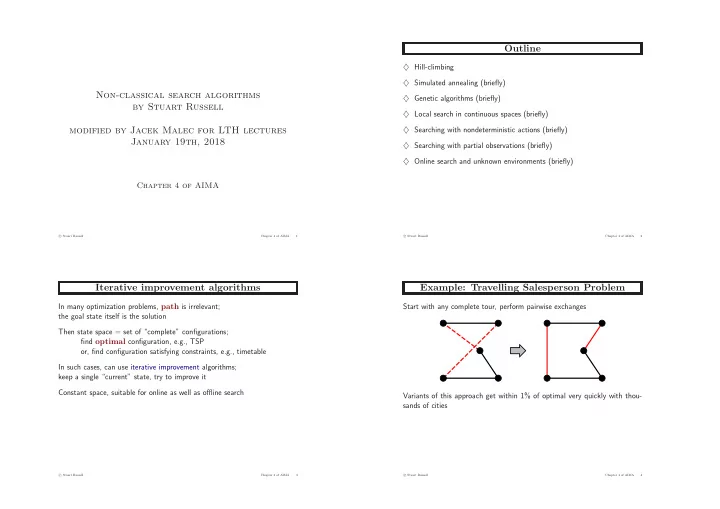

Non-classical search algorithms

by Stuart Russell, modified by Jacek Malec for LTH lectures, January 19th, 2018

Chapter 4 of AIMA, © Stuart Russell

Outline

♦ Hill-climbing
♦ Simulated annealing (briefly)
♦ Genetic algorithms (briefly)
♦ Local search in continuous spaces (briefly)
♦ Searching with nondeterministic actions (briefly)
♦ Searching with partial observations (briefly)
♦ Online search and unknown environments (briefly)

Iterative improvement algorithms

In many optimization problems, the path is irrelevant; the goal state itself is the solution.

Then state space = set of "complete" configurations; find optimal configuration, e.g., TSP, or find configuration satisfying constraints, e.g., timetable.

In such cases, can use iterative improvement algorithms: keep a single "current" state, try to improve it.

Constant space, suitable for online as well as offline search.

Example: Travelling Salesperson Problem

Start with any complete tour, perform pairwise exchanges.

Variants of this approach get within 1% of optimal very quickly with thousands of cities.
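A minimal Python sketch of "start with any complete tour, perform pairwise exchanges", assuming the exchange is a 2-opt move (reverse the stretch of the tour between two cut points, which swaps two of its edges) and that cities are points in the plane. The names `tour_length` and `two_opt` are illustrative, not taken from the slides.

```python
import math
import random


def tour_length(tour, cities):
    """Length of the closed tour, including the edge back to the start city."""
    return sum(
        math.dist(cities[tour[i]], cities[tour[(i + 1) % len(tour)]])
        for i in range(len(tour))
    )


def two_opt(cities, seed=0):
    """Iterative improvement: keep one complete tour, apply improving 2-opt exchanges."""
    rng = random.Random(seed)
    tour = list(range(len(cities)))
    rng.shuffle(tour)  # any complete tour is a valid starting state
    improved = True
    while improved:
        improved = False
        for i in range(1, len(tour) - 1):
            for j in range(i + 1, len(tour)):
                # Reversing tour[i..j] replaces edges (i-1, i) and (j, j+1) by (i-1, j) and (i, j+1)
                candidate = tour[:i] + tour[i:j + 1][::-1] + tour[j + 1:]
                if tour_length(candidate, cities) < tour_length(tour, cities) - 1e-12:
                    tour = candidate
                    improved = True
    return tour


# Usage: 8 cities on a circle; the local optimum is the octagon tour
circle = [(math.cos(2 * math.pi * k / 8), math.sin(2 * math.pi * k / 8)) for k in range(8)]
best = two_opt(circle, seed=3)
```

On points in convex position like these, any tour with crossing edges still has an improving exchange, so the search ends on the octagon. This naive version recomputes the whole tour length for every candidate and only suits small instances; practical variants score each exchange from the four edges it touches.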

Example: n-queens

Put n queens on an n × n board with no two queens on the same row, column, or diagonal.

Move a queen to reduce number of conflicts.

[Figure: three boards with h = 5, h = 2 and h = 0 conflicts]

Almost always solves n-queens problems almost instantaneously for very large n, e.g., n = 1 million.

Hill-climbing (or gradient ascent/descent)

"Like climbing Everest in thick fog with amnesia"

    function Hill-Climbing(problem) returns a state that is a local maximum
        inputs: problem, a problem
        local variables: current, a node
                         neighbor, a node

        current ← Make-Node(Initial-State[problem])
        loop do
            neighbor ← a highest-valued successor of current
            if Value[neighbor] ≤ Value[current] then return State[current]
            current ← neighbor
        end

Hill-climbing contd.

Useful to consider state space landscape

[Figure: state-space landscape; objective function plotted over the state space, marking the global maximum, a shoulder, a local maximum, a "flat" local maximum and the current state]

Random-restart hill climbing overcomes local maxima; trivially complete

Random sideways moves escape from shoulders, but loop on flat maxima

Simulated annealing

Idea: escape local maxima by allowing some "bad" moves, but gradually decrease their size and frequency

    function Simulated-Annealing(problem, schedule) returns a solution state
        inputs: problem, a problem
                schedule, a mapping from time to "temperature"
        local variables: current, a node
                         next, a node
                         T, a "temperature" controlling prob. of downward steps

        current ← Make-Node(Initial-State[problem])
        for t ← 1 to ∞ do
            T ← schedule[t]
            if T = 0 then return current
            next ← a randomly selected successor of current
            ΔE ← Value[next] − Value[current]
            if ΔE > 0 then current ← next
            else current ← next only with probability e^(ΔE/T)
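The Hill-Climbing pseudocode can be sketched in Python for n-queens, assuming the usual complete-state encoding (`state[c]` is the row of the queen in column `c`), successors that move one queen within its own column, and Value = −h, where h counts attacking pairs. Random restarts are added as on the "Hill-climbing contd." slide. All function names are hypothetical.

```python
import random
from itertools import combinations


def conflicts(state):
    """h: number of pairs of queens attacking each other (same row or same diagonal)."""
    return sum(
        1
        for (c1, r1), (c2, r2) in combinations(enumerate(state), 2)
        if r1 == r2 or abs(r1 - r2) == c2 - c1
    )


def hill_climbing(state):
    """Steepest-ascent Hill-Climbing with Value = -h; returns a local maximum."""
    current = list(state)
    while True:
        # Find the highest-valued successor (fewest conflicts) by trying every single-queen move
        best_h, best_move = conflicts(current), None
        for col in range(len(current)):
            original = current[col]
            for row in range(len(current)):
                if row != original:
                    current[col] = row
                    h = conflicts(current)
                    if h < best_h:
                        best_h, best_move = h, (col, row)
            current[col] = original  # undo the trial move
        if best_move is None:  # Value[neighbor] <= Value[current]
            return current
        col, row = best_move
        current[col] = row


def random_restart(n, seed=0):
    """Random-restart hill climbing: rerun from random states until h = 0 (needs n >= 4)."""
    rng = random.Random(seed)
    while True:
        result = hill_climbing([rng.randrange(n) for _ in range(n)])
        if conflicts(result) == 0:
            return result
```

This version recomputes h from scratch for every candidate move, so it is only practical for small n; reaching n = 1 million requires a far cheaper way of counting conflicts. For n = 2 or 3 there is no solution and `random_restart` would never return.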

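A direct Python transcription of Simulated-Annealing, assuming the problem is given as plain functions (`successors`, `value`) and a cooling `schedule` that returns 0 when the run should stop. The exponential schedule `exp_schedule` and its constants are hypothetical; the slides leave the schedule unspecified.

```python
import math
import random


def simulated_annealing(initial, successors, value, schedule, seed=0):
    """Maximise value(state); downhill moves are accepted with probability e^(dE/T)."""
    rng = random.Random(seed)
    current = initial
    t = 1
    while True:
        T = schedule(t)
        if T == 0:
            return current
        nxt = rng.choice(successors(current))  # a randomly selected successor
        delta_e = value(nxt) - value(current)
        # Uphill moves always accepted; otherwise accept with probability e^(delta_e / T)
        if delta_e > 0 or rng.random() < math.exp(delta_e / T):
            current = nxt
        t += 1


def exp_schedule(t, t0=10.0, decay=0.99, limit=1e-3):
    """Hypothetical schedule: T = t0 * decay^t, cut to 0 once it drops below limit."""
    T = t0 * decay ** t
    return T if T >= limit else 0


# Usage: maximise -(x - 10)^2 over the integers 0..20, moving by +/-1
best = simulated_annealing(
    0,
    lambda x: [y for y in (x - 1, x + 1) if 0 <= y <= 20],
    lambda x: -(x - 10) ** 2,
    exp_schedule,
)
```

Early on (large T) even clearly worse neighbours are often accepted; in the last few hundred steps T is so small that the walk is effectively greedy, so on this toy problem it settles at x = 10.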
Properties of simulated annealing

At fixed "temperature" T, state occupation probability reaches the Boltzmann distribution

$$p(x) = \alpha\, e^{E(x)/kT}$$

T decreased slowly enough ⇒ always reach best state $x^*$, because

$$\frac{e^{E(x^*)/kT}}{e^{E(x)/kT}} = e^{(E(x^*) - E(x))/kT} \gg 1 \quad \text{for small } T$$

Is this necessarily an interesting guarantee??

Devised by Metropolis et al., 1953, for physical process modelling

Widely used in VLSI layout, airline scheduling, etc.

Local beam search

Idea: keep k states instead of 1; choose top k of all their successors

Not the same as k searches run in parallel! Searches that find good states recruit other searches to join them

Problem: quite often, all k states end up on same local hill

Idea: choose k successors randomly, biased towards good ones

Observe the close analogy to natural selection!

Genetic algorithms

= stochastic local beam search + generate successors from pairs of states

| Initial population | Fitness | Selection | Pairs | Cross-Over | Mutation |
|---|---|---|---|---|---|
| 24748552 | 24 | 31% | 32752411 | 32748552 | 32748152 |
| 32752411 | 23 | 29% | 24748552 | 24752411 | 24752411 |
| 24415124 | 20 | 26% | 32752411 | 32752124 | 32252124 |
| 32543213 | 11 | 14% | 24415124 | 24415411 | 24415417 |

Genetic algorithms contd.

GAs require states encoded as strings (GPs use programs)

Crossover helps iff substrings are meaningful components

[Figure: two parent 8-queens boards combined by crossover (+ =) into a child board]

GAs ≠ evolution: e.g., real genes encode replication machinery!
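A sketch of the genetic algorithm for 8-queens under the encoding in the table: one digit per column giving the queen's row (1 to 8), and fitness = number of non-attacking pairs, so 28 means solved. The Selection column is each fitness divided by the total (24 + 23 + 20 + 11 = 78; e.g. 24/78 ≈ 31%). Fitness-proportional selection, a single random crossover point and a small mutation probability follow the figure; the parameter values and function names are assumptions.

```python
import random
from itertools import combinations


def fitness(state):
    """Non-attacking pairs of queens; state[c] is the row (1-8) of the queen in column c."""
    rows = [int(ch) for ch in state]
    attacking = sum(
        1
        for (c1, r1), (c2, r2) in combinations(enumerate(rows), 2)
        if r1 == r2 or abs(r1 - r2) == c2 - c1
    )
    return len(rows) * (len(rows) - 1) // 2 - attacking


def reproduce(x, y, c):
    """Single-point crossover: the first c digits of x followed by the rest of y."""
    return x[:c] + y[c:]


def mutate(state, rng, n=8):
    """Replace one randomly chosen digit by a random row in 1..n."""
    i = rng.randrange(len(state))
    return state[:i] + str(rng.randint(1, n)) + state[i + 1:]


def genetic_algorithm(population, generations=1000, p_mutate=0.1, seed=0):
    """Return a solution (fitness 28) if one appears, else the fittest final individual."""
    rng = random.Random(seed)
    goal = 28  # all 8 * 7 / 2 pairs non-attacking
    for _ in range(generations):
        best = max(population, key=fitness)
        if fitness(best) == goal:
            return best
        weights = [fitness(s) for s in population]
        if not any(weights):
            weights = None  # every fitness is 0: fall back to uniform selection
        new_population = []
        for _ in range(len(population)):
            # Fitness-proportional selection of two parents
            x, y = rng.choices(population, weights=weights, k=2)
            child = reproduce(x, y, rng.randrange(1, len(x)))
            if rng.random() < p_mutate:
                child = mutate(child, rng)
            new_population.append(child)
        population = new_population
    return max(population, key=fitness)
```

For example, `fitness("24748552")` gives 24 and `reproduce("32752411", "24748552", 3)` gives "32748552", matching the first row of the table. Whether a solution appears within the generation limit depends on the seed, which is why the function falls back to the fittest individual.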

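The local beam search idea (keep k states, choose the top k of all their successors) can be sketched as follows, assuming successors come from a `successors` function and search stops when `is_goal` holds. The toy problem in the usage lines is hypothetical; it only shows the beam following its best member uphill.

```python
import heapq
import random


def local_beam_search(k, random_state, successors, value, is_goal, max_steps=1000, seed=0):
    """Keep k states; at each step pool the successors of all of them and keep the best k."""
    rng = random.Random(seed)
    states = [random_state(rng) for _ in range(k)]
    for _ in range(max_steps):
        for s in states:
            if is_goal(s):
                return s
        # One shared pool: a state with good successors can take over several beam slots
        pool = [nxt for s in states for nxt in successors(s)]
        if not pool:
            break
        states = heapq.nlargest(k, pool, key=value)
    return max(states, key=value)


# Usage: maximise -(x - 50)^2 over the integers 0..100, moving by +/-1
result = local_beam_search(
    k=3,
    random_state=lambda rng: rng.randint(0, 100),
    successors=lambda x: [y for y in (x - 1, x + 1) if 0 <= y <= 100],
    value=lambda x: -(x - 50) ** 2,
    is_goal=lambda x: x == 50,
)
```

The shared pool is what separates this from k independent hill climbers: after a step or two all k slots are typically filled by descendants of the single best starting state, which is the "all k states end up on same local hill" problem noted on the slide.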
Continuous state spaces

Suppose we want to site three robot battery loading stations in the hospital:
– 6-D state space defined by $(x_1, y_1)$, $(x_2, y_2)$, $(x_3, y_3)$
– objective function $f(x_1, y_1, x_2, y_2, x_3, y_3)$ = sum of squared distances from each location to nearest loading station

Discretization methods turn continuous space into discrete space, e.g., empirical gradient considers ±δ change in each coordinate

Gradient methods compute

$$\nabla f = \left(\frac{\partial f}{\partial x_1}, \frac{\partial f}{\partial y_1}, \frac{\partial f}{\partial x_2}, \frac{\partial f}{\partial y_2}, \frac{\partial f}{\partial x_3}, \frac{\partial f}{\partial y_3}\right)$$

to increase f, e.g., by $x \leftarrow x + \alpha \nabla f(x)$, or to reduce it, by $x \leftarrow x - \alpha \nabla f(x)$

Sometimes can solve for $\nabla f(x) = 0$ exactly (e.g., with one station, whose optimum is the centroid of the locations).

Newton–Raphson (1664, 1690) iterates $x \leftarrow x - H_f^{-1}(x)\, \nabla f(x)$ to solve $\nabla f(x) = 0$, where $H_{ij} = \partial^2 f / \partial x_i \partial x_j$

Searching with nondeterministic actions

[Figure: state space of the two-square vacuum world, with actions L (Left), R (Right) and S (Suck)]

Erratic vacuum world: modified Suck (on a dirty square it cleans that square and sometimes the adjacent one too; on a clean square it sometimes deposits dirt)

Slippery vacuum world: modified Right and Left (a move sometimes fails, leaving the agent where it was)

Searching with nondeterministic actions: and-or search trees

For the erratic case:

[Figure: and-or search tree for the erratic vacuum world; branches labelled Suck, Right and Left, leaves marked GOAL or LOOP]

For the slippery case:

[Figure: and-or search tree for the slippery vacuum world; branches labelled Right and Suck, leaves marked GOAL or LOOP]
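Returning to the station-siting objective, the two ways of obtaining its gradient can be sketched in plain Python. Assumptions: `x` is the flat vector [x1, y1, x2, y2, x3, y3], the locations to serve are a hypothetical list of points, and since f is to be reduced the update is x ← x − α∇f(x). The analytic gradient treats each point's nearest station as fixed, which holds everywhere except where a point is equidistant from two stations.

```python
def f(x, points):
    """Sum of squared distances from each point to its nearest station."""
    stations = [(x[i], x[i + 1]) for i in range(0, len(x), 2)]
    return sum(min((px - sx) ** 2 + (py - sy) ** 2 for sx, sy in stations) for px, py in points)


def grad_f(x, points):
    """Analytic gradient: each point pulls only its nearest station, adding 2 * (station - point)."""
    g = [0.0] * len(x)
    for px, py in points:
        j = min(range(0, len(x), 2), key=lambda i: (px - x[i]) ** 2 + (py - x[i + 1]) ** 2)
        g[j] += 2 * (x[j] - px)
        g[j + 1] += 2 * (x[j + 1] - py)
    return g


def empirical_grad(x, points, delta=1e-5):
    """Empirical gradient: central difference from a +/-delta change in each coordinate."""
    g = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += delta
        down[i] -= delta
        g.append((f(up, points) - f(down, points)) / (2 * delta))
    return g


def gradient_descent(x, points, alpha=0.05, steps=500):
    """x <- x - alpha * grad f(x), with a fixed step size and number of steps."""
    x = list(x)
    for _ in range(steps):
        x = [xi - alpha * gi for xi, gi in zip(x, grad_f(x, points))]
    return x
```

With a single station the minimiser is the centroid of the points, which gives an easy check: `gradient_descent([5.0, -4.0], [(0, 0), (2, 0), (1, 3)])` approaches (1, 1). The fixed step size must be small relative to the number of points served by a station (here each step shrinks the distance to the centroid by a factor 1 − 2αm for m points), otherwise the update overshoots.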

Searching with nondeterministic actions

    function And-Or-Graph-Search(problem) returns a conditional plan, or failure
        Or-Search(problem.Initial-State, problem, [])

    function Or-Search(state, problem, path) returns a conditional plan, or failure
        if problem.Goal-Test(state) then return the empty plan
        if state is on path then return failure
        for each action in problem.Actions(state) do
            plan ← And-Search(Results(state, action), problem, [state | path])
            if plan ≠ failure then return [action | plan]
        return failure

    function And-Search(states, problem, path) returns a conditional plan, or failure
        for each s_i in states do
            plan_i ← Or-Search(s_i, problem, path)
            if plan_i = failure then return failure
        return [if s_1 then plan_1 else if s_2 then plan_2 else if ... if s_(n−1) then plan_(n−1) else plan_n]

Searching with partial observations

♦ No-information case: sensorless problem, or conformant problem
♦ State-space search is made in belief space
♦ Problem solving: and-or search!

Searching with partial observations

Deterministic case:

[Figure: reachable belief-state space of the sensorless, deterministic vacuum world, starting from {1,2,3,4,5,6,7,8}, with transitions labelled L, R and S]

Local sensing, deterministic and slippery cases:

[Figure: belief-state updates with local sensing. Deterministic: Right from {1,3} predicts {2,4}; percept [B,Dirty] gives {2}, [B,Clean] gives {4}. Slippery: Right from {1,3} predicts {1,2,3,4}; percepts [B,Dirty], [A,Dirty] and [B,Clean] give {2}, {1,3} and {4}]
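The And-Or-Graph-Search pseudocode translates almost line by line into Python. For illustration, the sketch assumes the erratic vacuum world with states encoded as (location, dirt in A, dirt in B), represents failure as `None`, the empty plan as `[]`, and a conditional step as `[action, {outcome_state: subplan}]`; the class and function names are hypothetical.

```python
class ErraticVacuum:
    """Erratic two-square vacuum world; a state is (location, dirt_in_A, dirt_in_B)."""

    def __init__(self, initial):
        self.initial = initial

    def actions(self, state):
        return ["Suck", "Right", "Left"]

    def goal_test(self, state):
        return not state[1] and not state[2]

    def results(self, state, action):
        """All possible outcome states, as an ordered list without duplicates."""
        loc, dirt_a, dirt_b = state
        if action == "Right":
            return [("B", dirt_a, dirt_b)]
        if action == "Left":
            return [("A", dirt_a, dirt_b)]
        here_dirty = dirt_a if loc == "A" else dirt_b
        if here_dirty:
            # Cleans this square, and sometimes the adjacent square too
            only_here = (loc, False, dirt_b) if loc == "A" else (loc, dirt_a, False)
            outcomes = [only_here, (loc, False, False)]
        else:
            # On a clean square, Suck sometimes deposits dirt
            dirtied = (loc, True, dirt_b) if loc == "A" else (loc, dirt_a, True)
            outcomes = [state, dirtied]
        return list(dict.fromkeys(outcomes))


def and_or_graph_search(problem):
    return or_search(problem.initial, problem, [])


def or_search(state, problem, path):
    if problem.goal_test(state):
        return []  # the empty plan
    if state in path:
        return None  # failure: this branch would cycle
    for action in problem.actions(state):
        plan = and_search(problem.results(state, action), problem, [state] + path)
        if plan is not None:
            return [action, plan]
    return None


def and_search(states, problem, path):
    """Every possible outcome needs its own subplan: {s_i: plan_i}."""
    plans = {}
    for s in states:
        plan = or_search(s, problem, path)
        if plan is None:
            return None
        plans[s] = plan
    return plans


plan = and_or_graph_search(ErraticVacuum(("A", True, True)))
```

With the agent in A and both squares dirty, `plan` is `['Suck', {('A', False, True): ['Right', {('B', False, True): ['Suck', {('B', False, False): []}]}], ('A', False, False): []}]`, i.e. "Suck; if B is still dirty then Right, Suck". The path check rejects cycles, so in the slippery world, where the plans need the LOOP construct from the slippery and-or tree, this acyclic search returns failure.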

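For the no-information (sensorless) case, a sketch of search in belief space: a belief state is a set of physical states, an action is applied to every member, and a belief state counts as a goal only if every member is a goal. Breadth-first search and the (location, dirt in A, dirt in B) encoding are assumptions of this sketch; the slides number the same eight states 1 to 8.

```python
from collections import deque
from itertools import product


def step(state, action):
    """Deterministic vacuum world; state = (location, dirt_in_A, dirt_in_B)."""
    loc, dirt_a, dirt_b = state
    if action == "Right":
        return ("B", dirt_a, dirt_b)
    if action == "Left":
        return ("A", dirt_a, dirt_b)
    return ("A", False, dirt_b) if loc == "A" else ("B", dirt_a, False)  # Suck


def is_goal(state):
    return not state[1] and not state[2]


def sensorless_search(initial_belief, actions=("Left", "Right", "Suck")):
    """Breadth-first search over belief states; the plan must work in every member state."""
    start = frozenset(initial_belief)
    frontier = deque([(start, [])])
    explored = {start}
    while frontier:
        belief, plan = frontier.popleft()
        if all(is_goal(s) for s in belief):
            return plan
        for a in actions:
            successor = frozenset(step(s, a) for s in belief)  # apply a to every possible state
            if successor not in explored:
                explored.add(successor)
                frontier.append((successor, plan + [a]))
    return None


all_states = set(product("AB", (True, False), (True, False)))  # the 8 physical states
plan = sensorless_search(all_states)
```

Starting from the belief state with all eight states, it returns a four-action plan, either [Left, Suck, Right, Suck] or its mirror image depending on the order in which actions are tried. No three-action plan works: both squares must be sucked while the agent's location is known.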
Searching with partial observations

Planning for the local sensing case:

[Figure: first levels of an and-or search tree for the local sensing case, with actions Suck and Right, percept branches [B,Clean] and [B,Dirty], and numbered vacuum-world states]

Online search and unknown environments

Interleaving computations and actions:
♦ act
♦ observe the results
♦ find out (compute) next action

Useful in dynamic domains.

Online search usually exploits locality of depth-first-like methods:
♦ random walk
♦ modified hill-climbing
♦ Learning Real-Time A* (LRTA*): optimism under uncertainty (unexplored areas assumed to lead to goal with least possible cost)
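A sketch of an LRTA* agent following the optimism-under-uncertainty rule above: an action whose outcome has not been observed yet is assumed to reach the goal at cost h(s). Assumptions: unit step costs, an admissible heuristic `h`, and a hypothetical six-cell corridor as the unknown environment; the agent learns what an action does only by trying it.

```python
class LRTAStarAgent:
    """Learning Real-Time A*: act, observe the resulting state, update cost estimates."""

    def __init__(self, actions, h, is_goal, cost=lambda s, a, s2: 1):
        self.actions, self.h, self.is_goal, self.cost = actions, h, is_goal, cost
        self.H = {}  # learned cost-to-goal estimates H[s]
        self.result = {}  # observed outcomes: result[(s, a)] = s'
        self.s = self.a = None  # previous state and action

    def lrta_cost(self, s, a, s2):
        if s2 is None:  # untried action: optimistically assume it reaches the goal at cost h(s)
            return self.h(s)
        return self.cost(s, a, s2) + self.H[s2]

    def __call__(self, s1):
        """Receive the current state, return the next action (None means stop)."""
        if self.is_goal(s1):
            return None
        self.H.setdefault(s1, self.h(s1))
        if self.s is not None:
            # Record what the last action did and update the estimate for the state we left
            self.result[(self.s, self.a)] = s1
            self.H[self.s] = min(
                self.lrta_cost(self.s, b, self.result.get((self.s, b)))
                for b in self.actions(self.s)
            )
        self.a = min(
            self.actions(s1), key=lambda b: self.lrta_cost(s1, b, self.result.get((s1, b)))
        )
        self.s = s1
        return self.a


# Hypothetical environment: corridor cells 0..5, goal at 5; moving into a wall leaves the agent in place
GOAL = 5


def move(s, a):
    return max(s - 1, 0) if a == "Left" else min(s + 1, GOAL)


agent = LRTAStarAgent(actions=lambda s: ["Left", "Right"], h=lambda s: GOAL - s,
                      is_goal=lambda s: s == GOAL)
state, trajectory = 0, [0]
while (action := agent(state)) is not None and len(trajectory) < 100:
    state = move(state, action)  # only the environment knows the transition model
    trajectory.append(state)
```

Because untried actions look as cheap as h(s), this agent first tries Left in every new cell, bumps the wall or steps back, raises its estimates and then moves on. It still reaches the goal, since LRTA* is complete in a finite, safely explorable state space.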
