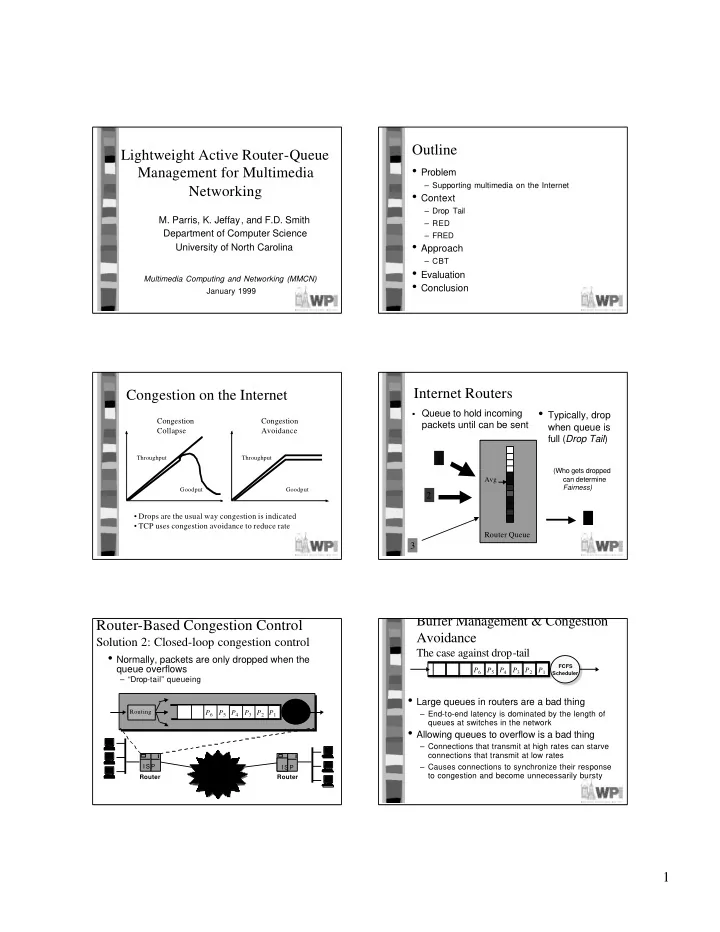

Lightweight Active Router-Queue Management for Multimedia Networking
M. Parris, K. Jeffay, and F.D. Smith
Department of Computer Science, University of North Carolina
Multimedia Computing and Networking (MMCN), January 1999

Outline
• Problem
  – Supporting multimedia on the Internet
• Context
  – Drop Tail
  – RED
  – FRED
• Approach
  – CBT
• Evaluation
• Conclusion

Internet Routers
[Figure: router queue]
• Queue to hold incoming packets until can be sent
• Typically, drop when queue is full (Drop Tail)
• Who gets dropped can determine fairness

Congestion on the Internet
[Figure: throughput and goodput curves, with regions labeled Congestion Avoidance and Congestion Collapse]
• Drops are the usual way congestion is indicated
• TCP uses congestion avoidance to reduce rate

Buffer Management & Congestion Avoidance
The case against drop-tail
[Figure: packets P1 to P6 in an FCFS queue feeding a scheduler]
• Normally, packets are only dropped when the FCFS queue overflows
  – “Drop-tail” queueing
• Large queues in routers are a bad thing
  – End-to-end latency is dominated by the length of queues at switches in the network
• Allowing queues to overflow is a bad thing
  – Connections that transmit at high rates can starve connections that transmit at low rates
  – Causes connections to synchronize their response to congestion and become unnecessarily bursty

Router-Based Congestion Control
Solution 2: Closed-loop congestion control
[Figure: two ISP routers connected across an inter-network; packets P1 to P6 pass through routing into an FCFS scheduler]

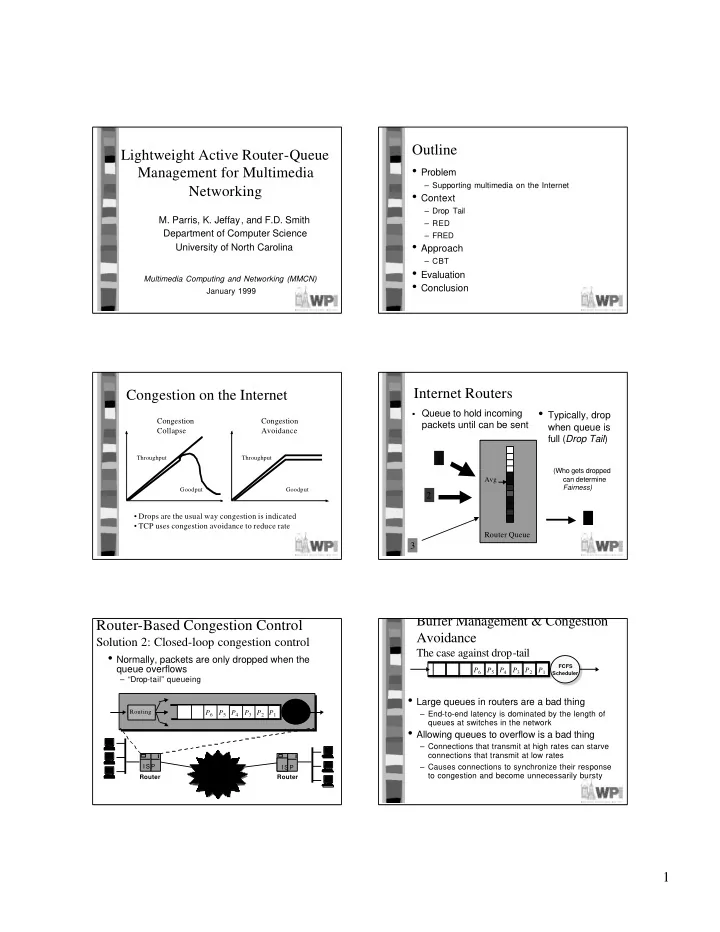

Buffer Management & Congestion Avoidance
Random early detection (RED) packet drop
[Figure: average queue length over time, plotted against the max queue length and the min and max thresholds. Below the min threshold: no drop. Between the thresholds: probabilistic early drop. Above the max threshold: forced drop. A drop-probability axis runs alongside.]
• Use an exponential average of the queue length to determine when to drop
  – Accommodates short-term bursts
• Tie the drop probability to the weighted average queue length
  – Avoids over-reaction to mild overload conditions
• Amount of packet loss is roughly proportional to a connection’s bandwidth utilization
  – But there is no a priori bias against bursty sources
• Average connection latency is lower
• Average throughput (“goodput”) is higher
• RED is controlled by 5 parameters
  – qlen: The maximum length of the queue
  – w_q: Weighting factor for average queue length computation
  – min_th: Minimum queue length for triggering probabilistic drops
  – max_th: Queue length threshold for triggering forced drops
  – max_p: The maximum drop probability
[Figure: drop probability versus weighted average queue length. The probability is zero below min, rises to max_p at max, and is 100% beyond max.]

Random Early Detection
Algorithm

    for each packet arrival:
        calculate the average queue size ave
        if ave ≤ min_th
            do nothing
        else if min_th ≤ ave ≤ max_th
            calculate drop probability p
            drop arriving packet with probability p
        else if max_th ≤ ave
            drop the arriving packet

• The average queue length computation needs to be low pass filtered to smooth out transients due to bursts
  – $ave = (1 - w_q)\,ave + w_q\,q$

Random Early Detection
Performance
• Floyd/Jacobson simulation of two TCP (ftp) flows
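The RED drop decision described on these slides can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the class name `RedQueue` and its methods are hypothetical, the linear ramp from 0 to max_p between the two thresholds is assumed from the drop-probability figure, and the default values are borrowed from the RED settings listed on the experimental design slide. Refinements of the published RED algorithm (spacing drops by packet count, correcting the average after idle periods) are left out.

```python
import random


class RedQueue:
    """Toy RED queue (hypothetical helper); parameter names follow the slides."""

    def __init__(self, qlen=60, w_q=0.002, min_th=15, max_th=30, max_p=0.1, rng=None):
        self.qlen = qlen        # maximum length of the queue, in packets
        self.w_q = w_q          # weighting factor for the average
        self.min_th = min_th    # below this average: never drop
        self.max_th = max_th    # at or above this average: always drop
        self.max_p = max_p      # drop probability reached just below max_th
        self.ave = 0.0          # low-pass filtered queue length
        self.queue = []
        self.rng = rng or random.Random()

    def drop_probability(self):
        """Map the current average queue length to a drop probability."""
        if self.ave < self.min_th:
            return 0.0
        if self.ave >= self.max_th:
            return 1.0
        # Assumed linear ramp between the thresholds.
        return self.max_p * (self.ave - self.min_th) / (self.max_th - self.min_th)

    def enqueue(self, packet):
        """Return True if the packet was queued, False if it was dropped."""
        # ave = (1 - w_q) * ave + w_q * q, with q the instantaneous length.
        self.ave = (1 - self.w_q) * self.ave + self.w_q * len(self.queue)
        if len(self.queue) >= self.qlen:
            return False  # queue physically full
        if self.rng.random() < self.drop_probability():
            return False  # early or forced drop
        self.queue.append(packet)
        return True
```

With w_q as small as 0.002, a short burst barely moves `ave`, which is how the filter accommodates bursts while still reacting to sustained overload.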

RED Vulnerability to Misbehaving Flows
• TCP performance on a 10 Mbps link under RED in the face of a “UDP” blast

Random Early Detection (RED)
Summary
• Controls average queue size
• Drop early to signal impending congestion
• Drops proportional to bandwidth, but drop rate equal for all flows
• Unresponsive traffic will still not slow down!

Router-Based Congestion Control
Dealing with heterogeneous/non-responsive flows
[Figure: packet classifier feeding a scheduler]
• TCP requires protection/isolation from non-responsive flows
• Solutions?
  – Employ fair-queuing/link scheduling mechanisms
  – Identify and police non-responsive flows (not here)
  – Employ fair buffer allocation within a RED mechanism

Dealing With Non-Responsive Flows
Isolating responsive and non-responsive flows
[Figure: packet classifier feeding a scheduler]
• Class-based Queuing (CBQ) (Floyd/Jacobson) provides fair allocation of bandwidth to traffic classes
  – Separate queues are provided for each traffic class and serviced in round robin order (or weighted round robin)
  – n classes each receive exactly 1/n of the capacity of the link
• Separate queues ensure perfect isolation between classes
• Drawback: ‘reservation’ of bandwidth and state information required

Dealing With Non-Responsive Flows
CBQ performance
[Figure: TCP throughput (Kbytes/s, 0 to 1,400) versus time (seconds, 0 to 100) under CBQ, RED, and FIFO, with the UDP bulk transfer interval marked]

Dealing With Non-Responsive Flows
Fair buffer allocation
[Figure: flows f1 ... fn pass through a classifier into a single FCFS queue and scheduler]
• Isolation can be achieved by reserving capacity for flows within a single FIFO queue
  – Rather than maintain separate queues, keep counts of packets in a single queue
• Lin/Morris: Modify RED to perform fair buffer allocation between active flows
  – Independent of protection issues, fair buffer allocation between TCP connections is also desirable
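The isolation that separate per-class queues provide can be illustrated with a small round-robin sketch in Python. It is a simplification under stated assumptions: the function name `round_robin` is hypothetical, service is counted per packet rather than per byte, and none of CBQ's link-sharing machinery is modeled.

```python
from collections import deque


def round_robin(class_queues):
    """Yield packets by visiting each non-empty class queue in turn.

    `class_queues` is a list of per-class packet lists (hypothetical input
    format). Each pass over the classes sends at most one packet per class.
    """
    queues = [deque(q) for q in class_queues]
    while any(queues):
        for q in queues:
            if q:
                yield q.popleft()


# A 100-packet blast in one class cannot delay the other class by more
# than one packet per round.
order = list(round_robin([["tcp"] * 3, ["udp"] * 100]))
print(order[:6])
```

The cost noted on the slide is visible here too: the router has to keep one queue, and the state that goes with it, for every class.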

Flow Random Early Detection (FRED)
• In RED, 10 Mbps → 9 Mbps and 1 Mbps → .9 Mbps
  – Unfair
• In FRED, leave 1 Mbps untouched until 10 Mbps is down
• Separate drop probabilities per flow
• “Light” flows have no drops, heavy flows have high drops

Flow Random Early Detection (FRED)
Performance in the face of non-responsive flows
[Figure: TCP throughput (KBytes/sec, 0 to 1,400) versus time (secs, 0 to 100) under FRED and RED, with the UDP bulk transfer interval marked]

Congestion Avoidance v. Fair-Sharing
TCP throughput under different queue management schemes
[Figure: TCP throughput (KBytes/sec, 0 to 1,400) versus time (0 to 100) under CBQ, FRED, RED, and FIFO, with the UDP bulk transfer interval marked]
• TCP performance as a function of the state required to ensure/approximate fairness

Queue Management
Recommendations
• Recommend (Braden 1998, Floyd 1998)
  – Deploy RED
    + Avoid full queues, reduce latency, reduce packet drops, avoid lock out
  – Continue research into ways to punish aggressive or misbehaving flows
• Multimedia
  – Does not use TCP
    + Can tolerate some loss
    + Price for latency is too high
  – Often low-bandwidth
  – Delay sensitive

Goals
• Isolation
  – Responsive (TCP) from unresponsive
  – Unresponsive: multimedia from aggressive
• Flexible fairness
  – Something more than equal shares for all
• Lightweight
  – Minimal state per flow
• Maintain benefits of RED
  – Feedback
  – Distribution of drops
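The 10 Mbps and 1 Mbps example on the FRED slide can be worked through numerically. The Python sketch below compares the two outcomes only; it does not model FRED's mechanism, which relies on per-flow buffer counts inside the router. Both function names are hypothetical, and `trim_heaviest` is an idealized max-min style allocation assumed here to stand in for "leave the light flow untouched until the heavy flow is down".

```python
def uniform_drop(rates, drop_rate):
    """RED-like outcome: every flow loses the same fraction of its rate."""
    return [r * (1 - drop_rate) for r in rates]


def trim_heaviest(rates, excess):
    """Idealized FRED-like outcome: shed `excess` load from the heaviest flows.

    Flows are visited from lightest to heaviest; each keeps its full rate
    unless that would exceed an equal share of what is still available.
    """
    remaining = sum(rates) - excess
    result = list(rates)
    order = sorted(range(len(rates)), key=lambda i: rates[i])
    for pos, i in enumerate(order):
        share = remaining / (len(rates) - pos)
        result[i] = min(rates[i], share)
        remaining -= result[i]
    return result


flows = [10.0, 1.0]  # Mbps, as on the slide
print(uniform_drop(flows, 0.1))   # both flows lose 10%
print(trim_heaviest(flows, 1.1))  # same total loss, taken from the 10 Mbps flow
```

Both calls remove 1.1 Mbps of load in total; the difference is that the second leaves the 1 Mbps flow at its full rate.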

Class-Based Threshold (CBT)
[Figure: flows f1, f2, ... fn pass through a classifier into a single FCFS queue and scheduler]
• Designate a set of traffic classes and allocate a fraction of a router’s buffer capacity to each class
• Once a class is occupying its limit of queue elements, discard all arriving packets
• Within a traffic class, further active queue management may be performed

Class-Based Threshold (CBT)
• Isolation
  – Packets are classified into 1 of 3 classes
  – Statistics are kept for each class
• Flexible fairness
  – Configurable thresholds determine the ratios between classes during periods of congestion
• Lightweight
  – State per class and not per flow
  – Still one outbound queue
• Maintain benefits of RED
  – Continue with RED policies for TCP

CBT Implementation
• Implemented in Alt-Q on FreeBSD
• Three traffic classes:
  – TCP
  – marked non-TCP (“well behaved UDP”)
  – non-marked non-TCP (all others)
• Subject TCP flows to RED and non-TCP flows to a weighted average queue occupancy threshold test

Class-Based Thresholds
Evaluation
[Figure: two routers connected across an inter-network]
• Compare:
  – FIFO queuing (Lower bound baseline)
  – RED (The Internet of tomorrow?)
  – FRED (RED + Fair allocation of buffers)
  – CBT
  – CBQ (Upper bound baseline)

CBT Evaluation
Experimental design
[Figure: two routers connected across an inter-network, carrying 6 ProShare unresponsive MM flows (210Kbps each), 240 FTP-TCP flows, and 1 UDP blast (10Mbps, 1KB); the traffic timeline is marked at 0, 20, 60, 110, 160, and 180; measured: throughput and latency]
• RED Settings:
  – qsize = 60 pkts
  – max-th = 30 pkts
  – min-th = 15 pkts
  – Wq = 0.002
  – max-p = 0.1
• CBT Settings:
  – mm-th = 10 pkts
  – udp-th = 2 pkts

CBT Evaluation
TCP Throughput
[Figure: TCP throughput (kbps, 0 to 1,400) versus elapsed time (s, 0 to 100) under CBQ, CBT, FRED, RED, and FIFO, with the UDP bulk transfer interval marked]
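The per-class threshold test behind CBT can be sketched as follows, in Python. This is an illustration under assumptions, not the Alt-Q code: the class `CbtQueue` and its field names are hypothetical, the comparison of the weighted average against the threshold uses `>=` as a guess, and TCP packets are simply accepted here where the real system would apply RED.

```python
from collections import deque


class CbtQueue:
    """Toy CBT queue (hypothetical helper): one FIFO, one counter per class."""

    def __init__(self, thresholds, w_q=0.002):
        self.thresholds = dict(thresholds)  # class name -> limit in packets
        self.w_q = w_q
        self.count = {cls: 0 for cls in self.thresholds}    # packets now queued
        self.ave = {cls: 0.0 for cls in self.thresholds}    # weighted average
        self.fifo = deque()                                 # single outbound queue

    def enqueue(self, cls, packet):
        """Return True if queued, False if the class is over its threshold."""
        if cls in self.thresholds:
            # Same low-pass filter as RED, applied to this class's occupancy.
            self.ave[cls] = (1 - self.w_q) * self.ave[cls] + self.w_q * self.count[cls]
            if self.ave[cls] >= self.thresholds[cls]:
                return False
            self.count[cls] += 1
        # Classes without a threshold (TCP in this sketch) would go through RED.
        self.fifo.append((cls, packet))
        return True

    def dequeue(self):
        """Send the packet at the head of the queue and update its class count."""
        cls, packet = self.fifo.popleft()
        if cls in self.count:
            self.count[cls] -= 1
        return packet


# Thresholds taken from the CBT settings on the experimental design slide.
q = CbtQueue({"mm": 10, "udp": 2})
```

The state kept is two numbers per class regardless of how many flows are active, which is the "lightweight" property the slides contrast with FRED's per-flow counts.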
