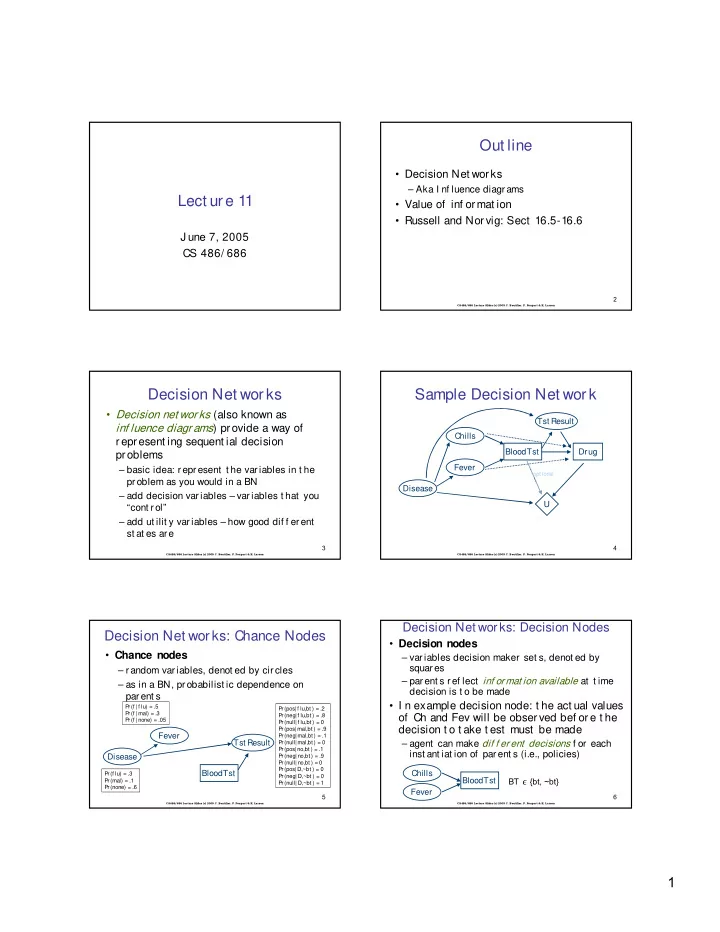

Lecture 11

CS 486/686
June 7, 2005

CS486/686 Lecture Slides (c) 2005 C. Boutilier, P. Poupart & K. Larson

Outline

• Decision Networks
  – a.k.a. Influence diagrams
• Value of information
• Russell and Norvig: Sect 16.5-16.6

Decision Networks

• Decision networks (also known as influence diagrams) provide a way of representing sequential decision problems
  – basic idea: represent the variables in the problem as you would in a BN
  – add decision variables: variables that you "control"
  – add utility variables: how good different states are

Sample Decision Network

[Figure: sample decision network over Chills, Fever, Disease, Tst Result, BloodTst, Drug, and value node U; one part is labeled "optional"]

Decision Networks: Decision Nodes

• Decision nodes
  – variables the decision maker sets, denoted by squares
  – parents reflect the information available at the time the decision is to be made
• In the example decision node, the actual values of Ch and Fev will be observed before the decision to take the test must be made
  – the agent can make different decisions for each instantiation of the parents (i.e., policies)

[Figure: decision node BloodTst with parents Chills and Fever; BT ∊ {bt, ~bt}]

Decision Networks: Chance Nodes

• Chance nodes
  – random variables, denoted by circles
  – as in a BN, probabilistic dependence on parents
• Pr(flu) = .3, Pr(mal) = .1, Pr(none) = .6
• Pr(f | flu) = .5, Pr(f | mal) = .3, Pr(f | none) = .05
• Tst Result given Disease and BloodTst:

| Disease, BloodTst | Pr(pos) | Pr(neg) | Pr(null) |
|---|---|---|---|
| flu, bt | .2 | .8 | 0 |
| mal, bt | .9 | .1 | 0 |
| none, bt | .1 | .9 | 0 |
| any D, ~bt | 0 | 0 | 1 |

[Figure: chance nodes Disease, Fever, and Tst Result, with BloodTst as a parent of Tst Result]
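To make the chance-node semantics concrete, here is a minimal Python sketch that stores the CPTs above as dictionaries and answers two BN queries by enumeration. It is an illustration under stated assumptions, not code from the slides: Chills is left out because its CPT is not given, the BloodTst decision is passed in as an ordinary conditioning value, and all function names are hypothetical.

```python
# CPTs copied from the chance-node slide. Chills is omitted: its CPT is not given.
P_DISEASE = {"flu": 0.3, "mal": 0.1, "none": 0.6}   # Pr(Disease)
P_FEVER = {"flu": 0.5, "mal": 0.3, "none": 0.05}    # Pr(f | Disease)
P_POS = {"flu": 0.2, "mal": 0.9, "none": 0.1}       # Pr(pos | Disease, bt)


def p_fever(fever: bool, disease: str) -> float:
    """Pr(Fever = fever | Disease = disease)."""
    p = P_FEVER[disease]
    return p if fever else 1.0 - p


def p_test_result(tr: str, disease: str, bt: bool) -> float:
    """Pr(TstResult = tr | Disease, BloodTst = bt); tr is "pos", "neg" or "null"."""
    if not bt:
        # No test taken: the result is "null" with probability 1.
        return 1.0 if tr == "null" else 0.0
    pos = P_POS[disease]
    return {"pos": pos, "neg": 1.0 - pos, "null": 0.0}[tr]


def marginal_test_result(tr: str, bt: bool) -> float:
    """Pr(TstResult = tr | BloodTst = bt), summing out Disease."""
    return sum(P_DISEASE[d] * p_test_result(tr, d, bt) for d in P_DISEASE)


def posterior_disease(fever: bool, tr: str, bt: bool) -> dict:
    """Pr(Disease | Fever, TstResult, BloodTst) by enumeration and normalization."""
    joint = {d: P_DISEASE[d] * p_fever(fever, d) * p_test_result(tr, d, bt)
             for d in P_DISEASE}
    z = sum(joint.values())  # Pr(fever, tr | bt); assumed to be nonzero
    return {d: w / z for d, w in joint.items()}


print(marginal_test_result("pos", True))     # ≈ 0.21
print(posterior_disease(True, "pos", True))  # flu ≈ 0.5, mal ≈ 0.45, none ≈ 0.05
```

With the test taken, a positive result has marginal probability .3(.2) + .1(.9) + .6(.1) = .21, and observing f together with pos shifts the belief to roughly .5 flu, .45 mal, .05 none.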

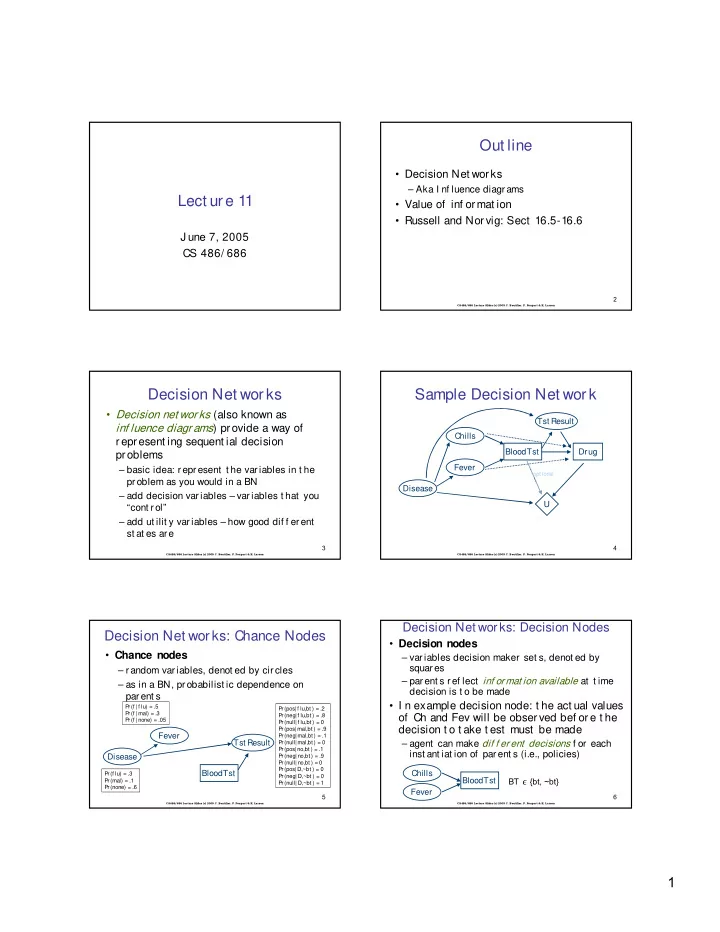

Decision Networks: Value Node

• Value node
  – specifies the utility of a state, denoted by a diamond
  – utility depends only on the state of the parents of the value node
  – generally: only one value node in a decision network
• Utility depends only on disease and drug

| Drug | U(Drug, flu) | U(Drug, mal) | U(Drug, none) |
|---|---|---|---|
| fludrug | 20 | -300 | -5 |
| maldrug | -30 | 10 | -20 |
| no drug | -10 | -285 | 30 |

[Figure: value node U with parents Disease and Drug]

Decision Networks: Assumptions

• Decision nodes are totally ordered
  – decision variables $D_1, D_2, \dots, D_n$
  – decisions are made in sequence
  – e.g., BloodTst (yes, no) decided before Drug (fd, md, no)
• No-forgetting property
  – any information available when decision $D_i$ is made is available when decision $D_j$ is made (for $i < j$)
  – thus all parents of $D_i$ are parents of $D_j$

[Figure: the sample network; dashed arcs ensure the no-forgetting property]

Policies

• Let $\mathrm{Par}(D_i)$ be the parents of decision node $D_i$
  – $\mathrm{Dom}(\mathrm{Par}(D_i))$ is the set of assignments to the parents
• A policy $\delta$ is a set of mappings $\delta_i$, one for each decision node $D_i$
  – $\delta_i : \mathrm{Dom}(\mathrm{Par}(D_i)) \to \mathrm{Dom}(D_i)$
  – $\delta_i$ associates a decision with each parent assignment for $D_i$
• For example, a policy for BT might be:
  – $\delta_{BT}(c, f) = bt$
  – $\delta_{BT}(c, {\sim}f) = {\sim}bt$
  – $\delta_{BT}({\sim}c, f) = bt$
  – $\delta_{BT}({\sim}c, {\sim}f) = {\sim}bt$

[Figure: BloodTst with parents Chills and Fever]

Value of a Policy

• The value of a policy $\delta$ is the expected utility given that decision nodes are executed according to $\delta$
• Given an assignment $x$ to the set $X$ of all chance variables, let $\delta(x)$ denote the assignment to the decision variables dictated by $\delta$
  – e.g., the assignment to $D_1$ is determined by its parents' assignment in $x$
  – e.g., the assignment to $D_2$ is determined by its parents' assignment in $x$ along with whatever was assigned to $D_1$
  – etc.
• Value of $\delta$:

$$EU(\delta) = \sum_{X} P(X, \delta(X))\, U(X, \delta(X))$$

Computing the Best Policy

• We can work backwards as follows
• First compute the optimal policy for Drug (the last decision)
  – for each assignment to the parents (C, F, BT, TR) and for each decision value (D = md, fd, none), compute the expected value of choosing that value of D
  – set the policy choice for each value of the parents to be the value of D that has the maximum value
  – e.g., $\delta_D(c, f, bt, pos) = md$

[Figure: the sample decision network]

Optimal Policies

• An optimal policy is a policy $\delta^*$ such that $EU(\delta^*) \ge EU(\delta)$ for all policies $\delta$
• We can use the dynamic programming principle yet again to avoid enumerating all policies
• We can also use the structure of the decision network to apply variable elimination to aid in the computation
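The policy-value formula can be checked by brute force on the running example. The Python sketch below (all names hypothetical) enumerates every assignment to the chance variables, lets the policy fill in the decisions, and accumulates probability times utility. Assumptions: Chills is dropped because its CPT is not given, which is harmless for the example BT policy since that policy ignores Chills; the Drug policy is made up purely for illustration.

```python
from itertools import product

# Numbers from the chance-node and value-node slides; Chills omitted (no CPT given).
P_DISEASE = {"flu": 0.3, "mal": 0.1, "none": 0.6}   # Pr(Disease)
P_FEVER = {"flu": 0.5, "mal": 0.3, "none": 0.05}    # Pr(f | Disease)
P_POS = {"flu": 0.2, "mal": 0.9, "none": 0.1}       # Pr(pos | Disease, bt)
UTILITY = {                                         # U(Drug, Disease)
    ("fd", "flu"): 20, ("fd", "mal"): -300, ("fd", "none"): -5,
    ("md", "flu"): -30, ("md", "mal"): 10, ("md", "none"): -20,
    ("no", "flu"): -10, ("no", "mal"): -285, ("no", "none"): 30,
}


def p_test_result(tr, disease, bt):
    """Pr(TstResult = tr | Disease, BloodTst = bt)."""
    if not bt:
        return 1.0 if tr == "null" else 0.0
    pos = P_POS[disease]
    return {"pos": pos, "neg": 1.0 - pos, "null": 0.0}[tr]


def policy_value(delta_bt, delta_drug):
    """EU(delta) = sum over chance assignments x of P(x, delta(x)) * U(x, delta(x))."""
    eu = 0.0
    for disease, fever, tr in product(P_DISEASE, (True, False), ("pos", "neg", "null")):
        bt = delta_bt(fever)              # BT is fixed by its parent's value in x
        drug = delta_drug(fever, bt, tr)  # Drug also sees the earlier decision (no-forgetting)
        p_f = P_FEVER[disease] if fever else 1.0 - P_FEVER[disease]
        p = P_DISEASE[disease] * p_f * p_test_result(tr, disease, bt)
        eu += p * UTILITY[(drug, disease)]
    return eu


def delta_bt(fever):
    """The example BT policy from the slides: test exactly when fever is present."""
    return fever


def delta_drug(fever, bt, tr):
    """Hypothetical Drug policy: malaria drug after a positive test, otherwise no drug."""
    return "md" if tr == "pos" else "no"


print(policy_value(delta_bt, delta_drug))  # ≈ -6.285
```

For comparison, never testing and never treating gives .3(-10) + .1(-285) + .6(30) = -13.5, so this (non-optimal) policy is already an improvement.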

Computing the Best Policy

• How do we compute these expected values?
  – suppose we have the assignment ⟨c, f, bt, pos⟩ to the parents of Drug
  – we want to compute the EU of deciding to set Drug = md
  – we can run variable elimination!
• Treat C, F, BT, TR, Dr as evidence
  – this reduces the factors (e.g., U restricted to bt, md depends only on Dis)
  – eliminate the remaining variables (e.g., only Disease is left)
  – we are left with the factor

$$EU(md \mid c, f, bt, pos) = \sum_{Dis} P(Dis \mid c, f, bt, pos, md)\, U(Dis, bt, md)$$

• We now know the EU of doing Dr = md when c, f, bt, pos are true
• We can do the same for fd and no to decide which is best

[Figure: the sample decision network]

Computing the Best Policy

• Next compute the policy for BT given the policy $\delta_D(C, F, BT, TR)$ just determined for Drug
  – since $\delta_D(C, F, BT, TR)$ is fixed, we can treat Drug as a normal random variable with deterministic probabilities
  – i.e., for any instantiation of its parents, the value of Drug is fixed by policy $\delta_D$
  – this means we can solve for the optimal policy for BT just as before
  – the only uninstantiated variables are random variables (once we fix its parents)
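The variable-elimination step for Drug can be sketched in Python as follows, under the same simplification as before: Chills is omitted (its CPT is not given), so the evidence is Fever, BloodTst, and Tst Result, and the function name is hypothetical. Restricting each factor to the evidence leaves a single factor over Disease, which is normalized and summed against the value-node table U(Drug, Disease); since that table does not mention BloodTst, restricting it to bt has no effect here.

```python
# Numbers from the slides; Chills omitted, so the evidence is (fever, bt, tr).
P_DISEASE = {"flu": 0.3, "mal": 0.1, "none": 0.6}   # Pr(Disease)
P_FEVER = {"flu": 0.5, "mal": 0.3, "none": 0.05}    # Pr(f | Disease)
P_POS = {"flu": 0.2, "mal": 0.9, "none": 0.1}       # Pr(pos | Disease, bt)
UTILITY = {                                         # U(Drug, Disease)
    ("fd", "flu"): 20, ("fd", "mal"): -300, ("fd", "none"): -5,
    ("md", "flu"): -30, ("md", "mal"): 10, ("md", "none"): -20,
    ("no", "flu"): -10, ("no", "mal"): -285, ("no", "none"): 30,
}
DRUGS = ("fd", "md", "no")


def drug_expected_utilities(fever, bt, tr):
    """EU(d | fever, bt, tr) for each drug d: restrict factors to the evidence,
    then sum out the only remaining variable, Disease."""
    weight = {}
    for dis in P_DISEASE:
        p_f = P_FEVER[dis] if fever else 1.0 - P_FEVER[dis]
        if bt:
            p_tr = {"pos": P_POS[dis], "neg": 1.0 - P_POS[dis], "null": 0.0}[tr]
        else:
            p_tr = 1.0 if tr == "null" else 0.0
        weight[dis] = P_DISEASE[dis] * p_f * p_tr  # restricted factor over Disease
    z = sum(weight.values())                       # Pr(fever, tr | bt); must be > 0
    return {d: sum(weight[dis] / z * UTILITY[(d, dis)] for dis in P_DISEASE)
            for d in DRUGS}


eus = drug_expected_utilities(True, True, "pos")
best = max(eus, key=eus.get)
print(eus, best)  # fd ≈ -125.25, md ≈ -11.5, no ≈ -131.75; best is "md"
```

With fever, a test, and a positive result, the malaria drug comes out on top, which agrees with the example entry $\delta_D(c, f, bt, pos) = md$ even though Chills is not modeled in this sketch.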
Computing Expected Utilities

• The preceding illustrates a general phenomenon
  – computing expected utilities with BNs is quite easy
  – utility nodes are just factors that can be dealt with using variable elimination

$$EU = \sum_{A,B,C} P(A,B,C)\, U(B,C) = \sum_{A,B,C} P(C \mid B)\, P(B \mid A)\, P(A)\, U(B,C)$$

• Just eliminate variables in the usual way

[Figure: chain A → B → C with value node U whose parents are B and C]

Optimizing Policies: Key Points

• If a decision node D has no decisions that follow it, we can find its policy by instantiating each of its parents and computing the expected utility of each decision for each parent instantiation
  – no-forgetting means that all other decisions are instantiated (they must be parents)
  – it's easy to compute the expected utility using VE
  – the number of computations is quite large: we run expected utility calculations (VE) for each parent instantiation together with each possible decision D might allow
  – policy: choose the max decision for each parent instantiation
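The chain example shows that a utility node is just one more factor for variable elimination. The Python sketch below uses made-up CPTs and utilities over binary A, B, C (none of these numbers come from the slides; all names are hypothetical) and checks that eliminating A and C, and then B, gives the same expected utility as summing the full product.

```python
from itertools import product

# Hypothetical numbers for the chain A -> B -> C with utility U(B, C).
P_A = {0: 0.6, 1: 0.4}                                      # Pr(A)
P_B1 = {0: 0.3, 1: 0.8}                                     # Pr(B=1 | A=a)
P_C1 = {0: 0.1, 1: 0.7}                                     # Pr(C=1 | B=b)
U = {(0, 0): 0.0, (0, 1): 5.0, (1, 0): 2.0, (1, 1): 10.0}   # U(B, C)


def cond(p1, x, parent):
    """Pr(X = x | parent) for a binary X with Pr(X=1 | parent) = p1[parent]."""
    return p1[parent] if x == 1 else 1.0 - p1[parent]


def eu_brute_force():
    """Sum P(A) P(B|A) P(C|B) U(B,C) over all joint assignments."""
    return sum(P_A[a] * cond(P_B1, b, a) * cond(P_C1, c, b) * U[(b, c)]
               for a, b, c in product((0, 1), repeat=3))


def eu_variable_elimination():
    # Eliminate A: f(B) = sum_A P(A) P(B | A).
    f_b = {b: sum(P_A[a] * cond(P_B1, b, a) for a in (0, 1)) for b in (0, 1)}
    # Eliminate C: g(B) = sum_C P(C | B) U(B, C); U is treated as an ordinary factor.
    g_b = {b: sum(cond(P_C1, c, b) * U[(b, c)] for c in (0, 1)) for b in (0, 1)}
    # Eliminate B.
    return sum(f_b[b] * g_b[b] for b in (0, 1))


print(eu_brute_force(), eu_variable_elimination())  # both ≈ 4.05
```

Here Pr(B=1) = .6(.3) + .4(.8) = .5, so the result is .5(.1 × 5) + .5(.3 × 2 + .7 × 10) = 4.05.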
Optimizing Policies: Key Points

• When a decision node D is optimized, it can be treated as a random variable
  – for each instantiation of its parents, we now know what value the decision should take
  – just treat the policy as a new CPT: for a given parent instantiation $x$, D gets $\delta(x)$ with probability 1 (all other decisions get probability zero)
• If we optimize from the last decision to the first, at each point we can optimize a specific decision by (a bunch of) simple VE calculations
  – its successor decisions (already optimized) are just normal nodes in the BNs (with CPTs)

Decision Network Notes

• Decision networks are commonly used by decision analysts to help structure decision problems
• Much work has been put into computationally effective techniques to solve these
  – common trick: replace the decision nodes with random variables at the outset and solve a plain Bayes net (a subtle but useful transformation)
• Complexity is much greater than BN inference
  – we need to solve a number of BN inference problems
  – one BN problem for each setting of the decision node's parents and the decision node's value
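Putting the pieces together, here is a minimal Python sketch of the backward procedure on the running example: optimize Drug for every instantiation of its parents, then plug the resulting policy in as a probability-1 CPT and optimize BloodTst. As in the earlier sketches, Chills is omitted because its CPT is not given (so BT's only parent is Fever), the numbers come from the chance-node and value-node slides, and all names are hypothetical. Plain enumeration over Disease stands in for VE, which is adequate because Disease is the only variable left to sum out at each step.

```python
from itertools import product

P_DISEASE = {"flu": 0.3, "mal": 0.1, "none": 0.6}   # Pr(Disease)
P_FEVER = {"flu": 0.5, "mal": 0.3, "none": 0.05}    # Pr(f | Disease)
P_POS = {"flu": 0.2, "mal": 0.9, "none": 0.1}       # Pr(pos | Disease, bt)
UTILITY = {                                         # U(Drug, Disease)
    ("fd", "flu"): 20, ("fd", "mal"): -300, ("fd", "none"): -5,
    ("md", "flu"): -30, ("md", "mal"): 10, ("md", "none"): -20,
    ("no", "flu"): -10, ("no", "mal"): -285, ("no", "none"): 30,
}
DRUGS = ("fd", "md", "no")
RESULTS = ("pos", "neg", "null")


def weight(dis, fever, bt, tr):
    """Pr(Disease = dis, Fever = fever, TstResult = tr | BloodTst = bt)."""
    p_f = P_FEVER[dis] if fever else 1.0 - P_FEVER[dis]
    if bt:
        p_tr = {"pos": P_POS[dis], "neg": 1.0 - P_POS[dis], "null": 0.0}[tr]
    else:
        p_tr = 1.0 if tr == "null" else 0.0
    return P_DISEASE[dis] * p_f * p_tr


def drug_value(fever, bt, tr, drug):
    """Unnormalized EU: sum over Disease of Pr(Disease, fever, tr | bt) * U(drug, Disease).
    Dividing by Pr(fever, tr | bt) would not change which drug is best."""
    return sum(weight(dis, fever, bt, tr) * UTILITY[(drug, dis)] for dis in P_DISEASE)


def bt_value(fever, bt, delta_drug):
    """Value of a BT choice once Drug is a deterministic node with CPT delta_drug."""
    return sum(drug_value(fever, bt, tr, delta_drug[(fever, bt, tr)]) for tr in RESULTS)


def solve():
    # Step 1: optimize the last decision (Drug) for every instantiation of its parents.
    delta_drug = {}
    for fever, bt, tr in product((True, False), (True, False), RESULTS):
        delta_drug[(fever, bt, tr)] = max(DRUGS, key=lambda d: drug_value(fever, bt, tr, d))
    # Step 2: optimize BT, treating the Drug policy as a probability-1 CPT.
    delta_bt = {}
    for fever in (True, False):
        delta_bt[fever] = max((True, False), key=lambda bt: bt_value(fever, bt, delta_drug))
    # Expected utility of the combined policy: add up the per-Fever contributions.
    total = sum(bt_value(fever, delta_bt[fever], delta_drug) for fever in (True, False))
    return delta_bt, delta_drug, total


delta_bt, delta_drug, total = solve()
print(delta_bt)                         # {True: True, False: True}
print(delta_drug[(True, True, "pos")])  # md
print(total)                            # ≈ 11.46
```

In this sketch the test is chosen for both values of Fever, which is unsurprising because the utility table charges nothing for it. Parent instantiations with probability zero (for example, a test taken but a null result) get an arbitrary drug, since every choice has value 0 there.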
