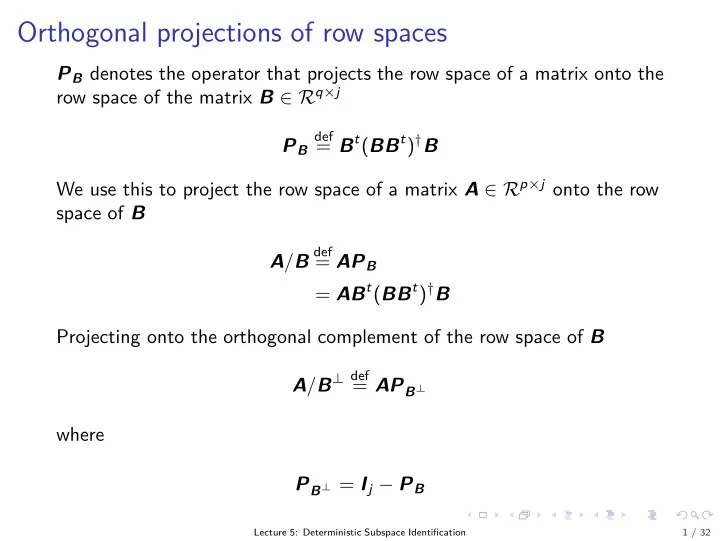

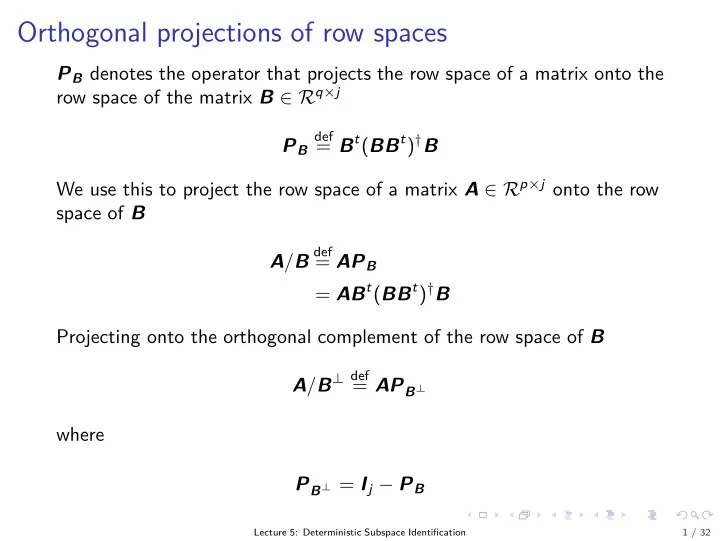

Lecture 5: Deterministic Subspace Identification

Orthogonal projections of row spaces

$P_B$ denotes the operator that projects the row space of a matrix onto the row space of the matrix $B \in \mathbb{R}^{q \times j}$:

$$P_B \overset{\text{def}}{=} B^t (B B^t)^{\dagger} B$$

We use this to project the row space of a matrix $A \in \mathbb{R}^{p \times j}$ onto the row space of $B$:

$$A / B \overset{\text{def}}{=} A P_B = A B^t (B B^t)^{\dagger} B$$

Projecting onto the orthogonal complement of the row space of $B$:

$$A / B^{\perp} \overset{\text{def}}{=} A P_{B^{\perp}}, \quad \text{where } P_{B^{\perp}} = I_j - P_B$$

Orthogonal projections of row spaces

We can decompose $A$ as

$$A = A P_B + A P_{B^{\perp}}$$

Alternatively, we can decompose $A$ as a linear combination of the rows of $B$ and the rows of the orthogonal complement of $B$, using

$$L_B B \overset{\text{def}}{=} A / B, \qquad L_{B^{\perp}} B^{\perp} \overset{\text{def}}{=} A / B^{\perp}$$

and find

$$A = L_B B + L_{B^{\perp}} B^{\perp}$$
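The projections and the decomposition above can be checked numerically. The following is a minimal NumPy sketch, not part of the lecture: the function names `project_rows` and `project_rows_perp` and the random test matrices are assumptions made for illustration.

```python
import numpy as np

def project_rows(A, B):
    """A / B = A B^t (B B^t)^† B: rows of A projected onto the row space of B."""
    return A @ B.T @ np.linalg.pinv(B @ B.T) @ B

def project_rows_perp(A, B):
    """A / B^perp = A (I_j - P_B): rows of A projected onto the complement."""
    j = B.shape[1]
    P_B = B.T @ np.linalg.pinv(B @ B.T) @ B
    return A @ (np.eye(j) - P_B)

# Hypothetical data: p = 2, q = 3, j = 6.
rng = np.random.default_rng(0)
A = rng.standard_normal((2, 6))
B = rng.standard_normal((3, 6))

A_on_B = project_rows(A, B)
A_on_Bperp = project_rows_perp(A, B)

# Coefficient matrix L_B such that L_B B = A / B.
L_B = A @ B.T @ np.linalg.pinv(B @ B.T)

print(np.allclose(A_on_B + A_on_Bperp, A))  # the two parts add up to A
print(np.allclose(A_on_Bperp @ B.T, 0.0))   # the second part is orthogonal to B
print(np.allclose(L_B @ B, A_on_B))
```

The pseudo-inverse is used instead of a plain inverse so that the sketch also tolerates a rank-deficient $B$.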

Oblique projections of row spaces

Decomposition of $A$ as a linear combination of the rows of two non-orthogonal matrices $B$ and $C$ and of the orthogonal complement of $B$ and $C$:

$$A = L_B B + L_C C + L_{B^{\perp}, C^{\perp}} \begin{pmatrix} B \\ C \end{pmatrix}^{\perp}$$

where $L_C C$ is defined as the oblique projection of the row space of $A$ along the row space of $B$ on the row space of $C$:

$$A /_{B}\, C \overset{\text{def}}{=} L_C C$$

(Figure: the row spaces of $B$ and $C$, the matrix $A$, and its oblique projection $A /_{B}\, C$ onto $C$.)

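Oblique projections of row spaces

How to decompose?

1. Project $A$ on the joint row space of $B$ and $C$:

$$A / \begin{pmatrix} C \\ B \end{pmatrix} = A \begin{pmatrix} C^t & B^t \end{pmatrix} \begin{pmatrix} C C^t & C B^t \\ B C^t & B B^t \end{pmatrix}^{\dagger} \begin{pmatrix} C \\ B \end{pmatrix}$$

2. Decompose along the row spaces of $B$ and $C$.

Definition 1 (Oblique projections). The oblique projection of the row space of $A \in \mathbb{R}^{p \times j}$ along the row space of $B \in \mathbb{R}^{q \times j}$ on the row space of $C \in \mathbb{R}^{r \times j}$ is defined as

$$A /_{B}\, C \overset{\text{def}}{=} A \begin{pmatrix} C^t & B^t \end{pmatrix} \left[ \begin{pmatrix} C C^t & C B^t \\ B C^t & B B^t \end{pmatrix}^{\dagger} \right]_{\text{first } r \text{ columns}} C$$

Properties:

$$B /_{B}\, C = 0, \qquad C /_{B}\, C = C$$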
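Definition 1 translates almost literally into NumPy. The sketch below is illustrative only: the function name `oblique_projection` and the random matrices are assumptions, and the two stated properties are checked for a generic case in which the stacked matrix of $C$ and $B$ has full row rank.

```python
import numpy as np

def oblique_projection(A, B, C):
    """A /_B C following Definition 1 (projection along B onto C)."""
    r = C.shape[0]
    CB = np.vstack([C, B])                 # stacked matrix [C; B]
    gram_pinv = np.linalg.pinv(CB @ CB.T)  # [[CC^t, CB^t], [BC^t, BB^t]]^†
    # Keep only the first r columns of the pseudo-inverse, then multiply by C.
    return A @ CB.T @ gram_pinv[:, :r] @ C

# Hypothetical data: p = 2, q = 3, r = 2, j = 8.
rng = np.random.default_rng(0)
A = rng.standard_normal((2, 8))
B = rng.standard_normal((3, 8))
C = rng.standard_normal((2, 8))

print(np.allclose(oblique_projection(B, B, C), 0.0))  # B /_B C = 0
print(np.allclose(oblique_projection(C, B, C), C))    # C /_B C = C
```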

Oblique projections of row spaces

Corollary 2 (Oblique projections). The oblique projection of the row space of $A \in \mathbb{R}^{p \times j}$ along the row space of $B \in \mathbb{R}^{q \times j}$ on the row space of $C \in \mathbb{R}^{r \times j}$ can also be defined as

$$A /_{B}\, C = \left[ A / B^{\perp} \right] \cdot \left[ C / B^{\perp} \right]^{\dagger} \cdot C$$

(Figure: the row spaces of $B$, $C$ and $B^{\perp}$, the matrix $A$, and its oblique projection $A /_{B}\, C$.)
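As a sanity check of Corollary 2, the sketch below computes the oblique projection through the orthogonal complement of $B$ and verifies that the two oblique parts add up to the orthogonal projection on the joint row space of $B$ and $C$. The function name `oblique_projection_corollary` and the random matrices are assumptions for illustration; the check presumes that the row spaces of $B$ and $C$ intersect only in zero.

```python
import numpy as np

def oblique_projection_corollary(A, B, C):
    """A /_B C = [A / B^perp] [C / B^perp]^† C (Corollary 2)."""
    j = B.shape[1]
    P_perp = np.eye(j) - B.T @ np.linalg.pinv(B @ B.T) @ B
    return (A @ P_perp) @ np.linalg.pinv(C @ P_perp) @ C

# Hypothetical data: p = 2, q = 3, r = 2, j = 8.
rng = np.random.default_rng(0)
A = rng.standard_normal((2, 8))
B = rng.standard_normal((3, 8))
C = rng.standard_normal((2, 8))

along_B_on_C = oblique_projection_corollary(A, B, C)  # L_C C
along_C_on_B = oblique_projection_corollary(A, C, B)  # L_B B

# Orthogonal projection of A on the joint row space of C and B.
M = np.vstack([C, B])
joint = A @ M.T @ np.linalg.pinv(M @ M.T) @ M

print(np.allclose(along_B_on_C + along_C_on_B, joint))
```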

Principal angles and directions

The principal angles between two subspaces generalize the angle between two vectors.

(Figure: two subspaces with principal directions $a_1 = b_1$, so that $\theta_1 = 0$, and principal directions $a_2$, $b_2$ separated by the angle $\theta_2$.)

Principal angles and directions

Definition 3 (Principal angles and directions). The principal angles $\theta_1 \le \theta_2 \le \dots \le \pi/2$ between the row spaces of two matrices $A \in \mathbb{R}^{p \times j}$ and $B \in \mathbb{R}^{q \times j}$, and the corresponding principal directions $a_i \in \text{row space } A$ and $b_i \in \text{row space } B$, are defined recursively as

$$\cos \theta_k = \max_{a \,\in\, \text{row space } A,\; b \,\in\, \text{row space } B} a^t b = a_k^t b_k$$

subject to $\| a \| = \| b \| = 1$ and, for $k > 1$, $a^t a_i = 0$ for $i = 1, \dots, k-1$ and $b^t b_i = 0$ for $i = 1, \dots, k-1$.

Principal angles and directions

Given two matrices $A \in \mathbb{R}^{p \times j}$ and $B \in \mathbb{R}^{q \times j}$ and the singular value decomposition

$$A^t (A A^t)^{\dagger} A \, B^t (B B^t)^{\dagger} B = U S V^t$$

the principal directions between the row spaces of $A$ and $B$ are equal to the rows of $U^t$ and the rows of $V^t$. The cosines of the principal angles between the row spaces of $A$ and $B$ are equal to the singular values (the diagonal of $S$). The principal directions and angles between the row spaces of $A$ and $B$ are denoted as:

$$[A \sphericalangle B] \overset{\text{def}}{=} U^t \quad \text{(principal directions in the row space of } A\text{)}$$

$$[A \sphericalangle B] \overset{\text{def}}{=} V^t \quad \text{(principal directions in the row space of } B\text{)}$$

$$[A \sphericalangle B] \overset{\text{def}}{=} S \quad \text{(cosines of the principal angles)}$$

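Principal angles and directions

The principal angles and directions between the row spaces of two matrices $A \in \mathbb{R}^{p \times j}$ and $B \in \mathbb{R}^{q \times j}$ can also be found through the singular value decomposition

$$(A A^t)^{-1/2} \, (A B^t) \, (B B^t)^{-1/2} = U S V^t$$

as:

$$[A \sphericalangle B] \overset{\text{def}}{=} U^t (A A^t)^{-1/2} A \quad \text{(principal directions in the row space of } A\text{)}$$

$$[A \sphericalangle B] \overset{\text{def}}{=} V^t (B B^t)^{-1/2} B \quad \text{(principal directions in the row space of } B\text{)}$$

$$[A \sphericalangle B] \overset{\text{def}}{=} S \quad \text{(cosines of the principal angles)}$$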
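The second SVD formula can be tried on a small example where the angles are known in advance. This is a minimal sketch under the assumption that $A$ and $B$ have full row rank, so that the inverse square roots exist; the helper names `inv_sqrt_spd` and `principal_angles` and the test subspaces are illustrative choices, not part of the lecture.

```python
import numpy as np

def inv_sqrt_spd(M):
    """Inverse square root of a symmetric positive definite matrix."""
    w, Q = np.linalg.eigh(M)
    return Q @ np.diag(1.0 / np.sqrt(w)) @ Q.T

def principal_angles(A, B):
    """Principal directions and angles between the row spaces of A and B."""
    Ra = inv_sqrt_spd(A @ A.T)
    Rb = inv_sqrt_spd(B @ B.T)
    U, s, Vt = np.linalg.svd(Ra @ A @ B.T @ Rb)
    dirs_A = U.T @ Ra @ A   # rows: principal directions in row space of A
    dirs_B = Vt @ Rb @ B    # rows: principal directions in row space of B
    angles = np.arccos(np.clip(s, 0.0, 1.0))  # clip guards against rounding
    return dirs_A, dirs_B, angles

# Two planes in R^3 sharing the first axis and tilted by theta around it.
theta = 0.3
A = np.array([[1.0, 0.0, 0.0],
              [0.0, 1.0, 0.0]])
B = np.array([[1.0, 0.0, 0.0],
              [0.0, np.cos(theta), np.sin(theta)]])

dirs_A, dirs_B, angles = principal_angles(A, B)
print(angles)  # approximately 0 and theta
```

The first angle is zero because both planes contain the first coordinate axis, which mirrors the situation $a_1 = b_1$, $\theta_1 = 0$.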

Part I: Deterministic Subspace Identification

Deterministic identification problem

Given: $s$ measurements of the input $u_k \in \mathbb{R}^m$ and the output $y_k \in \mathbb{R}^l$ generated by the unknown deterministic system of order $n$:

$$x^d_{k+1} = A x^d_k + B u_k$$

$$y_k = C x^d_k + D u_k$$

Determine:

- The order $n$ of the unknown system.
- The system matrices $A \in \mathbb{R}^{n \times n}$, $B \in \mathbb{R}^{n \times m}$, $C \in \mathbb{R}^{l \times n}$, $D \in \mathbb{R}^{l \times m}$ (up to a similarity transformation).

Block Hankel matrices

$$
U_{0|2i-1} \overset{\text{def}}{=}
\left( \begin{array}{ccccc}
u_0 & u_1 & u_2 & \cdots & u_{j-1} \\
u_1 & u_2 & u_3 & \cdots & u_j \\
\vdots & \vdots & \vdots & & \vdots \\
u_{i-1} & u_i & u_{i+1} & \cdots & u_{i+j-2} \\
\hline
u_i & u_{i+1} & u_{i+2} & \cdots & u_{i+j-1} \\
u_{i+1} & u_{i+2} & u_{i+3} & \cdots & u_{i+j} \\
\vdots & \vdots & \vdots & & \vdots \\
u_{2i-1} & u_{2i} & u_{2i+1} & \cdots & u_{2i+j-2}
\end{array} \right)
= \begin{pmatrix} U_{0|i-1} \\ U_{i|2i-1} \end{pmatrix}
= \begin{pmatrix} U_p \\ U_f \end{pmatrix}
$$

Block Hankel matrices

Block Hankel matrix dimensions:

- For $i$ block rows in each half and $u_k \in \mathbb{R}^m$, the number of rows in $U_{0|2i-1}$ equals $2mi$.
- For $s$ measurements, the number of columns $j$ is typically equal to $s - 2i + 1$, so that every sample is used.

Subscript $p$ stands for "past" and subscript $f$ stands for "future". The matrices $U_p$ (the past inputs) and $U_f$ (the future inputs) are defined by splitting $U_{0|2i-1}$ into two equal parts of $i$ block rows. Corresponding columns of $U_p$ and $U_f$ have no elements in common, hence the distinction between past and future.
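The construction and the dimension count can be sketched in a few lines of NumPy. The helper name `block_hankel`, the data layout (one sample per row of `u`) and the sizes below are assumptions made for illustration only.

```python
import numpy as np

def block_hankel(u, num_block_rows, j):
    """Block Hankel matrix whose block (r, c) is the sample u[r + c].

    u has shape (s, m): one input sample of dimension m per row.
    """
    u = np.asarray(u)
    return np.vstack([u[r:r + j].T for r in range(num_block_rows)])

# Hypothetical sizes: s = 20 samples, m = 2 inputs, i = 3 block rows per half.
s, m, i = 20, 2, 3
j = s - 2 * i + 1                      # number of columns, here 15
u = np.arange(s * m, dtype=float).reshape(s, m)

U = block_hankel(u, 2 * i, j)          # U_{0|2i-1}, shape (2 m i, j)
U_p, U_f = U[:m * i], U[m * i:]        # past and future inputs

# Shifted boundary: one block row moves from the future to the past.
U_p_plus, U_f_minus = U[:m * (i + 1)], U[m * (i + 1):]

print(U.shape)                         # (12, 15)
```

With $j = s - 2i + 1$ the bottom-right block of the matrix is the last sample $u_{s-1}$, which is why this choice of $j$ uses all the data.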

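Block Hankel matrices

Distinction between past and future? The boundary can be shifted one block row down:

$$
U_{0|2i-1} \overset{\text{def}}{=}
\left( \begin{array}{ccccc}
u_0 & u_1 & u_2 & \cdots & u_{j-1} \\
u_1 & u_2 & u_3 & \cdots & u_j \\
\vdots & \vdots & \vdots & & \vdots \\
u_{i-1} & u_i & u_{i+1} & \cdots & u_{i+j-2} \\
u_i & u_{i+1} & u_{i+2} & \cdots & u_{i+j-1} \\
\hline
u_{i+1} & u_{i+2} & u_{i+3} & \cdots & u_{i+j} \\
\vdots & \vdots & \vdots & & \vdots \\
u_{2i-1} & u_{2i} & u_{2i+1} & \cdots & u_{2i+j-2}
\end{array} \right)
= \begin{pmatrix} U_{0|i} \\ U_{i+1|2i-1} \end{pmatrix}
= \begin{pmatrix} U_p^{+} \\ U_f^{-} \end{pmatrix}
$$

Note that $U_p^{+}$ has $i + 1$ block rows, whereas $U_f^{-}$ only has $i - 1$ block rows.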

Input-output data matrices

$$W_{0|i-1} \overset{\text{def}}{=} \begin{pmatrix} U_{0|i-1} \\ Y_{0|i-1} \end{pmatrix} = \begin{pmatrix} U_p \\ Y_p \end{pmatrix} = W_p$$

where $Y_p$ is defined in a similar way to $U_p$. Or, for a shifted boundary:

$$W_p^{+} = \begin{pmatrix} U_p^{+} \\ Y_p^{+} \end{pmatrix}$$

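Notation

We denote the state sequence matrix by

$$X_i^d \overset{\text{def}}{=} \begin{pmatrix} x_i^d & x_{i+1}^d & \cdots & x_{i+j-2}^d & x_{i+j-1}^d \end{pmatrix} \in \mathbb{R}^{n \times j}$$

The observability matrix:

$$\Gamma_i \overset{\text{def}}{=} \begin{pmatrix} C \\ CA \\ CA^2 \\ \vdots \\ CA^{i-1} \end{pmatrix} \in \mathbb{R}^{li \times n}$$

The reversed controllability matrix:

$$\Delta_i^d \overset{\text{def}}{=} \begin{pmatrix} A^{i-1} B & A^{i-2} B & \cdots & AB & B \end{pmatrix} \in \mathbb{R}^{n \times mi}$$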

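Notation

Block Toeplitz matrix with Markov parameters:

$$
H_i^d \overset{\text{def}}{=}
\begin{pmatrix}
D & 0 & 0 & \cdots & 0 \\
CB & D & 0 & \cdots & 0 \\
CAB & CB & D & \cdots & 0 \\
\vdots & \vdots & \vdots & \ddots & \vdots \\
CA^{i-2} B & CA^{i-3} B & CA^{i-4} B & \cdots & D
\end{pmatrix}
\in \mathbb{R}^{li \times mi}
$$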

Matrix input-output equations

$$Y_p = \Gamma_i X_p^d + H_i^d U_p$$

$$Y_f = \Gamma_i X_f^d + H_i^d U_f$$

$$X_f^d = A^i X_p^d + \Delta_i^d U_p$$

(Figure: $Y_f$ shown as the sum of the two components $\Gamma_i X_f^d$ and $H_i^d U_f$.)
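The three matrix input-output equations can be verified on simulated data. The sketch below is illustrative only: the second-order system, the helper names and the sizes are assumptions, and the past and future state sequences are taken as the states starting at time 0 and at time $i$ respectively.

```python
import numpy as np

def block_hankel(z, first, num_block_rows, j):
    """Block Hankel matrix with block (r, c) equal to z[first + r + c]."""
    return np.vstack([z[first + r:first + r + j].T
                      for r in range(num_block_rows)])

def extended_observability(A, C, i):
    """Gamma_i = [C; CA; ...; CA^(i-1)]."""
    return np.vstack([C @ np.linalg.matrix_power(A, k) for k in range(i)])

def reversed_controllability(A, B, i):
    """Delta_i = [A^(i-1) B, ..., AB, B]."""
    return np.hstack([np.linalg.matrix_power(A, i - 1 - k) @ B
                      for k in range(i)])

def markov_toeplitz(A, B, C, D, i):
    """Lower block triangular Toeplitz matrix H_i of Markov parameters."""
    l, m = D.shape
    markov = [D] + [C @ np.linalg.matrix_power(A, k) @ B for k in range(i - 1)]
    H = np.zeros((l * i, m * i))
    for r in range(i):
        for c in range(r + 1):
            H[r * l:(r + 1) * l, c * m:(c + 1) * m] = markov[r - c]
    return H

# Hypothetical system with n = 2 states, m = 1 input, l = 1 output.
A = np.array([[0.7, 0.2], [-0.1, 0.5]])
B = np.array([[1.0], [0.5]])
C = np.array([[1.0, 0.0]])
D = np.array([[0.1]])
n, m, l = 2, 1, 1
s, i = 40, 3
j = s - 2 * i + 1

rng = np.random.default_rng(1)
u = rng.standard_normal((s, m))
x = np.zeros((s + 1, n))
y = np.zeros((s, l))
for k in range(s):                      # simulate the state-space model
    y[k] = C @ x[k] + D @ u[k]
    x[k + 1] = A @ x[k] + B @ u[k]

U_p, U_f = block_hankel(u, 0, i, j), block_hankel(u, i, i, j)
Y_p, Y_f = block_hankel(y, 0, i, j), block_hankel(y, i, i, j)
X_p, X_f = x[0:j].T, x[i:i + j].T       # past and future state sequences

Gamma = extended_observability(A, C, i)
Delta = reversed_controllability(A, B, i)
H = markov_toeplitz(A, B, C, D, i)

print(np.allclose(Y_p, Gamma @ X_p + H @ U_p))
print(np.allclose(Y_f, Gamma @ X_f + H @ U_f))
print(np.allclose(X_f, np.linalg.matrix_power(A, i) @ X_p + Delta @ U_p))
```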

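Subspace Theorem for Deterministic Identification

Let

- $u_k$ be persistently exciting of order $2i$ (i.e. $\operatorname{rank} U_{0|2i-1} = 2mi$),
- $\mathcal{R}\big( (U_f)^t \big) \cap \mathcal{R}\big( (X_p^d)^t \big) = \{ 0 \}$,
- $W_1 \in \mathbb{R}^{li \times li}$ and $W_2 \in \mathbb{R}^{j \times j}$ be weighting matrices, with $\operatorname{rank}(W_1) = li$ and $\operatorname{rank}(W_p) = \operatorname{rank}(W_p W_2)$.

Let

$$O_i \overset{\text{def}}{=} Y_f /_{U_f} W_p$$

and

$$W_1 O_i W_2 = \begin{pmatrix} U_1 & U_2 \end{pmatrix} \begin{pmatrix} S_1 & 0 \\ 0 & 0 \end{pmatrix} \begin{pmatrix} V_1^t \\ V_2^t \end{pmatrix} = U_1 S_1 V_1^t$$

then, for some nonsingular similarity transformation $T \in \mathbb{R}^{n \times n}$,

$$O_i = \Gamma_i X_f^d$$

$$n = \#(\sigma_i \neq 0)$$

$$\Gamma_i = W_1^{-1} U_1 S_1^{1/2} T$$

$$X_f^d W_2 = T^{-1} S_1^{1/2} V_1^t$$

$$X_f^d = \Gamma_i^{\dagger} O_i$$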

Subspace Theorem for Deterministic Identification

(Figure: oblique projection of $Y_f$, with labels $O_i = \Gamma_i X_f^d$, $Y_f$, $H_i^d U_f$ and $W_p$.)

$$\operatorname{rank}\big( Y_f /_{U_f} W_p \big) = n$$

$$\text{row space}\big( Y_f /_{U_f} W_p \big) = \text{row space}\big( X_f^d \big)$$

$$\text{column space}\big( Y_f /_{U_f} W_p \big) = \text{column space}\big( \Gamma_i \big)$$
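The statements of the theorem can be illustrated on noise-free simulated data. The following is a minimal sketch, not the lecture's algorithm: the system matrices, sizes and helper names are hypothetical, the weights are taken as $W_1 = I$ and $W_2 = I$, the similarity transformation as $T = I$, and the oblique projection is computed with the orthogonal-complement formula and an explicit pseudo-inverse tolerance because $W_p$ is rank deficient.

```python
import numpy as np

def block_hankel(z, first, num_block_rows, j):
    """Block Hankel matrix with block (r, c) equal to z[first + r + c]."""
    return np.vstack([z[first + r:first + r + j].T
                      for r in range(num_block_rows)])

def oblique_projection(A, B, C, rcond=1e-10):
    """A /_B C = [A / B^perp] [C / B^perp]^† C."""
    j = B.shape[1]
    P_perp = np.eye(j) - B.T @ np.linalg.pinv(B @ B.T, rcond=rcond) @ B
    return (A @ P_perp) @ np.linalg.pinv(C @ P_perp, rcond=rcond) @ C

# Hypothetical "unknown" system of order n = 2 (used only to generate data).
A = np.array([[0.7, 0.2], [-0.1, 0.5]])
B = np.array([[1.0], [0.5]])
C = np.array([[1.0, 0.0]])
D = np.array([[0.1]])
m, l = 1, 1
s, i = 60, 4
j = s - 2 * i + 1

rng = np.random.default_rng(2)
u = rng.standard_normal((s, m))          # random input as excitation
x = np.zeros((s + 1, 2))
y = np.zeros((s, l))
for k in range(s):
    y[k] = C @ x[k] + D @ u[k]
    x[k + 1] = A @ x[k] + B @ u[k]

U_p, U_f = block_hankel(u, 0, i, j), block_hankel(u, i, i, j)
Y_p, Y_f = block_hankel(y, 0, i, j), block_hankel(y, i, i, j)
W_p = np.vstack([U_p, Y_p])

# O_i = Y_f /_{U_f} W_p, then the SVD with W_1 = I and W_2 = I.
O_i = oblique_projection(Y_f, U_f, W_p)
U, sv, Vt = np.linalg.svd(O_i, full_matrices=False)

n_est = int(np.sum(sv > 1e-8 * sv[0]))   # number of nonzero singular values
U1, S1 = U[:, :n_est], sv[:n_est]
Gamma_est = U1 * np.sqrt(S1)             # Gamma_i = U_1 S_1^(1/2), T = I
X_f_est = np.linalg.pinv(Gamma_est) @ O_i

print(n_est)
```

The estimated `Gamma_est` and `X_f_est` agree with the true observability matrix and state sequence only up to a similarity transformation, so a comparison should be made on the product `Gamma_est @ X_f_est` or on the spanned subspaces.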

Rank deficiency & LTI systems

If $u_k$ is persistently exciting of order $i$, i.e.

$$\operatorname{rank} U_{0|i-1} = mi$$

then

$$\operatorname{rank} W_{0|i-1} = \operatorname{rank} \begin{pmatrix} U_{0|i-1} \\ Y_{0|i-1} \end{pmatrix} = mi + n$$

The proof follows from the matrix input-output equation:

$$\begin{pmatrix} I_{li} & -H_i^d \\ 0 & I_{mi} \end{pmatrix} \begin{pmatrix} Y_p \\ U_p \end{pmatrix} = \begin{pmatrix} \Gamma_i & 0 \\ 0 & I_{mi} \end{pmatrix} \begin{pmatrix} X_p^d \\ U_p \end{pmatrix}$$
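The rank count can be spelled out in one chain of equalities. This is a sketch of the argument under two assumptions that are not stated on the slide: $\Gamma_i$ has full column rank $n$ (the system is observable and $i \ge n$), and the stacked matrix of $X_p^d$ and $U_p$ has full row rank $n + mi$.

```latex
\begin{aligned}
\operatorname{rank} W_{0|i-1}
  &= \operatorname{rank} \begin{pmatrix} Y_p \\ U_p \end{pmatrix}
     && \text{(reordering block rows keeps the rank)} \\
  &= \operatorname{rank} \left[
     \begin{pmatrix} I_{li} & -H_i^d \\ 0 & I_{mi} \end{pmatrix}
     \begin{pmatrix} Y_p \\ U_p \end{pmatrix} \right]
     && \text{(the left factor is nonsingular)} \\
  &= \operatorname{rank} \left[
     \begin{pmatrix} \Gamma_i & 0 \\ 0 & I_{mi} \end{pmatrix}
     \begin{pmatrix} X_p^d \\ U_p \end{pmatrix} \right]
     && \text{(matrix input-output equation)} \\
  &= n + mi
     && \text{(full column rank times full row rank)}
\end{aligned}
```

Since $W_{0|i-1}$ has $(m + l) i$ rows, it is rank deficient whenever $li > n$.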
